Chapter 1. Security Fundamentals

This chapter introduces the fundamental software security concepts that you need to understand before continuing to later chapters. We explain why there is a need for security, and the roles that are important to the development and operation of software security policies. We also discuss the goals of software security and introduce some important concepts that you should understand as you develop your own security programming skills.

The Need for Security

Only a few years ago, software applications tended to be isolated. Users of these applications were required to present themselves in a known location (for example, a bank branch or office block) that was protected by physical barriers to access such as locks, surveillance cameras, and security guards. Such systems suffered fewer attacks than today's systems do, in part because gaining access to such a location presented a barrier that many found insurmountable.

The increased connectivity and prevalence of networked applications have removed the insurmountable barrier presented by physical security, and it is not only the networked applications themselves that are at risk. Increasingly, software systems control access to valuable physical resources (for example, banking software can be used to credit or debit a customer account). Subverting or compromising the software system may be the simplest way to gain access to the physical resource; for example, it may be easier to break into the banking application and create fictitious transactions than it is to crack open the bank vault.

Today, a talented 15-year-old Italian schoolboy, who would be unable to get past a company security guard, might, for personal amusement, be able to convince a networked application that he is a 37-year-old trusted employee from Alabama. More serious, however, is the increase in software hacking for criminal reasons—either to steal intellectual property or, more commonly, to steal information that can be sold to other criminals, such as lists of credit card numbers.

In short, the world has become more hostile towards software. In light of recent changes to social and political attitudes to security, it should be no surprise that the public has an increased expectation that software will be secure. The kinds of security that we discuss in this book can provide some protection against the increased frequency and sophistication of attempts to subvert applications. However, security has also become a tool to promote the sale of software, and claims of “unbreakable” security are now commonplace. The effective use of software security has fallen behind the ideal that is portrayed by marketing departments. Another purpose of this book is to close the gap between the perception and the reality, and to demonstrate how you can increase the security of your applications through the careful application of tried-and-tested technologies.

Roles in Security

In a normal software development project, there are many people who influence software security. People often look at software security from different perspectives and hope to gain different results from its implementation; these are often at odds with the goals of others. In this section, we describe the most common roles, and explain the motivations and goals of those who hold them. The content of this book is aimed at the technical reader, but it is important that you appreciate the complete set of influences that shape the need for and implementation of software security. See Chapter 4 for a more detailed examination of some of these roles and the way they influence the life cycle of an application.

The Business Sponsor

The business sponsor is responsible for commissioning a software development project, and usually owns the problem that the application is intended to solve. The role of the business sponsor, and his expectations of software security, vary depending on the nature of the business and the purpose of the software.

The business sponsor typically lacks technical expertise, but controls the development budget and may support the implementation of software security for the following reasons:

- Security is a known requirement of the system's users.

- Legislation dictates that the software must implement certain security measures.

- Security features are necessary to compete with other products and look good on marketing material.

Lacking formal requirements, the business sponsor will often have opinions to offer on the importance and implementation of software security. These opinions may or may not be in line with the real requirements of the project.

Business sponsors are often the biggest source of tension on a project when it comes to the correct application of security. As you will see throughout this book, software security can be applied only after a careful assessment of the application requirements; however, the business sponsor often wants to bring the application into production as quickly as possible, and this creates a tension between the careful application of a planned security policy and the business requirement that the application ship quickly.

The Architect

The project architect is responsible for the overall design of the application, ensuring that the planned development will meet the business and technical goals that have been specified by the business sponsor. The architect is ideally placed to assess the security needs of the application and to formulate the security policy that will be implemented by the programmers.

The Programmer

The programmer is responsible for implementing the application design produced by the architect and for meeting the software security goals specified in the design. The programmer must have a firm understanding of the security features provided by the development platform in use, and must be trusted to implement the security policy completely and without modification.

The Security Tester

The security tester does not perform the same role as an ordinary application tester. A normal tester creates test scenarios that ensure that the planned functionality works as expected, by simulating the actions of a user. By contrast, the security tester simulates the actions of a hacker, in order to uncover behaviors that would circumvent the software security measures. Security testing is an underrated and underemployed activity, but is vital in validating the security measures designed by the architect and implemented by the programmer.

The System Administrator

The system administrator is responsible for installing, configuring, and managing the application; these tasks require a good understanding of general security issues, and an appreciation of the security features provided by the development platform and the application itself.

One of the most important aspects of system administration is application monitoring. Well-designed applications provide system administrators with information about potential security breaches, and it is the responsibility of the system administrator to monitor for such information and to formulate a response plan in the event that the security of an application is subverted.
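As a rough illustration of the kind of information an application might expose for monitoring, the following Python sketch logs every login attempt and flags an account once repeated failures reach a threshold. The logger name, the `record_login` function, and the threshold of three are all assumptions made for this example; they are not taken from any particular platform.

```python
import logging
from collections import Counter

# Hypothetical logger name; an administrator would route this to a monitored sink.
logger = logging.getLogger("security")

FAILED_LOGIN_THRESHOLD = 3  # assumed value, chosen only for illustration
_failures = Counter()       # consecutive failed logins per user


def record_login(user, succeeded):
    """Log a login attempt and return True when the user's consecutive
    failures have reached the threshold and deserve attention."""
    if succeeded:
        _failures.pop(user, None)  # a success resets the failure count
        logger.info("login ok user=%s", user)
        return False
    _failures[user] += 1
    logger.warning("login failed user=%s count=%d", user, _failures[user])
    return _failures[user] >= FAILED_LOGIN_THRESHOLD
```

What happens when the function returns `True` (locking the account, paging an administrator, and so on) belongs to the response plan, which this sketch deliberately leaves out.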

The User

The user is the final consumer of the functionality provided by the application, and is often required to interact with its software security measures—for example, by entering a username and password to gain access to its functionality.

The users of an application create their own tensions against the security policy; their expectations that the software system will protect them are high, but their willingness to be constrained by intrusive security measures is limited. For example, retail customers expect that a software system will conceal their credit card numbers from unauthorized third parties and protect their accounts from unauthorized changes. However, the same users will resist taking any responsibility for their own security, for example by remembering and specifying a PIN code when they purchase goods.

Successful security policies take into account the users’ attitudes, and do not force them to accept security demands that they cannot or will not adhere to. Unsuccessful security policies do not take into account the needs of the user—for example, requiring users to remember long and difficult passwords that are frequently changed. In such circumstances, users will simply write the password down on a piece of paper and thereby negate all of the effort made during the development process.

The Hacker/Cracker

The final role is the cracker, more popularly known as a hacker. The hacker attempts to circumvent or subvert software security for financial gain or perhaps to rise to a perceived intellectual challenge. The hacker is the person whom security measures are meant to foil, but the label does not accurately describe the range of people who will attack software security systems. Throughout this book, we detail a number of specific security systems and explain the type of attack against which each is intended to protect.

Understanding Software Security

There are two kinds of assets that software security sets out to protect: restricted resources and secrets. In this section, we provide an overview of these important categories, which we build on throughout the rest of the book.

Restricted Resources

A restricted resource is any object, feature, or function of your application (or of any software or hardware that your application depends on) that you do not wish to be used or accessed by unauthorized people. This is a very broad definition, but casting our net this wide allows us to demonstrate the common solution to a wide range of closely related issues; the following list describes some restricted resources that you may encounter:

- Disk files

The most commonly encountered restricted resource is the disk file. For example, by default, the Windows operating system allows users to access their own files, but not the files of other users or disk files used by the operating system itself. Users are restricted from accessing files that they have not created.

- Software functions

One of the most familiar restricted resources for you is the software function that should not be available to all users of the application or service. For example, the accounts clerk in a bank should not be able to authorize mortgage loans; such activities are restricted to qualified loan officers.

- Hardware resources

Software security is often used to restrict access to important hardware resources, such as a high-quality color printer. Ordinary users are restricted to printing their documents in monochrome, while the sales staff prints customer presentations in color.

- External services

Increasingly, software security restrictions are applied to external services that have no tangible physical attributes, but affect a company either by incurring a direct financial cost or by distracting staff from their duties. Examples of such services are Internet access, personal emails, and international telephone calls. Access to these services is restricted to those who need them to do their jobs.
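To make the idea of a restricted software function concrete, here is a minimal Python sketch of the bank example from the list above. The `ROLES` table, the `requires_role` decorator, and the `approve_mortgage` function are hypothetical names invented for this illustration; a real application would rely on the security features of its platform rather than a hand-rolled check.

```python
from functools import wraps

# Hypothetical role assignments; a real system would load these from a
# directory service or database.
ROLES = {
    "alice": {"loan_officer"},
    "bob": {"accounts_clerk"},
}


class AccessDenied(Exception):
    """Raised when a user lacks the role that a function requires."""


def requires_role(role):
    """Restrict the decorated function to users who hold `role`."""
    def decorator(func):
        @wraps(func)
        def wrapper(user, *args, **kwargs):
            if role not in ROLES.get(user, set()):
                raise AccessDenied(f"{user} may not call {func.__name__}")
            return func(user, *args, **kwargs)
        return wrapper
    return decorator


@requires_role("loan_officer")
def approve_mortgage(user, customer, amount):
    # The restricted function itself; only loan officers reach this line.
    return f"{user} approved {amount} for {customer}"
```

Calling `approve_mortgage("alice", "carol", 100000)` succeeds, while the same call made as `"bob"` raises `AccessDenied`, because the accounts clerk does not hold the `loan_officer` role.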

Trust

In terms of software security, when we trust someone, we grant that person access to one or more restricted resources; for example, we trust our bank loan officer to approve mortgage loans in a responsible way and grant the officer access to the software features for loan approval.

The first step in managing trust is to establish identity, which is the means that a system uses to uniquely differentiate between users. The complexity of the identities used by an application is influenced by the number of users that need identification; if there are small numbers of users, then identities as simple as “Alice” and “Bob” may be sufficient to uniquely identify each person. By contrast, providing unique identification for thousands or millions of people may require more complex identities—for example, social security numbers.

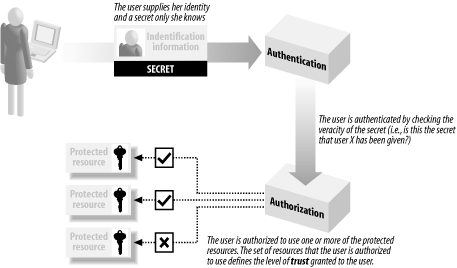

You use authentication to ensure that you have correctly established the identity of a user. If you wish to restrict access to a valuable resource, you cannot take a statement of identity by a user at face value. Consider the ramifications if your bank believed a claim by you that you were “Bill Gates” and granted you access to his deposits. The most common form of authentication requires a user to provide a username and a password to access an application; the username represents the stated user identity and the password is a secret known only to the user (see the next section for information about secrets). We expect that others who might wish to assume this identity will not know the secret password and therefore won’t pass the authentication process.

Once you have authenticated and identified a user, you authorize the user to access one or more restricted resources. The resources to which a user is granted access depend on the level of trust granted by the application, and the level of trust is typically determined by the nature of the tasks that the user will undertake. For example, you might grant a user access to a disk file containing details of a new product because that user is employed as a product development engineer and needs the contents of that file to discharge her duties.

The process of establishing and authenticating an identity, and authorizing access to resources based on that identity is illustrated in Figure 1-1. This process is at the heart of software security, and many applications and systems that may appear to work in radically different ways implement this common approach.
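The sequence described above (claim an identity, authenticate it, then authorize access) can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the mechanism that .NET provides: the in-memory `USERS` table, the sample passwords, the file name, and the PBKDF2 iteration count are all invented for the example.

```python
import hashlib
import hmac
import os


def hash_password(password, salt):
    # PBKDF2-HMAC-SHA256 from the standard library; the iteration count
    # is an assumed value chosen for illustration.
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, 100_000)


def make_user(password, resources):
    salt = os.urandom(16)
    return {
        "salt": salt,
        "hash": hash_password(password, salt),
        "resources": set(resources),
    }


# Hypothetical user store keyed by identity.
USERS = {
    "alice": make_user("correct horse", {"product-specs.doc"}),
    "bob": make_user("battery staple", set()),
}


def authenticate(identity, password):
    """Return True only if the claimed identity exists and the password matches."""
    user = USERS.get(identity)
    if user is None:
        return False
    candidate = hash_password(password, user["salt"])
    return hmac.compare_digest(candidate, user["hash"])


def authorize(identity, resource):
    """Return True if an already authenticated identity may use the resource."""
    return resource in USERS[identity]["resources"]


def access(identity, password, resource):
    """Authenticate first; consult the authorization rules only on success."""
    return authenticate(identity, password) and authorize(identity, resource)
```

Here `access("alice", "correct horse", "product-specs.doc")` returns `True`, whereas a wrong password, an unknown identity, or a user without rights to the file (such as `"bob"`) all yield `False`.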

See Chapter 5 for an introduction to the way in which .NET defines and supports restricted resources, and Chapter 6 through Chapter 10 for detailed coverage on how to apply .NET security to your application projects.

Secrets

A secret is any data created or processed by your application that you do not wish to be publicly known. Examples of secrets include the credit card numbers of your clients, and passwords your users enter to authenticate their identities for your application. Secrets are the counterpart to restricted resources. While a restricted resource often represents the ability to perform an action (such as approving a mortgage or printing a color document), a secret typically embodies the data the resource will process (such as the financial details of a customer or the secret marketing plan).

The lifetime of secrets

It is important to consider each type of secret that you work with and assess how long it needs to remain a secret; for example, a customer credit card number needs to be protected only until the card expires, after which, the information you hold on file cannot be used to purchase goods. By contrast, some secrets must be protected forever, such as medical histories.

One mistake that is frequently made is considering the lifetime of a secret in isolation from the real world. For example, you may choose to protect a user’s secret password only until it is changed, perhaps as part of a process where a password is valid for a fixed period. The problem that this presents is that users will often change a password back to its original value as soon as they can, which means that a hacker could access your application if your list of expired passwords were allowed to become public. When you assess the lifetime of a secret, consider that the data itself may have value if it persists outside the application.
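One way to act on this observation is to treat expired passwords as secrets in their own right: keep only salted hashes of them, and refuse a change that would bring an old password back. The Python sketch below shows the idea; the `PasswordHistory` class and its parameters are hypothetical, and the approach is offered as one possible response rather than a prescribed one.

```python
import hashlib
import hmac
import os


class PasswordHistory:
    """Keep salted hashes of a user's current and expired passwords
    and refuse any change that reuses one of them."""

    def __init__(self):
        self._entries = []  # (salt, digest) pairs, oldest first

    @staticmethod
    def _hash(password, salt):
        # The iteration count is an assumed value chosen for illustration.
        return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, 100_000)

    def was_used(self, password):
        """Return True if the password matches any stored entry."""
        return any(
            hmac.compare_digest(self._hash(password, salt), digest)
            for salt, digest in self._entries
        )

    def change(self, new_password):
        """Record the new password, or return False if it was used before."""
        if self.was_used(new_password):
            return False
        salt = os.urandom(16)
        self._entries.append((salt, self._hash(new_password, salt)))
        return True
```

Because only salted hashes are stored, the list of expired passwords reveals far less if it becomes public, and a user cannot simply switch back to an original password after the forced change.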

Protecting secrets

Secrets are typically protected with cryptography, which is the subject of Part III. Cryptography uses complex mathematical algorithms to encode secrets, and the type of algorithm used depends on the length of time the data needs protection, which is, in turn, influenced by the lifetime of the secret. See Chapter 12 for an introduction to cryptography, and Chapter 13 through Chapter 17 for in-depth coverage of how the .NET Framework supports cryptographic techniques.

End-to-End Security

The final concept we introduce in this chapter is end-to-end security, which is the result of considering the wider aspects of security, beyond the resources and secrets protected by your application. Although this book is focused on implementing software security, it is important that you take a higher-level view, taking into account the real world and its complexity. The following sections highlight other issues that you should consider.

Real-World Trust Relationships

One of the most important things to remember about security is that not everyone shares your motivations and aspirations, and not everyone thinks the way that you think. The most carefully defined software trust system may not reflect the actual trust that has been granted to users of an application.

As a simple example, when we outlined the important roles in security, we differentiated between the legitimate users of an application and the hackers who want to subvert it. The reality is less clear-cut; most fraud is perpetrated by employees otherwise trusted by their company. When you grant trust to a user, you may be providing that individual with tools that will be used to defraud, and otherwise rob, your company.

Differences in motivation are especially relevant when considering coercion; although you may feel that the security and profitability of your application are paramount, others may not. In some countries, it is common to rob a bank by kidnapping the branch manager’s children, coercing the manager to unlock the bank and provide access to the vaults in order to secure the freedom of his offspring. The robbers have secured access to the protected resources with the explicit cooperation of a trusted employee. When thinking about security, you must give consideration to motivation, both of the potential users and the potential attackers.

Side Channels

A side-channel attack employs methods that have little to do with the software security measures that protect an application. For example, looking over a person’s shoulder while he types his password circumvents any policy that may be put in place to control identity authentication; the attacker has side-stepped the security measures and can now access the system.

Identifying potential side-channel attacks takes a lot of lateral thinking, and requires careful evaluation of the information exposed by your security systems that attackers might be able to exploit. Various smart-card technologies, for example, have been compromised by analyzing variations in power consumption, electromagnetic radiation, and the amount of time taken to process an authentication request associated with their use. Such an attack takes time and determination, but can be profitable if the smart card is used to manage cash transactions or provides access to expensive resources.
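The timing variation mentioned above has a simple software analogue. In the Python sketch below, `naive_equal` returns as soon as it finds a mismatch, so the time it takes hints at how many leading bytes of a guess were correct; `constant_time_equal` delegates to the standard library's `hmac.compare_digest`, which is designed to avoid that dependence. Both function names are invented for this illustration, and the example concerns only timing, not power or electromagnetic analysis.

```python
import hmac


def naive_equal(a: bytes, b: bytes) -> bool:
    # Returns at the first mismatch, so the running time leaks how many
    # leading bytes of the two values matched.
    if len(a) != len(b):
        return False
    for x, y in zip(a, b):
        if x != y:
            return False
    return True


def constant_time_equal(a: bytes, b: bytes) -> bool:
    # hmac.compare_digest is designed so that its running time does not
    # depend on where the inputs differ.
    return hmac.compare_digest(a, b)
```

The two functions always return the same answer; they differ only in what an attacker who measures response times can learn from them.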

Physical Security

Physical security is often ignored when application software is secured. Many years of effort may go into designing an application that carefully controls access to the contents of a warehouse, but if the security of the warehouse itself is weak, then attackers will simply steal your goods directly rather than attempting to subvert your application to ship goods free-of-charge.

A good example of physical security is represented by automated teller machines (ATMs). An ATM employs physical measures to protect against tampering and outright theft, as well as the software measures that authenticate your card and PIN.

Third-Party Software

Finally, we draw your attention to third-party software, which you may rely on for the development of your application. Examples of this kind of software include development environments (such as Microsoft Visual Studio .NET), class libraries, and language compilers.

It is important for you to understand that by using third-party software, you are trusting the software publisher to produce software that does not present a security risk, either in the functionality that is provided or by the introduction of malicious features. You should also realize that when you distribute software that depends on third-party software, you are asking your customers to grant the same level of trust to the third parties as they do to you and your organization.

For example, you may trust Microsoft to produce safe and secure software, and you may feel confident that Internet Explorer provides a secure environment in which to browse the World Wide Web. However, if you look at the information provided by the “About Internet Explorer” window, you will see that Internet Explorer 6 is built, in part, with software licenses from the following organizations:

- National Center for Supercomputing Applications at the University of Illinois at Urbana-Champaign

- Spyglass, Inc.

- RSA Data Security, Inc.

- The Independent JPEG Group

- Intel Corp.

- Mainsoft Corp.

When you trust Microsoft to deliver Internet Explorer without the inclusion of security-related defects or malicious code, you also implicitly trust all of the companies listed above, some of which you may never have heard of. Each of these companies may in turn license content or functionality from other publishers, and the chain continues. The trust that you confer on an individual software publisher goes far beyond what you may expect, and this is as true for development software and class libraries as it is for Windows applications.

When selecting third-party tools and libraries for your development projects, you must consider the level of trust that you assign to the software publisher and any other companies or individuals that may have contributed to these products.
