Chapter 5. Textures and Image Capture

Shading algorithms are required for effects that need to respond to a changing condition in real time, such as the movement of a light source. But procedural methods can go only so far on their own; they can never replace the creativity of a professional artist. That’s where textures come to the rescue. Texturing allows any predefined image, such as a photograph, to be projected onto a 3D surface.

Simply put, textures are images; yet somehow, an entire vocabulary is built around them. Pixels that make up a texture are known as texels. When the hardware reads a texel color, it’s said to be sampling. When OpenGL scales a texture, it’s also filtering it. Don’t let the vocabulary intimidate you; the concepts are simple. In this chapter, we’ll focus on presenting the basics, saving some advanced techniques for later in this book.

We’ll begin by modifying ModelViewer to support image loading and simple texturing. Afterward we’ll take a closer look at some of the OpenGL features involved, such as mipmapping and filtering. Toward the end of the chapter we’ll use texturing to perform a fun trick with the iPhone camera.

Adding Textures to ModelViewer

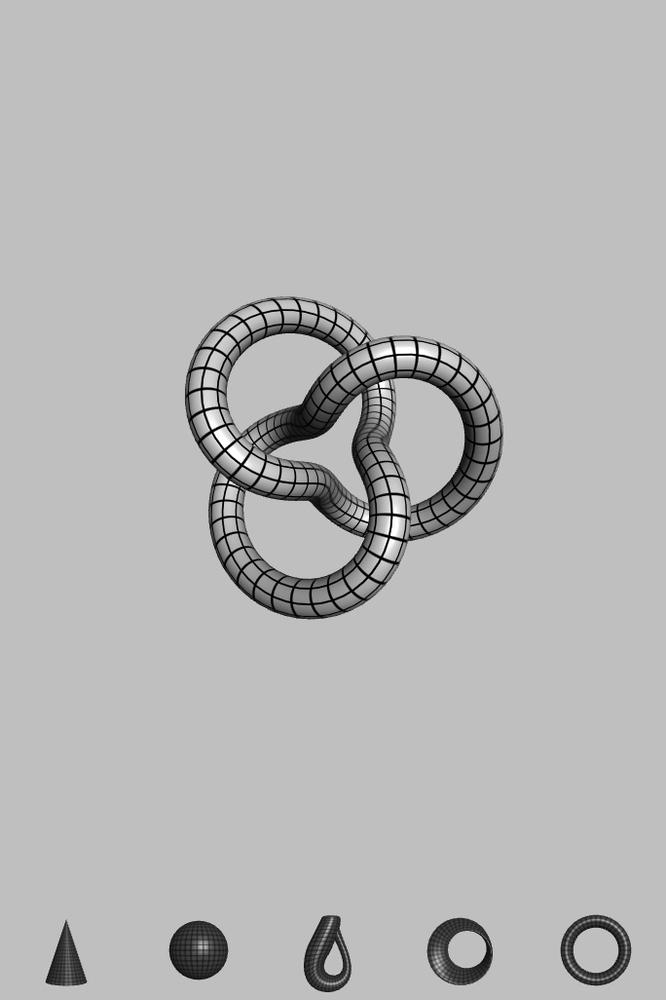

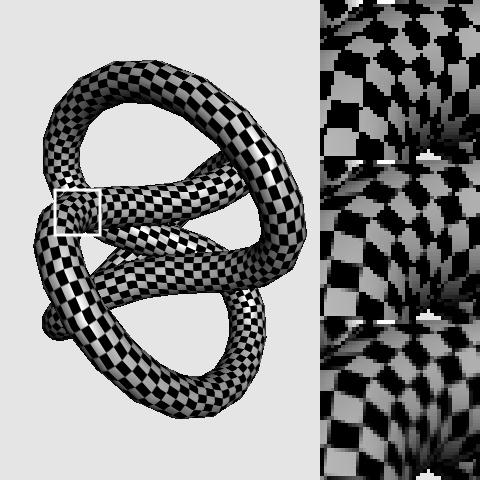

Our final enhancement to ModelViewer wraps a simple grid texture around each of the surfaces in the parametric gallery, as shown in Figure 5-1. We need to store only one cell of the grid in an image file; OpenGL can repeat the source pattern as many times as desired.

The image file can be in a number of different file formats, but we’ll go with PNG for now. It’s popular because it supports an optional alpha channel, lossless compression, and variable color precision. (Another common format on the iPhone platform is PVR, which we’ll cover later in the chapter.)

You can either download the image file for the grid cell from this book’s companion site or create one using your favorite paint or graphics program. I used a tiny 16×16 white square with a 1-pixel black border. Save the file as Grid16.png.

Since we’ll be loading a resource from an external file, let’s use the .OBJ-loading sample from the previous chapter as the starting point. To keep things simple, the first step is rewinding a bit and using parametric surfaces exclusively. Simply revert the ApplicationEngine::Initialize method so that it uses the sphere and cone shapes. To do this, find the following code:

string path = m_resourceManager->GetResourcePath();

surfaces[0] = new ObjSurface(path + "/micronapalmv2.obj");

surfaces[1] = new ObjSurface(path + "/Ninja.obj");

And replace it with the following:

surfaces[0] = new Cone(3, 1);

surfaces[1] = new Sphere(1.4f);

Keep everything else the same. We’ll enhance the IResourceManager interface later to support image loading.

Next you need to add the actual image file to your Xcode project. As with the OBJ files in the previous chapter, Xcode automatically deploys these resources to your iPhone. Even though this example has only a single image file, I recommend creating a dedicated group anyway. Right-click the ModelViewer root in the Overview pane, choose Add→New Group, and call it Textures. Right-click the new group, and choose Get Info. To the right of the Path label on the General tab, click Choose, and create a new folder called Textures. Click Choose, and close the group info window.

Right-click the new group, and choose Add→Existing Files. Select the PNG file, click Add, and in the next dialog box, make sure the “Copy items” checkbox is checked; then click Add.

Enhancing IResourceManager

Unlike OBJ files, PNG files are nontrivial to decode by hand because the format uses a lossless compression algorithm. Rather than manually decoding the PNG file in the application code, it makes more sense to leverage the infrastructure that Apple provides for reading image files. Recall that the ResourceManager implementation is a mix of Objective-C and C++, so it’s an ideal place for calling Apple-specific APIs. Let’s make the resource manager responsible for decoding the PNG file. The first step is to open Interfaces.hpp and make the changes shown in Example 5-1. The new lines are the four image-related methods (LoadPngImage, GetImageData, GetImageSize, and UnloadImage); the changes to the last two declarations, which you’ll find at the end of the file, are needed to support a change we’ll be making to the rendering engine.

struct IResourceManager {

virtual string GetResourcePath() const = 0;

virtual void LoadPngImage(const string& filename) = 0;

virtual void* GetImageData() = 0;

virtual ivec2 GetImageSize() = 0;

virtual void UnloadImage() = 0;

virtual ~IResourceManager() {}

};

// ...

namespace ES1 { IRenderingEngine*

CreateRenderingEngine(IResourceManager* resourceManager); }

namespace ES2 { IRenderingEngine*

CreateRenderingEngine(IResourceManager* resourceManager); }

Now let’s open ResourceManager.mm and update the actual implementation class (don’t delete the #imports, the using statement, or the CreateResourceManager definition). It needs two additional items to maintain state: the decoded memory buffer (m_imageData) and the size of the image (m_imageSize). Apple provides several ways of loading PNG files; Example 5-2 is the simplest way of doing this.

class ResourceManager : public IResourceManager {

public:

string GetResourcePath() const

{

NSString* bundlePath = [[NSBundle mainBundle] resourcePath];

return [bundlePath UTF8String];

}

void LoadPngImage(const string& name)

{

NSString* basePath = [NSString stringWithUTF8String:name.c_str()];

NSString* resourcePath = [[NSBundle mainBundle] resourcePath];

NSString* fullPath = [resourcePath stringByAppendingPathComponent:basePath];

UIImage* uiImage = [UIImage imageWithContentsOfFile:fullPath];

CGImageRef cgImage = uiImage.CGImage;

m_imageSize.x = CGImageGetWidth(cgImage);

m_imageSize.y = CGImageGetHeight(cgImage);

m_imageData = CGDataProviderCopyData(CGImageGetDataProvider(cgImage));

}

void* GetImageData()

{

return (void*) CFDataGetBytePtr(m_imageData);

}

ivec2 GetImageSize()

{

return m_imageSize;

}

void UnloadImage()

{

CFRelease(m_imageData);

}

private:

CFDataRef m_imageData;

ivec2 m_imageSize;

};

Most of Example 5-2 is straightforward, but LoadPngImage deserves some extra explanation:

Create an instance of a UIImage object using the imageWithContentsOfFile method, which slurps up the contents of the entire file.

Extract the inner CGImage object from UIImage. UIImage is essentially a convenience wrapper for CGImage. Note that CGImage is a Core Graphics class, which is shared across the Mac OS X and iPhone OS platforms; UIImage comes from UIKit and is therefore iPhone-specific.

Generate a CFData object from the CGImage object. CFData is a Core Foundation object that represents a swath of memory.

Warning

The image loader in Example 5-2 is simple but not robust. For production code, I recommend using one of the enhanced methods presented later in the chapter.

Next we need to make sure that the resource manager can be accessed from the right places. It’s already instanced in the GLView class and passed to the application engine; now we need to pass it to the rendering engine as well. The OpenGL code needs it to retrieve the raw image data.

Go ahead and change GLView.mm so that the resource manager gets passed to the rendering engine during construction. Example 5-3 shows the relevant section of code (the additions are the m_resourceManager arguments passed to CreateRenderingEngine).

m_resourceManager = CreateResourceManager();

if (api == kEAGLRenderingAPIOpenGLES1) {

NSLog(@"Using OpenGL ES 1.1");

m_renderingEngine = ES1::CreateRenderingEngine(m_resourceManager);

} else {

NSLog(@"Using OpenGL ES 2.0");

m_renderingEngine = ES2::CreateRenderingEngine(m_resourceManager);

}

m_applicationEngine = CreateApplicationEngine(m_renderingEngine,

m_resourceManager);

Generating Texture Coordinates

To control how the texture gets applied to the models, we need to add a 2D texture coordinate attribute to the vertices. The natural place to generate texture coordinates is in the ParametricSurface class. Each subclass should specify how many repetitions of the texture get tiled across each axis of the domain. Consider the torus: its outer circumference is much longer than the circumference of its cross section. Since the x-axis in the domain follows the outer circumference and the y-axis circumscribes the cross section, it follows that more copies of the texture need to be tiled across the x-axis than the y-axis. This prevents the grid pattern from being stretched along one axis.

Recall that each parametric surface describes its domain using the ParametricInterval structure, so that’s a natural place to store the number of repetitions; see Example 5-4. Note that the repetition counts for each axis are stored in a vec2.
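Going by how the structure is initialized and read in the code that follows (interval.Divisions, interval.UpperBound, interval.TextureCount), a minimal sketch of ParametricInterval with the extra field might look like this. The small ivec2 and vec2 types are simplified stand-ins for the book's vector library, the field order is inferred from the aggregate initializers used by Cone and Sphere, and MakeConeInterval is a hypothetical helper added only for illustration.

```cpp
#include <cassert>

// Stand-ins for the book's vector types (assumed, simplified).
struct ivec2 { int x, y; };
struct vec2 { float x, y; };

// Sketch of the interval description with the new repetition count.
// The field order is inferred from the initializers used by Cone and Sphere.
struct ParametricInterval {
    ivec2 Divisions;    // number of vertices along each domain axis
    vec2 UpperBound;    // upper end of the domain on each axis
    vec2 TextureCount;  // texture repetitions along each domain axis
};

// Hypothetical helper: builds the interval that the cone uses in this chapter.
inline ParametricInterval MakeConeInterval()
{
    const float TwoPi = 6.2831853f;
    ParametricInterval interval = { {20, 20}, {TwoPi, 1.0f}, {30.0f, 20.0f} };
    assert(interval.Divisions.x > 1 && interval.Divisions.y > 1);
    return interval;
}
```

Keeping the repetition counts next to the domain bounds means each surface can describe its tiling in the same place where it describes its shape.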

The texture counts that I chose for the cone and sphere are the last vec2 in each interval in Example 5-5. For brevity’s sake, I’ve omitted the other parametric surfaces; as always, you can download the code from this book’s website (http://oreilly.com/catalog/9780596804831).

#include "ParametricSurface.hpp"

class Cone : public ParametricSurface {

public:

Cone(float height, float radius) : m_height(height), m_radius(radius)

{

ParametricInterval interval = { ivec2(20, 20), vec2(TwoPi, 1), vec2(30,20) };

SetInterval(interval);

}

...

};

class Sphere : public ParametricSurface {

public:

Sphere(float radius) : m_radius(radius)

{

ParametricInterval interval = { ivec2(20, 20), vec2(Pi, TwoPi), vec2(20, 35) };

SetInterval(interval);

}

...

};

Next we need to flesh out a couple of methods in ParametricSurface (Example 5-6). Recall that we’re passing in a set of flags to GenerateVertices to request a set of vertex attributes; until now, we’ve been ignoring the VertexFlagsTexCoords flag.

void ParametricSurface::SetInterval(const ParametricInterval& interval)

{

m_divisions = interval.Divisions;

m_slices = m_divisions - ivec2(1, 1);

m_upperBound = interval.UpperBound;

m_textureCount = interval.TextureCount;

}

...

void ParametricSurface::GenerateVertices(vector<float>& vertices,

unsigned char flags) const

{

int floatsPerVertex = 3;

if (flags & VertexFlagsNormals)

floatsPerVertex += 3;

if (flags & VertexFlagsTexCoords)

floatsPerVertex += 2;

vertices.resize(GetVertexCount() * floatsPerVertex);

float* attribute = &vertices[0];

for (int j = 0; j < m_divisions.y; j++) {

for (int i = 0; i < m_divisions.x; i++) {

// Compute Position

vec2 domain = ComputeDomain(i, j);

vec3 range = Evaluate(domain);

attribute = range.Write(attribute);

// Compute Normal

if (flags & VertexFlagsNormals) {

...

}

// Compute Texture Coordinates

if (flags & VertexFlagsTexCoords) {

float s = m_textureCount.x * i / m_slices.x;

float t = m_textureCount.y * j / m_slices.y;

attribute = vec2(s, t).Write(attribute);

}

}

}

}

In OpenGL, texture coordinates are normalized such that (0,0) maps to one corner of the image and (1, 1) maps to the other corner, regardless of its size. The inner loop in Example 5-6 computes the texture coordinates like this:

float s = m_textureCount.x * i / m_slices.x;

float t = m_textureCount.y * j / m_slices.y;

Since the s coordinate ranges from zero up to m_textureCount.x (inclusive), OpenGL horizontally tiles m_textureCount.x repetitions of the texture across the surface. We’ll take a deeper look at how texture coordinates work later in the chapter.
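To see the range of values this produces, here is a standalone sketch of the same computation outside the ParametricSurface class. GenerateTexCoords and TexCoord are hypothetical names used only for this illustration; the arithmetic follows the two lines above.

```cpp
#include <cassert>
#include <vector>

struct TexCoord { float s, t; };

// Computes texture coordinates for a grid of divisionsX x divisionsY vertices,
// spreading countS x countT repetitions of the texture across the surface.
std::vector<TexCoord> GenerateTexCoords(int divisionsX, int divisionsY,
                                        float countS, float countT)
{
    assert(divisionsX > 1 && divisionsY > 1);
    const int slicesX = divisionsX - 1;
    const int slicesY = divisionsY - 1;
    std::vector<TexCoord> coords;
    coords.reserve(divisionsX * divisionsY);
    for (int j = 0; j < divisionsY; j++) {
        for (int i = 0; i < divisionsX; i++) {
            TexCoord c;
            c.s = countS * i / slicesX;  // 0 at the first column, countS at the last
            c.t = countT * j / slicesY;  // 0 at the first row, countT at the last
            coords.push_back(c);
        }
    }
    return coords;
}
```

With the cone's settings (20 divisions per axis and counts of 30 and 20), the first vertex gets (0, 0) and the last gets (30, 20), so the grid cell repeats 30 times in one direction and 20 in the other.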

Note that if you were loading the model data from an OBJ file or other 3D format, you’d probably obtain the texture coordinates directly from the model file rather than computing them like we’re doing here.

Enabling Textures with ES1::RenderingEngine

As always, let’s start with the ES 1.1 rendering engine since the 2.0 variant is more complex. The first step is adding a pointer to the resource manager as shown in Example 5-7. Note we’re also adding a GLuint for the grid texture. Much like framebuffer objects and vertex buffer objects, OpenGL textures have integer names.

class RenderingEngine : public IRenderingEngine {

public:

RenderingEngine(IResourceManager* resourceManager);

void Initialize(const vector<ISurface*>& surfaces);

void Render(const vector<Visual>& visuals) const;

private:

vector<Drawable> m_drawables;

GLuint m_colorRenderbuffer;

GLuint m_depthRenderbuffer;

mat4 m_translation;

GLuint m_gridTexture;

IResourceManager* m_resourceManager;

};

IRenderingEngine* CreateRenderingEngine(IResourceManager* resourceManager)

{

return new RenderingEngine(resourceManager);

}

RenderingEngine::RenderingEngine(IResourceManager* resourceManager)

{

m_resourceManager = resourceManager;

glGenRenderbuffersOES(1, &m_colorRenderbuffer);

glBindRenderbufferOES(GL_RENDERBUFFER_OES, m_colorRenderbuffer);

}

Example 5-8 shows the code for loading the texture, followed by a detailed explanation.

void RenderingEngine::Initialize(const vector<ISurface*>& surfaces)

{

vector<ISurface*>::const_iterator surface;

for (surface = surfaces.begin(); surface != surfaces.end(); ++surface) {

// Create the VBO for the vertices.

vector<float> vertices;

(*surface)->GenerateVertices(vertices, VertexFlagsNormals

|VertexFlagsTexCoords);

// ...

// Load the texture.

glGenTextures(1, &m_gridTexture);

glBindTexture(GL_TEXTURE_2D, m_gridTexture);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

m_resourceManager->LoadPngImage("Grid16.png");

void* pixels = m_resourceManager->GetImageData();

ivec2 size = m_resourceManager->GetImageSize();

glTexImage2D(GL_TEXTURE_2D, 0, GL_RGBA, size.x,

size.y, 0, GL_RGBA, GL_UNSIGNED_BYTE, pixels);

m_resourceManager->UnloadImage();

// Set up various GL state.

glEnableClientState(GL_VERTEX_ARRAY);

glEnableClientState(GL_NORMAL_ARRAY);

glEnableClientState(GL_TEXTURE_COORD_ARRAY);

glEnable(GL_LIGHTING);

glEnable(GL_LIGHT0);

glEnable(GL_DEPTH_TEST);

glEnable(GL_TEXTURE_2D);

...

}

Generate the integer identifier for the object, and then bind it to the pipeline. This follows the Gen/Bind pattern used by FBOs and VBOs.

Set the minification filter and magnification filter of the texture object. The texture filter is the algorithm that OpenGL uses to shrink or enlarge a texture; we’ll cover filtering in detail later.

Tell the resource manager to load and decode the Grid16.png file.

Upload the raw texture data to OpenGL using

glTexImage2D; more on this later.

Tell OpenGL to enable the texture coordinate vertex attribute.

Example 5-8 introduces the glTexImage2D function, which unfortunately has more parameters than it needs for historical reasons. Don’t be intimidated by the nine parameters; it’s much easier to use than it appears. Here’s the formal declaration:

void glTexImage2D(GLenum target, GLint level, GLint internalformat,

GLsizei width, GLsizei height, GLint border,

GLenum format, GLenum type, const GLvoid* pixels);

- target

This specifies which binding point to upload the texture to. For ES 1.1, this must be GL_TEXTURE_2D.

- level

This specifies the mipmap level. We’ll learn more about mipmaps soon. For now, use zero for this.

- internalFormat

This specifies the format of the texture. We’re using GL_RGBA for now, and other formats will be covered shortly. It’s declared as a GLint rather than a GLenum for historical reasons.

- width, height

These specify the dimensions, in texels, of the image being uploaded.

- border

Set this to zero; texture borders are not supported in OpenGL ES. Be happy, because that’s one less thing you have to remember!

- format

In OpenGL ES, this has to match internalFormat. The argument may seem redundant, but it’s yet another carryover from desktop OpenGL, which supports format conversion. Again, be happy; this is a simpler API.

- type

This describes the type of each color component. This is commonly GL_UNSIGNED_BYTE, but we’ll learn about some other types later.

- pixels

This is a pointer to the raw image data to upload.
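One practical consequence of the format and type arguments: with GL_RGBA and GL_UNSIGNED_BYTE, OpenGL reads four bytes for every texel, so the buffer behind pixels must hold at least width × height × 4 bytes. The sketch below shows that arithmetic. Both function names are hypothetical, and comparing the result against the length of the decoded buffer (for instance, the value reported by CFDataGetLength) is only one possible sanity check, not something the sample code does.

```cpp
#include <cassert>
#include <cstddef>

// Bytes that glTexImage2D reads for a GL_RGBA / GL_UNSIGNED_BYTE upload:
// four one-byte components per texel.
std::size_t ExpectedRgbaByteCount(int width, int height)
{
    assert(width > 0 && height > 0);
    return static_cast<std::size_t>(width) * static_cast<std::size_t>(height) * 4;
}

// Hypothetical guard: true when the decoded buffer is large enough to upload.
bool IsLargeEnoughForUpload(std::size_t bufferByteCount, int width, int height)
{
    return bufferByteCount >= ExpectedRgbaByteCount(width, height);
}
```

For the 16×16 grid cell this works out to 1,024 bytes; a buffer of 768 bytes (three bytes per texel) would be too small for an RGBA upload.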

Next let’s go over the Render method. The only difference is that the vertex stride is larger, and we need to call glTexCoordPointer to give OpenGL the correct offset into the VBO. See Example 5-9.

void RenderingEngine::Render(const vector<Visual>& visuals) const

{

glClearColor(0.5f, 0.5f, 0.5f, 1);

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

vector<Visual>::const_iterator visual = visuals.begin();

for (int visualIndex = 0;

visual != visuals.end();

++visual, ++visualIndex)

{

// ...

// Draw the surface.

int stride = sizeof(vec3) + sizeof(vec3) + sizeof(vec2);

const GLvoid* texCoordOffset = (const GLvoid*) (2 * sizeof(vec3));

const Drawable& drawable = m_drawables[visualIndex];

glBindBuffer(GL_ARRAY_BUFFER, drawable.VertexBuffer);

glVertexPointer(3, GL_FLOAT, stride, 0);

const GLvoid* normalOffset = (const GLvoid*) sizeof(vec3);

glNormalPointer(GL_FLOAT, stride, normalOffset);

glTexCoordPointer(2, GL_FLOAT, stride, texCoordOffset);

glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, drawable.IndexBuffer);

glDrawElements(GL_TRIANGLES, drawable.IndexCount, GL_UNSIGNED_SHORT, 0);

}

}
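The stride and offsets used above follow directly from the interleaved layout of each vertex: a position, then a normal, then a texture coordinate. As a rough sketch, assuming vec2 and vec3 are tightly packed 4-byte floats (the stand-in types and the VertexLayout and ComputeLayout names are hypothetical), the numbers work out as follows.

```cpp
#include <cassert>
#include <cstddef>

// Stand-ins for the book's vector types (assumed to be tightly packed floats).
struct vec2 { float x, y; };
struct vec3 { float x, y, z; };

// Byte layout of one interleaved vertex: position, normal, texture coordinate.
struct VertexLayout {
    std::size_t Stride;
    std::size_t NormalOffset;
    std::size_t TexCoordOffset;
};

VertexLayout ComputeLayout()
{
    VertexLayout layout;
    layout.Stride = sizeof(vec3) + sizeof(vec3) + sizeof(vec2);
    layout.NormalOffset = sizeof(vec3);        // normals follow the position
    layout.TexCoordOffset = 2 * sizeof(vec3);  // texture coordinates follow the normal
    // The texture coordinate is the last attribute, so it must end at the stride.
    assert(layout.TexCoordOffset + sizeof(vec2) == layout.Stride);
    return layout;
}
```

Under those assumptions each vertex occupies 32 bytes, with normals starting at byte 12 and texture coordinates at byte 24.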

That’s it for the ES 1.1 backend! Incidentally, in more complex applications you should take care to delete your textures after you’re done with them; textures can be one of the biggest resource hogs in OpenGL. Deleting a texture is done like so:

glDeleteTextures(1, &m_gridTexture);

This function is similar to glGenTextures in that it takes a count and a list of names. Incidentally, vertex buffer objects are deleted in a similar manner using glDeleteBuffers.

Enabling Textures with ES2::RenderingEngine

The ES 2.0 backend requires some changes to both the vertex shader (to pass along the texture coordinate) and the fragment shader (to apply the texel color). You do not call glEnable(GL_TEXTURE_2D) with ES 2.0; it simply depends on what your fragment shader does.

Let’s start with the vertex shader, shown in Example 5-10. This is a modification of the simple lighting shader presented in the previous chapter (Example 4-15). Only three new lines are required: the TextureCoord attribute, the TextureCoordOut varying, and the final assignment that copies one to the other.

Note

If you tried some of the other vertex shaders in that chapter, such as the pixel shader or toon shader, the current shader in your project may look different from Example 4-15.

attribute vec4 Position;

attribute vec3 Normal;

attribute vec3 DiffuseMaterial;

attribute vec2 TextureCoord;

uniform mat4 Projection;

uniform mat4 Modelview;

uniform mat3 NormalMatrix;

uniform vec3 LightPosition;

uniform vec3 AmbientMaterial;

uniform vec3 SpecularMaterial;

uniform float Shininess;

varying vec4 DestinationColor;

varying vec2 TextureCoordOut;

void main(void)

{

vec3 N = NormalMatrix * Normal;

vec3 L = normalize(LightPosition);

vec3 E = vec3(0, 0, 1);

vec3 H = normalize(L + E);

float df = max(0.0, dot(N, L));

float sf = max(0.0, dot(N, H));

sf = pow(sf, Shininess);

vec3 color = AmbientMaterial + df * DiffuseMaterial + sf * SpecularMaterial;

DestinationColor = vec4(color, 1);

gl_Position = Projection * Modelview * Position;

TextureCoordOut = TextureCoord;

}

Note

To try these, you can replace the contents of your existing .vert and .frag files. Just be sure not to delete the first line with STRINGIFY or the last line with the closing parenthesis and semicolon.

Example 5-10 simply passes the texture coordinates through, but you can achieve many interesting effects by manipulating the texture coordinates, or even generating them from scratch. For example, to achieve a “movie projector” effect, simply replace the last line in Example 5-10 with this:

TextureCoordOut = gl_Position.xy * 2.0;

For now, let’s stick with the boring pass-through shader because it better emulates the behavior of ES 1.1. The new fragment shader is a bit more interesting; see Example 5-11.

As before, declare a low-precision varying to receive the color produced by lighting.

Declare a medium-precision varying to receive the texture coordinates.

Declare a uniform sampler, which represents the texture stage from which we’ll retrieve the texel color.

Use the texture2D function to look up a texel color from the sampler, then multiply it by the lighting color to produce the final color. This is component-wise multiplication, not a dot product or a cross product.
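Put together, the four steps above suggest a fragment shader along the following lines. This is a reconstruction rather than the book's listing: the varying names are taken from the vertex shader in Example 5-10, the uniform name Sampler from the lookup code used in this chapter, and the source is embedded in a C++ string purely so that it can be shown alongside a small check. DeclaresSampler is a hypothetical helper.

```cpp
#include <cassert>
#include <string>

// Sketch of a fragment shader matching the four steps above (GLSL ES 1.00).
// Names are assumed from the vertex shader and the "Sampler" uniform lookup.
const char* const TexturedFragmentShader =
    "varying lowp vec4 DestinationColor;\n"     // lighting color from the vertex shader
    "varying mediump vec2 TextureCoordOut;\n"   // interpolated texture coordinate
    "uniform sampler2D Sampler;\n"              // texture stage to sample from
    "void main(void)\n"
    "{\n"
    "    gl_FragColor = texture2D(Sampler, TextureCoordOut) * DestinationColor;\n"
    "}\n";

// Hypothetical helper: confirms the source declares the uniform that the
// application looks up by name.
bool DeclaresSampler(const std::string& source)
{
    assert(!source.empty());
    return source.find("uniform sampler2D Sampler;") != std::string::npos;
}
```

In the project itself the shader body would live in the .frag file between the STRINGIFY wrapper lines rather than in a string literal like this.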

When setting a uniform sampler from within your application, a common mistake is to set it to the handle of the texture object you’d like to sample:

glBindTexture(GL_TEXTURE_2D, textureHandle);

GLint location = glGetUniformLocation(programHandle, "Sampler");

glUniform1i(location, textureHandle); // Incorrect

glUniform1i(location, 0); // Correct

The correct value of the sampler is the stage index that you’d like to sample from, not the handle. Since all uniforms default to zero, it’s fine to not bother setting sampler values if you’re not using multitexturing (we’ll cover multitexturing later in this book).

Newly introduced in Example 5-11 is the texture2D function call. For input, it takes a uniform sampler and a vec2 texture coordinate. Its return value is always a vec4, regardless of the texture format.

Warning

The OpenGL ES specification stipulates that texture2D can be called from vertex shaders as well, but on many platforms, including the iPhone, it’s actually limited to fragment shaders only.

Note that Example 5-11 uses multiplication to combine the lighting color and texture color; this often produces good results. Multiplying two colors in this way is called modulation, and it’s the default method used in ES 1.1.
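To make modulation concrete, here is a CPU-side sketch of the same component-wise multiplication. The Color type and Modulate function are hypothetical names for this illustration; in the shader the work is done by the * operator on two vec4 values.

```cpp
#include <cassert>

struct Color { float r, g, b, a; };

// Component-wise product of two colors, the same operation that the
// * operator performs on two vec4 values in GLSL.
Color Modulate(const Color& lighting, const Color& texel)
{
    assert(lighting.a >= 0.0f && texel.a >= 0.0f);
    Color result;
    result.r = lighting.r * texel.r;
    result.g = lighting.g * texel.g;
    result.b = lighting.b * texel.b;
    result.a = lighting.a * texel.a;
    return result;
}
```

For the grid texture this means white texels leave the lighting color untouched, while the black border texels force the result to black no matter how brightly the surface is lit.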

Now let’s make the necessary changes to the C++ code. First we need to add new class members to store the texture ID and resource manager pointer, but that’s the same as ES 1.1, so I won’t repeat it here. I also won’t repeat the texture-loading code because it’s the same with both APIs.

One new thing we need for the ES 2.0 backend is an attribute ID for texture coordinates. See Example 5-12. Note the lack of a glEnable for texturing; remember, there’s no need for it in ES 2.0.

struct AttributeHandles {

GLint Position;

GLint Normal;

GLint Ambient;

GLint Diffuse;

GLint Specular;

GLint Shininess;

GLint TextureCoord;

};

...

void RenderingEngine::Initialize(const vector<ISurface*>& surfaces)

{

vector<ISurface*>::const_iterator surface;

for (surface = surfaces.begin(); surface != surfaces.end(); ++surface) {

// Create the VBO for the vertices.

vector<float> vertices;

(*surface)->GenerateVertices(vertices, VertexFlagsNormals|VertexFlagsTexCoords);

// ...

m_attributes.TextureCoord = glGetAttribLocation(program, "TextureCoord");

// Load the texture.

glGenTextures(1, &m_gridTexture);

glBindTexture(GL_TEXTURE_2D, m_gridTexture);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

m_resourceManager->LoadPngImage("Grid16.png");

void* pixels = m_resourceManager->GetImageData();

ivec2 size = m_resourceManager->GetImageSize();

glTexImage2D(GL_TEXTURE_2D, 0, GL_RGBA, size.x,

size.y, 0, GL_RGBA, GL_UNSIGNED_BYTE, pixels);

m_resourceManager->UnloadImage();

// Initialize various state.

glEnableVertexAttribArray(m_attributes.Position);

glEnableVertexAttribArray(m_attributes.Normal);

glEnableVertexAttribArray(m_attributes.TextureCoord);

glEnable(GL_DEPTH_TEST);

...

}

You may have noticed that the fragment shader declared a sampler uniform, but we’re not setting it to anything in our C++ code. There’s actually no need to set it; all uniforms default to zero, which is what we want for the sampler’s value anyway. You don’t need a nonzero sampler unless you’re using multitexturing, which is a feature that we’ll cover in Chapter 8.

Next up is the Render method, which is pretty straightforward (Example 5-13). The only way it differs from its ES 1.1 counterpart is that it makes three calls to glVertexAttribPointer rather than calling glVertexPointer, glNormalPointer, and glTexCoordPointer. (Replace everything from // Draw the surface to the end of the method with the corresponding code.)

Note

You must also make the same changes to the ES 2.0 renderer that were shown earlier in Example 5-7.

void RenderingEngine::Render(const vector<Visual>& visuals) const

{

glClearColor(0.5f, 0.5f, 0.5f, 1);

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

vector<Visual>::const_iterator visual = visuals.begin();

for (int visualIndex = 0;

visual != visuals.end();

++visual, ++visualIndex)

{

// ...

// Draw the surface.

int stride = sizeof(vec3) + sizeof(vec3) + sizeof(vec2);

const GLvoid* normalOffset = (const GLvoid*) sizeof(vec3);

const GLvoid* texCoordOffset = (const GLvoid*) (2 * sizeof(vec3));

GLint position = m_attributes.Position;

GLint normal = m_attributes.Normal;

GLint texCoord = m_attributes.TextureCoord;

const Drawable& drawable = m_drawables[visualIndex];

glBindBuffer(GL_ARRAY_BUFFER, drawable.VertexBuffer);

glVertexAttribPointer(position, 3, GL_FLOAT, GL_FALSE, stride, 0);

glVertexAttribPointer(normal, 3, GL_FLOAT, GL_FALSE, stride, normalOffset);

glVertexAttribPointer(texCoord, 2, GL_FLOAT, GL_FALSE, stride, texCoordOffset);

glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, drawable.IndexBuffer);

glDrawElements(GL_TRIANGLES, drawable.IndexCount, GL_UNSIGNED_SHORT, 0);

}

}

That’s it! You now have a textured model viewer. Before you build and run it, select Build→Clean All Targets (we’ve made a lot of changes to various parts of this app, and this will help avoid any surprises by building the app from a clean slate). I’ll explain some of the details in the sections to come.

Texture Coordinates Revisited

Recall that texture coordinates are defined such that (0,0) is the lower-left corner and (1,1) is the upper-right corner. So, what happens when a vertex has texture coordinates that lie outside this range? The sample code is actually already sending coordinates outside this range. For example, the TextureCount parameter for the sphere is (20,35).

By default, OpenGL simply repeats the texture at every integer boundary; it lops off the integer portion of the texture coordinate before sampling the texel color. If you want to make this behavior explicit, you can add something like this to the rendering engine code:

glBindTexture(GL_TEXTURE_2D, m_gridTexture);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_REPEAT);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_REPEAT);

The wrap mode passed in to the third argument of glTexParameteri can be one of the following:

- GL_REPEAT

This is the default wrap mode; discard the integer portion of the texture coordinate.

- GL_CLAMP_TO_EDGE

Coordinates outside the [0, 1] range take the color of the texel at the nearest edge.

- GL_MIRRORED_REPEAT[4]

If the integer portion is an even number, this acts exactly like GL_REPEAT. If it’s an odd number, the fractional portion is inverted before it’s applied.
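The three modes can be mimicked on the CPU with a few lines of arithmetic. The functions below are a simplified illustration with hypothetical names, not OpenGL's exact definition; in particular, real hardware clamps to the centers of the edge texels rather than to exactly 0 and 1.

```cpp
#include <cassert>
#include <cmath>

// CPU-side illustration of how each wrap mode remaps one texture coordinate.

// GL_REPEAT: keep only the fractional part.
float WrapRepeat(float s)
{
    assert(std::isfinite(s));
    return s - std::floor(s);
}

// GL_CLAMP_TO_EDGE (simplified): pin the coordinate to the [0, 1] range.
float WrapClampToEdge(float s)
{
    assert(std::isfinite(s));
    if (s < 0.0f) return 0.0f;
    if (s > 1.0f) return 1.0f;
    return s;
}

// GL_MIRRORED_REPEAT: flip the fractional part on odd integer portions.
float WrapMirroredRepeat(float s)
{
    assert(std::isfinite(s));
    const float whole = std::floor(s);
    const float fraction = s - whole;
    const bool isOdd = std::fmod(whole, 2.0f) != 0.0f;
    return isOdd ? 1.0f - fraction : fraction;
}
```

A coordinate of 1.25, for example, samples at 0.25 with repeat, at 1.0 with clamp-to-edge, and at 0.75 with mirrored repeat.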

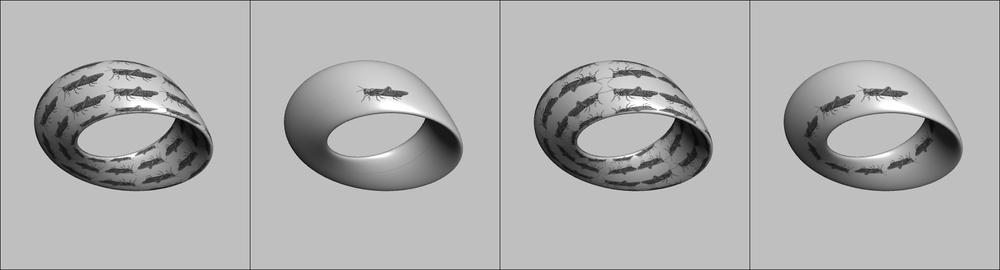

Figure 5-2 shows the three wrap modes. From left to right they’re repeat, clamp-to-edge, and mirrored. The figure on the far right uses GL_REPEAT for the S coordinate and GL_CLAMP_TO_EDGE for the T coordinate.

What would you do if you wanted to animate your texture coordinates? For example, say you want to gradually move the grasshoppers in Figure 5-2 so that they scroll around the Möbius strip. You certainly wouldn’t want to upload a new VBO at every frame with updated texture coordinates; that would be detrimental to performance. Instead, you would set up a texture matrix. Texture matrices were briefly mentioned in Saving and Restoring Transforms with Matrix Stacks, and they’re configured much the same way as the model-view and projection matrices. The only difference is that they’re applied to texture coordinates rather than vertex positions. The following snippet shows how you’d set up a texture matrix with ES 1.1 (with ES 2.0, there’s no built-in texture matrix, but it’s easy to create one with a uniform variable):

glMatrixMode(GL_TEXTURE);

glTranslatef(grasshopperOffset, 0, 0);

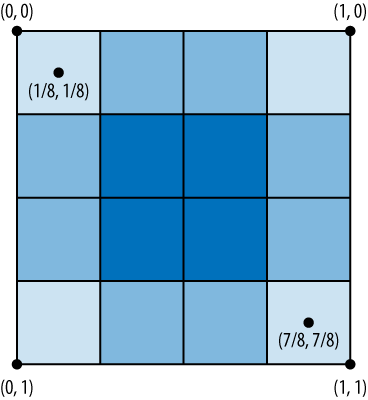

In this book, we use the convention that (0,0) maps to the upper-left of the source image (Figure 5-3) and that the Y coordinate increases downward.

By the way, saying that (1,1) maps to a texel at the far corner of the texture image isn’t quite accurate; the corner texel’s center actually sits half a texel inside that corner, at a fractional coordinate, as shown in Figure 5-3. This may seem pedantic, but you’ll see how it’s relevant when we cover filtering, which comes next.
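The position of a texel center is easy to compute. If an axis of the texture is size texels long, texel i covers the normalized range from i/size to (i+1)/size, so its center lies at (i + 0.5)/size. The helper below is a hypothetical illustration of that arithmetic.

```cpp
#include <cassert>

// Normalized coordinate of the center of texel `index` along an axis that is
// `size` texels long. Texel i spans [i/size, (i+1)/size).
float TexelCenter(int index, int size)
{
    assert(size > 0 && index >= 0 && index < size);
    return (index + 0.5f) / size;
}
```

For the 16-texel-wide grid image, the first texel is centered at 0.03125 and the last at 0.96875, so neither 0 nor 1 lands exactly on a texel center.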

Fight Aliasing with Filtering

Is a texture a collection of discrete texels, or is it a continuous function across [0, 1]? This is a dangerous question to ask a graphics geek; it’s a bit like asking a physicist if a photon is a wave or a particle.

When you upload a texture to OpenGL using glTexImage2D, it’s a collection of discrete texels. When you sample a texture using normalized texture coordinates, it’s a bit more like a continuous function. You might recall these two lines from the rendering engine:

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

What’s going on here? The first line sets the minification filter; the second line sets the magnification filter. Both of these tell OpenGL how to map those discrete texels into a continuous function.

More precisely, the minification filter specifies the scaling algorithm to use when the texture size in screen space is smaller than the original image; the magnification filter tells OpenGL what to do when the texture size in screen space is larger than the original image.

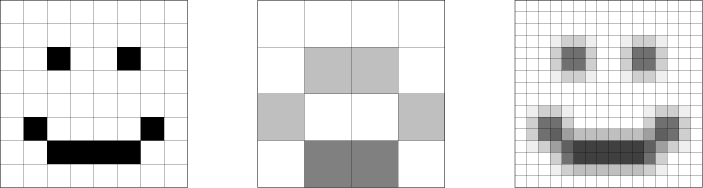

The magnification filter can be one of two values:

- GL_NEAREST

Simple and crude; use the color of the texel nearest to the texture coordinate.

- GL_LINEAR

Indicates bilinear filtering. Samples the local 2×2 square of texels and blends them together using a weighted average. The image on the far right in Figure 5-4 is an example of bilinear magnification applied to a simple 8×8 monochrome texture.
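The difference between the two filters is easier to see in code. The following is a CPU-side sketch on a tiny single-channel texture, with edge clamping for out-of-range texels; the type and function names are hypothetical, and real hardware differs in precision and other details.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// A tiny single-channel texture; texel (x, y) lives at Texels[y * Width + x].
struct GrayTexture {
    int Width, Height;
    std::vector<float> Texels;
};

// Fetches a texel, clamping out-of-range indices to the nearest edge.
float Fetch(const GrayTexture& tex, int x, int y)
{
    x = x < 0 ? 0 : (x >= tex.Width ? tex.Width - 1 : x);
    y = y < 0 ? 0 : (y >= tex.Height ? tex.Height - 1 : y);
    return tex.Texels[y * tex.Width + x];
}

// GL_NEAREST-style lookup: pick the texel whose area contains (s, t).
float SampleNearest(const GrayTexture& tex, float s, float t)
{
    assert(tex.Width > 0 && tex.Height > 0);
    return Fetch(tex, static_cast<int>(std::floor(s * tex.Width)),
                      static_cast<int>(std::floor(t * tex.Height)));
}

// GL_LINEAR-style lookup: weighted average of the 2x2 texels around (s, t).
float SampleBilinear(const GrayTexture& tex, float s, float t)
{
    assert(tex.Width > 0 && tex.Height > 0);
    // Shift by half a texel so that whole numbers fall on texel centers.
    const float x = s * tex.Width - 0.5f;
    const float y = t * tex.Height - 0.5f;
    const int x0 = static_cast<int>(std::floor(x));
    const int y0 = static_cast<int>(std::floor(y));
    const float fx = x - x0;  // horizontal blend weight
    const float fy = y - y0;  // vertical blend weight
    const float row0 = Fetch(tex, x0, y0) * (1 - fx) + Fetch(tex, x0 + 1, y0) * fx;
    const float row1 = Fetch(tex, x0, y0 + 1) * (1 - fx) + Fetch(tex, x0 + 1, y0 + 1) * fx;
    return row0 * (1 - fy) + row1 * fy;
}
```

On a 2×2 texture whose left column is black (0) and right column is white (1), sampling the exact middle returns 1 with the nearest filter but 0.5 with the bilinear filter, which is the smooth gradient you see when a small texture is magnified.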

The minification filter supports the same filters as magnification and adds four additional filters that rely on mipmaps, which are “preshrunk” images that you need to upload separately from the main image. More on mipmaps soon.

The available minification modes are as follows:

- GL_NEAREST

- GL_LINEAR

As with magnification, blend together the nearest four texels. The middle image in Figure 5-4 is an example of bilinear minification.

- GL_NEAREST_MIPMAP_NEAREST

Find the mipmap that best matches the screen-space size of the texture, and then use GL_NEAREST filtering.

- GL_LINEAR_MIPMAP_NEAREST

Find the mipmap that best matches the screen-space size of the texture, and then use GL_LINEAR filtering.

- GL_LINEAR_MIPMAP_LINEAR

Perform GL_LINEAR sampling on each of two “best fit” mipmaps, and then blend the result. OpenGL takes eight samples for this, so it’s the highest-quality filter. This is also known as trilinear filtering.

- GL_NEAREST_MIPMAP_LINEAR

Take the weighted average of two samples, where one sample is from mipmap A, the other from mipmap B.
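The mipmap-based modes all depend on choosing one or two “best fit” levels. The following sketch shows one plausible way to make that choice on the CPU. The MipChoice struct, the ChooseMips name, and the texelsPerPixel input are assumptions for illustration; actual hardware derives the level of detail from texture-coordinate derivatives, and its exact rounding rules may differ.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Result of choosing mipmaps for trilinear-style filtering.
struct MipChoice {
    int lower;   // finer of the two levels
    int upper;   // coarser of the two levels
    float blend; // 0 = use only lower, 1 = use only upper
};

// texelsPerPixel: how many level-zero texels span one screen pixel along an
// axis (assumed to be supplied by the caller for this sketch).
inline MipChoice ChooseMips(float texelsPerPixel, int levelCount)
{
    assert(levelCount > 0);
    // Each mipmap level halves the size, so the level is a base-2 logarithm.
    float lod = std::log2(std::max(texelsPerPixel, 1.0f));
    lod = std::min(lod, static_cast<float>(levelCount - 1));
    int lower = static_cast<int>(std::floor(lod));
    int upper = std::min(lower + 1, levelCount - 1);
    return MipChoice{lower, upper, lod - lower};
}
```

With a result like this in hand, GL_LINEAR_MIPMAP_LINEAR corresponds to sampling both levels bilinearly and mixing them by blend, whereas the *_MIPMAP_NEAREST modes would use only the single closest level.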

Figure 5-5 compares various filtering schemes.

Deciding on a filter is a bit of a black art; personally I often start with trilinear filtering (GL_LINEAR_MIPMAP_LINEAR), and I try cranking down to a lower-quality filter only when I’m optimizing my frame rate. Note that GL_NEAREST is perfectly acceptable in some scenarios, such as when rendering 2D quads that have the same size as the source texture.

First- and second-generation devices have some restrictions on the filters:

This isn’t a big deal since you’ll almost never want a different same-level filter for magnification and minification. Nevertheless, it’s important to note that the iPhone Simulator and newer devices do not have these restrictions.

Boosting Quality and Performance with Mipmaps

Mipmaps help with both quality and performance. They can help with performance especially when large textures are viewed from far away. Since the graphics hardware performs sampling on an image potentially much smaller than the original, it’s more likely to have the texels available in a nearby memory cache. Mipmaps can improve quality for several reasons; most importantly, they effectively cast a wider net, so the final color is less likely to be missing contributions from important nearby texels.

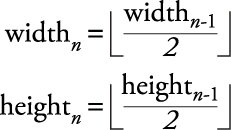

In OpenGL, mipmap zero is the original image, and every following level is half the size of the preceding level. If a level has an odd size, then the floor function is used, as in Equation 5-1.

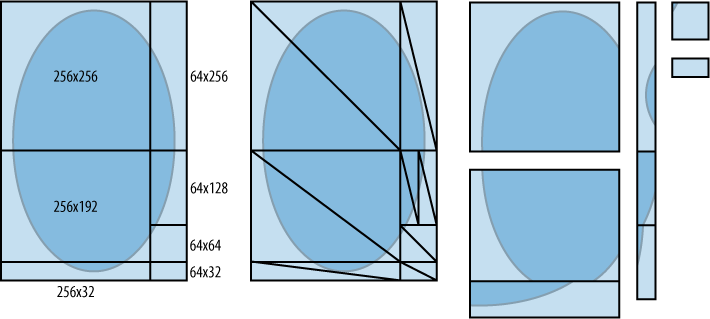
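The halving rule is easy to express in code. This is a small sketch, with function names of my own choosing, that computes the size of any level and the total number of levels. It assumes the usual OpenGL convention that a dimension never shrinks below one texel.

```cpp
#include <algorithm>
#include <cassert>

// Size of one dimension at a given mipmap level: halve with floor,
// but never drop below one texel.
inline int MipSize(int baseSize, int level)
{
    assert(baseSize > 0 && level >= 0 && level < 31);
    return std::max(1, baseSize >> level);
}

// Number of levels from the original image down to 1x1.
inline int MipLevelCount(int width, int height)
{
    assert(width > 0 && height > 0);
    int count = 1;
    for (int size = std::max(width, height); size > 1; size >>= 1)
        ++count;
    return count;
}
```

For a 16×16 texture this yields five levels (16, 8, 4, 2, 1); for a 5×3 texture it yields three levels (5×3, 2×1, 1×1).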

Watch out though, because sometimes you need to ensure that all mipmap levels have an even size. In other words, the original texture must have dimensions that are powers of two. We’ll discuss this further later in the chapter. Figure 5-6 depicts a popular way of neatly visualizing mipmap levels into an area that’s 1.5 times the original width.

To upload the mipmap levels to OpenGL, you need to make a series of separate calls to glTexImage2D, from the original size all the way down to the 1×1 mipmap:

glTexImage2D(GL_TEXTURE_2D, 0, GL_RGBA, 16, 16, 0, GL_RGBA,
             GL_UNSIGNED_BYTE, pImageData0);
glTexImage2D(GL_TEXTURE_2D, 1, GL_RGBA, 8, 8, 0, GL_RGBA,
             GL_UNSIGNED_BYTE, pImageData1);
glTexImage2D(GL_TEXTURE_2D, 2, GL_RGBA, 4, 4, 0, GL_RGBA,
             GL_UNSIGNED_BYTE, pImageData2);
glTexImage2D(GL_TEXTURE_2D, 3, GL_RGBA, 2, 2, 0, GL_RGBA,
             GL_UNSIGNED_BYTE, pImageData3);
glTexImage2D(GL_TEXTURE_2D, 4, GL_RGBA, 1, 1, 0, GL_RGBA,
             GL_UNSIGNED_BYTE, pImageData4);

Usually code like this occurs in a loop. Many OpenGL developers like to use a right-shift as a sneaky way of halving the size at each iteration. I doubt it really buys you anything, but it’s great fun:

for (int level = 0;
     level < description.MipCount;
     ++level, width >>= 1, height >>= 1, ppData++)
{
    glTexImage2D(GL_TEXTURE_2D, level, GL_RGBA, width, height,
                 0, GL_RGBA, GL_UNSIGNED_BYTE, *ppData);
}

If you’d like to avoid the tedium of creating mipmaps and loading them in individually, OpenGL ES can generate mipmaps on your behalf:

// OpenGL ES 1.1
glTexParameteri(GL_TEXTURE_2D, GL_GENERATE_MIPMAP, GL_TRUE);
glTexImage2D(GL_TEXTURE_2D, 0, ...);

// OpenGL ES 2.0
glTexImage2D(GL_TEXTURE_2D, 0, ...);
glGenerateMipmap(GL_TEXTURE_2D);

In ES 1.1, mipmap generation is part of the OpenGL state associated with the current texture object, and you should enable it before uploading level zero. In ES 2.0, mipmap generation is an action that you take after you upload level zero.

You might be wondering why you’d ever want to provide mipmaps explicitly when you can just have OpenGL generate them for you. There are actually a couple reasons for this:

There’s a performance hit for mipmap generation at upload time. This could prolong your application’s startup time, which is something all good iPhone developers obsess about.

When OpenGL performs mipmap generation for you, you’re (almost) at the mercy of whatever filtering algorithm it chooses. You can often produce higher-quality results if you provide mipmaps yourself, especially if you have a very high-resolution source image or a vector-based source.

Later we’ll learn about a couple free tools that make it easy to supply OpenGL with ready-made, preshrunk mipmaps.
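If you do decide to build mipmaps yourself, the simplest scheme is a box filter that averages each 2×2 block of the previous level. The sketch below assumes tightly packed 8-bit RGBA data with even dimensions; the function name is hypothetical, and a production tool would likely use a better filter than this.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Produce the next mipmap level from tightly packed RGBA8 pixels by
// averaging each 2x2 block. Assumes width and height are both even.
inline std::vector<std::uint8_t> DownsampleRgba(
    const std::vector<std::uint8_t>& src, int width, int height)
{
    assert(width > 0 && height > 0);
    assert(width % 2 == 0 && height % 2 == 0);
    assert(src.size() == static_cast<std::size_t>(width) * height * 4);

    int outWidth = width / 2;
    int outHeight = height / 2;
    std::vector<std::uint8_t> dst(
        static_cast<std::size_t>(outWidth) * outHeight * 4);

    for (int y = 0; y < outHeight; ++y) {
        for (int x = 0; x < outWidth; ++x) {
            for (int c = 0; c < 4; ++c) {
                int sum = 0;
                for (int dy = 0; dy < 2; ++dy)
                    for (int dx = 0; dx < 2; ++dx)
                        sum += src[((2 * y + dy) * width + (2 * x + dx)) * 4 + c];
                // Adding 2 before dividing rounds to the nearest integer.
                dst[(y * outWidth + x) * 4 + c] =
                    static_cast<std::uint8_t>((sum + 2) / 4);
            }
        }
    }
    return dst;
}
```

Calling this repeatedly, feeding each result back in as the next source, produces every level of a power-of-two texture down to 1×1; each level can then be handed to glTexImage2D with the matching level index.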

By the way, you do have some control over the mipmap generation scheme that OpenGL uses. The following lines are valid with both ES 1.1 and 2.0:

glHint(GL_GENERATE_MIPMAP_HINT, GL_FASTEST);
glHint(GL_GENERATE_MIPMAP_HINT, GL_NICEST);
glHint(GL_GENERATE_MIPMAP_HINT, GL_DONT_CARE); // this is the default

Modifying ModelViewer to Support Mipmaps

It’s easy to enable mipmapping in the ModelViewer sample. For the ES 1.1 rendering engine, enable mipmap generation after binding to the texture object, and then replace the minification filter:

glGenTextures(1, &m_gridTexture);
glBindTexture(GL_TEXTURE_2D, m_gridTexture);
glTexParameteri(GL_TEXTURE_2D, GL_GENERATE_MIPMAP, GL_TRUE);
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR_MIPMAP_LINEAR);
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);
// ...
glTexImage2D(GL_TEXTURE_2D, 0, GL_RGBA, size.x, size.y, 0,
             GL_RGBA, GL_UNSIGNED_BYTE, pixels);

For the ES 2.0 rendering engine, replace the minification filter in the same way, but call glGenerateMipmap after uploading the texture data:

glGenTextures(1, &m_gridTexture);
glBindTexture(GL_TEXTURE_2D, m_gridTexture);
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR_MIPMAP_LINEAR);
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);
// ...
glTexImage2D(GL_TEXTURE_2D, 0, GL_RGBA, size.x, size.y, 0,
             GL_RGBA, GL_UNSIGNED_BYTE, pixels);
glGenerateMipmap(GL_TEXTURE_2D);

Texture Formats and Types

Recall that two of the parameters to glTexImage2D stipulate format, and one stipulates type. In the following call, the two format arguments are both GL_RGBA, and the type argument is GL_UNSIGNED_BYTE:

glTexImage2D(GL_TEXTURE_2D, 0, GL_RGBA, 16, 16, 0,
             GL_RGBA, GL_UNSIGNED_BYTE, pImageData0);

The allowed formats are as follows:

- GL_RGB

- GL_RGBA

- GL_BGRA

Same as GL_RGBA but with the blue and red components swapped. This is a nonstandard format but available on the iPhone because of the GL_IMG_texture_format_BGRA8888 extension.

- GL_ALPHA

Single component format used as an alpha mask. (We’ll learn a lot more about alpha in the next chapter.)

- GL_LUMINANCE

- GL_LUMINANCE_ALPHA

Two component format: grayscale + alpha. This is very useful for storing text.

Don’t dismiss the non-RGB formats; if you don’t need color, you can save significant memory with the one- or two-component formats.
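To put rough numbers on those savings, the following sketch computes the size of the level-zero data you would upload for each format when the type is GL_UNSIGNED_BYTE. The SketchFormat enum and function names are hypothetical, and the figures describe the uploaded data only; how the driver stores the texture internally is outside the scope of this estimate.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical enum for this sketch; it mirrors the GL formats listed above.
enum class SketchFormat { Alpha, Luminance, LuminanceAlpha, Rgb, Rgba };

// Number of components per texel for each format.
inline int ComponentCount(SketchFormat format)
{
    switch (format) {
        case SketchFormat::Alpha:
        case SketchFormat::Luminance:      return 1;
        case SketchFormat::LuminanceAlpha: return 2;
        case SketchFormat::Rgb:            return 3;
        case SketchFormat::Rgba:           return 4;
    }
    return 0;
}

// Bytes for level zero with GL_UNSIGNED_BYTE data (one byte per component).
inline std::size_t TextureBytes(int width, int height, SketchFormat format)
{
    assert(width > 0 && height > 0);
    return static_cast<std::size_t>(width) * height * ComponentCount(format);
}
```

For a 256×256 image, this gives 262,144 bytes for RGBA, 131,072 bytes for luminance-alpha, and 65,536 bytes for a single-component format.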

The type parameter in

glTexImage2D can be one of these:

- GL_UNSIGNED_BYTE

- GL_UNSIGNED_SHORT_5_6_5

Each pixel is 16 bits wide; red and blue have five bits each, and green has six. Requires the format to be GL_RGB. The fact that green gets the extra bit isn’t random; the human eye is more sensitive to variation in green hues.

- GL_UNSIGNED_SHORT_4_4_4_4

Each pixel is 16 bits wide, and each component is 4 bits. This can be used only with GL_RGBA.

- GL_UNSIGNED_SHORT_5_5_5_1

This dedicates only one bit to alpha; a pixel can be only fully opaque or fully transparent. Each pixel is 16 bits wide. This requires format to be GL_RGBA.
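The packed 16-bit types are easier to picture with the bit shuffling written out. This sketch converts 8-bit components into each packed layout by simply dropping the low bits; the function names are my own, and a real encoder might round or dither instead of truncating.

```cpp
#include <cassert>
#include <cstdint>

// GL_UNSIGNED_SHORT_5_6_5: red in bits 15-11, green in 10-5, blue in 4-0.
inline std::uint16_t PackRgb565(std::uint8_t r, std::uint8_t g, std::uint8_t b)
{
    return static_cast<std::uint16_t>(
        ((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3));
}

// GL_UNSIGNED_SHORT_4_4_4_4: four bits each, red highest, alpha lowest.
inline std::uint16_t PackRgba4444(std::uint8_t r, std::uint8_t g,
                                  std::uint8_t b, std::uint8_t a)
{
    return static_cast<std::uint16_t>(
        ((r >> 4) << 12) | ((g >> 4) << 8) | ((b >> 4) << 4) | (a >> 4));
}

// GL_UNSIGNED_SHORT_5_5_5_1: five bits per color, one bit of alpha.
inline std::uint16_t PackRgba5551(std::uint8_t r, std::uint8_t g,
                                  std::uint8_t b, std::uint8_t a)
{
    return static_cast<std::uint16_t>(
        ((r >> 3) << 11) | ((g >> 3) << 6) | ((b >> 3) << 1) | (a >> 7));
}

// Expand a 5-bit channel back to 8 bits by replicating the high bits,
// which maps 0 to 0 and 31 to 255.
inline std::uint8_t Expand5To8(unsigned value)
{
    assert(value < 32);
    return static_cast<std::uint8_t>((value << 3) | (value >> 2));
}
```

Pure red, for example, packs to 0xF800 in 565 and to 0xF00F in 4444 when fully opaque.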

It’s also interesting to note the various formats supported by the PNG file format, even though this has nothing to do with OpenGL:

Warning

Just because a PNG file looks grayscale doesn’t mean that it’s using a grayscale-only format! The iPhone SDK includes a command-line tool called pngcrush that can help with this. (Skip ahead to Texture Compression with PVRTC to see where it’s located.) You can also right-click an image file in Mac OS X and use the Get Info option to learn about the internal format.

Hands-On: Loading Various Formats

Recall that the LoadPngImage method presented at the beginning of the chapter (Example 5-2) did not return any format information and that the rendering engine assumed the image data to be in RGBA format. Let’s try to make this a bit more robust.

We can start by enhancing the IResourceManager interface so that it returns some format information in an API-agnostic way. (Remember, we’re avoiding all platform-specific code in our interfaces.) For simplicity’s sake, let’s support only the subset of formats that are supported by both OpenGL and PNG. Open Interfaces.hpp, and make the changes shown in Example 5-14. New and modified lines are shown in bold. Note that the GetImageSize method has been removed because size is part of TextureDescription.

enum TextureFormat {
    TextureFormatGray,
    TextureFormatGrayAlpha,
    TextureFormatRgb,
    TextureFormatRgba,
};

struct TextureDescription {
    TextureFormat Format;
    int BitsPerComponent;
    ivec2 Size;
};

struct IResourceManager {
    virtual string GetResourcePath() const = 0;
    virtual TextureDescription LoadPngImage(const string& filename) = 0;
    virtual void* GetImageData() = 0;
    virtual void UnloadImage() = 0;
    virtual ~IResourceManager() {}
};

Example 5-15 shows the implementation of the new LoadPngImage method. Note the Core Graphics functions used to extract format and type information, such as CGImageGetAlphaInfo, CGImageGetColorSpace, and CGColorSpaceGetModel. I won’t go into detail about these functions because they are fairly straightforward; for more information, look them up on Apple’s iPhone Developer site.

TextureDescription LoadPngImage(const string& file)
{
    NSString* basePath = [NSString stringWithUTF8String:file.c_str()];
    NSString* resourcePath = [[NSBundle mainBundle] resourcePath];
    NSString* fullPath = [resourcePath stringByAppendingPathComponent:basePath];

    // %@ formats an Objective-C object such as NSString.
    NSLog(@"Loading PNG image %@...", fullPath);

    UIImage* uiImage = [UIImage imageWithContentsOfFile:fullPath];
    CGImageRef cgImage = uiImage.CGImage;
    m_imageData = CGDataProviderCopyData(CGImageGetDataProvider(cgImage));

    TextureDescription description;
    description.Size.x = CGImageGetWidth(cgImage);
    description.Size.y = CGImageGetHeight(cgImage);
    bool hasAlpha = CGImageGetAlphaInfo(cgImage) != kCGImageAlphaNone;
    CGColorSpaceRef colorSpace = CGImageGetColorSpace(cgImage);
    switch (CGColorSpaceGetModel(colorSpace)) {
        case kCGColorSpaceModelMonochrome:
            description.Format =
                hasAlpha ? TextureFormatGrayAlpha : TextureFormatGray;
            break;
        case kCGColorSpaceModelRGB:
            description.Format =
                hasAlpha ? TextureFormatRgba : TextureFormatRgb;
            break;
        default:
            assert(!"Unsupported color space.");
            break;
    }
    description.BitsPerComponent = CGImageGetBitsPerComponent(cgImage);

    return description;
}

Next, we need to modify the rendering engines so that they pass in the correct arguments to glTexImage2D after examining the API-agnostic texture description. Example 5-16 shows a private method that can be added to both rendering engines; it works under both ES 1.1 and 2.0, so add it to both renderers (you will also need to add its signature to the private: section of the class declaration).

private:
    void SetPngTexture(const string& name) const;
// ...

void RenderingEngine::SetPngTexture(const string& name) const
{
    TextureDescription description = m_resourceManager->LoadPngImage(name);

    GLenum format;
    switch (description.Format) {
        case TextureFormatGray:      format = GL_LUMINANCE;       break;
        case TextureFormatGrayAlpha: format = GL_LUMINANCE_ALPHA; break;
        case TextureFormatRgb:       format = GL_RGB;             break;
        case TextureFormatRgba:      format = GL_RGBA;            break;
    }

    GLenum type;
    switch (description.BitsPerComponent) {
        case 8: type = GL_UNSIGNED_BYTE; break;
        case 4:
            if (format == GL_RGBA) {
                type = GL_UNSIGNED_SHORT_4_4_4_4;
                break;
            }
            // intentionally fall through
        default:
            assert(!"Unsupported format.");
    }

    void* data = m_resourceManager->GetImageData();
    ivec2 size = description.Size;
    glTexImage2D(GL_TEXTURE_2D, 0, format, size.x, size.y,
                 0, format, type, data);
    m_resourceManager->UnloadImage();
}

Now you can remove the following snippet in the Initialize method (both rendering engines, but leave the call to glGenerateMipmap(GL_TEXTURE_2D) in the 2.0 renderer):

m_resourceManager->LoadPngImage("Grid16");
void* pixels = m_resourceManager->GetImageData();
ivec2 size = m_resourceManager->GetImageSize();
glTexImage2D(GL_TEXTURE_2D, 0, GL_RGBA, size.x, size.y, 0,
             GL_RGBA, GL_UNSIGNED_BYTE, pixels);
m_resourceManager->UnloadImage();

Replace it with a call to the new private method:

SetPngTexture("Grid16.png");

At this point, you should be able to build and run and get the same results as before.

Texture Compression with PVRTC

Textures are often the biggest memory hog in graphically intense applications. Block compression is a technique that’s quite popular in real-time graphics, even on desktop platforms. Like JPEG compression, it can cause a loss of image quality, but unlike JPEG, its compression ratio is constant and deterministic. If you know the width and height of your original image, then it’s simple to compute the number of bytes in the compressed image.

Block compression is particularly good at photographs, and in some cases it’s difficult to notice the quality loss. The noise is much more noticeable when applied to images with regions of solid color, such as vector-based graphics and text.

I strongly encourage you to use block compression when it doesn’t make a noticeable difference in image quality. Not only does it reduce your memory footprint, but it can boost performance as well, because of increased cache coherency. The iPhone supports a specific type of block compression called PVRTC, named after the PowerVR chip that serves as the iPhone’s graphics processor. PVRTC has four variants, as shown in Table 5-1.

| GL format | Contains alpha | Compression ratio[a] | Byte count |
| --- | --- | --- | --- |
| GL_COMPRESSED_RGBA_PVRTC_4BPPV1_IMG | Yes | 8:1 | Max(32, Width * Height / 2) |
| GL_COMPRESSED_RGB_PVRTC_4BPPV1_IMG | No | 6:1 | Max(32, Width * Height / 2) |
| GL_COMPRESSED_RGBA_PVRTC_2BPPV1_IMG | Yes | 16:1 | Max(32, Width * Height / 4) |
| GL_COMPRESSED_RGB_PVRTC_2BPPV1_IMG | No | 12:1 | Max(32, Width * Height / 4) |

[a] Compared to a format with 8 bits per component.
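The byte-count column translates directly into code. Here is a short sketch of that formula, plus a helper that totals a full mipmap chain for a square texture; both function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>

// Byte count of one PVRTC mipmap level, following the table's formula:
// width * height * bitsPerPixel / 8, with a floor of 32 bytes.
inline int PvrtcLevelBytes(int width, int height, int bitsPerPixel)
{
    assert(width > 0 && height > 0);
    assert(bitsPerPixel == 2 || bitsPerPixel == 4);
    return std::max(32, width * height * bitsPerPixel / 8);
}

// Total bytes for a full mipmap chain of a square texture, from the
// original size down to 1x1.
inline int PvrtcChainBytes(int size, int bitsPerPixel)
{
    int total = 0;
    for (; size > 0; size >>= 1)
        total += PvrtcLevelBytes(size, size, bitsPerPixel);
    return total;
}
```

A 512×512 texture at 4 bits per pixel occupies 131,072 bytes at level zero, one-eighth of the 1,048,576 bytes needed for 8-bit RGBA. Note how the 32-byte minimum dominates small images: a 16×16 chain at 4 bits per pixel takes 128 + 32 + 32 + 32 + 32 = 256 bytes.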

Warning

Be aware of some important restrictions with PVRTC textures: the image must be square, and its width/height must be a power of two.
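These restrictions are cheap to check before you hand an image to the encoder. The helpers below are an illustrative sketch with names of my own; NextPowerOfTwo is included on the assumption that you might want to pad or resize a source image up to a legal size.

```cpp
#include <cassert>

// True when value is 1, 2, 4, 8, ... (exactly one bit set).
inline bool IsPowerOfTwo(int value)
{
    return value > 0 && (value & (value - 1)) == 0;
}

// PVRTC on the iPhone needs a square image with a power-of-two size.
inline bool IsValidPvrtcSize(int width, int height)
{
    return width == height && IsPowerOfTwo(width);
}

// Smallest power of two that is greater than or equal to value.
inline int NextPowerOfTwo(int value)
{
    assert(value > 0 && value <= (1 << 30));
    int result = 1;
    while (result < value)
        result <<= 1;
    return result;
}
```

A 16×16 image passes the check, while 16×8 (not square) and 12×12 (not a power of two) both fail; NextPowerOfTwo(12) suggests 16 as the nearest legal size.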

The iPhone SDK comes with a command-line program called texturetool that you can use to generate PVRTC data from an uncompressed image, and it’s located here:

/Developer/Platforms/iPhoneOS.platform/Developer/usr/bin

It’s possible Apple has modified the path since the time of this writing, so I recommend verifying the location of texturetool using the Spotlight feature in Mac OS X. By the way, there are actually several command-line tools at this location (including a rather cool one called pngcrush). They’re worth a closer look!

Here’s how you could use texturetool to convert Grid16.png into a compressed image called Grid16.pvr:

texturetool -m -e PVRTC -f PVR -p Preview.png -o Grid16.pvr Grid16.png

Some of the parameters are explained here.

- -m

- -e PVRTC

Use PVRTC compression. This can be tweaked with additional parameters, explained here.

- -f PVR

This may seem redundant, but it chooses the file format rather than the encoding. The PVR format includes a simple header before the image data that contains size and format information. I’ll explain how to parse the header later.

- -p PreviewFile

This is an optional PNG file that gets generated to allow you to preview the quality loss caused by compression.

- -o OutFile

The encoding argument can be tweaked with optional arguments. Here are some examples:

- -e PVRTC --bits-per-pixel-2

- -e PVRTC --bits-per-pixel-4

Specifies a 4-bits-per-pixel encoding. This is the default, so there’s not much reason to include it on the command line.

- -e PVRTC --channel-weighting-perceptual --bits-per-pixel-2

Use perceptual compression and a 2-bits-per-pixel format. Perceptual compression doesn’t change the format of the image data; rather, it tweaks the compression algorithm such that the green channel preserves more quality than the red and blue channels. Humans are more sensitive to variations in green.

- -e PVRTC --channel-weighting-linear

Apply compression equally to all color components. This defaults to “on,” so there’s no need to specify it explicitly.

Note

At the time of this writing, texturetool does not include an argument to control whether the resulting image has an alpha channel. It automatically determines this based on the source format.

Rather than executing texturetool from the command line, you can make it an automatic step in Xcode’s build process. Go ahead and perform the following steps:

Right-click the Targets group, and then choose Add→New Build Phase→New Run Script Build Phase.

There is lots of stuff in the next dialog:

Enter this directly into the script box:

BIN=${PLATFORM_DIR}/../iPhoneOS.platform/Developer/usr/bin
INFILE=${SRCROOT}/Textures/Grid16.png
OUTFILE=${SRCROOT}/Textures/Grid16.pvr
${BIN}/texturetool -m -f PVR -e PVRTC $INFILE -o $OUTFILE

$(SRCROOT)/Textures/Grid16.png

$(SRCROOT)/Textures/Grid16.pvr

These fields are important to set because they make Xcode smart about rebuilding; in other words, it should run the script only when the input file has been modified.

Close the dialog by clicking the X in the upper-left corner.

Open the Targets group and its child node. Drag the Run Script item so that it appears before the Copy Bundle Resources item. You can also rename it if you’d like; simply right-click it and choose Rename.

Build your project once to run the script. Verify that the resulting PVRTC file exists. Don’t try running yet.

Add Grid16.pvr to your project (right-click the Textures group, select Add→Existing Files and choose Grid16.pvr). Since it’s a build artifact, I don’t recommend checking it into your source code control system. Xcode gracefully handles missing files by highlighting them in red.

Make sure that Xcode doesn’t needlessly rerun the script when the source file hasn’t been modified. If it does, then there could be a typo in the script dialog. (Simply double-click the Run Script phase to reopen the script dialog.)

Before moving on to the implementation, we need to incorporate a couple of source files from Imagination Technologies’ PowerVR SDK.

Go to http://www.imgtec.com/powervr/insider/powervr-sdk.asp.

Click the link for “Khronos OpenGL ES 2.0 SDKs for PowerVR SGX family.”

In your Xcode project, create a new group called PowerVR. Right-click the new group, and choose Get Info. To the right of the “Path” label on the General tab, click Choose and create a New Folder called PowerVR. Click Choose and close the group info window.

After opening up the tarball, look for PVRTTexture.h and PVRTGlobal.h in the Tools folder. Drag these files to the PowerVR group, check the “Copy items” checkbox in the dialog that appears, and then click Add.

Enough Xcode shenanigans, let’s get back to writing real code. Before adding PVR support to the ResourceManager class, we need to make some enhancements to Interfaces.hpp. These changes are highlighted in bold in Example 5-17.

enum TextureFormat {
    TextureFormatGray,
    TextureFormatGrayAlpha,
    TextureFormatRgb,
    TextureFormatRgba,
    TextureFormatPvrtcRgb2,
    TextureFormatPvrtcRgba2,
    TextureFormatPvrtcRgb4,
    TextureFormatPvrtcRgba4,
};

struct TextureDescription {
    TextureFormat Format;
    int BitsPerComponent;
    ivec2 Size;
    int MipCount;
};

// ...

struct IResourceManager {
    virtual string GetResourcePath() const = 0;
    virtual TextureDescription LoadPvrImage(const string& filename) = 0;
    virtual TextureDescription LoadPngImage(const string& filename) = 0;
    virtual void* GetImageData() = 0;
    virtual ivec2 GetImageSize() = 0;
    virtual void UnloadImage() = 0;
    virtual ~IResourceManager() {}
};

Example 5-18 shows the implementation of LoadPvrImage (you’ll replace everything within the class definition except the GetResourcePath and LoadPngImage methods). It parses the header fields by simply casting the data pointer to a pointer-to-struct. The size of the struct isn’t necessarily the size of the header, so the GetImageData method looks at the dwHeaderSize field to determine where the raw data starts.

...
#import "../PowerVR/PVRTTexture.h"

class ResourceManager : public IResourceManager {
public:

    // ...

    TextureDescription LoadPvrImage(const string& file)
    {
        NSString* basePath = [NSString stringWithUTF8String:file.c_str()];
        NSString* resourcePath = [[NSBundle mainBundle] resourcePath];
        NSString* fullPath = [resourcePath stringByAppendingPathComponent:basePath];

        m_imageData = [NSData dataWithContentsOfFile:fullPath];
        m_hasPvrHeader = true;
        PVR_Texture_Header* header = (PVR_Texture_Header*) [m_imageData bytes];
        bool hasAlpha = header->dwAlphaBitMask ? true : false;

        TextureDescription description;
        switch (header->dwpfFlags & PVRTEX_PIXELTYPE) {
            case OGL_PVRTC2:
                description.Format = hasAlpha ? TextureFormatPvrtcRgba2 :
                                                TextureFormatPvrtcRgb2;
                break;
            case OGL_PVRTC4:
                description.Format = hasAlpha ? TextureFormatPvrtcRgba4 :
                                                TextureFormatPvrtcRgb4;
                break;
            default:
                assert(!"Unsupported PVR image.");
                break;
        }

        description.Size.x = header->dwWidth;
        description.Size.y = header->dwHeight;
        description.MipCount = header->dwMipMapCount;
        return description;
    }
    void* GetImageData()
    {
        if (!m_hasPvrHeader)
            return (void*) [m_imageData bytes];

        PVR_Texture_Header* header = (PVR_Texture_Header*) [m_imageData bytes];
        char* data = (char*) [m_imageData bytes];
        unsigned int headerSize = header->dwHeaderSize;
        return data + headerSize;
    }
    void UnloadImage()
    {
        m_imageData = 0;
    }
private:
    NSData* m_imageData;
    bool m_hasPvrHeader;
    ivec2 m_imageSize;
};

Note that we changed the type of m_imageData from CFDataRef to NSData*. Since we create the NSData object using autorelease semantics, there’s no need to call a release function in the UnloadImage() method.
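The header-skipping idea in GetImageData can be sketched in portable C++ without any Objective-C. The SketchHeader struct below is a deliberately simplified, hypothetical stand-in; the real PVR_Texture_Header in PVRTTexture.h has more fields, so treat this only as an illustration of reading a size field and offsetting by it. It also assumes the file was written in the host’s byte order.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical, simplified header for this sketch only.
struct SketchHeader {
    std::uint32_t headerSize; // bytes from the start of the file to the pixels
    std::uint32_t width;
    std::uint32_t height;
    std::uint32_t mipCount;
};

// Copy the header out of the file bytes instead of casting the pointer,
// which sidesteps alignment concerns.
inline SketchHeader ReadHeader(const std::vector<unsigned char>& file)
{
    assert(file.size() >= sizeof(SketchHeader));
    SketchHeader header;
    std::memcpy(&header, file.data(), sizeof(header));
    return header;
}

// The pixel data starts headerSize bytes in, which may be more than
// sizeof(SketchHeader) if the file's header carries extra fields.
inline const unsigned char* ImageData(const std::vector<unsigned char>& file)
{
    SketchHeader header = ReadHeader(file);
    assert(header.headerSize <= file.size());
    return file.data() + header.headerSize;
}
```

The key design point carries over from the example above: trust the size recorded in the file rather than sizeof of your struct, so the loader keeps working if the on-disk header is longer than the fields you know about.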

Note

CFDataRef and NSData are said to be “toll-free bridged,” meaning they are interchangeable in function calls. You can think of CFDataRef as being the vanilla C version and NSData as the Objective-C version. I prefer using NSData (in my Objective-C code) because it can work like a C++ smart pointer.

Because of this change, we’ll also need to make one change to LoadPngImage. Find this line:

m_imageData = CGDataProviderCopyData(CGImageGetDataProvider(cgImage));

and replace it with the following:

CFDataRef dataRef = CGDataProviderCopyData(CGImageGetDataProvider(cgImage));
m_imageData = [NSData dataWithData:(NSData*) dataRef];
CFRelease(dataRef); // dataWithData: made its own copy, so release the original

You should now be able to build and run, although your application is still using the PNG file.

Example 5-19 adds a new method to the rendering engine for creating a compressed texture object. This code will work under both ES 1.1 and ES 2.0.

private:
    void SetPvrTexture(const string& name) const;
// ...

void RenderingEngine::SetPvrTexture(const string& filename) const
{
    TextureDescription description =
        m_resourceManager->LoadPvrImage(filename);

    unsigned char* data =
        (unsigned char*) m_resourceManager->GetImageData();
    int width = description.Size.x;
    int height = description.Size.y;

    int bitsPerPixel;
    GLenum format;
    switch (description.Format) {
        case TextureFormatPvrtcRgba2:
            bitsPerPixel = 2;
            format = GL_COMPRESSED_RGBA_PVRTC_2BPPV1_IMG;
            break;
        case TextureFormatPvrtcRgb2:
            bitsPerPixel = 2;
            format = GL_COMPRESSED_RGB_PVRTC_2BPPV1_IMG;
            break;
        case TextureFormatPvrtcRgba4:
            bitsPerPixel = 4;
            format = GL_COMPRESSED_RGBA_PVRTC_4BPPV1_IMG;
            break;
        case TextureFormatPvrtcRgb4:
            bitsPerPixel = 4;
            format = GL_COMPRESSED_RGB_PVRTC_4BPPV1_IMG;
            break;
    }

    for (int level = 0; width > 0 && height > 0; ++level) {
        GLsizei size = std::max(32, width * height * bitsPerPixel / 8);
        glCompressedTexImage2D(GL_TEXTURE_2D, level, format, width,
                               height, 0, size, data);
        data += size;
        width >>= 1; height >>= 1;
    }

    m_resourceManager->UnloadImage();
}

Next, go into both renderers, and find the following:

SetPngTexture("Grid16.png");

And replace it with the following:

SetPvrTexture("Grid16.pvr");

Since the PVR file contains multiple mipmap levels, you’ll also need to remove any code you added for mipmap autogeneration (glGenerateMipmap under ES 2.0, glTexParameter with GL_GENERATE_MIPMAP under ES 1.1).

After rebuilding your project, your app will now be using the compressed texture.

Of particular interest in Example 5-19 is the section that loops over each mipmap level. Rather than calling glTexImage2D, it uses glCompressedTexImage2D to upload the data. Here’s its formal declaration:

void glCompressedTexImage2D(GLenum target, GLint level, GLenum format,
                            GLsizei width, GLsizei height, GLint border,
                            GLsizei byteCount, const GLvoid* data);

- target

Specifies which binding point to upload the texture to. For ES 1.1, this must be GL_TEXTURE_2D.

- level

- format

- width, height

- border

Must be zero. Texture borders are not supported in OpenGL ES.

- byteCount

The size of data being uploaded. Note that glTexImage2D doesn’t have a parameter like this; for noncompressed data, OpenGL computes the byte count based on the image’s dimensions and format.

- data

The PowerVR SDK and Low-Precision Textures

The low-precision uncompressed formats (565, 5551, and 4444) are often overlooked. Unlike block compression, they do not cause speckle artifacts in the image. While they work poorly with images that have smooth color gradients, they’re quite good at preserving detail in photographs and keeping clean lines in simple vector art.

At the time of this writing, the iPhone SDK does not contain any tools for encoding images to these formats, but the free PowerVR SDK from Imagination Technologies includes a tool called PVRTexTool just for this purpose. Download the SDK as directed in Texture Compression with PVRTC. Extract the tarball archive if you haven’t already.

After opening up the tarball, execute the application in Utilities/PVRTexTool/PVRTexToolGUI/MacOS.

Open your source image in the GUI, and select Edit→Encode. After you choose a format (try RGB 565), you can save the output image to a PVR file. Save it as Grid16-PVRTool.pvr, and add it to Xcode as described in Step 5. Next, go into both renderers, and find the following:

SetPvrTexture("Grid16.pvr");

And replace it with the following:

SetPvrTexture("Grid16-PVRTool.pvr");

You may have noticed that PVRTexTool has many of the same capabilities as the texturetool program presented in the previous section, and much more. It can encode images to a plethora of formats, generate mipmap levels, and even dump out C header files that contain raw image data. This tool also has a command-line variant to allow integration into a script or an Xcode build.

Note

We’ll use the command-line version of PVRTexTool in Chapter 7 for generating a C header file that contains the raw data to an 8-bit alpha texture.

Let’s go ahead and flesh out some of the image-loading code to support the uncompressed low-precision formats. New lines in ResourceManager.mm are shown in bold in Example 5-20.

TextureDescription LoadPvrImage(const string& file)
{
    // ...

    TextureDescription description;
    switch (header->dwpfFlags & PVRTEX_PIXELTYPE) {
        case OGL_RGB_565:
            description.Format = TextureFormat565;
            break;
        case OGL_RGBA_5551:
            description.Format = TextureFormat5551;
            break;
        case OGL_RGBA_4444:
            description.Format = TextureFormatRgba;
            description.BitsPerComponent = 4;
            break;
        case OGL_PVRTC2:
            description.Format = hasAlpha ? TextureFormatPvrtcRgba2 :
                                            TextureFormatPvrtcRgb2;
            break;
        case OGL_PVRTC4:
            description.Format = hasAlpha ? TextureFormatPvrtcRgba4 :
                                            TextureFormatPvrtcRgb4;
            break;
    }

    // ...
}

Next we need to add some new code to the SetPvrTexture method in the rendering engine class, shown in Example 5-21. This code works for both ES 1.1 and 2.0.

void RenderingEngine::SetPvrTexture(const string& filename) const
{
    // ...

    int bitsPerPixel;
    GLenum format;
    bool compressed = false;
    switch (description.Format) {
        case TextureFormatPvrtcRgba2:
        case TextureFormatPvrtcRgb2:
        case TextureFormatPvrtcRgba4:
        case TextureFormatPvrtcRgb4:
            compressed = true;
            break;
    }

    if (!compressed) {
        GLenum type;
        switch (description.Format) {
            case TextureFormatRgba:
                assert(description.BitsPerComponent == 4);
                format = GL_RGBA;
                type = GL_UNSIGNED_SHORT_4_4_4_4;
                bitsPerPixel = 16;
                break;
            case TextureFormat565:
                format = GL_RGB;
                type = GL_UNSIGNED_SHORT_5_6_5;
                bitsPerPixel = 16;
                break;
            case TextureFormat5551:
                format = GL_RGBA;
                type = GL_UNSIGNED_SHORT_5_5_5_1;
                bitsPerPixel = 16;
                break;
        }
        for (int level = 0; width > 0 && height > 0; ++level) {
            GLsizei size = width * height * bitsPerPixel / 8;
            glTexImage2D(GL_TEXTURE_2D, level, format, width,
                         height, 0, format, type, data);
            data += size;
            width >>= 1; height >>= 1;
        }
        m_resourceManager->UnloadImage();
        return;
    }
}

Next, we need to make a change to Interfaces.hpp:

enum TextureFormat {
    TextureFormatGray,
    TextureFormatGrayAlpha,
    TextureFormatRgb,
    TextureFormatRgba,
    TextureFormatPvrtcRgb2,
    TextureFormatPvrtcRgba2,
    TextureFormatPvrtcRgb4,
    TextureFormatPvrtcRgba4,
    TextureFormat565,
    TextureFormat5551,
};

Generating and Transforming OpenGL Textures with Quartz

You can use Quartz to draw 2D paths into an OpenGL texture, resize the source image, convert from one format to another, and even generate text. We’ll cover some of these techniques in Chapter 7; for now let’s go over a few simple ways to generate textures.

One way of loading textures into OpenGL is creating a Quartz surface using whatever format you’d like and then drawing the source image to it, as shown in Example 5-22.

TextureDescription LoadImage(const string& file)
{
    NSString* basePath = [NSString stringWithUTF8String:file.c_str()];
    NSString* resourcePath = [[NSBundle mainBundle] resourcePath];
    NSString* fullPath =
        [resourcePath stringByAppendingPathComponent:basePath];
    UIImage* uiImage = [UIImage imageWithContentsOfFile:fullPath];

    TextureDescription description;
    description.Size.x = CGImageGetWidth(uiImage.CGImage);
    description.Size.y = CGImageGetHeight(uiImage.CGImage);
    description.BitsPerComponent = 8;
    description.Format = TextureFormatRgba;
    description.MipCount = 1;
    m_hasPvrHeader = false;

    int bpp = description.BitsPerComponent / 2;
    int byteCount = description.Size.x * description.Size.y * bpp;
    unsigned char* data = (unsigned char*) calloc(byteCount, 1);

    CGColorSpaceRef colorSpace = CGColorSpaceCreateDeviceRGB();
    CGBitmapInfo bitmapInfo =
        kCGImageAlphaPremultipliedLast | kCGBitmapByteOrder32Big;
    CGContextRef context = CGBitmapContextCreate(data,
        description.Size.x,
        description.Size.y,
        description.BitsPerComponent,
        bpp * description.Size.x,
        colorSpace,
        bitmapInfo);
    CGColorSpaceRelease(colorSpace);
    CGRect rect = CGRectMake(0, 0, description.Size.x, description.Size.y);
    CGContextDrawImage(context, rect, uiImage.CGImage);
    CGContextRelease(context);

    m_imageData = [NSData dataWithBytesNoCopy:data
                                       length:byteCount
                                 freeWhenDone:YES];
    return description;
}

As before, use the imageWithContentsOfFile class method to create and allocate a UIImage object that wraps a CGImage object.

Since there are four components per pixel (RGBA), the number of bytes per pixel is half the number of bits per component.

Allocate memory for the image surface, and clear it to zeros.

Create a Quartz context with the memory that was just allocated.

Use Quartz to copy the source image onto the destination surface.

Create an NSData object that wraps the memory that was allocated.
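The buffer arithmetic behind the second and third callouts can be spelled out on its own. The following is a minimal sketch, not code from the sample app; the SurfaceLayout structure and the ComputeRgbaLayout function are hypothetical names introduced only for illustration.

```cpp
// Hypothetical helper type: sizes needed to allocate an RGBA surface.
struct SurfaceLayout {
    int BytesPerPixel; // bytes used by one RGBA pixel
    int BytesPerRow;   // bytes in one tightly packed row
    int ByteCount;     // bytes in the whole surface
};

// Computes the layout of a tightly packed RGBA surface.
// With four components per pixel, bytes per pixel is
// 4 * bitsPerComponent / 8, which is bitsPerComponent / 2.
SurfaceLayout ComputeRgbaLayout(int width, int height, int bitsPerComponent)
{
    const int componentsPerPixel = 4;
    SurfaceLayout layout;
    layout.BytesPerPixel = componentsPerPixel * bitsPerComponent / 8;
    layout.BytesPerRow = layout.BytesPerPixel * width;
    layout.ByteCount = layout.BytesPerRow * height;
    return layout;
}
```

For the 16×16 grid cell with 8 bits per component, this works out to 4 bytes per pixel, 64 bytes per row, and 1,024 bytes in total. The BytesPerRow value corresponds to the bpp * description.Size.x argument passed to CGBitmapContextCreate.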

If you want to try the Quartz-loading code in the sample app, perform the following steps:

Add Example 5-22 to ResourceManager.mm.

Add the following method declaration to IResourceManager in Interfaces.hpp:

virtual TextureDescription LoadImage(const string& file) = 0;

In the SetPngTexture method in RenderingEngine.TexturedES2.cpp, change the LoadPngImage call to LoadImage.

In your render engine’s Initialize method, make sure your minification filter is GL_LINEAR and that you’re calling SetPngTexture.

One advantage of loading images with Quartz is that you can have it do some transformations before uploading the image to OpenGL. For example, say you want to flip the image vertically. You could do so by simply adding the following two lines immediately before the line that calls CGContextDrawImage:

CGContextTranslateCTM(context, 0, description.Size.y);
CGContextScaleCTM(context, 1, -1);
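If the pixels are already sitting in a buffer, the same visual result can be had by reversing the row order on the CPU. The sketch below illustrates that idea only; it is not how Quartz applies the transform, and FlipVertically is a hypothetical helper that assumes tightly packed rows with no padding.

```cpp
#include <algorithm>
#include <vector>

// Reverses the row order of a tightly packed image in place.
// Assumes pixels.size() >= width * height * bytesPerPixel.
void FlipVertically(std::vector<unsigned char>& pixels,
                    int width, int height, int bytesPerPixel)
{
    const int stride = width * bytesPerPixel; // bytes per row
    // Swap the topmost and bottommost rows, then work inward.
    for (int top = 0, bottom = height - 1; top < bottom; ++top, --bottom) {
        std::swap_ranges(pixels.begin() + top * stride,
                         pixels.begin() + (top + 1) * stride,
                         pixels.begin() + bottom * stride);
    }
}
```

Letting Quartz do the flip while it draws avoids this extra pass over the data, which is why the two-line version is usually preferable when you are already drawing to a Quartz surface.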

Another neat thing you can do with Quartz is generate new images from scratch in real time. This can shrink your application, making it faster to download. This is particularly important if you’re trying to trim down to less than 10MB, the maximum size that Apple allows for downloading over the 3G network. Of course, you can do this only for textures that contain simple vector-based images, as opposed to truly artistic content.

For example, you could use Quartz to generate a 256×256 texture that contains a blue filled-in circle, as in Example 5-23. The code for creating the surface should look familiar; the lines of interest are the three calls near the end that define the rectangle, set the fill color, and fill the ellipse.

TextureDescription GenerateCircle()
{
    TextureDescription description;
    description.Size = ivec2(256, 256);
    description.BitsPerComponent = 8;
    description.Format = TextureFormatRgba;

    int bpp = description.BitsPerComponent / 2;
    int byteCount = description.Size.x * description.Size.y * bpp;
    unsigned char* data = (unsigned char*) calloc(byteCount, 1);

    CGColorSpaceRef colorSpace = CGColorSpaceCreateDeviceRGB();
    CGBitmapInfo bitmapInfo =
        kCGImageAlphaPremultipliedLast | kCGBitmapByteOrder32Big;
    CGContextRef context = CGBitmapContextCreate(data,
        description.Size.x,
        description.Size.y,
        description.BitsPerComponent,
        bpp * description.Size.x,
        colorSpace,
        bitmapInfo);
    CGColorSpaceRelease(colorSpace);

    CGRect rect = CGRectMake(5, 5, 246, 246);
    CGContextSetRGBFillColor(context, 0, 0, 1, 1);
    CGContextFillEllipseInRect(context, rect);

    CGContextRelease(context);

    m_imageData = [NSData dataWithBytesNoCopy:data
                                       length:byteCount
                                 freeWhenDone:YES];
    return description;
}

If you want to try the circle-generation code in the sample app, perform the following steps:

Add Example 5-23 to ResourceManager.mm.

Add the following method declaration to IResourceManager in Interfaces.hpp:

virtual TextureDescription GenerateCircle() = 0;

In the SetPngTexture method in RenderingEngine.TexturedES2.cpp, change the LoadImage call to GenerateCircle.

In your render engine’s Initialize method, make sure your minification filter is GL_LINEAR and that you’re calling SetPngTexture.

Quartz is a rich 2D graphics API and could have a book all to itself, so I can’t cover it here; check out Apple’s online documentation for more information.

Dealing with Size Constraints

Some of the biggest gotchas in texturing are the various constraints imposed on their size. Strictly speaking, OpenGL ES 1.1 stipulates that all textures must have dimensions that are powers of two, and OpenGL ES 2.0 has no such restriction. In the graphics community, textures that have a power-of-two width and height are commonly known as POT textures; non-power-of-two textures are NPOT.
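Testing whether a dimension is a power of two takes a single bit trick. This is a small sketch for illustration; IsPowerOfTwo and IsPotTexture are hypothetical helper names, not part of OpenGL or the sample app.

```cpp
// Returns true when n is a positive power of two (1, 2, 4, 8, ...).
// A power of two has exactly one bit set, so clearing the lowest
// set bit with n & (n - 1) must leave zero.
bool IsPowerOfTwo(int n)
{
    return n > 0 && (n & (n - 1)) == 0;
}

// A texture counts as POT only if both dimensions are powers of two.
// The dimensions need not be equal, so 512x256 qualifies.
bool IsPotTexture(int width, int height)
{
    return IsPowerOfTwo(width) && IsPowerOfTwo(height);
}
```

By this test a 512×256 texture is POT, while a screen-sized 320×480 texture is NPOT.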

For better or worse, the iPhone platform diverges from the OpenGL core specifications here. The POT constraint in ES 1.1 doesn’t always apply, nor does the NPOT feature in ES 2.0.

Newer iPhone models support an extension to ES 1.1 that opens up the POT restriction, but only under a certain set of conditions. It’s called GL_APPLE_texture_2D_limited_npot, and it basically states the following:

Nonmipmapped 2D textures that use GL_CLAMP_TO_EDGE wrapping for the S and T coordinates need not have power-of-two dimensions.

As hairy as this seems, it covers quite a few situations, including the common case of displaying a background texture with the same dimensions as the screen (320×480). Since it requires no minification, it doesn’t need mipmapping, so you can create a texture object that fits “just right.”

Not all iPhones support the aforementioned extension to ES 1.1; the only surefire way to find out is by programmatically checking for the extension string, which can be done like this:

const char* extensions = (char*) glGetString(GL_EXTENSIONS);
bool npot = strstr(extensions, "GL_APPLE_texture_2D_limited_npot") != 0;
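A plain strstr test matches any substring, so it would also report success if some longer extension name happened to begin with the one you are looking for. A slightly more defensive variant checks that the match is a whole space-separated token. The sketch below assumes the usual space-delimited layout of the extension string; HasExtension is a hypothetical helper, and it takes the string as a parameter so it can be exercised without a GL context.

```cpp
#include <cstring>

// Returns true if `name` appears in the space-separated `extensions`
// list as a complete token rather than as part of a longer name.
bool HasExtension(const char* extensions, const char* name)
{
    if (!extensions || !name || !*name)
        return false;

    const std::size_t length = std::strlen(name);
    const char* cursor = extensions;
    while ((cursor = std::strstr(cursor, name)) != 0) {
        // The match must begin at the start of the list or after a space...
        const bool startsToken = (cursor == extensions) || (cursor[-1] == ' ');
        // ...and must be followed by a space or the end of the list.
        const char after = cursor[length];
        const bool endsToken = (after == ' ') || (after == '\0');
        if (startsToken && endsToken)
            return true;
        cursor += length; // keep searching past this partial match
    }
    return false;
}
```

On a device you would pass it the result of glGetString(GL_EXTENSIONS), cast to const char*, along with "GL_APPLE_texture_2D_limited_npot". The null check also guards against glGetString returning zero when no context is current.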

If your 320×480 texture needs to be mipmapped (or if you’re supporting older iPhones), then you can simply use a 512×512 texture and adjust your texture coordinates to address a 320×480 subregion. One quick way of doing this is with a texture matrix:

glMatrixMode(GL_TEXTURE);
glLoadIdentity();
glScalef(320.0f / 512.0f, 480.0f / 512.0f, 1.0f);

Unfortunately, the portions of the image that lie outside the 320×480 subregion are wasted. If this causes you to grimace, keep in mind that you can add “mini-textures” to those unused regions. Doing so makes the texture into a texture atlas, which we’ll discuss further in Chapter 7.
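The 512×512 size and the two scale factors need not be hardcoded; they can be derived from the image dimensions. Here is a minimal sketch of that calculation. The PaddedTexture structure and both functions are hypothetical names, and the code assumes dimensions small enough that doubling never overflows an int.

```cpp
// Smallest power of two that is >= n, for n >= 1.
int NextPowerOfTwo(int n)
{
    int pot = 1;
    while (pot < n)
        pot *= 2;
    return pot;
}

// Hypothetical result type: the POT texture to allocate, plus the
// factors that map [0, 1] texture coordinates onto the image subregion.
struct PaddedTexture {
    int Width;
    int Height;
    float ScaleS;
    float ScaleT;
};

// Rounds each dimension up to a power of two and computes the
// texture-matrix scale for addressing only the original image area.
PaddedTexture PadToPot(int imageWidth, int imageHeight)
{
    PaddedTexture result;
    result.Width = NextPowerOfTwo(imageWidth);
    result.Height = NextPowerOfTwo(imageHeight);
    result.ScaleS = float(imageWidth) / float(result.Width);
    result.ScaleT = float(imageHeight) / float(result.Height);
    return result;
}
```

For a 320×480 image this yields a 512×512 texture with scale factors of 0.625 and 0.9375, the same values passed to glScalef in the texture-matrix snippet.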

If you don’t want to use a 512×512 texture, then it’s possible to create five POT textures and carefully puzzle them together to fit the screen, as shown in Figure 5-7. This is a hassle, though, and I don’t recommend it unless you have a strong penchant for masochism.

By the way, according to the official OpenGL ES 2.0 specification, NPOT textures are actually allowed in any situation! Apple has made a minor transgression here by imposing the aforementioned limitations.

Keep in mind that even when the POT restriction applies, your texture can still be non-square (for example, 512×256), unless it uses a compressed format.

Think these are a lot of rules to juggle? Well, it’s not over yet! Textures also have a maximum allowable size. At the time of this writing, the first two iPhone generations have a maximum size of 1024×1024, and third-generation devices have a maximum size of 2048×2048. Again, the only way to be sure is to query the device’s capabilities at runtime, like so:

GLint maxSize;
glGetIntegerv(GL_MAX_TEXTURE_SIZE, &maxSize);

Don’t groan, but there’s yet another gotcha I want to mention regarding texture dimensions. By default, OpenGL expects each row of uncompressed texture data to be aligned on a 4-byte boundary. This isn’t a concern if your texture is GL_RGBA with GL_UNSIGNED_BYTE; in this case, the data is always properly aligned. However, if your format has a texel size less than 4 bytes, you should take care to ensure each row is padded out to the proper alignment. Alternatively, you can turn off OpenGL’s alignment restriction like this:

glPixelStorei(GL_UNPACK_ALIGNMENT, 1);
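To see how much padding the default alignment implies, you can round the row size up to the next multiple of the alignment. The following sketch assumes byte-sized components or packed texels whose size is given in whole bytes; AlignedRowBytes is a hypothetical helper, not an OpenGL call.

```cpp
// Number of bytes OpenGL expects per row for a given unpack alignment.
// `alignment` is the GL_UNPACK_ALIGNMENT value (1, 2, 4, or 8).
int AlignedRowBytes(int width, int bytesPerTexel, int alignment)
{
    const int unpadded = width * bytesPerTexel;
    // Round up to the next multiple of `alignment`.
    return (unpadded + alignment - 1) / alignment * alignment;
}
```

A row of five 3-byte RGB texels occupies 15 bytes, so with the default alignment of 4 each row must be padded to 16 bytes. With an alignment of 1, no padding is expected, and a 4-byte RGBA texel never needs any.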

Also be aware that the PNG decoder in Quartz may or may not internally align the image data; this can be a concern if you load images using the CGDataProviderCopyData method presented in Example 5-15. It’s more robust (but less performant) to load in images by drawing to a Quartz surface, as demonstrated in Example 5-22.

Before moving on, I’ll forewarn you of yet another thing to watch out for: the iPhone Simulator doesn’t necessarily impose the same restrictions on texture size that a physical device would. Many developers throw up their hands and simply stick to power-of-two dimensions only; I’ll show you how to make this easier in the next section.

Scaling to POT

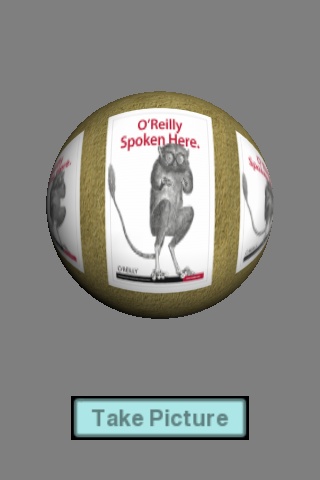

One way to ensure that your textures are power-of-two is to scale them using Quartz. Normally I’d recommend storing the images in the desired size rather than scaling them at runtime, but there are reasons why you might want to scale at runtime. For example, you might be creating a texture that was generated from the iPhone camera (which we’ll demonstrate in the next section).

For the sake of example, let’s walk through the process of adding a scale-to-POT feature to your ResourceManager class. First add a new field to the TextureDescription structure called OriginalSize, as shown in bold in Example 5-24.

We’ll use this to store the image’s original size; this is useful, for example, to retrieve the original aspect ratio. Now let’s go ahead and create the new ResourceManager::LoadImagePot() method, as shown in Example 5-25.