Chapter 1. What Are SANs and NAS?

Throughout the history of computing, people have wanted to share computing resources. The Burroughs Corporation had this in mind in 1961 when they developed multiprogramming and virtual memory. Shugart Associates felt that people would be interested in a way to easily use and share disk devices. That’s why they defined the Shugart Associates System Interface (SASI) in 1979. This, of course, was the predecessor to SCSI, the Small Computer System Interface. In the early 1980s, a team of engineers at Sun Microsystems felt that people needed a better way to share files, so they developed NFS. Sun released it to the public in 1984, and it became the Unix community’s prevalent method of sharing filesystems. Also in 1984, Sytek developed NetBIOS for IBM; NetBIOS would become the foundation for the SMB protocol that would ultimately become CIFS, the predominant method of sharing files in a Windows environment.

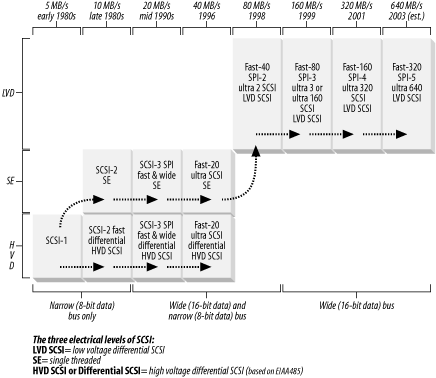

Neither storage area networks (SANs) nor network attached storage (NAS) are new concepts. SANs are simply the next evolution of SCSI, and NAS is the next evolution of NFS and CIFS. Perhaps a brief history lesson will illustrate this (see Figure 1-1).

From SCSI to SANs

As mentioned earlier, SCSI has its origins in SASI—the Shugart Associates System Interface, defined by Shugart Associates in 1979. In 1981, Shugart and NCR joined forces to better document SASI and to add features from another interface developed by NCR. In 1982, the ANSI task group X3T9.3 drafted a formal proposal for the Small Computer System Interface (SCSI), which was to be based on SASI. After work by many companies and many people, SCSI became a formal ANSI standard in 1986. Shortly thereafter, work began on SCSI-2, which incorporated the Common Command Set into SCSI, as well as other enhancements. It was approved in July 1990.[1]

Although SCSI-2 became the de facto interface between storage devices and small to midrange computing devices, not everyone felt that traditional SCSI was a good idea. This was due to the physical and electrical characteristics of copper-based parallel SCSI cables. (SCSI systems based on such cables are now referred to as parallel SCSI, because the SCSI signals are carried across dozens of pairs of conductors in parallel.) Although SCSI has come a long way since 1990, the following limitations still apply to parallel SCSI:

- Parallel SCSI is limited to 16 devices on a bus.

- It’s possible, but not usually practical, to connect two computing devices to the same storage device with parallel SCSI.

- Due to cross-talk between the individual conductors in a multiconductor parallel SCSI cable, as well as electrical interference from external sources, parallel SCSI has cable-length limitations. Although this limitation has been somewhat overcome by SCSI-to-fiber-to-SCSI conversion boxes, these boxes aren’t supported by many software and hardware vendors. It’s also important to note that each device added to a SCSI chain shortens its total possible length.

Some felt that in order to solve these problems, we needed to change the physical layer. The most obvious answer at the time was fiber optics. Unlike parallel SCSI, fiber cables can go hundreds of meters without significantly changing their transmission characteristics, solving all the problems related to the electrical characteristics of parallel SCSI. It even solved the problem of the number of connections, since each device in the loop had its own transmitting laser. Therefore, additional devices actually increase the total bus length, rather than shorten it.

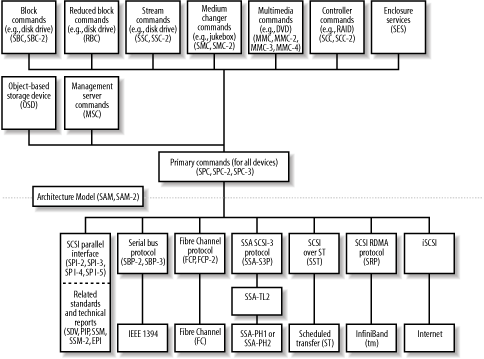

The problem was, how do you take a protocol that was designed to be carried on many conductors in parallel and have it transmitted over only one conductor? The first thing that needed to be done was to separate the SCSI specification into multiple levels, a lesson learned from network protocol development. Each level could behave any way it wanted, as long as it performed the task assigned to it and spoke to the levels above it and below it according to the appropriate command set. This was the beginning of the SCSI-3 specification. This separation of the various levels is why the SCSI-2 specification is contained in one document, and the SCSI-3 specification spans more than 20 documents. (Each block in Figure 1-2 represents a separate document of the SCSI-3 specification.)

Once this was done, it became possible to separate the physical layer of SCSI from the higher levels of SCSI. Once the physical layer was given this freedom, limitations caused by the physical layer could be addressed. You can see this separation in the SCSI-3 Architecture Roadmap in Figure 1-2, which was graciously provided by the T10 committee, the group responsible for defining the SCSI-3 architecture. It shows five alternatives to SPI (the SCSI Parallel Interface), including serial SCSI, Fibre Channel, SSA, SCSI over ST, and SCSI over VI. A relatively recent addition to this list that has been gaining acceptance is the iSCSI protocol. iSCSI uses IP as the transport layer to carry serial SCSI traffic.

Tip

As of this writing, iSCSI is gaining ground and market share but is still very new. Once it’s in full swing, I’ll prepare a second edition to this book that includes iSCSI coverage. More information about iSCSI is available in Section A.4.

The most popular alternative to SPI is the Fibre Channel Protocol. Fibre Channel, in contrast to SPI, is a serial protocol that uses only one path to transmit signals and another to receive signals. Fibre Channel offers a number of advantages over parallel SCSI:

- Distance

The distance between devices is no longer important. You can place individual devices up to 10 kilometers apart using single-mode fiber and theoretically go unlimited distances with bridging technologies such as ATM. (It’s impractical at this time to use this for online storage due to the latencies involved in the long distance between the host and storage. However, most people using ATM bridging use it to make remote copies, not for live data access.)

- Speed

Although the bus speed of a single gigabit Fibre Channel connection is now slower than the fastest parallel SCSI implementations, you can trunk multiple Fibre Channel connections together for more bandwidth. (Also, Fibre Channel is much faster than the majority of installed SCSI today, which is usually 20 or 40 MB/s. Additionally, 2-Gb Fibre Channel is now available.)

- Millions of devices connected to one computer

You can connect one million times more devices to a serial SCSI card (i.e., a Fibre Channel host bus adapter [HBA]) than you can to a parallel SCSI card. Parallel SCSI can accept 16 devices, and a Fibre Channel fabric can accept up to 16 million.

- Millions of computers connected to one device

You can easily connect a single storage device to 16 million computers. This allows computers to share resources, such as disk or tape. The only problem is teaching them how to share!

Tip

Most current implementations place limitations on the number of devices that can be connected to a single fabric. It’s unclear at this time how close to 16 million devices we will ever get.
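The 16-million figure is address-space arithmetic rather than a tested configuration. As a rough sketch, assuming the 24-bit port address that Fibre Channel fabrics use (the constant names here are illustrative and not part of any vendor API), the ratio to a 16-device parallel SCSI bus works out to about one million:

```python
# Sketch: compare the theoretical address space of a Fibre Channel fabric
# with a parallel SCSI bus. Assumes a 24-bit fabric port address; real
# fabrics reserve some addresses, and vendors impose far lower limits.
FC_ADDRESS_BITS = 24          # assumption: 24-bit fabric port address
PARALLEL_SCSI_DEVICES = 16    # devices per parallel SCSI bus, per the text

fc_addresses = 2 ** FC_ADDRESS_BITS
ratio = fc_addresses // PARALLEL_SCSI_DEVICES

print(f"Fibre Channel fabric addresses: {fc_addresses:,}")
print(f"Parallel SCSI devices per bus:  {PARALLEL_SCSI_DEVICES}")
print(f"Ratio: about {ratio:,} to 1")
```

The result is a ceiling on addressing only; as the tip above says, current implementations stop well short of it.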

I should probably mention that Fibre Channel doesn’t necessarily mean fiber optic cable. Fibre Channel can also run on special copper cabling. I cover this in more detail in Section 2.1.2.

What Is a SAN?

At this point, my definition of a SAN is as follows:

A SAN is two or more devices communicating via a serial SCSI protocol, such as Fibre Channel or iSCSI.

This definition means that a SAN isn’t a lot of things. By this definition, a LAN that carries nothing but storage traffic isn’t a SAN. What differentiates a SAN from a LAN (or from NAS) is the protocol that is used. If a LAN carries storage traffic using the iSCSI protocol, then I’d consider it a SAN. But simply sending traditional, LAN-based backups across a dedicated LAN doesn’t make that LAN a SAN. Although some people refer to such a network as a storage area network, I do not, and I find doing so very confusing. Such a network is nothing other than a LAN dedicated to a special purpose. I usually refer to this sort of LAN as a “storage LAN” or a “backup network.” A storage LAN is a useful tool that removes storage traffic from the production LAN. A SAN is a network that uses a serial SCSI protocol (e.g., Fibre Channel or iSCSI) to transfer data.

A SAN isn’t network attached storage (NAS). As mentioned previously, SANs use the SCSI protocol, and NAS uses the NFS and SMB/CIFS protocols. (There will be a more detailed comparison between SANs and NAS at the conclusion of this chapter.) The Direct Access File System, or DAFS, pledges to bring SANs and NAS closer together by supporting file sharing via an NFS-like protocol that will also support Fibre Channel as a transport. (DAFS is covered in Appendix A.)
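The protocol-based distinction drawn in this section can be expressed as a simple lookup. The following is only a sketch of the definitions used in this chapter; the function name and the protocol sets are hypothetical, chosen for illustration, and anything that is neither a serial SCSI protocol nor a file-sharing protocol is treated as ordinary traffic on a storage LAN:

```python
# Sketch of this chapter's definitions as a lookup table.
# The sets and the function name are illustrative assumptions.
SERIAL_SCSI_PROTOCOLS = {"fibre channel", "iscsi"}
FILE_SHARING_PROTOCOLS = {"nfs", "smb", "cifs"}

def classify_storage_network(protocol: str) -> str:
    """Classify a network by the protocol that carries its storage traffic."""
    name = protocol.strip().lower()
    if name in SERIAL_SCSI_PROTOCOLS:
        return "SAN"            # devices are shared via serial SCSI
    if name in FILE_SHARING_PROTOCOLS:
        return "NAS"            # filesystems are shared via NFS or SMB/CIFS
    return "storage LAN"        # e.g., LAN-based backups on a dedicated LAN

for proto in ("Fibre Channel", "iSCSI", "NFS", "CIFS", "LAN-based backup"):
    print(f"{proto:18s} -> {classify_storage_network(proto)}")
```

Note that the physical network plays no part in the decision: iSCSI over an ordinary LAN still counts as a SAN under this definition.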

In summary, a SAN is two or more devices communicating via a serial SCSI protocol (e.g., Fibre Channel or iSCSI), and they offer a number of advantages over traditional, parallel SCSI:

- Fibre Channel (and iSCSI) can be trunked, where several connections are seen as one, allowing them to communicate much faster than parallel SCSI. Even a single Fibre Channel connection now runs at 2 Gb/s in each direction, for a total aggregate of 4 Gb/s.

- You can put up to 16 million devices in a single Fibre Channel SAN (in theory).

- You can easily access any device connected to a SAN from any computer also connected to the SAN.

Now that we have covered the evolution of SASI into SCSI, and eventually into SCSI-3 over Fibre Channel and iSCSI, we’ll discuss the area where SANs have seen the most use—and the most success. SANs have significantly changed the way backup and recovery can be done. I will show that storage evolved right along with SCSI, and backup methods that used to work don’t work anymore. This should provide some perspective about why SANs have become so popular.

Backup and Recovery: Before SANs

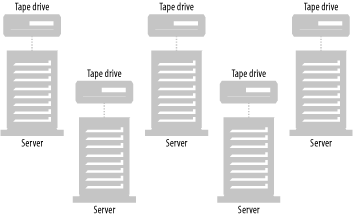

A long time ago in a data center far away, there were servers that were small enough to fit on a tape. This type of data center led to a backup system design like the one in Figure 1-3. Many or most systems came with their own tape drive, and that tape drive was big enough to back up that system—possibly big enough to back up other systems. All that was needed to perform a fully automated backup was to write a few shell scripts and swap out a few tapes in the morning.

For several reasons, bandwidth was not a problem in those days. The first reason was there just wasn’t that much data to back up. Even if the environment consisted of a single 10-Mb hub that was chock full of collisions, there just wasn’t that much data to send across the wire. The second reason that bandwidth wasn’t a problem was that many of the systems could afford to have their own tape drives, so there wasn’t a need to send any data across the LAN.

Gradually, many companies or individuals began to outgrow these systems. Either they got tired of swapping that many tapes, or they had systems that wouldn’t fit on a tape any more. The industry needed to come up with something better.

Things Got Better; Then They Got Worse

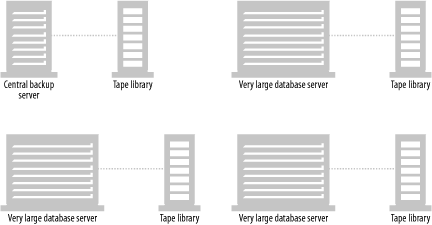

A few early innovators came up with the concept of a centralized backup server. Combining this with a tape stacker made life manageable again. Now all you had to do was spend $5,000 to $10,000 on backup software and $5,000 to $10,000 on hardware, and your problems were solved. Every one of your systems would be backed up across the network to the central backup server, and all you needed to do was install the appropriate piece of software on each backup “client.” These software packages even ported their client software to many different platforms, which meant that all the systems shown in Figure 1-4 could be backed up to the backup server, regardless of what operating system they were running.

Then a different problem appeared. People began to assume that all you had to do was buy a piece of client software, and all your backup problems would be taken care of. As the systems grew larger and the number of systems on a given network increased, it became more and more difficult to back up all the systems across the network in one night. Of course, upgrading from shared networks to switched networks and private VLANs helped a lot, as did Fast Ethernet (100 Mb), followed by Etherchannel and similar technologies (400 Mb), and Gigabit Ethernet. But some had systems that were too large to back up across the network, especially when they started installing very large database servers that contained 100 GB to 1 TB of records and files.
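To see why large servers outgrew network backups, it helps to run the numbers. The following sketch estimates best-case transfer times for the database sizes and link speeds mentioned above. It assumes decimal units and a link running at its full rated speed with no protocol overhead or competing clients, which no real network achieves; the function name is illustrative.

```python
# Sketch: best-case hours needed to move a full backup across a network link.
# Assumes the full rated link speed and no overhead, so real backups are slower.

def backup_hours(data_gb: float, link_mbps: float) -> float:
    """Return best-case hours to send data_gb gigabytes over link_mbps megabits/s."""
    data_megabits = data_gb * 1000 * 8      # decimal units: 1 GB = 1000 MB
    seconds = data_megabits / link_mbps
    return seconds / 3600

links = {
    "Fast Ethernet (100 Mb)": 100,
    "Etherchannel (400 Mb)": 400,
    "Gigabit Ethernet (1000 Mb)": 1000,
}

for name, mbps in links.items():
    for size_gb in (100, 1000):
        hours = backup_hours(size_gb, mbps)
        print(f"{name:28s} {size_gb:5d} GB: {hours:6.1f} hours")
```

Under these assumptions, a 1-TB server needs roughly 22 hours on Fast Ethernet, which already exceeds a single night before any overhead is considered.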

A few backup software companies tried to solve this problem by introducing the media server. In Figure 1-5, the central backup server still controlled all the backups, and still backed up many clients via the 100-Mb or 1000-Mb network. However, backup software that supported media servers could attach a tape library to each of the large database servers, allowing these servers to back up to their own locally attached tape drives, instead of sending their data across the network.

Media servers solved the immediate bandwidth problem but introduced significant costs and inefficiencies. Each server needed a tape library big enough to handle a full backup. Such a library can cost from $50,000 to more than $500,000, depending on the size of the database server. This is also inefficient because many servers of this size don’t need to do a full backup every night. If the database software can perform incremental backups, you may need to perform a full backup only once a week or even once a month, which means that for the rest of the month, most tape drives in this library go unused. Even products that don’t perform a traditional full backup have this problem. These products create a virtual full backup every so often by reading the appropriate files from scores of incremental backups and writing these files to one set of tapes. This method of creating a full backup also needs quite a few tape drives on an occasional basis.

Another thing to consider is that the size of the library (specifically, the number of drives that it contains) is often driven by the restore requirements, not the backup requirements. For example, one company had a 600-GB database they needed backed up. Although they did everything in their power to ensure that a tape restore would never be necessary, they knew they might need one in a true disaster. Their restore requirement was three hours, and it didn’t change if the restore required reading from tape; the restore still had to be done in less than three hours. Based on that, they bought a 10-drive library that cost $150,000. Of course, if they could restore the database in three hours, they could back it up in three hours. This meant that this $150,000 library was going unused approximately 21 hours per day.
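The arithmetic behind this example is worth spelling out. The sketch below assumes decimal units (1 GB = 1000 MB) and that the load is spread evenly across all ten drives; neither detail is stated in the example, so treat the per-drive figure as an approximation.

```python
# Sketch: the arithmetic behind the 600-GB, three-hour restore example.
# Assumes decimal units and an even split of the load across all drives.
DATABASE_GB = 600
RESTORE_HOURS = 3
DRIVES = 10
LIBRARY_COST = 150_000

aggregate_mb_s = DATABASE_GB * 1000 / (RESTORE_HOURS * 3600)
per_drive_mb_s = aggregate_mb_s / DRIVES
idle_hours = 24 - RESTORE_HOURS
idle_fraction = idle_hours / 24

print(f"Required aggregate throughput: {aggregate_mb_s:.1f} MB/s")
print(f"Required throughput per drive: {per_drive_mb_s:.1f} MB/s")
print(f"Library idle: {idle_hours} hours per day ({idle_fraction:.1%})")
print(f"Cost per drive: ${LIBRARY_COST / DRIVES:,.0f}")
```

The drive count comes from the restore window, while the idle time comes from how rarely that window is actually exercised.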

Enter the SAN

Some backup software vendors attempted to solve the cost problem by allowing a single library to connect to multiple hosts. If you purchased a large library with multiple SCSI connections, you could connect each one to a different host. This allowed you to share the tape library but not the drives. While this ability helped reduce the cost by sharing the robotics, it didn’t completely remove the inefficiencies discussed earlier.

What was really needed was a way to share the drives. And as long as the tape drives were shared, disk drives could be shared too. What if:

- A large database server could back up to a locally attached tape drive, but that tape drive could also be seen and used by another large server when it needed to back up to a locally attached tape drive?

- The large database server’s disks could be seen by another server that backed up its disks without sending the data through the CPU of the server that’s using the database?

- The disks and tape drives were connected in such a way that allowed the data to be sent directly from disk to tape without going through any server’s CPU?

Fibre Channel and SANs have made all of these “what ifs” possible, including many others that will be discussed in later chapters. SANs are making backups more manageable than ever—regardless of the size of the servers being backed up. In many cases, SANs are making things possible that weren’t conceivable with conventional parallel SCSI or LAN-based backups.

From NFS and SMB to NAS

NAS has roots in two main areas: the Server Message Block (SMB) and the Network File System (NFS) protocols. Interestingly enough, these two file-sharing protocols were developed by two of the biggest rivals in the computer industry: Microsoft and Sun Microsystems, and they both appeared in 1984! (IBM and Microsoft developed SMB, and Sun developed NFS.)

SMB/CIFS

When you use your Windows browser to go to “Network Neighborhood” and can read from and write to drives that are actually on other computers, you are probably using the SMB protocol.[2] The first mention of the SMB protocol was in an IBM technical reference in 1984, and it was originally designed to be a network naming and browsing protocol. Shortly thereafter, Microsoft adapted it to become a file sharing protocol. Several versions of the SMB protocol have been released throughout the years, and it has become the common file sharing protocol for all Microsoft Windows operating systems (Windows 3.1, 95, 98, Me, NT, 2000, and XP) and IBM OS/2 systems. Microsoft recently renamed the protocol the Common Internet File System (CIFS).

Like many Microsoft applications, CIFS was designed for simplicity. To allow others to access a drive on your system, simply right-click on a drive icon and select “Sharing.” You then decide whether the drive should be shared read-only or read-write, and what passwords should control access. You can share a complete drive (e.g., C:\) or just a part of the drive (e.g., C:\MYMUSIC).

Please note that I didn’t say that CIFS was originally designed for performance. Actually, as covered later in the book, it was designed with multiple-user access in mind—at the expense of performance. However, Microsoft and other companies have made a number of performance improvements to CIFS in recent years.

The popularity of CIFS has led to many companies installing large, centralized CIFS servers that share drives to hundreds or thousands of PC clients. The most common reason to do this is to centralize the storage of important files. Users are encouraged to save anything important on the “network drive” because many don’t back up their desktops. The administration staff, however, backs up the CIFS server.

Another type of CIFS server is a Unix/Linux box running Samba, which gets its name from the SMB protocol. Such a system can also share its drives with hundreds or thousands of PC clients, who will see it as nothing other than another PC sharing drives. Since both Linux and Samba are free, this solution has become quite popular.

To summarize, SMB has evolved into CIFS and become the predominant way to share files between Windows-based desktops and laptops. CIFS has a few limitations, which are covered in more detail later in this book.

NFS

Like many Unix projects, the Network File System (NFS) began as a pet project of a few engineers at Sun Microsystems. Sun introduced it to the public in 1984, and all Unix vendors subsequently added it to their suite of base applications. Via NFS, any Unix box can read from or write to any filesystem on any other Unix box—provided it has been shared.

NFS is used in a variety of ways. One of the most common uses is to have a central NFS server contain everyone’s home directory. In Unix, a home directory is where users store files. In fact, a properly configured Unix system requires users to store files in their home directories (except for files that are obvious throwaways, such as files placed in /tmp). If everyone’s home directory is on an NFS file server, then all a company’s data is in one place. This offers the same advantages as the CIFS server discussed earlier. With third-party software, PC systems can also access directories on a Unix server that have been shared via NFS.

NFS may have originally been designed with simplicity in mind, but it’s certainly not as easy to use as Windows file sharing.[3] Each implementation of Unix has a command that is used to share a filesystem from the command line and a file that can share that filesystem permanently. For example, BSD derivatives typically use /etc/exports and the exportfs command, while System V derivatives use /etc/dfs/dfstab and the share command. As with all Unix commands, you must learn the command’s syntax to share filesystems, because the syntax is also used in the exports file. Of course, a command’s syntax varies between different versions of Unix.

Another difficulty with NFS is that it was originally based on UDP, rather than TCP. (Even though NFS v3 allows using TCP, most NFS traffic still runs across UDP.) The biggest problem with UDP and NFS is how retransmits are handled. NFS sends 8K packets that must be split into six IP fragments (based on a typical frame size of 1500 bytes). All six fragments must be retransmitted if any fragment is lost. UDP also has no flow control, so a server can ask for more data than it can receive, and excessive UDP retransmits can easily bring a network to its knees. This is why NFS v3 allows for NFS on top of TCP. TCP has well-established flow control, and TCP requires retransmission only of the lost packets, not of the entire NFS packet as UDP does. Of course, the decision to use UDP or TCP makes managing such servers even more complicated.
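The fragment count and the cost of losing a fragment can be checked with a few lines of arithmetic. This sketch assumes an 8192-byte transfer, an 8-byte UDP header, a 20-byte IPv4 header without options, and independent fragment losses; RPC and NFS header overhead is ignored, and the function names are illustrative.

```python
import math

# Sketch: why one lost fragment is expensive for NFS over UDP.
# Assumptions: IPv4 without options, independent losses, no RPC overhead.
NFS_TRANSFER_BYTES = 8192   # the 8K NFS packet size from the text
UDP_HEADER_BYTES = 8
IP_HEADER_BYTES = 20        # assumption: IPv4 header without options
MTU_BYTES = 1500            # typical Ethernet frame payload

def fragments_needed(payload_bytes: int) -> int:
    """Number of IP fragments needed to carry one UDP datagram."""
    per_fragment = MTU_BYTES - IP_HEADER_BYTES
    return math.ceil((payload_bytes + UDP_HEADER_BYTES) / per_fragment)

def datagram_loss_probability(fragment_loss: float, fragments: int) -> float:
    """Chance that at least one fragment is lost, forcing a full resend."""
    return 1 - (1 - fragment_loss) ** fragments

frags = fragments_needed(NFS_TRANSFER_BYTES)
print(f"Fragments per 8K NFS transfer: {frags}")
for loss in (0.001, 0.01, 0.05):
    p = datagram_loss_probability(loss, frags)
    print(f"Fragment loss {loss:.1%}: whole transfer resent {p:.1%} of the time")
```

Because every fragment must arrive, the chance of resending the whole transfer grows to roughly six times the per-fragment loss rate when losses are rare.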

NFS and CIFS: Before NAS

Both NFS and CIFS servers have been around for several years and have gained quite a bit of popularity. This isn’t to say that either is perfect. There are a number of issues associated with managing NFS and CIFS in the real world.

The first problem with both NFS and CIFS is that a server is usually dedicated to be an NFS or a CIFS server. Each server then represents just another system to manage. Patch management alone can be quite a hassle. Also, just because an environment has enough users to warrant an NFS or CIFS server doesn’t mean they have the expertise necessary to maintain an NFS or CIFS server.

The next problem is that few environments are strictly Unix or strictly Windows; most environments are a mixture of both. Such environments find themselves in a predicament. Do they incur the extra cost of loading PC NFS software on their Windows desktops? Do they use Samba on their Unix boxes to provide CIFS services to PCs? If they do that, will their NT-savvy administrators understand how to administer Samba? Or will their Unix-savvy administrators understand Windows ACLs? The result is that such environments usually end up with a Unix NFS server and an NT CIFS server; now there are two boxes to manage instead of one.

The final problem involves performance. Disks that sit behind a typical NFS or CIFS server are simply not as fast to users as local disk. When saving large files or many files, this performance hit can be quite costly. It persuades people to save their files locally, which defeats the purpose of having network-mounted drives in the first place.

The reason both NFS and CIFS suffer from performance issues is that both were afterthoughts. Unix was developed in the late 1960s, and DOS in the early 1980s. It took both groups several years to realize they needed an easier way to share files, and they invented NFS and CIFS as a result. Although some tweaks have been made to the kernel to make these protocols faster, the fact is that they are simply another application competing for CPU and network resources. The only way to solve all these problems was to start from scratch, and network attached storage was born.

Enter NAS

If you think about it a moment, the NAS industry is based on selling boxes to do something any Unix or Windows system can do out of the box. How is it, then, that NAS vendors have been so successful? Why is it, then, that many are predicting a predominance of NAS in the future? The answer is that they have done a pretty good job of removing the issues people have with NFS and CIFS.

One thing vendors tried was to make NFS or CIFS servers easier to manage. They created packaged boxes with hot-swappable RAID arrays that significantly increased their availability and decreased the amount of time needed for corrective maintenance. Another novel concept was a single server that provided both NFS and CIFS services—reducing the total number of servers required to do the job. You can even mount the same directory via both NFS and CIFS. NAS vendors also designed user interfaces that made sharing NFS and CIFS filesystems easier. In one way or another, NAS boxes are easier to manage than their predecessors.

NAS vendors have also successfully dealt with the performance problems of both NFS and CIFS. In fact, some have actually made NFS faster than local disk!

The first NAS vendor, Auspex, felt that performance problems happened because the typical NFS[4] implementation forces each NFS request to go through the host CPU. Their solution was to create a custom box with a separate processor for each function. The host processor (HP) would be used only to get the system booted up, and NFS requests would be the responsibility of the network processor (NP) and the storage processor (SP). The result was an NFS server that was much faster than any of its predecessors.

Network Appliance was the next major vendor on the scene, and they felt that the problem was the Unix kernel and filesystem. Their solution was to reduce the kernel to something that would fit on a 3.5-inch floppy disk, completely rewriting the NFS subsystem so that it was more efficient, and including an optimized filesystem designed for use with NVRAM. Network Appliance was the first NAS vendor to publish benchmarks that showed their servers were faster than a locally attached disk.

Note that NAS filers use the NFS or CIFS protocol to transfer files. In contrast, SANs use the SCSI-3 protocol to share devices. (A side-by-side comparison of NAS and SAN can be found at the end of this chapter.)

Let me conclude this section with a definition of network attached storage:

SAN Versus NAS: A Summary

Table 1-1 compares NAS and SAN and should clear up any confusion regarding their similarities and differences.

| | SAN | NAS |
|---|---|---|
| Protocol | Serial SCSI-3 | NFS/CIFS |
| Shares | Raw disk and tape drives | Filesystems |
| Examples of shared items | /dev/rmt/0cbn /dev/dsk/c0t0d0s2 \\.\Tape0 | \\filer\C\directory\filename.doc /nfsmount/directory/filename.txt |
| Allows | Different servers can access the same raw disk or tape drive (not typically seen by the end user) | Different users can access the same filesystem or file |
| Replaces | Replaces locally attached disk and tape drives; with SANs, hundreds of systems can now share the same disk or tape drive | Replaces Unix NFS servers and NT CIFS servers that offer network shared filesystems |

In this book, you will see how SANs and NAS relate to backup and recovery. SANs are an excellent way to increase the value of your existing backup and recovery system and can help you back up systems more easily than would otherwise be possible. I will also talk about NAS appliances and the challenges they bring to your backup and recovery system. I will cover ways to back up such boxes if you can’t afford a commercial backup and recovery product, as well as go into detail about NDMP—the supported way to back up NAS filers.

Which Is Right for You?

One reason this book includes both SANs and NAS is that many people are starting to see filers as a viable alternative to a SAN. While filers were once perceived as “NFS in a box,” people are now using them to host large databases and important production data. Which is right for you? The last section of this chapter attempts to explain the pros and cons of each architecture, allowing you to answer this question.

Tip

Many comments made in the following paragraphs are summaries of statements made in later chapters. For the details behind these summary statements, please read Chapter 2 and Chapter 5.

The Pros and Cons of NAS

As mentioned earlier, NAS filers have become popular with many people for many reasons. The following is a summary of several:

- Filers are fast enough for many applications

Many would argue that SANs are simply more powerful than NAS. Some would argue that NFS and CIFS running on top of TCP/IP create more overhead on the client than SCSI-3 running on top of Fibre Channel. This would mean that a single host could sustain more throughput to a SAN-based disk than to a NAS-based disk. While this may be true on very high-end servers, most real-world applications require much less throughput than the maximum available throughput of a filer.

- NAS offers multihost filesystem access

A downside of SANs is that, while they do offer multihost access to devices, most applications want multihost access to files. If you want the systems connected to a SAN to read and write to the same file, you need a SAN or cluster-based filesystem. Such filesystems are starting to become available, but they are usually expensive and are relatively new technologies. Filers, on the other hand, offer multihost access to files using technology that has existed since 1984.

- NAS is easier to understand

Some people are concerned that they don’t understand Fibre Channel and certainly don’t understand fabric-based SANs. To these people, SANs represent a significant learning curve, whereas NAS doesn’t. With NAS, all that’s needed to implement a filer is to read the manual provided by the NAS vendor, which is usually rather brief; it doesn’t need to be longer. With Fibre Channel, you first need to read about and understand it, and then read the HBA manual, the switch manual, and the manuals that come with any SAN management software.

- Filers are easier to maintain

No one who has managed both a SAN and NAS will argue with this statement. SANs are composed of pieces of hardware from many vendors, including the HBA, the switch or hub, and the disk arrays. Each vendor is new to an environment that hasn’t previously used a SAN. In comparison, filers allow the use of your existing network infrastructure. The only new vendor you need is the manufacturer of the filer itself. SANs have a larger number of components that can fail, fewer tools to troubleshoot these failures, and more possibilities of finger pointing. All in all, a NAS-based network is easier to maintain.

- Filers are much cheaper

Since filers allow you to leverage your existing network infrastructure, they are usually cheaper to implement than a SAN. A SAN requires the purchase of a Fibre Channel HBA to support each host that’s connected to the SAN, a port on a hub or switch to support each host, one or more disk arrays, and the appropriate cables to connect all this together. Even if you choose to install a separate LAN for your NAS traffic, the required components are still cheaper than their SAN counterparts.

- Filers are easy to protect against failure

While not all NAS vendors offer this option, some filers can automatically replicate their filesystems to another filer at another location. This can be done using a very low bandwidth network connection. While this can be accomplished with a SAN by purchasing one of several third-party packages, the functionality is built right into some filers and is therefore less expensive and more reliable.

- Filers are here and now

Many people have criticized SANs for being more hype than reality. Too many vendors’ systems are incompatible, and too many software pieces are just now being released. Many vendors are still fighting over the Fibre Channel standard. While there are many successfully implemented SANs today, there are many that aren’t successful. If you connect equipment from the wrong vendors, things just won’t work. In comparison, filers are completely interoperable, and the standards upon which they are based have been around for years.

Filers aren’t without limitations. Here’s a list of the limitations that exist as of this writing. Whether or not they still exist is left as an exercise for the reader.

- Filers can be difficult to back up to tape

Although the snapshot and off-site replication software offered by some NAS vendors offers some wonderful recovery possibilities that are rather difficult to achieve with a SAN, filers must still be backed up to tape at some point, and backing up a filer to tape can be a challenge. One of the reasons is that performing a full backup to tape will typically task an I/O system much more than any other application. This means that backing up a really large filer to tape will create quite a load on the system. Although many filers have significantly improved the backup and recovery speeds, SANs are still faster when it comes to raw throughput to tape.

- Filers can’t do image-level backups

To date, all backup and recovery options for filers are file-based, which means the backup and recovery software is traversing the filesystem just as you do. There are a few applications that create millions of small files. Restoring millions of small files is perhaps the most difficult task a backup and recovery system will perform. More time is spent creating the inode than actually restoring the data, which is why most major backup/recovery software vendors have created software that can back up filesystems via the raw device—while maintaining file-level recoverability. Unfortunately, today’s filers don’t have a solution for this problem.

- The upper limit is lower than a SAN

Although it’s arguable that most applications will never task a filer beyond its ability to transfer data, it’s important to mention that theoretically a SAN should be able to transfer more data than NAS. If your application requires incredible amounts of throughput, you should certainly benchmark both. For some environments, NAS offers a faster, cheaper alternative to SANs. However, for other environments, SANs may be the only option. Just make sure to test your system before buying it.
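A benchmark doesn’t have to be elaborate to be useful. The following is a minimal sketch of a sequential write test, not a substitute for a real benchmarking tool or for testing with your actual application. It assumes the NAS share and the SAN volume are both mounted as ordinary directories; the mount points `/mnt/nas_test` and `/mnt/san_test` and the function name are hypothetical and chosen only for illustration.

```python
import os
import tempfile
import time


def sequential_write_mb_per_s(directory, total_mb=64, block_kb=1024):
    """Write roughly total_mb of data to a scratch file in directory.

    Returns the observed throughput in MB/s. The scratch file is removed
    afterward. This measures one large sequential write only.
    """
    # Random data, so storage-side compression does not flatter the result.
    block = os.urandom(block_kb * 1024)
    blocks = max(1, (total_mb * 1024) // block_kb)
    fd, path = tempfile.mkstemp(dir=directory)
    try:
        start = time.perf_counter()
        with os.fdopen(fd, "wb") as f:
            for _ in range(blocks):
                f.write(block)
            f.flush()
            os.fsync(f.fileno())  # ask the OS to push data to storage before stopping the clock
        elapsed = time.perf_counter() - start
    finally:
        os.remove(path)
    written_mb = blocks * len(block) / (1024 * 1024)
    return written_mb / elapsed


if __name__ == "__main__":
    # Hypothetical mount points; substitute your own.
    for label, mount in [("NAS", "/mnt/nas_test"), ("SAN", "/mnt/san_test")]:
        if os.path.isdir(mount):
            rate = sequential_write_mb_per_s(mount, total_mb=1024)
            print(f"{label}: {rate:.1f} MB/s")
```

A single large write says nothing about small-file or random I/O behavior, so treat the numbers as a first impression. Run the test several times, with sizes well beyond any cache, and compare against a workload that resembles your own.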

Pros and Cons of SANs

Many people swear by SANs and would never consider using NAS. They are aware that SANs are expensive and represent cutting-edge technology, but they are willing to live with these downsides in order to experience the advantages they feel only SANs can offer. The following is a summary of these advantages:

- SANs can serve raw devices

Neither NFS nor CIFS can serve raw devices via the network; they can only serve files. If your application requires access to a raw device, NAS is simply not an option.

- SANs are more flexible

What some see as complexity, others see as flexibility. They like the features available with the filesystem or volume manager that they have purchased, and those features aren’t available with NAS. While NFS and CIFS have been around for several years, the filesystem technology that the filer is using is often new, especially when compared to ufs, NTFS, or vxfs.

- SANs can be faster

As discussed above, there are applications where SANs will be faster. If your application requires sustained throughput greater than what is available from the fastest filer, your only alternative is a SAN.

- SANs are easier to back up

The throughput possible with a SAN makes large-scale backup and recovery much easier. In fact, large NAS environments take advantage of SAN technology in order to share a tape library and perform LAN-free backups.

SANs are also not without their foibles. The following list contains the difficulties many people have with SAN technology:

- SANs are often more hype than reality

This was covered earlier in this chapter. Perhaps in a few years, the vendors will have agreed upon an appropriate standard, and SAN management software will do everything it’s supposed to do, with SAN equipment that’s completely interoperable. I sure hope this happens.

- SANs are complex

The concepts of Fibre Channel, arbitrated loop, fabric login, and device virtualization aren’t always easy to grasp. The concepts of NFS and CIFS seem much simpler in comparison.

- SANs are expensive

Although they are getting less expensive every day, a Fibre Channel HBA still costs much more than a standard Ethernet NIC. It’s simply a matter of economies of scale. More people need Ethernet than need Fibre Channel.

It All Depends on Your Environment

Which storage architecture is appropriate for you depends heavily on your environment. What is more important to you: cost, complexity, flexibility, or raw throughput? Communicate your storage requirements to both NAS and SAN vendors and see what numbers they come up with for cost. If the cost and complexity of a SAN aren’t completely out of the question, I’d recommend you benchmark both.

Make sure to solicit the help of each vendor during the benchmark. Proper configuration of each system is essential to proper performance, and you probably will not get it right on the first try. Have the vendors install the test NAS or SAN—even if you have to pay for it. It will be worth the money, especially if you’ve never configured one before.
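If it helps to put the four criteria side by side, here is a rough sketch of a weighted tally. The mapping of each criterion to an architecture simply restates the general leanings of the lists in this chapter (NAS for cost and simplicity, SAN for flexibility and raw throughput). The weights are yours to supply, and the numbers in the example are hypothetical. It’s a conversation starter, not a replacement for vendor quotes and a benchmark.

```python
# Which architecture this chapter's pros-and-cons lists generally favor
# on each criterion. An illustrative simplification, not a measurement.
FAVORED = {
    "cost": "NAS",
    "complexity": "NAS",
    "flexibility": "SAN",
    "throughput": "SAN",
}


def tally(weights):
    """Sum the importance weights behind each architecture.

    weights maps a criterion name from FAVORED to how much it matters
    to you (any non-negative number).
    """
    totals = {"NAS": 0, "SAN": 0}
    for criterion, weight in weights.items():
        totals[FAVORED[criterion]] += weight
    return totals


# Hypothetical weights on a 1-to-5 scale.
example = tally({"cost": 5, "complexity": 3, "flexibility": 2, "throughput": 4})
print(example)  # {'NAS': 8, 'SAN': 6}
```

A close result is a good sign that the benchmark and the cost quotes should decide the matter.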

I hope the rest of this book will prove helpful in your quest.

[1] This brief history of SCSI is courtesy of John Lohmeyer, the chairman of the T10 committee.

[2] I say “probably” only because you can buy an NFS client for your Windows desktop as well.

[3] As with many other difficult things about Unix, Unix administrators don’t seem to mind. They might even argue that NFS is easier to configure than CIFS, because they can easily script it.

[4] All early NAS vendors started with NFS first and added CIFS services later.
