B.15 LOGARITHM FUNCTIONS

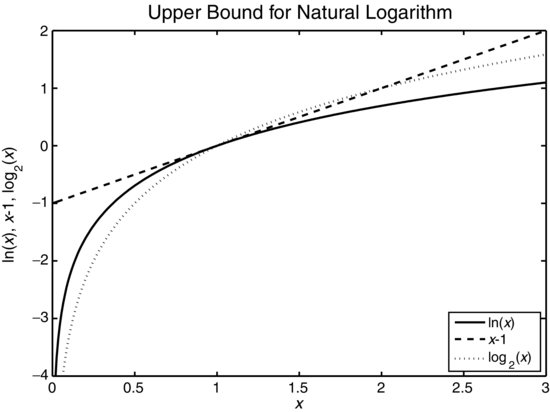

The logarithm function is used in the definitions of entropy and mutual information (see Chapter 10). It also appears in the logarithm random variable and is used in some transformations of random variables. The natural logarithm is plotted in Figure B.10, where we see that it is a monotonically increasing function. It is shown in Problem 10.14 that the natural logarithm is bounded above as follows:

Figure B.10 Upper bound for ln(x).

$$\ln(x) \le x - 1, \qquad x > 0 \tag{B.81}$$

which is also demonstrated in the figure. This bound is useful for proving various properties in information theory; for example, Problem 10.19 uses it to show that mutual information is nonnegative. Equality holds only at x = 1, where ln(1) = 0 = x − 1. Note that the bound generally does not hold for logarithms in other bases. For example, the figure shows that log2(x) exceeds x − 1 just above x = 1. In fact, log2(x) > x − 1 on the whole interval 1 < x < 2, because the concave curve log2(x) meets the line x − 1 at both x = 1 and x = 2. We can convert between different bases using the formula:

$$\log_b(x) = \frac{\ln(x)}{\ln(b)}, \qquad b > 0,\; b \ne 1 \tag{B.82}$$

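Thus, if an upper bound is needed for log2(x), dividing both sides of (B.81) by ln(2) > 0 gives the following, which can be used instead:

$$\log_2(x) \le \frac{x - 1}{\ln(2)}, \qquad x > 0$$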

Because both the slope and the ordinate of ...
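The following minimal Python sketch is an illustrative numerical check that assumes only the standard `math` module. It evaluates the gap (x − 1) − ln(x) from (B.81) on a grid of points in (0, 5]. It then applies the change of base (B.82) with b = 2 to locate where log2(x) exceeds x − 1, and confirms the rescaled bound log2(x) ≤ (x − 1)/ln(2) obtained by dividing (B.81) by ln(2). The helper names `ln_bound_gap`, `log_base`, and `log2_upper_bound` are hypothetical. A finite grid can only illustrate these inequalities, not prove them.

```python
import math


def ln_bound_gap(x: float) -> float:
    """Return (x - 1) - ln(x), which (B.81) says is >= 0 for every x > 0."""
    if x <= 0:
        raise ValueError("ln(x) is defined only for x > 0")
    return (x - 1.0) - math.log(x)


def log_base(x: float, b: float) -> float:
    """Change of base as in (B.82): log_b(x) = ln(x) / ln(b), for b > 0, b != 1."""
    return math.log(x) / math.log(b)


def log2_upper_bound(x: float) -> float:
    """Hypothetical helper: the bound obtained by dividing ln(x) <= x - 1 by ln(2) > 0."""
    return (x - 1.0) / math.log(2.0)


if __name__ == "__main__":
    # Evenly spaced sample points 0.01, 0.02, ..., 5.00 (an illustration, not a proof).
    xs = [k / 100 for k in range(1, 501)]

    # (B.81): the gap should be nonnegative and vanish only at x = 1.
    gaps = [ln_bound_gap(x) for x in xs]
    smallest = min(gaps)
    print("smallest gap:", smallest, "at x =", xs[gaps.index(smallest)])

    # In base 2, x - 1 fails as an upper bound on part of the grid.
    violations = [x for x in xs if log_base(x, 2) > x - 1]
    print("log2(x) > x - 1 at grid points from", min(violations), "to", max(violations))

    # The rescaled bound (x - 1) / ln(2) holds for log2(x) at every grid point.
    print("log2 bound holds:", all(log_base(x, 2) <= log2_upper_bound(x) for x in xs))
```

Run as a script, it reports a smallest gap of 0.0 at x = 1.0, violations only at grid points strictly between 1 and 2, and `True` for the rescaled bound. This agrees with the equality condition at x = 1 and with the behavior of log2(x) described above.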
