Hadoop comes with a set of primitives for data I/O. Some of these are techniques that are more general than Hadoop, such as data integrity and compression, but deserve special consideration when dealing with multiterabyte datasets. Others are Hadoop tools or APIs that form the building blocks for developing distributed systems, such as serialization frameworks and on-disk data structures.

Users of Hadoop rightly expect that no data will be lost or corrupted during storage or processing. However, since every I/O operation on the disk or network carries with it a small chance of introducing errors into the data that it is reading or writing, when the volumes of data flowing through the system are as large as the ones Hadoop is capable of handling, the chance of data corruption occurring is high.

The usual way of detecting corrupted data is by computing a checksum for the data when it first enters the system, and again whenever it is transmitted across a channel that is unreliable and hence capable of corrupting the data. The data is deemed to be corrupt if the newly generated checksum doesn’t exactly match the original. This technique doesn’t offer any way to fix the data—merely error detection. (And this is a reason for not using low-end hardware; in particular, be sure to use ECC memory.) Note that it is possible that it’s the checksum that is corrupt, not the data, but this is very unlikely, since the checksum is much smaller than the data.

A commonly used error-detecting code is CRC-32 (cyclic redundancy check), which computes a 32-bit integer checksum for input of any size.

HDFS transparently checksums all data written to it and

by default verifies checksums when reading data. A separate checksum

is created for every io.bytes.per.checksum

bytes of data. The default is 512 bytes, and since a CRC-32 checksum

is 4 bytes long, the storage overhead is less than 1%.

Datanodes are responsible for verifying the data they

receive before storing the data and its checksum. This applies to data

that they receive from clients and from other datanodes during

replication. A client writing data sends it to a pipeline of datanodes

(as explained in Chapter 3), and the last datanode in

the pipeline verifies the checksum. If it detects an error, the client

receives a ChecksumException, a

subclass of IOException, which it

should handle in an application-specific manner, by retrying the

operation, for example.

When clients read data from datanodes, they verify checksums as well, comparing them with the ones stored at the datanode. Each datanode keeps a persistent log of checksum verifications, so it knows the last time each of its blocks was verified. When a client successfully verifies a block, it tells the datanode, which updates its log. Keeping statistics such as these is valuable in detecting bad disks.

Aside from block verification on client reads, each datanode

runs a DataBlockScanner in a

background thread that periodically verifies all the blocks stored on

the datanode. This is to guard against corruption due to “bit rot” in

the physical storage media. See Datanode block scanner

for details on how to access the scanner reports.

Since HDFS stores replicas of blocks, it can “heal” corrupted

blocks by copying one of the good replicas to produce a new, uncorrupt

replica. The way this works is that if a client detects an error when

reading a block, it reports the bad block and the datanode it was

trying to read from to the namenode before throwing a ChecksumException. The namenode marks the

block replica as corrupt, so it doesn’t direct clients to it, or try

to copy this replica to another datanode. It then schedules a copy of

the block to be replicated on another datanode, so its replication

factor is back at the expected level. Once this has happened, the

corrupt replica is deleted.

It is possible to disable verification of checksums by passing

false to the setVerifyChecksum()

method on FileSystem, before using

the open() method to read a file.

The same effect is possible from the shell by using the -ignoreCrc option with the -get or the equivalent -copyToLocal command. This feature is useful

if you have a corrupt file that you want to inspect so you can decide

what to do with it. For example, you might want to see whether it can

be salvaged before you delete it.

The Hadoop LocalFileSystem performs client-side

checksumming. This means that when you write a file called filename, the filesystem client

transparently creates a hidden file, .filename.crc, in the same directory

containing the checksums for each chunk of the file. Like HDFS, the

chunk size is controlled by the io.bytes.per.checksum

property, which defaults to 512 bytes. The chunk size is stored as

metadata in the .crc file, so the

file can be read back correctly even if the setting for the chunk size

has changed. Checksums are verified when the file is read, and if an

error is detected, LocalFileSystem throws a ChecksumException.

Checksums are fairly cheap to compute (in Java, they are

implemented in native code), typically adding a few percent overhead

to the time to read or write a file.

For most applications, this is an acceptable price to pay for data

integrity. It is, however, possible to disable checksums: typically

when the underlying filesystem supports checksums natively. This is

accomplished by using RawLocalFileSystem in place of LocalFileSystem. To do this globally in an

application, it suffices to remap the implementation for file URIs by

setting the property fs.file.impl

to the value org.apache.hadoop.fs.RawLocalFileSystem.

Alternatively, you can directly create a RawLocalFileSystem

instance, which may be useful if you want to disable checksum

verification for only some reads; for example:

Configuration conf = ... FileSystem fs = new RawLocalFileSystem(); fs.initialize(null, conf);

LocalFileSystem uses

ChecksumFileSystem to do its work,

and this class makes it easy to add checksumming to other

(nonchecksummed) filesystems, as ChecksumFileSystem is just a wrapper around

FileSystem. The general idiom is as

follows:

FileSystem rawFs = ... FileSystem checksummedFs = new ChecksumFileSystem(rawFs);

The underlying filesystem is called the

raw filesystem, and may be retrieved using the

getRawFileSystem() method on

ChecksumFileSystem. ChecksumFileSystem has a few more useful

methods for working with checksums, such as getChecksumFile() for getting the path of a

checksum file for any file. Check the documentation for the

others.

If an error is detected by ChecksumFileSystem when reading a file, it

will call its reportChecksumFailure() method. The

default implementation does nothing, but LocalFileSystem moves the offending file and

its checksum to a side directory on the same device called bad_files. Administrators should

periodically check for these bad files and take action on

them.

File compression brings two major benefits: it reduces the space needed to store files, and it speeds up data transfer across the network, or to or from disk. When dealing with large volumes of data, both of these savings can be significant, so it pays to carefully consider how to use compression in Hadoop.

There are many different compression formats, tools and algorithms, each with different characteristics. Table 4-1 lists some of the more common ones that can be used with Hadoop.[31]

Table 4-1. A summary of compression formats

| Compression format | Tool | Algorithm | Filename extension | Multiple files | Splittable |

|---|---|---|---|---|---|

| DEFLATE[a] | N/A | DEFLATE | .deflate | No | No |

| gzip | gzip | DEFLATE | .gz | No | No |

| bzip2 | bzip2 | bzip2 | .bz2 | No | Yes |

| LZO | lzop | LZO | .lzo | No | No |

[a] DEFLATE is a compression algorithm whose standard implementation is zlib. There is no commonly available command-line tool for producing files in DEFLATE format, as gzip is normally used. (Note that the gzip file format is DEFLATE with extra headers and a footer.) The .deflate filename extension is a Hadoop convention. | |||||

All compression algorithms exhibit a space/time trade-off: faster

compression and decompression speeds usually come at the expense of

smaller space savings. All of the tools listed in Table 4-1 give some control over this trade-off at

compression time by offering nine different options: –1 means optimize for speed and -9 means optimize for space. For example, the

following command creates a compressed file file.gz using the

fastest compression method:

gzip -1 file

The different tools have very different compression characteristics. Gzip is a general-purpose compressor, and sits in the middle of the space/time trade-off. Bzip2 compresses more effectively than gzip, but is slower. Bzip2’s decompression speed is faster than its compression speed, but it is still slower than the other formats. LZO, on the other hand, optimizes for speed: it is faster than gzip (or any other compression or decompression tool[32]), but compresses slightly less effectively.

The “Splittable” column in Table 4-1 indicates whether the compression format supports splitting; that is, whether you can seek to any point in the stream and start reading from some point further on. Splittable compression formats are especially suitable for MapReduce; see Compression and Input Splits for further discussion.

A codec is the implementation of

a compression-decompression algorithm. In Hadoop, a codec is

represented by an implementation of the CompressionCodec

interface. So, for example, GzipCodec encapsulates the compression and

decompression algorithm for gzip. Table 4-2

lists the codecs that are available for Hadoop.

Table 4-2. Hadoop compression codecs

| Compression format | Hadoop CompressionCodec |

|---|---|

| DEFLATE | org.apache.hadoop.io.compress.DefaultCodec |

| gzip | org.apache.hadoop.io.compress.GzipCodec |

| bzip2 | org.apache.hadoop.io.compress.BZip2Codec |

| LZO | com.hadoop.compression.lzo.LzopCodec |

The LZO libraries are GPL-licensed and may not be included

in Apache distributions, so for this reason the Hadoop codecs must be

downloaded separately from http://code.google.com/p/hadoop-gpl-compression/ (or

http://github.com/kevinweil/hadoop-lzo, which

includes bugfixes and more tools). The LzopCodec is compatible with the lzop tool, which is essentially the LZO

format with extra headers, and is the one you normally want. There is

also a LzoCodec for the pure LZO

format, which uses the .lzo_deflate filename extension (by analogy

with DEFLATE, which is gzip without the headers).

CompressionCodec

has two methods that allow you to easily compress or decompress

data. To compress data being written to an output stream, use the

createOutputStream(OutputStream

out) method to create a CompressionOutputStream to which you write

your uncompressed data to have it written in compressed form to the

underlying stream. Conversely, to decompress data being read from an

input stream, call createInputStream(InputStream in) to

obtain a CompressionInputStream,

which allows you to read uncompressed data from the underlying

stream.

CompressionOutputStream and

CompressionInputStream are

similar to java.util.zip.DeflaterOutputStream and

java.util.zip.DeflaterInputStream, except

that both of the former provide the ability to reset their

underlying compressor or decompressor, which is important for

applications that compress sections of the data stream as separate

blocks, such as SequenceFile,

described in SequenceFile.

Example 4-1 illustrates how to use the API to compress data read from standard input and write it to standard output.

Example 4-1. A program to compress data read from standard input and write it to standard output

public class StreamCompressor {

public static void main(String[] args) throws Exception {

String codecClassname = args[0];

Class<?> codecClass = Class.forName(codecClassname);

Configuration conf = new Configuration();

CompressionCodec codec = (CompressionCodec)

ReflectionUtils.newInstance(codecClass, conf);

CompressionOutputStream out = codec.createOutputStream(System.out);

IOUtils.copyBytes(System.in, out, 4096, false);

out.finish();

}

}

The application expects the fully qualified name of the

CompressionCodec implementation

as the first command-line argument. We use ReflectionUtils to construct a new

instance of the codec, then obtain a compression wrapper around

System.out. Then we call the

utility method copyBytes() on

IOUtils to copy the input to the

output, which is compressed by the CompressionOutputStream. Finally, we call

finish() on CompressionOutputStream, which tells

the compressor to finish writing to the compressed stream, but

doesn’t close the stream. We can try it out with the following

command line, which compresses the string “Text” using the StreamCompressor program with the GzipCodec, then decompresses it from

standard input using gunzip:

%echo "Text" | hadoop StreamCompressor org.apache.hadoop.io.compress.GzipCodec \| gunzip -Text

If you are reading a compressed file, you can normally infer

the codec to use by looking at its filename extension. A file ending

in .gz can be read with

GzipCodec, and so on. The

extension for each compression format is listed in Table 4-1.

CompressionCodecFactory provides a way of

mapping a filename extension to a CompressionCodec using its getCodec() method, which takes a Path object for the file in question.

Example 4-2 shows an application that uses

this feature to decompress files.

Example 4-2. A program to decompress a compressed file using a codec inferred from the file’s extension

public class FileDecompressor {

public static void main(String[] args) throws Exception {

String uri = args[0];

Configuration conf = new Configuration();

FileSystem fs = FileSystem.get(URI.create(uri), conf);

Path inputPath = new Path(uri);

CompressionCodecFactory factory = new CompressionCodecFactory(conf);

CompressionCodec codec = factory.getCodec(inputPath);

if (codec == null) {

System.err.println("No codec found for " + uri);

System.exit(1);

}

String outputUri =

CompressionCodecFactory.removeSuffix(uri, codec.getDefaultExtension());

InputStream in = null;

OutputStream out = null;

try {

in = codec.createInputStream(fs.open(inputPath));

out = fs.create(new Path(outputUri));

IOUtils.copyBytes(in, out, conf);

} finally {

IOUtils.closeStream(in);

IOUtils.closeStream(out);

}

}

}

Once the codec has been found, it is used to strip off the

file suffix to form the output filename (via the removeSuffix() static method of CompressionCodecFactory). In this way, a

file named file.gz is

decompressed to file by

invoking the program as follows:

% hadoop FileDecompressor file.gzCompressionCodecFactory

finds codecs from a list defined by the io.compression.codecs configuration

property. By default, this lists all the codecs provided by Hadoop

(see Table 4-3), so you would

need to alter it only if you have a custom codec that you wish to

register (such as the externally hosted LZO codecs). Each codec

knows its default filename extension, thus permitting CompressionCodecFactory to search through

the registered codecs to find a match for a given extension (if

any).

Table 4-3. Compression codec properties

| Property name | Type | Default value | Description |

|---|---|---|---|

io.compression.codecs | comma-separated

Class names | org.apache.hadoop.io.compress.DefaultCodec, org.apache.hadoop.io.compress.GzipCodec,

org.apache.hadoop.io.compress.Bzip2Codec | A list of the CompressionCodec classes

for compression/decompression. |

For performance, it is preferable to use a native library for compression and decompression. For example, in one test, using the native gzip libraries reduced decompression times by up to 50% and compression times by around 10% (compared to the built-in Java implementation). Table 4-4 shows the availability of Java and native implementations for each compression format. Not all formats have native implementations (bzip2, for example), whereas others are only available as a native implementation (LZO, for example).

Table 4-4. Compression library implementations

| Compression format | Java implementation | Native implementation |

|---|---|---|

| DEFLATE | Yes | Yes |

| gzip | Yes | Yes |

| bzip2 | Yes | No |

| LZO | No | Yes |

Hadoop comes with prebuilt native compression libraries for 32- and 64-bit Linux, which you can find in the lib/native directory. For other platforms, you will need to compile the libraries yourself, following the instructions on the Hadoop wiki at http://wiki.apache.org/hadoop/NativeHadoop.

The native libraries are picked up using the Java system property java.library.path. The hadoop script in the bin directory sets this property for you,

but if you don’t use this script, you will need to set the property

in your application.

By default, Hadoop looks for native libraries for the platform

it is running on, and loads them automatically if they are found.

This means you don’t have to change any configuration settings to

use the native libraries. In some circumstances, however, you may

wish to disable use of native libraries, such as when you are

debugging a compression-related problem. You can achieve this by

setting the property hadoop.native.lib to false, which ensures that the built-in

Java equivalents will be used (if they are available).

If you are using a native library and you are doing

a lot of compression or decompression in your application,

consider using CodecPool, which

allows you to reuse compressors and decompressors, thereby

amortizing the cost of creating these objects.

The code in Example 4-3 shows

the API, although in this program, which only creates a single

Compressor, there is really no

need to use a pool.

Example 4-3. A program to compress data read from standard input and write it to standard output using a pooled compressor

public class PooledStreamCompressor {

public static void main(String[] args) throws Exception {

String codecClassname = args[0];

Class<?> codecClass = Class.forName(codecClassname);

Configuration conf = new Configuration();

CompressionCodec codec = (CompressionCodec)

ReflectionUtils.newInstance(codecClass, conf);

Compressor compressor = null;

try {

compressor = CodecPool.getCompressor(codec);

CompressionOutputStream out =

codec.createOutputStream(System.out, compressor);

IOUtils.copyBytes(System.in, out, 4096, false);

out.finish();

} finally {

CodecPool.returnCompressor(compressor);

}

}

}

We retrieve a Compressor

instance from the pool for a given CompressionCodec, which we use in the

codec’s overloaded createOutputStream()

method. By using a finally

block, we ensure that the compressor is returned to the pool even

if there is an IOException while copying the bytes

between the streams.

When considering how to compress data that will be processed by MapReduce, it is important to understand whether the compression format supports splitting. Consider an uncompressed file stored in HDFS whose size is 1 GB. With an HDFS block size of 64 MB, the file will be stored as 16 blocks, and a MapReduce job using this file as input will create 16 input splits, each processed independently as input to a separate map task.

Imagine now the file is a gzip-compressed file whose compressed size is 1 GB. As before, HDFS will store the file as 16 blocks. However, creating a split for each block won’t work since it is impossible to start reading at an arbitrary point in the gzip stream, and therefore impossible for a map task to read its split independently of the others. The gzip format uses DEFLATE to store the compressed data, and DEFLATE stores data as a series of compressed blocks. The problem is that the start of each block is not distinguished in any way that would allow a reader positioned at an arbitrary point in the stream to advance to the beginning of the next block, thereby synchronizing itself with the stream. For this reason, gzip does not support splitting.

In this case, MapReduce will do the right thing and not try to split the gzipped file, since it knows that the input is gzip-compressed (by looking at the filename extension) and that gzip does not support splitting. This will work, but at the expense of locality: a single map will process the 16 HDFS blocks, most of which will not be local to the map. Also, with fewer maps, the job is less granular, and so may take longer to run.

If the file in our hypothetical example were an LZO file, we would have the same problem since the underlying compression format does not provide a way for a reader to synchronize itself with the stream.[33] A bzip2 file, however, does provide a synchronization marker between blocks (a 48-bit approximation of pi), so it does support splitting. (Table 4-1 lists whether each compression format supports splitting.)

As described in Inferring CompressionCodecs using CompressionCodecFactory, if your input files are compressed, they will be automatically decompressed as they are read by MapReduce, using the filename extension to determine the codec to use.

To compress the output of a MapReduce job, in the job

configuration, set the mapred.output.compress property to true and the mapred.output.compression.codec property to

the classname of the compression codec you want to use, as shown in

Example 4-4.

Example 4-4. Application to run the maximum temperature job producing compressed output

public class MaxTemperatureWithCompression {

public static void main(String[] args) throws IOException {

if (args.length != 2) {

System.err.println("Usage: MaxTemperatureWithCompression <input path> " +

"<output path>");

System.exit(-1);

}

JobConf conf = new JobConf(MaxTemperatureWithCompression.class);

conf.setJobName("Max temperature with output compression");

FileInputFormat.addInputPath(conf, new Path(args[0]));

FileOutputFormat.setOutputPath(conf, new Path(args[1]));

conf.setOutputKeyClass(Text.class);

conf.setOutputValueClass(IntWritable.class);

conf.setBoolean("mapred.output.compress", true);

conf.setClass("mapred.output.compression.codec", GzipCodec.class,

CompressionCodec.class);

conf.setMapperClass(MaxTemperatureMapper.class);

conf.setCombinerClass(MaxTemperatureReducer.class);

conf.setReducerClass(MaxTemperatureReducer.class);

JobClient.runJob(conf);

}

}

We run the program over compressed input (which doesn’t have to use the same compression format as the output, although it does in this example) as follows:

% hadoop MaxTemperatureWithCompression input/ncdc/sample.txt.gz outputEach part of the final output is compressed; in this case, there is a single part:

% gunzip -c output/part-00000.gz

1949 111

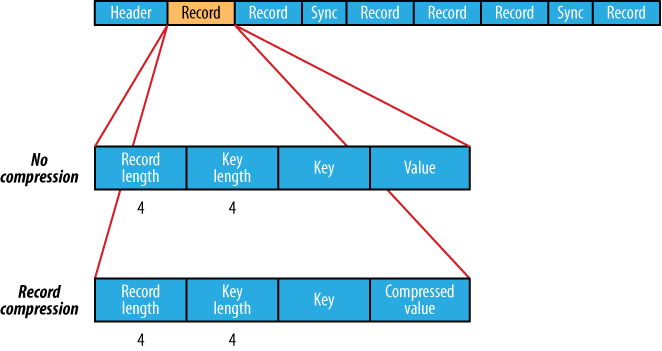

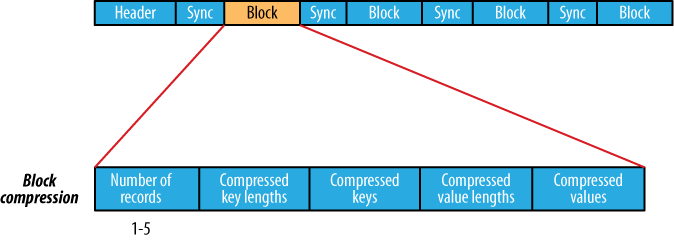

1950 22If you are emitting sequence files for your output, then you can

set the mapred.output.compression.type property to

control the type of compression to use. The default is RECORD, which compresses individual records.

Changing this to BLOCK, which

compresses groups of records, is

recommended since it compresses better (see The SequenceFile format).

Even if your MapReduce application reads and writes uncompressed data, it may benefit from compressing the intermediate output of the map phase. Since the map output is written to disk and transferred across the network to the reducer nodes, by using a fast compressor such as LZO, you can get performance gains simply because the volume of data to transfer is reduced. The configuration properties to enable compression for map outputs and to set the compression format are shown in Table 4-5.

Table 4-5. Map output compression properties

| Property name | Type | Default value | Description |

|---|---|---|---|

mapred.compress.map. output | boolean | false | Compress map outputs. |

mapred.map.output.compression.codec | Class | org.apache.hadoop.io.compress.DefaultCodec | The compression codec to use for map outputs. |

Here are the lines to add to enable gzip map output compression in your job:

conf.setCompressMapOutput(true);

conf.setMapOutputCompressorClass(GzipCodec.class);

Serialization is the process of turning structured objects into a byte stream for transmission over a network or for writing to persistent storage. Deserialization is the reverse process of turning a byte stream back into a series of structured objects.

Serialization appears in two quite distinct areas of distributed data processing: for interprocess communication and for persistent storage.

In Hadoop, interprocess communication between nodes in the system is implemented using remote procedure calls (RPCs). The RPC protocol uses serialization to render the message into a binary stream to be sent to the remote node, which then deserializes the binary stream into the original message. In general, it is desirable that an RPC serialization format is:

- Compact

A compact format makes the best use of network bandwidth, which is the most scarce resource in a data center.

- Fast

Interprocess communication forms the backbone for a distributed system, so it is essential that there is as little performance overhead as possible for the serialization and deserialization process.

- Extensible

Protocols change over time to meet new requirements, so it should be straightforward to evolve the protocol in a controlled manner for clients and servers. For example, it should be possible to add a new argument to a method call, and have the new servers accept messages in the old format (without the new argument) from old clients.

- Interoperable

For some systems, it is desirable to be able to support clients that are written in different languages to the server, so the format needs to be designed to make this possible.

On the face of it, the data format chosen for persistent storage would have different requirements from a serialization framework. After all, the lifespan of an RPC is less than a second, whereas persistent data may be read years after it was written. As it turns out, the four desirable properties of an RPC’s serialization format are also crucial for a persistent storage format. We want the storage format to be compact (to make efficient use of storage space), fast (so the overhead in reading or writing terabytes of data is minimal), extensible (so we can transparently read data written in an older format), and interoperable (so we can read or write persistent data using different languages).

Hadoop uses its own serialization format, Writables, which is certainly compact and fast, but not so easy to extend or use from languages other than Java. Since Writables are central to Hadoop (most MapReduce programs use them for their key and value types), we look at them in some depth in the next three sections, before looking at serialization frameworks in general, and then Avro (a serialization system that was designed to overcome some of the limitations of Writables) in more detail.

The Writable interface defines two methods: one for

writing its state to a DataOutput

binary stream, and one for reading its state from a DataInput binary stream:

package org.apache.hadoop.io;

import java.io.DataOutput;

import java.io.DataInput;

import java.io.IOException;

public interface Writable {

void write(DataOutput out) throws IOException;

void readFields(DataInput in) throws IOException;

}Let’s look at a particular Writable to see what we can do with it. We

will use IntWritable, a wrapper for a Java

int. We can create one and set its

value using the set()

method:

IntWritable writable = new IntWritable();

writable.set(163);

Equivalently, we can use the constructor that takes the integer value:

IntWritable writable = new IntWritable(163);

To examine the serialized form of the IntWritable, we write a small helper method

that wraps a java.io.ByteArrayOutputStream in a

java.io.DataOutputStream (an implementation

of java.io.DataOutput) to capture

the bytes in the serialized stream:

public static byte[] serialize(Writable writable) throws IOException {

ByteArrayOutputStream out = new ByteArrayOutputStream();

DataOutputStream dataOut = new DataOutputStream(out);

writable.write(dataOut);

dataOut.close();

return out.toByteArray();

}

An integer is written using four bytes (as we see using JUnit 4 assertions):

byte[] bytes = serialize(writable);

assertThat(bytes.length, is(4));

The bytes are written in big-endian order (so the most

significant byte is written to the stream first, this is dictated by

the java.io.DataOutput

interface), and we can see their hexadecimal representation by using a

method on Hadoop’s StringUtils:

assertThat(StringUtils.byteToHexString(bytes), is("000000a3"));

Let’s try deserialization. Again, we create a helper

method to read a Writable object

from a byte array:

public static byte[] deserialize(Writable writable, byte[] bytes)

throws IOException {

ByteArrayInputStream in = new ByteArrayInputStream(bytes);

DataInputStream dataIn = new DataInputStream(in);

writable.readFields(dataIn);

dataIn.close();

return bytes;

}

We construct a new, value-less, IntWritable, then call deserialize() to read from the output data

that we just wrote. Then we check that its value, retrieved using the

get() method, is the original

value, 163:

IntWritable newWritable = new IntWritable();

deserialize(newWritable, bytes);

assertThat(newWritable.get(), is(163));

IntWritable

implements the WritableComparable

interface, which is just a subinterface of the Writable and java.lang.Comparable interfaces:

package org.apache.hadoop.io;

public interface WritableComparable<T> extends Writable, Comparable<T> {

}Comparison of types is crucial for MapReduce, where there is a

sorting phase during which keys are compared with one another. One

optimization that Hadoop provides is the RawComparator

extension of Java’s Comparator:

package org.apache.hadoop.io;

import java.util.Comparator;

public interface RawComparator<T> extends Comparator<T> {

public int compare(byte[] b1, int s1, int l1, byte[] b2, int s2, int l2);

}This interface permits implementors to compare records read

from a stream without deserializing them into objects, thereby

avoiding any overhead of object creation. For example, the

comparator for IntWritables

implements the raw compare()

method by reading an integer from each of the byte arrays b1 and b2 and comparing them directly, from the

given start positions (s1 and

s2) and lengths (l1 and l2).

WritableComparator is a

general-purpose implementation of RawComparator for WritableComparable classes. It

provides two main functions. First, it provides a default

implementation of the raw compare() method that deserializes the

objects to be compared from the stream and invokes the object

compare() method. Second, it acts

as a factory for RawComparator

instances (that Writable

implementations have registered). For example, to obtain a

comparator for IntWritable, we

just use:

RawComparator<IntWritable> comparator = WritableComparator.get(IntWritable.class);

The comparator can be used to compare two IntWritable objects:

IntWritable w1 = new IntWritable(163);

IntWritable w2 = new IntWritable(67);

assertThat(comparator.compare(w1, w2), greaterThan(0));

or their serialized representations:

byte[] b1 = serialize(w1);

byte[] b2 = serialize(w2);

assertThat(comparator.compare(b1, 0, b1.length, b2, 0, b2.length),

greaterThan(0));

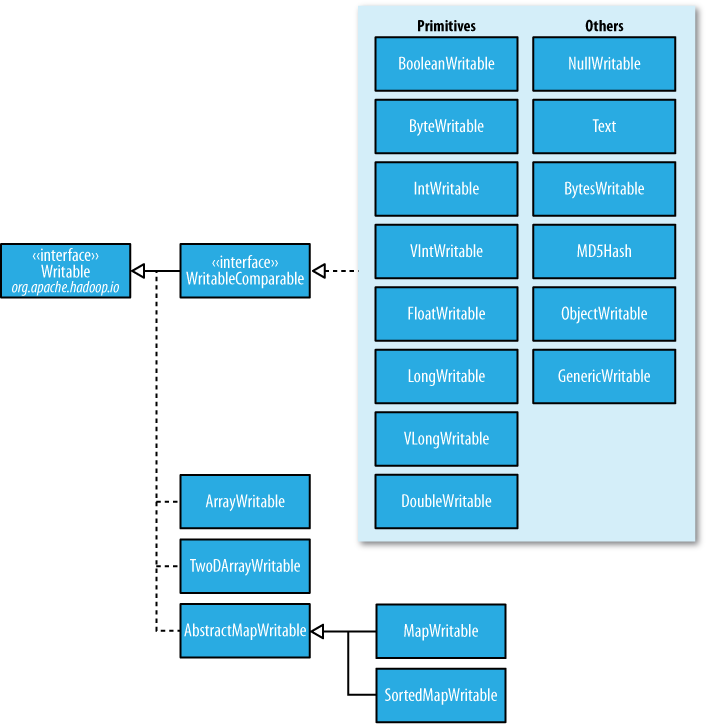

Hadoop comes with a large selection of Writable classes in the org.apache.hadoop.io package. They form the

class hierarchy shown in Figure 4-1.

There are Writable

wrappers for all the Java primitive types (see Table 4-6) except short and char (both of which can be stored in an

IntWritable). All have a get() and a set() method for retrieving and storing

the wrapped value.

Table 4-6. Writable wrapper classes for Java primitives

| Java primitive | Writable implementation | Serialized size (bytes) |

|---|---|---|

boolean | BooleanWritable | 1 |

byte | ByteWritable | 1 |

int | IntWritable | 4 |

VIntWritable | 1–5 | |

float | FloatWritable | 4 |

long | LongWritable | 8 |

VLongWritable | 1–9 | |

double | DoubleWritable | 8 |

When it comes to encoding integers, there is a choice between

the fixed-length formats (IntWritable and LongWritable) and the variable-length

formats (VIntWritable and

VLongWritable). The

variable-length formats use only a single byte to encode the value

if it is small enough (between –112 and 127, inclusive); otherwise,

they use the first byte to indicate whether the value is positive or

negative, and how many bytes follow. For example, 163 requires two

bytes:

byte[] data = serialize(new VIntWritable(163));

assertThat(StringUtils.byteToHexString(data), is("8fa3"));

How do you choose between a fixed-length and a variable-length

encoding? Fixed-length encodings are good when the distribution of

values is fairly uniform across the whole value space, such as a

(well-designed) hash function. Most numeric variables tend to have

nonuniform distributions, and on average the variable-length

encoding will save space. Another advantage of variable-length

encodings is that you can switch from VIntWritable to VLongWritable, since their encodings are

actually the same. So by choosing a variable-length representation,

you have room to grow without committing to an 8-byte long representation from the

beginning.

Text is a Writable for UTF-8 sequences. It can be

thought of as the Writable

equivalent of java.lang.String.

Text is a

replacement for the UTF8 class, which

was deprecated because it didn’t support strings whose encoding was

over 32,767 bytes, and because it used Java’s modified UTF-8.

The Text class uses an

int (with a variable-length

encoding) to store the number of bytes in the string encoding, so

the maximum value is 2 GB. Furthermore, Text uses standard UTF-8, which makes it

potentially easier to interoperate with other tools that understand

UTF-8.

Because of its emphasis on using standard UTF-8, there are

some differences between Text

and the Java String class.

Indexing for the Text class is in terms of position in

the encoded byte sequence, not the Unicode character in the

string, or the Java char code

unit (as it is for String). For

ASCII strings, these three concepts of index position coincide.

Here is an example to demonstrate the use of the charAt() method:

Text t = new Text("hadoop");

assertThat(t.getLength(), is(6));

assertThat(t.getBytes().length, is(6));

assertThat(t.charAt(2), is((int) 'd'));

assertThat("Out of bounds", t.charAt(100), is(-1));

Notice that charAt()

returns an int representing a

Unicode code point, unlike the String variant that returns a char. Text also has a find() method, which is analogous to

String’s indexOf():

Text t = new Text("hadoop");

assertThat("Find a substring", t.find("do"), is(2));

assertThat("Finds first 'o'", t.find("o"), is(3));

assertThat("Finds 'o' from position 4 or later", t.find("o", 4), is(4));

assertThat("No match", t.find("pig"), is(-1));

When we start using characters that are encoded with more

than a single byte, the differences between Text and String become clear. Consider the

Unicode characters shown in Table 4-7.[34]

Table 4-7. Unicode characters

| Unicode code point | U+0041 | U+00DF | U+6771 | U+10400 |

| Name | LATIN CAPITAL LETTER A | LATIN SMALL LETTER SHARP S | N/A (a unified Han ideograph) | DESERET CAPITAL LETTER LONG I |

| UTF-8 code units | 41 | c3 9f | e6 9d b1 | f0 90 90 80 |

| Java representation | \u0041 | \u00DF | \u6771 | \uuD801\uDC00 |

All but the last character in the table, U+10400, can be

expressed using a single Java char. U+10400 is a supplementary

character and is represented by two Java chars, known as a surrogate pair. The

tests in Example 4-5 show the

differences between String and

Text when processing a string

of the four characters from Table 4-7.

Example 4-5. Tests showing the differences between the String and Text classes

public class StringTextComparisonTest {

@Test

public void string() throws UnsupportedEncodingException {

String s = "\u0041\u00DF\u6771\uD801\uDC00";

assertThat(s.length(), is(5));

assertThat(s.getBytes("UTF-8").length, is(10));

assertThat(s.indexOf("\u0041"), is(0));

assertThat(s.indexOf("\u00DF"), is(1));

assertThat(s.indexOf("\u6771"), is(2));

assertThat(s.indexOf("\uD801\uDC00"), is(3));

assertThat(s.charAt(0), is('\u0041'));

assertThat(s.charAt(1), is('\u00DF'));

assertThat(s.charAt(2), is('\u6771'));

assertThat(s.charAt(3), is('\uD801'));

assertThat(s.charAt(4), is('\uDC00'));

assertThat(s.codePointAt(0), is(0x0041));

assertThat(s.codePointAt(1), is(0x00DF));

assertThat(s.codePointAt(2), is(0x6771));

assertThat(s.codePointAt(3), is(0x10400));

}

@Test

public void text() {

Text t = new Text("\u0041\u00DF\u6771\uD801\uDC00");

assertThat(t.getLength(), is(10));

assertThat(t.find("\u0041"), is(0));

assertThat(t.find("\u00DF"), is(1));

assertThat(t.find("\u6771"), is(3));

assertThat(t.find("\uD801\uDC00"), is(6));

assertThat(t.charAt(0), is(0x0041));

assertThat(t.charAt(1), is(0x00DF));

assertThat(t.charAt(3), is(0x6771));

assertThat(t.charAt(6), is(0x10400));

}

}

The test confirms that the length of a String is the number of char code units it contains (5, one from

each of the first three characters in the string, and a surrogate

pair from the last), whereas the length of a Text object is the number of bytes in

its UTF-8 encoding (10 = 1+2+3+4). Similarly, the indexOf() method in String returns an index in char code units, and find() for Text is a byte offset.

The charAt() method in

String returns the char code unit for the given index,

which in the case of a surrogate pair will not represent a whole

Unicode character. The codePointAt() method, indexed by

char code unit, is needed to

retrieve a single Unicode character represented as an int. In fact, the charAt() method in Text is more like the codePointAt() method than its namesake

in String. The only difference

is that it is indexed by byte offset.

Iterating over the Unicode characters in Text is complicated by the use of byte

offsets for indexing, since you can’t just increment the index.

The idiom for iteration is a little obscure (see Example 4-6): turn the Text object into a java.nio.ByteBuffer, then repeatedly

call the bytesToCodePoint()

static method on Text with the

buffer. This method extracts the next code point as an int and updates the position in the

buffer. The end of the string is detected when bytesToCodePoint() returns –1.

Example 4-6. Iterating over the characters in a Text object

public class TextIterator {

public static void main(String[] args) {

Text t = new Text("\u0041\u00DF\u6771\uD801\uDC00");

ByteBuffer buf = ByteBuffer.wrap(t.getBytes(), 0, t.getLength());

int cp;

while (buf.hasRemaining() && (cp = Text.bytesToCodePoint(buf)) != -1) {

System.out.println(Integer.toHexString(cp));

}

}

}

Running the program prints the code points for the four characters in the string:

% hadoop TextIterator

41

df

6771

10400Another difference with String is that Text is mutable (like all Writable

implementations in Hadoop, except NullWritable, which is a singleton). You

can reuse a Text instance by

calling one of the set()

methods on it. For example:

Text t = new Text("hadoop");

t.set("pig");

assertThat(t.getLength(), is(3));

assertThat(t.getBytes().length, is(3));

Warning

In some situations, the byte array returned by the

getBytes() method may be

longer than the length returned by getLength():

Text t = new Text("hadoop");

t.set(new Text("pig"));

assertThat(t.getLength(), is(3));

assertThat("Byte length not shortened", t.getBytes().length,

is(6));

This shows why it is imperative that you always call

getLength() when calling

getBytes(), so you know how

much of the byte array is valid data.

BytesWritable is a

wrapper for an array of binary data. Its serialized format is an

integer field (4 bytes) that specifies the number of bytes to

follow, followed by the bytes themselves. For example, the byte

array of length two with values 3 and 5 is serialized as a 4-byte

integer (00000002) followed by

the two bytes from the array (03

and 05):

BytesWritable b = new BytesWritable(new byte[] { 3, 5 });

byte[] bytes = serialize(b);

assertThat(StringUtils.byteToHexString(bytes), is("000000020305"));

BytesWritable is mutable,

and its value may be changed by calling its set() method. As with Text, the size of the byte array returned

from the getBytes() method for

BytesWritable—the capacity—may

not reflect the actual size of the data stored in the BytesWritable. You can determine the size

of the BytesWritable by calling

getLength(). To

demonstrate:

b.setCapacity(11);

assertThat(b.getLength(), is(2));

assertThat(b.getBytes().length, is(11));

NullWritable is a

special type of Writable, as it

has a zero-length serialization. No bytes are written to, or read

from, the stream. It is used as a placeholder; for example, in

MapReduce, a key or a value can be declared as a NullWritable when you don’t need to use

that position—it effectively stores a constant empty value. NullWritable can also be useful as a key

in SequenceFile when you want to

store a list of values, as opposed to key-value pairs. It is an

immutable singleton: the instance can be retrieved by calling

NullWritable.get().

ObjectWritable is a

general-purpose wrapper for the following: Java primitives, String, enum, Writable, null, or arrays of any of these types. It

is used in Hadoop RPC to marshal and unmarshal method arguments and

return types.

ObjectWritable is useful

when a field can be of more than one type: for example, if the

values in a SequenceFile have

multiple types, then you can declare the value type as an ObjectWritable and wrap each type in an

ObjectWritable. Being a

general-purpose mechanism, it’s fairly wasteful of space since it

writes the classname of the wrapped type every time it is

serialized. In cases where the number of types is small and known

ahead of time, this can be improved by having a static array of

types, and using the index into the array as the serialized

reference to the type. This is the approach that GenericWritable

takes, and you have to subclass it to specify the types to

support.

There are four Writable collection types in the org.apache.hadoop.io package: ArrayWritable,

TwoDArrayWritable,

MapWritable, and

SortedMapWritable.

ArrayWritable and TwoDArrayWritable are Writable implementations for arrays and

two-dimensional arrays (array of arrays) of Writable instances. All the elements of an

ArrayWritable or a TwoDArrayWritable must be instances of the

same class, which is specified at construction, as follows:

ArrayWritable writable = new ArrayWritable(Text.class);

In contexts where the Writable is defined by type, such as in

SequenceFile keys or values, or

as input to MapReduce in general, you need to subclass ArrayWritable (or TwoDArrayWritable, as appropriate) to set

the type statically. For example:

public class TextArrayWritable extends ArrayWritable {

public TextArrayWritable() {

super(Text.class);

}

}

ArrayWritable and TwoDArrayWritable both have get() and set() methods, as well as a toArray() method, which creates a shallow

copy of the array (or 2D array).

MapWritable and SortedMapWritable are implementations of

java.util.Map<Writable,

Writable> and java.util.SortedMap<WritableComparable,

Writable>, respectively. The type of each key and value

field is a part of the serialization format for that field. The type

is stored as a single byte that acts as an index into an array of

types. The array is populated with the standard types in the

org.apache.hadoop.io package, but

custom Writable types are

accommodated, too, by writing a header that encodes the type array

for nonstandard types. As they are implemented, MapWritable and SortedMapWritable use positive byte values for custom types, so a maximum

of 127 distinct nonstandard Writable classes can be used in any

particular MapWritable or

SortedMapWritable instance.

Here’s a demonstration of using a MapWritable with different types for keys

and values:

MapWritable src = new MapWritable();

src.put(new IntWritable(1), new Text("cat"));

src.put(new VIntWritable(2), new LongWritable(163));

MapWritable dest = new MapWritable();

WritableUtils.cloneInto(dest, src);

assertThat((Text) dest.get(new IntWritable(1)), is(new Text("cat")));

assertThat((LongWritable) dest.get(new VIntWritable(2)), is(new

LongWritable(163)));

Conspicuous by their absence are Writable collection implementations for

sets and lists. A set can be emulated by using a MapWritable (or a SortedMapWritable for a sorted set), with

NullWritable values. For lists of

a single type of Writable,

ArrayWritable is adequate, but to

store different types of Writable

in a single list, you can use GenericWritable to wrap the elements

in an ArrayWritable.

Alternatively, you could write a general ListWritable using the ideas from MapWritable.

Hadoop comes with a useful set of Writable implementations that serve most

purposes; however, on occasion, you may need to write your own custom

implementation. With a custom Writable, you have full control over the

binary representation and the sort order. Because Writables are at the heart of the MapReduce

data path, tuning the binary representation can have a significant

effect on performance. The stock Writable implementations that come with Hadoop

are well-tuned, but for more elaborate structures, it is often better

to create a new Writable type,

rather than compose the stock types.

To demonstrate how to create a custom Writable, we shall write an implementation

that represents a pair of strings, called TextPair. The basic implementation is shown

in Example 4-7.

Example 4-7. A Writable implementation that stores a pair of Text objects

import java.io.*;

import org.apache.hadoop.io.*;

public class TextPair implements WritableComparable<TextPair> {

private Text first;

private Text second;

public TextPair() {

set(new Text(), new Text());

}

public TextPair(String first, String second) {

set(new Text(first), new Text(second));

}

public TextPair(Text first, Text second) {

set(first, second);

}

public void set(Text first, Text second) {

this.first = first;

this.second = second;

}

public Text getFirst() {

return first;

}

public Text getSecond() {

return second;

}

@Override

public void write(DataOutput out) throws IOException {

first.write(out);

second.write(out);

}

@Override

public void readFields(DataInput in) throws IOException {

first.readFields(in);

second.readFields(in);

}

@Override

public int hashCode() {

return first.hashCode() * 163 + second.hashCode();

}

@Override

public boolean equals(Object o) {

if (o instanceof TextPair) {

TextPair tp = (TextPair) o;

return first.equals(tp.first) && second.equals(tp.second);

}

return false;

}

@Override

public String toString() {

return first + "\t" + second;

}

@Override

public int compareTo(TextPair tp) {

int cmp = first.compareTo(tp.first);

if (cmp != 0) {

return cmp;

}

return second.compareTo(tp.second);

}

}

The first part of the implementation is straightforward: there

are two Text instance variables,

first and second, and associated constructors,

getters, and setters. All Writable implementations must have a

default constructor so that the MapReduce framework can instantiate

them, then populate their fields by calling

readFields(). Writable instances are mutable

and often reused, so you should take care to avoid allocating objects

in the write() or

readFields() methods.

TextPair’s

write() method serializes each Text object in turn to the output stream, by

delegating to the Text objects

themselves. Similarly, readFields()

deserializes the bytes from the input stream by delegating to each

Text object. The DataOutput and DataInput interfaces have a

rich set of methods for serializing and deserializing Java primitives,

so, in general, you have complete control over the wire format of your

Writable object.

Just as you would for any value object you write in Java, you

should override the hashCode(),

equals(), and

toString() methods from java.lang.Object. The

hashCode() method is used by the HashPartitioner (the

default partitioner in MapReduce) to choose a reduce partition, so you

should make sure that you write a good hash function that mixes well

to ensure reduce partitions are of a similar size.

Warning

If you ever plan to use your custom Writable with TextOutputFormat, then you must implement

its toString() method. TextOutputFormat calls

toString() on keys and values for their

output representation. For TextPair, we write the underlying Text objects as strings separated by a tab

character.

TextPair is an implementation

of WritableComparable, so it

provides an implementation of the compareTo()

method that imposes the ordering you would expect: it sorts by the

first string followed by the second. Notice that TextPair differs from TextArrayWritable from the previous section

(apart from the number of Text

objects it can store), since TextArrayWritable is only a Writable, not a WritableComparable.

The code for TextPair in Example 4-7

will work as it stands; however, there is a further optimization we

can make. As explained in WritableComparable and comparators,

when TextPair is being used as a

key in MapReduce, it will have to be deserialized into an object for

the compareTo() method to be invoked. What

if it were possible to compare two TextPair objects just by looking at their

serialized representations?

It turns out that we can do this, since TextPair is the concatenation of two

Text objects, and the binary

representation of a Text object

is a variable-length integer containing the number of bytes in the

UTF-8 representation of the string, followed by the UTF-8 bytes

themselves. The trick is to read the initial length, so we know how

long the first Text object’s byte

representation is; then we can delegate to Text’s RawComparator, and invoke it with the

appropriate offsets for the first or second string. Example 4-8 gives the details (note that this

code is nested in the TextPair

class).

Example 4-8. A RawComparator for comparing TextPair byte representations

public static class Comparator extends WritableComparator {

private static final Text.Comparator TEXT_COMPARATOR = new Text.Comparator();

public Comparator() {

super(TextPair.class);

}

@Override

public int compare(byte[] b1, int s1, int l1,

byte[] b2, int s2, int l2) {

try {

int firstL1 = WritableUtils.decodeVIntSize(b1[s1]) + readVInt(b1, s1);

int firstL2 = WritableUtils.decodeVIntSize(b2[s2]) + readVInt(b2, s2);

int cmp = TEXT_COMPARATOR.compare(b1, s1, firstL1, b2, s2, firstL2);

if (cmp != 0) {

return cmp;

}

return TEXT_COMPARATOR.compare(b1, s1 + firstL1, l1 - firstL1,

b2, s2 + firstL2, l2 - firstL2);

} catch (IOException e) {

throw new IllegalArgumentException(e);

}

}

}

static {

WritableComparator.define(TextPair.class, new Comparator());

}

We actually subclass WritableComparator rather than implement

RawComparator directly, since it

provides some convenience methods and default implementations. The

subtle part of this code is calculating firstL1 and firstL2, the lengths of the first Text field in each byte stream. Each is

made up of the length of the variable-length integer (returned by

decodeVIntSize() on WritableUtils) and the value it is

encoding (returned by readVInt()).

The static block registers the raw comparator so that whenever

MapReduce sees the TextPair

class, it knows to use the raw comparator as its default

comparator.

As we can see with TextPair, writing raw comparators takes

some care, since you have to deal with details at the byte level. It

is worth looking at some of the implementations of Writable in the org.apache.hadoop.io package for further

ideas, if you need to write your own. The utility methods on

WritableUtils are very handy,

too.

Custom comparators should also be written to be RawComparators, if

possible. These are comparators that implement a different sort

order to the natural sort order defined by the default comparator.

Example 4-9 shows a comparator for

TextPair, called FirstComparator, that considers only the

first string of the pair. Note that we override the

compare() method that takes objects so both

compare() methods have the same semantics.

We will make use of this comparator in Chapter 8, when we look at joins and secondary sorting in MapReduce (see Joins).

Example 4-9. A custom RawComparator for comparing the first field of TextPair byte representations

public static class FirstComparator extends WritableComparator {

private static final Text.Comparator TEXT_COMPARATOR = new Text.Comparator();

public FirstComparator() {

super(TextPair.class);

}

@Override

public int compare(byte[] b1, int s1, int l1,

byte[] b2, int s2, int l2) {

try {

int firstL1 = WritableUtils.decodeVIntSize(b1[s1]) + readVInt(b1, s1);

int firstL2 = WritableUtils.decodeVIntSize(b2[s2]) + readVInt(b2, s2);

return TEXT_COMPARATOR.compare(b1, s1, firstL1, b2, s2, firstL2);

} catch (IOException e) {

throw new IllegalArgumentException(e);

}

}

@Override

public int compare(WritableComparable a, WritableComparable b) {

if (a instanceof TextPair && b instanceof TextPair) {

return ((TextPair) a).first.compareTo(((TextPair) b).first);

}

return super.compare(a, b);

}

}

Although most MapReduce programs use Writable key and value types, this isn’t

mandated by the MapReduce API. In fact, any types can be used; the

only requirement is that there be a mechanism that translates to and

from a binary representation of each type.

To support this, Hadoop has an API for pluggable serialization

frameworks. A serialization framework is represented by an

implementation of Serialization (in the

org.apache.hadoop.io.serializer

package). WritableSerialization, for example, is the

implementation of Serialization for

Writable types.

A Serialization defines a

mapping from types to Serializer instances

(for turning an object into a byte stream) and Deserializer

instances (for turning a byte stream into an object).

Set the io.serializations

property to a comma-separated list of classnames to register Serialization implementations. Its default

value is org.apache.hadoop.io.serializer.WritableSerialization,

which means that only Writable objects can be serialized or

deserialized out of the box.

Hadoop includes a class called JavaSerialization

that uses Java Object Serialization. Although it makes it convenient

to be able to use standard Java types in MapReduce programs, like

Integer or String, Java Object Serialization is not as

efficient as Writables, so it’s not worth making this trade-off (see

the sidebar on the next page).

There are a number of other serialization frameworks that approach the problem in a different way: rather than defining types through code, you define them in a language-neutral, declarative fashion, using an interface description language (IDL). The system can then generate types for different languages, which is good for interoperability. They also typically define versioning schemes that make type evolution straightforward.

Hadoop’s own Record I/O (found in the org.apache.hadoop.record package) has an

IDL that is compiled into Writable objects, which makes it

convenient for generating types that are compatible with MapReduce.

For whatever reason, however, Record I/O was not widely used, and has been

deprecated in favor of Avro.

Apache Thrift and Google Protocol Buffers are both popular serialization frameworks, and they are commonly used as a format for persistent binary data. There is limited support for these as MapReduce formats;[35] however, Thrift is used in parts of Hadoop to provide cross-language APIs, such as the “thriftfs” contrib module, where it is used to expose an API to Hadoop filesystems (see Thrift).

In the next section, we look at Avro, an IDL-based serialization framework designed to work well with large-scale data processing in Hadoop.

Apache Avro[36] is a language-neutral data serialization system. The project was created by Doug Cutting (the creator of Hadoop) to address the major downside of Hadoop Writables: lack of language portability. Having a data format that can be processed by many languages (currently C, C++, Java, Python, and Ruby) makes it easier to share datasets with a wider audience than one tied to a single language. It is also more future-proof, allowing data to potentially outlive the language used to read and write it.

But why a new data serialization system? Avro has a set of features that, taken together, differentiate it from other systems like Apache Thrift or Google’s Protocol Buffers.[37] Like these systems and others, Avro data is described using a language-independent schema. However, unlike some other systems, code generation is optional in Avro, which means you can read and write data that conforms to a given schema even if your code has not seen that particular schema before. To achieve this, Avro assumes that the schema is always present—at both read and write time—which makes for a very compact encoding, since encoded values do not need to be tagged with a field identifier.

Avro schemas are usually written in JSON, and data is usually encoded using a binary format, but there are other options, too. There is a higher-level language called Avro IDL, for writing schemas in a C-like language that is more familiar to developers. There is also a JSON-based data encoder, which, being human-readable, is useful for prototyping and debugging Avro data.

The Avro specification precisely defines the binary format that all implementations must support. It also specifies many of the other features of Avro that implementations should support. One area that the specification does not rule on, however, is APIs: implementations have complete latitude in the API they expose for working with Avro data, since each one is necessarily language-specific. The fact that there is only one binary format is significant, since it means the barrier for implementing a new language binding is lower, and avoids the problem of a combinatorial explosion of languages and formats, which would harm interoperability.

Avro has rich schema resolution capabilities. Within certain carefully defined constraints, the schema used to read data need not be identical to the schema that was used to write the data. This is the mechanism by which Avro supports schema evolution. For example, a new, optional field may be added to a record by declaring it in the schema used to read the old data. New and old clients alike will be able to read the old data, while new clients can write new data that uses the new field. Conversely, if an old client sees newly encoded data, it will gracefully ignore the new field and carry on processing as it would have done with old data.

Avro specifies an object container format for sequences of objects—similar to Hadoop’s sequence file. An Avro data file has a metadata section where the schema is stored, which makes the file self-describing. Avro data files support compression and are splittable, which is crucial for a MapReduce data input format. Furthermore, since Avro was designed with MapReduce in mind, in the future it will be possible to use Avro to bring first-class MapReduce APIs (that is, ones that are richer than Streaming, like the Java API, or C++ Pipes) to languages that speak Avro.

Avro can be used for RPC, too, although this isn’t covered here. The Hadoop project has plans to migrate to Avro RPC, which will have several benefits, including supporting rolling upgrades, and the possibility of multilanguage clients, such as an HDFS client implemented entirely in C.

Avro defines a small number of data types, which can be used to build application-specific data structures by writing schemas. For interoperability, implementations must support all Avro types.

Avro’s primitive types are listed in Table 4-8. Each primitive type may also be

specified using a more verbose form, using the type attribute, such as:

{ "type": "null" }Table 4-8. Avro primitive types

| Type | Description | Schema |

|---|---|---|

null | The absence of a value | "null" |

boolean | A binary value | "boolean" |

int | 32-bit signed integer | "int" |

long | 64-bit signed integer | "long" |

float | Single precision (32-bit) IEEE 754 floating-point number | "float" |

double | Double precision (64-bit) IEEE 754 floating-point number | "double" |

bytes | Sequence of 8-bit unsigned bytes | "bytes" |

string | Sequence of Unicode characters | "string" |

Avro also defines the complex types listed in Table 4-9, along with a representative example of a schema of each type.

Table 4-9. Avro complex types

Each Avro language API has a representation for each Avro type

that is specific to the language. For example, Avro’s double type is represented in C, C++, and

Java by a double, in Python by a

float, and in Ruby by a Float.

What’s more, there may be more than one representation, or mapping, for a language. All languages support a dynamic mapping, which can be used even when the schema is not known ahead of run time. Java calls this the generic mapping.

In addition, the Java and C++ implementations can generate code to represent the data for an Avro schema. Code generation, which is called the specific mapping in Java, is an optimization that is useful when you have a copy of the schema before you read or write data. Generated classes also provide a more domain-oriented API for user code than generic ones.

Java has a third mapping, the reflect mapping, which maps Avro types onto preexisting Java types, using reflection. It is slower than the generic and specific mappings, and is not generally recommended for new applications.

Java’s type mappings are shown in Table 4-10. As the table shows, the specific

mapping is the same as the generic one unless otherwise noted (and

the reflect one is the same as the specific one unless noted). The

specific mapping only differs from the generic one for record, enum, and fixed, all of which have generated classes

(the name of which is controlled by the name and optional namespace attribute).

Note

Why don’t the Java generic and specific mappings use Java

String to represent an Avro

string? The answer is

efficiency: the Avro Utf8

type is mutable, so it may be reused for reading or writing a

series of values. Also, Java String decodes UTF-8 at object

construction time, while Avro Utf8 does it lazily, which can

increase performance in some cases. Note that the Java reflect

mapping does use Java’s String class, since it is designed for

Java compatibility, not performance.

Table 4-10. Avro Java type mappings

| Avro type | Generic Java mapping | Specific Java mapping | Reflect Java mapping |

|---|---|---|---|

null | null type | ||

boolean | boolean | ||

int | int | short or int | |

long | long | ||

float | float | ||

double | double | ||

bytes | java.nio.ByteBuffer | Array of byte | |

string | org.apache.avro.util.Utf8 | java.lang.String | |

array | org.apache.avro.generic.GenericArray | Array or java.util.Collection | |

map | java.util.Map | ||

record | org.apache.avro.generic.GenericRecord | Generated class implementing org.apache.avro.specific.SpecificRecord. | Arbitrary user class with a zero-argument constructor. All inherited nontransient instance fields are used. |

enum | java.lang.String | Generated Java enum | Arbitrary Java enum |

fixed | org.apache.avro.generic.GenericFixed | Generated class implementing org.apache.avro.specific.SpecificFixed. | org.apache.avro.generic.GenericFixed |

union | java.lang.Object | ||

Avro provides APIs for serialization and deserialization, which are useful when you want to integrate Avro with an existing system, such as a messaging system where the framing format is already defined. In other cases, consider using Avro’s data file format.

Let’s write a Java program to read and write Avro data to and from streams. We’ll start with a simple Avro schema for representing a pair of strings as a record:

{

"type": "record",

"name": "Pair",

"doc": "A pair of strings.",

"fields": [

{"name": "left", "type": "string"},

{"name": "right", "type": "string"}

]

}If this schema is saved in a file on the classpath called

Pair.avsc (.avsc is the conventional extension for an

Avro schema), then we can load it using the following

statement:

Schema schema = Schema.parse(getClass().getResourceAsStream("Pair.avsc"));We can create an instance of an Avro record using the generic API as follows:

GenericRecord datum = new GenericData.Record(schema);

datum.put("left", new Utf8("L"));

datum.put("right", new Utf8("R"));Notice that we construct Avro Utf8

instances for the record’s string fields.

Next, we serialize the record to an output stream:

ByteArrayOutputStream out = new ByteArrayOutputStream();

DatumWriter<GenericRecord> writer = new GenericDatumWriter<GenericRecord>(schema);

Encoder encoder = new BinaryEncoder(out);

writer.write(datum, encoder);

encoder.flush();

out.close();There are two important objects here: the

DatumWriter and the

Encoder. A DatumWriter translates data objects

into the types understood by an Encoder,

which the latter writes to the output stream. Here we are using a

GenericDatumWriter, which passes the fields

of GenericRecord to the

Encoder, in this case the

BinaryEncoder.

In this example only one object is written to the stream, but

we could call write() with more

objects before closing the stream if we wanted to.

The GenericDatumWriter needs to be

passed the schema since it follows the schema to determine which

values from the data objects to write out. After we have called the

writer’s write() method, we flush the

encoder, then close the output stream.

We can reverse the process and read the object back from the byte buffer:

DatumReader<GenericRecord> reader = new GenericDatumReader<GenericRecord>(schema);

Decoder decoder = DecoderFactory.defaultFactory()

.createBinaryDecoder(out.toByteArray(), null);

GenericRecord result = reader.read(null, decoder);

assertThat(result.get("left").toString(), is("L"));

assertThat(result.get("right").toString(), is("R"));We pass null to the calls

to createBinaryDecoder() and

read() since we are not reusing objects here

(the decoder or the record, respectively).

Let’s look briefly at the equivalent code using the specific

API. We can generate the Pair class from the

schema file, by using the Avro tools JAR file:[38]

%java -jar $AVRO_HOME/avro-tools-*.jar compile schema \>avro/src/main/resources/Pair.avsc avro/src/main/java

Then instead of a GenericRecord we

construct a Pair instance, which we write to

the stream using a SpecificDatumWriter, and

read back using a SpecificDatumReader:

Pair datum = new Pair(); datum.left = new Utf8("L"); datum.right = new Utf8("R"); ByteArrayOutputStream out = new ByteArrayOutputStream(); DatumWriter<Pair> writer = new SpecificDatumWriter<Pair>(Pair.class); Encoder encoder = new BinaryEncoder(out); writer.write(datum, encoder); encoder.flush(); out.close(); DatumReader<Pair> reader = new SpecificDatumReader<Pair>(Pair.class); Decoder decoder = DecoderFactory.defaultFactory() .createBinaryDecoder(out.toByteArray(), null); Pair result = reader.read(null, decoder); assertThat(result.left.toString(), is("L")); assertThat(result.right.toString(), is("R"));

Avro’s object container file format is for storing sequences of Avro objects. It is very similar in design to Hadoop’s sequence files, which are described in SequenceFile. The main difference is that Avro data files are designed to be portable across languages, so, for example, you can write a file in Python and read it in C (we will do exactly this in the next section).

A data file has a header containing metadata, including the Avro schema and a sync marker, followed by a series of (optionally compressed) blocks containing the serialized Avro objects. Blocks are separated by a sync marker that is unique to the file (the marker for a particular file is found in the header) and that permits rapid resynchronization with a block boundary after seeking to an arbitrary point in the file, such as an HDFS block boundary. Thus, Avro data files are splittable, which makes them amenable to efficient MapReduce processing.

Writing Avro objects to a data file is similar to writing to a

stream. We use a DatumWriter, as before, but instead

of using an Encoder, we create a DataFileWriter instance with

the DatumWriter. Then we can create a new

data file (which, by convention, has a .avro extension) and append objects to

it:

File file = new File("data.avro");

DatumWriter<GenericRecord> writer = new GenericDatumWriter<GenericRecord>(schema);

DataFileWriter<GenericRecord> dataFileWriter =

new DataFileWriter<GenericRecord>(writer);

dataFileWriter.create(schema, file);

dataFileWriter.append(datum);

dataFileWriter.close();The objects that we write to the data file must conform to the

file’s schema, otherwise an exception will be thrown when we call

append().

This example demonstrates writing to a local file

(java.io.File in the previous snippet), but

we can write to any java.io.OutputStream by

using the overloaded create() method on

DataFileWriter. To write a file to HDFS, for

example, get an OutputStream by calling

create() on

FileSystem (see Writing Data).

Reading back objects from a data file is similar to the

earlier case of reading objects from an in-memory stream, with one

important difference: we don’t have to specify a schema since it is

read from the file metadata. Indeed, we can get the schema from the

DataFileReader instance, using

getSchema(), and verify that it is the same

as the one we used to write the original object with:

DatumReader<GenericRecord> reader = new GenericDatumReader<GenericRecord>();

DataFileReader<GenericRecord> dataFileReader =

new DataFileReader<GenericRecord>(file, reader);

assertThat("Schema is the same", schema, is(dataFileReader.getSchema()));DataFileReader is a regular Java

iterator, so we can iterate through its data objects by calling its

hasNext() and

next() methods. The following snippet

checks that there is only one record, and that it has the expected

field values:

assertThat(dataFileReader.hasNext(), is(true));

GenericRecord result = dataFileReader.next();

assertThat(result.get("left").toString(), is("L"));

assertThat(result.get("right").toString(), is("R"));

assertThat(dataFileReader.hasNext(), is(false));Rather than using the usual next()

method, however, it is preferable to use the overloaded form that

takes an instance of the object to be returned (in this case,

GenericRecord), since

it will reuse the object and save allocation and garbage collection

costs for files containing many objects. The following is

idiomatic:

GenericRecord record = null;

while (dataFileReader.hasNext()) {

record = dataFileReader.next(record);

// process record

}If object reuse is not important, you can use this shorter form:

for (GenericRecord record : dataFileReader) {

// process record

}For the general case of reading a file on a Hadoop file

system, use Avro’s FsInput to specify the

input file using a Hadoop Path object.

DataFileReader actually offers random access

to Avro data file (via its seek() and

sync() methods); however, in many cases,

sequential streaming access is sufficient, for which

DataFileStream should be used.

DataFileStream can read from any Java

InputStream.

To demonstrate Avro’s language interoperability, let’s write a data file using one language (Python) and read it back with another (C).

The program in Example 4-10

reads comma-separated strings from standard input and writes them

as Pair records to an Avro data

file. Like the Java code for writing a data file, we create a

DatumWriter and a

DataFileWriter object. Notice that we have

embedded the Avro schema in the code, although we could equally

well have read it from a file.

Python represents Avro records as dictionaries; each line

that is read from standard in is turned into a dict object and appended to the

DataFileWriter.

Example 4-10. A Python program for writing Avro record pairs to a data file

import os

import string

import sys

from avro import schema

from avro import io

from avro import datafile

if __name__ == '__main__':

if len(sys.argv) != 2:

sys.exit('Usage: %s <data_file>' % sys.argv[0])

avro_file = sys.argv[1]

writer = open(avro_file, 'wb')

datum_writer = io.DatumWriter()

schema_object = schema.parse("""\

{ "type": "record",

"name": "Pair",

"doc": "A pair of strings.",

"fields": [

{"name": "left", "type": "string"},

{"name": "right", "type": "string"}

]

}""")

dfw = datafile.DataFileWriter(writer, datum_writer, schema_object)

for line in sys.stdin.readlines():

(left, right) = string.split(line.strip(), ',')

dfw.append({'left':left, 'right':right});

dfw.close()Before we can run the program, we need to install Avro for Python:

%easy_install avro

To run the program, we specify the name of the file to write output to (pairs.avro) and send input pairs over standard in, marking the end of file by typing Control-D: