Chapter 4. The Varnish Configuration Language

As mentioned before, Varnish is a reverse caching proxy. There are many other reverse proxies out there that do caching, even in the open source ecosystem. The main reason Varnish is so popular is, without a doubt, Varnish Configuration Language (VCL)—a domain-specific language used to control the behavior of Varnish.

The flexibility that VCL offers is unprecedented in this kind of software. It’s more a matter of expressing and controlling the behavior by programming it rather than by declaring it in a configuration file. Because of the rich API that is exposed through the objects in VCL, the level of detail with which you can tune Varnish is second to none.

The curly braces, the semicolon statement endings, and the commenting style in VCL remind you of programming languages like C, C++, and Perl. Maybe that’s why VCL feels so intuitive; it sure beats defining rules in an XML file.

The Varnish Configuration Language doesn’t just feel like C, it actually gets compiled to C and dynamically loaded as a shared object when the VCL file is loaded by the Varnish runtime. We can even call it transpiling, because we convert a piece of source code to source code in another programming language.

Note

If you’re curious what the C code looks like, run the varnishd program with the -C option and point it to your VCL file with the -f option. Varnish compiles the VCL, prints the generated C code, and exits.

In this chapter you’ll learn how VCL will allow you to hook into the finite state machine of Varnish to programmatically extend its behavior. We’ll cover the various subroutines, objects, and variables that allow you to extend this behavior.

I already hinted at the built-in VCL in Chapter 3. In this chapter you’ll see the actual code of the built-in VCL.

Hooks and Subroutines

VCL is not the kind of language where you start typing away in an empty file or within a main method; it actually restricts you and only allows you to hook into certain aspects of the Varnish execution flow. This execution flow is defined in a finite state machine.

The hooks represent specific stages of the Varnish flow. The behavior of Varnish in these stages is expressed through various built-in subroutines. You define a subroutine in your VCL file, extend the caching behavior in that subroutine, and issue a reload of that VCL file to enable that behavior.

Every subroutine has a fixed set of return statements that represent a state change in the flow.

Warning

If you don’t explicitly define a return statement, Varnish will fall back on the built-in VCL that is hardcoded in the system. This can potentially undo the extended behavior you defined in your VCL file.

This is a common mistake, one I made very early on. And mind you: falling back on the built-in VCL is actually a good thing, because the built-in VCL complies with HTTP best practices.

I actually advise you to minimize the use of custom VCL and rely on the built-in VCL as much as possible.

You’ll Spend 90% of Your Time in vcl_recv

When you write VCL, you’ll spend about 90% of your time in vcl_recv, 9% in vcl_backend_response, and the remaining 1% in various other subroutines.

Client-Side Subroutines

Here’s a list of client-side subroutines:

- vcl_recv: Executed at the beginning of each request.
- vcl_pipe: Pass the request directly to the backend without caring about caching.
- vcl_pass: Pass the request directly to the backend. The result is not stored in cache.
- vcl_hit: Called when a cache lookup is successful.
- vcl_miss: Called when an object was not found in cache.
- vcl_hash: Called after vcl_recv to create a hash value for the request. This is used as a key to look up the object in Varnish.
- vcl_purge: Called when a purge was executed on an object and that object was successfully evicted from the cache.
- vcl_deliver: Executed at the end of a request when the output is returned to the client.
- vcl_synth: Return a synthetic object to the client. This object didn’t originate from a backend fetch, but was synthetically composed in VCL.

Backend Subroutines

And here’s a list of backend subroutines:

- vcl_backend_fetch: Called before sending a request to the backend server.
- vcl_backend_response: Called directly after successfully receiving a response from the backend server.
- vcl_backend_error: Executed when a backend fetch was not successful or when the maximum number of retries has been exceeded.

Custom Subroutines

You can also define your own subroutines and call them from within your VCL code. Custom subroutines can be used to organize and modularize VCL code, mostly in an attempt to reduce code duplication.

The following example consists of a remove_ga_cookies subroutine that contains find and replace logic using regular expressions. The end result is the removal of Google Analytics tracking cookies from the incoming request.

Here’s the file that contains the custom subroutine:

```vcl
sub remove_ga_cookies {
    # Remove any Google Analytics based cookies
    set req.http.Cookie = regsuball(req.http.Cookie, "__utm.=[^;]+(; )?", "");
    set req.http.Cookie = regsuball(req.http.Cookie, "_ga=[^;]+(; )?", "");
    set req.http.Cookie = regsuball(req.http.Cookie, "_gat=[^;]+(; )?", "");
    set req.http.Cookie = regsuball(req.http.Cookie, "utmctr=[^;]+(; )?", "");
    set req.http.Cookie = regsuball(req.http.Cookie, "utmcmd.=[^;]+(; )?", "");
    set req.http.Cookie = regsuball(req.http.Cookie, "utmccn.=[^;]+(; )?", "");
}
```

Here’s how you call that subroutine:

```vcl
include "custom_subroutines.vcl";

sub vcl_recv {
    call remove_ga_cookies;
}
```
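One caveat: if every cookie in the header was a tracking cookie, the regsuball calls may leave an empty Cookie header behind, and the built-in VCL can still treat that as a request with cookies. Here is a minimal sketch, assuming the same custom_subroutines.vcl file, that only calls the subroutine when a Cookie header exists and drops the header when nothing useful is left:

```vcl
include "custom_subroutines.vcl";

sub vcl_recv {
    # Only bother stripping cookies when the request actually carries some
    if (req.http.Cookie) {
        call remove_ga_cookies;

        # If nothing but whitespace is left, remove the header entirely so
        # the built-in VCL no longer sees a Cookie header on this request
        if (req.http.Cookie ~ "^\s*$") {
            unset req.http.Cookie;
        }
    }
}
```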

Return Statements

Whereas the VCL subroutines represent the different states of the state machine, the return statement within each subroutine allows for state changes.

If you specify a valid return statement in a subroutine, the corresponding action will be executed and a transition to the corresponding state will happen. As mentioned before: when you don’t specify a return statement, the execution of the subroutine will continue and Varnish will fall back on the built-in VCL.

Here’s a list of valid return statements:

- hash: Look the object up in cache.
- pass: Pass the request off to the backend, but don’t cache the result.
- pipe: Pass the request off to the backend and bypass any caching logic.
- synth: Stop the execution and immediately return synthetic output. This return statement takes an HTTP status code and a message.
- purge: Evict the object and its variants from cache. The URL of the request will be used as an identifier.
- fetch: Pass the request off to the backend and try to cache the response.
- restart: Restart the transaction and increase the req.restarts counter until max_restarts is reached.
- deliver: Send the response back to the client.
- miss: Synchronously refresh the object from the backend, despite a hit.
- lookup: Use the hash to look an object up in cache.
- abandon: Abandon a backend request and return an HTTP 503 (Service Unavailable) error.

The Execution Flow

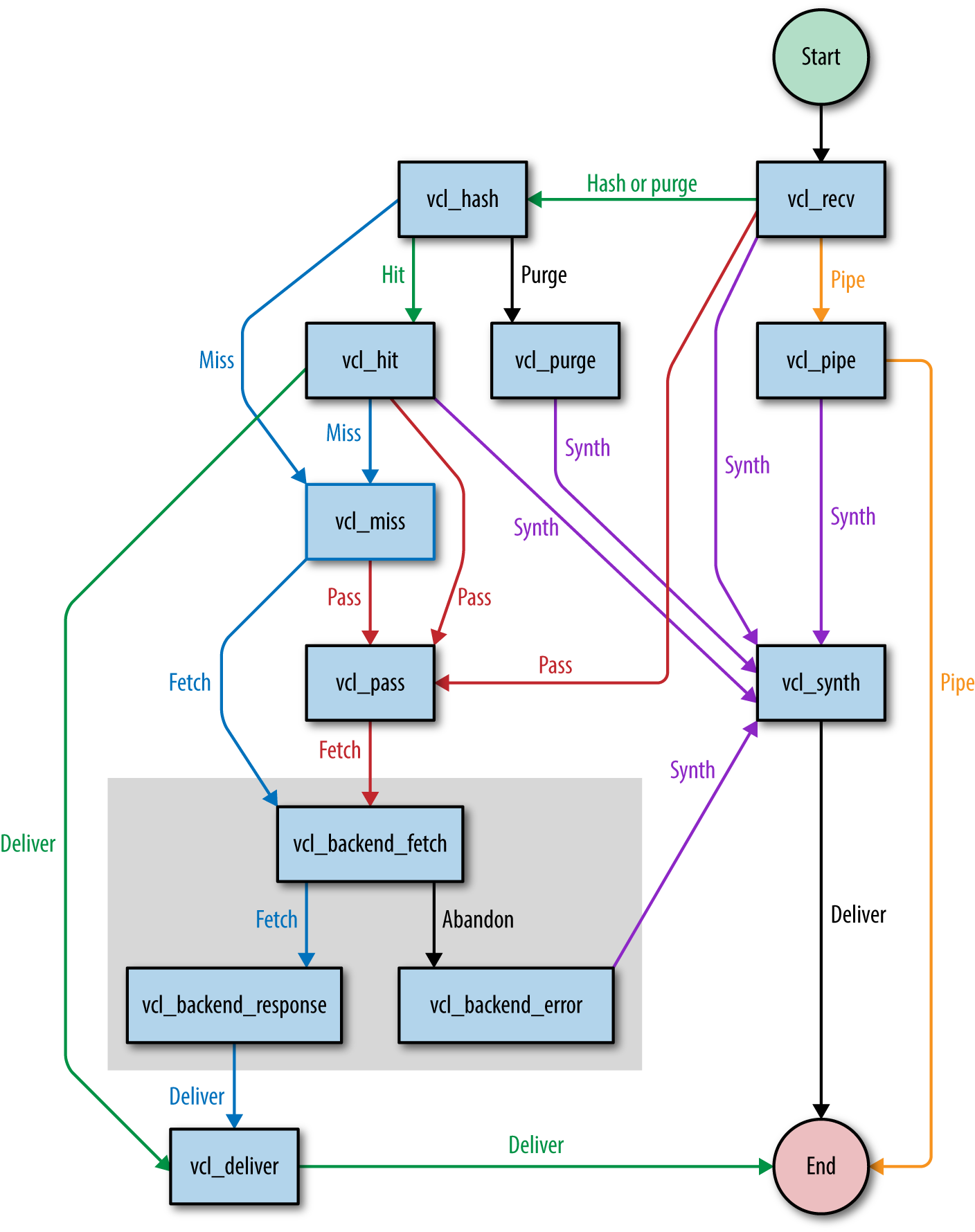

In Chapter 3, I talked about the built-in VCL and in the previous section I listed a set of subroutines and return statements. It’s time to put all the pieces of the puzzle together and compose the execution flow of Varnish.

In Chapter 1, I referred to the finite state machine that Varnish uses. Let’s have a look at it and see how Varnish transitions between states and what causes these transitions.

Figure 4-1 shows a simplified flowchart that explains the execution flow.

Figure 4-1. The simplified flow of execution in Varnish

We can split up the flow into two parts:

- Backend fetches (the gray box)
- Request and response handling (the rest of the flowchart)

Note

The purpose of the split is to handle backend fetches asynchronously. By doing that, Varnish can serve stale data while a new version of the cached object is being fetched. This means less request queuing when the backend is slow.

We have now reached a point where the subroutines start making sense. To summarize, let’s repeat some of the important points of the execution flow:

- Every request starts in vcl_recv.
- Cache lookups happen in vcl_hash.
- Non-cacheable requests are directly passed to the backend in vcl_pass. Responses are not cached.
- Items that were found in cache are handled by vcl_hit.
- Items that were not found are handled by vcl_miss.
- Cache misses or passed requests are fetched from the backend via vcl_backend_fetch.
- Backend responses are handled by vcl_backend_response.
- When a backend fetch fails, the error is handled by vcl_backend_error.
- Valid responses that were cached, passed, or missed are delivered by vcl_deliver.

At this point you know the basic vocabulary we’ll use to refer to the different stages of the finite state machine. Now it’s time to learn about the VCL syntax and the VCL objects in order to modify HTTP requests and responses and in order to transition to other stages of the flow.

VCL Syntax

If you want to hook into the Varnish execution flow and extend the subroutines, you’d better know the syntax. Well, let’s talk syntax.

Warning

Varnish version 4 features a quite significant VCL syntax change compared to version 3: every VCL file should start with vcl 4.0;.

Many of the VCL examples in this book do not begin with vcl 4.0; because I assume they’re just extracts and not the full VCL file. Please keep this in mind.
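For reference, here is a sketch of what a complete, minimal Varnish 4 VCL file might look like, with the version declaration on the very first line. The backend address and port are assumptions; adjust them to your own setup.

```vcl
vcl 4.0;

# Hypothetical backend: an application server listening on localhost:8080
backend default {
    .host = "127.0.0.1";
    .port = "8080";
}

sub vcl_recv {
    # Only admin URLs get an explicit return statement; every other request
    # falls through to the built-in vcl_recv logic
    if (req.url ~ "^/admin") {
        return(pass);
    }
}
```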

The full VCL reference manual can be found on the Varnish website.

Operators

VCL has a bunch of operators you can use to assign, compare, and match values.

Here’s an example where we combine some operators:

```vcl
sub vcl_recv {
    if(req.method == "PURGE" || req.method == "BAN") {
        return(purge);
    }
    if(req.method != "GET" && req.method != "HEAD") {
        return(pass);
    }
    if(req.url ~ "^/products/[0-9]+/"){
        set req.http.x-type = "product";
    }
}
```

- We use the assignment operator (=) to assign values to variables or objects.
- We use the comparison operator (==) to compare values. It returns true if both values are equal; otherwise, false is returned. The inequality operator (!=) does the opposite.
- We use the match operator (~) to perform a regular expression match. If the value matches the regular expression, true is returned; otherwise, false is returned.
- The negation operator (!) returns the inverse logical state.
- The logical and operator (&&) returns true if both operands return true; otherwise, false is returned.
- The logical or operator (||) returns true if at least one of the operands returns true; otherwise, false is returned.

In the preceding example, we check whether:

- The request method is either PURGE or BAN.
- The request method is neither GET nor HEAD.
- The request URL matches a regular expression that looks for product URLs.

There’s also the less than operator (<), the greater than operator (>), the less than or equals operator (<=), and the greater than or equals operator (>=). Go to the operator section of the Varnish documentation site to learn more.
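As an illustration of a numeric comparison, here is a sketch that applies the greater than operator to obj.hits in vcl_deliver to tell clients whether a response came from cache. The X-Cache header name is an assumption, not something Varnish sets on its own.

```vcl
sub vcl_deliver {
    # obj.hits counts the cache hits on this object; 0 means it was not served from cache
    if (obj.hits > 0) {
        set resp.http.X-Cache = "HIT";
    } else {
        set resp.http.X-Cache = "MISS";
    }
}
```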

Conditionals

if and else statements—you probably know what they do. Let’s skip the theory and just go for an example:

```vcl
sub vcl_recv {
    if(req.url == "/"){
        return(pass);
    } elseif(req.url == "/test") {
        return(synth(200,"Test succeeded"));
    } else {
        return(pass);
    }
}
```

Basically, VCL supports if, else, and elseif (which can also be written as elsif, elif, or else if). That’s it!

Comments

Comments are parts of your VCL that the compiler ignores; you use them to describe what your code does.

VCL offers three ways to add comments to your VCL:

- Single-line comments using a double slash (//)
- Single-line comments using a hash (#)
- Multiline comments in a comment block that is delimited by /* and */

Here’s a piece of VCL code that uses all three commenting styles:

```vcl
sub vcl_recv {
    // Single line of out-commented VCL.
    # Another way of commenting out a single line.
    /*
        Multi-line block of commented-out VCL.
    */
}
```

Scalar Values

You can use strings, integers, and booleans—your typical scalar values—in VCL. VCL also supports time and durations.

Let’s figure out what we can do with those so-called scalar values.

Strings

Strings are enclosed between double quotes and cannot contain newlines. Nor can they contain double quotes, obviously. If you need newlines or double quotes in your strings, use long strings, which start with {" and end with "}.

Let’s see some code. Here’s an example of normal and long strings:

```vcl
sub vcl_recv {
    set req.http.x-test = "testing 123";
    set req.http.x-test-long = {"testing '123', or even "123" for that matter"};
    set req.http.x-test-long-newline = {"testing '123',
or even "123"
for that matter"};
}
```

Note

Strings are easy—just remember that long strings allow new lines and double quotes, whereas regular strings don’t.

Integers

Nothing much to say about integers: they’re just numbers. When you use integers in a string context, they get cast to strings.

Here’s an example of a valid use of integers:

```vcl
sub vcl_recv {
    return(synth(200,"All good"));
}
```

The first argument of the synth function requires an integer, so we gave it an integer.

```vcl
sub vcl_recv {
    return(synth(200,200));
}
```

The preceding example is pretty meaningless; the only thing it does is prove that integers get cast to strings.

Durations

Another type that VCL supports is durations. These are used for timeouts, time-to-live, age, grace, keep, and so on.

A duration looks like a number with a string suffix. The suffix can be any of the following values:

- ms: milliseconds
- s: seconds
- m: minutes
- h: hours
- d: days
- w: weeks
- y: years

So if we want the duration to be three weeks, we define the duration as 3w.

Here’s a VCL example where we set the time-to-live of the response to one hour:

```vcl
sub vcl_backend_response {
    set beresp.ttl = 1h;
}
```

Durations can contain real numbers. Here’s an example in which we cache for 1.5 hours:

```vcl
sub vcl_backend_response {
    set beresp.ttl = 1.5h;
}
```
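The same duration syntax applies to the other duration variables mentioned earlier, such as grace and keep. Here is a sketch with values chosen arbitrarily for illustration:

```vcl
sub vcl_backend_response {
    # Serve the object from cache for 10 minutes
    set beresp.ttl = 10m;
    # Allow stale content to be served for up to 6 hours after the TTL expires
    set beresp.grace = 6h;
    # Keep the object around for another day so it can be revalidated
    # with a conditional backend request
    set beresp.keep = 1d;
}
```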

Regular Expressions

VCL supports Perl Compatible Regular Expressions (PCRE). Regular expressions can be used for pattern matching using the ~ match operator.

Regular expressions can also be used in functions like regsub and regsuball to match and replace text.

I guess you want to see some code, right? The thing is that I already showed you an example of regular expressions when I talked about the match operator. So I’ll copy/paste the same example to prove my point:

```vcl
sub vcl_recv {
    if(req.url ~ "^/products/[0-9]+/"){
        set req.http.x-type = "product";
    }
}
```
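Because the regular expression engine is PCRE, inline modifiers such as (?i) for case-insensitive matching work as well. Here is a sketch, assuming you want to strip cookies from requests for static images so they can be cached; the list of extensions is only an example:

```vcl
sub vcl_recv {
    # (?i) makes the match case-insensitive, so .PNG and .png both match.
    # The backslash escapes the dot so it matches a literal period, and the
    # optional (\?.*) part allows a query string after the extension.
    if (req.url ~ "(?i)\.(png|jpe?g|gif)(\?.*)?$") {
        unset req.http.Cookie;
    }
}
```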

Functions

VCL has a set of built-in functions that perform a variety of tasks. These functions are:

- regsub
- regsuball
- hash_data
- ban
- synthetic

Regsub

regsub is a function that matches patterns based on regular expressions and is able to return subsets of these patterns. This function is used to perform find and replace on VCL variables. regsub only matches the first occurrence of a pattern.

Here’s a real-life example where we look for a language cookie and extract it from the cookie header to perform a cache variation:

```vcl
sub vcl_hash {
    if(req.http.Cookie ~ "language=(nl|fr|en|de|es)"){
        hash_data(regsub(req.http.Cookie,
            "^.*;? ?language=(nl|fr|en|de|es)( ?|;| ;).*$","\1"));
    }
}
```

Note

By putting parentheses around parts of your regular expression, you group these parts. Each group can be referenced in the substitution argument by its number: \1 refers to the first group, \2 to the second, and so on. In the previous example, \1 represents the first group, which contains the actual language we want to extract.
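Group references work just as well on other variables. Here is a sketch that reuses the product URL pattern from earlier and extracts the numeric product ID into a request header; the x-product-id header name is hypothetical:

```vcl
sub vcl_recv {
    if (req.url ~ "^/products/[0-9]+/") {
        # The whole URL is matched and replaced by \1, the first
        # parenthesized group: the numeric product ID
        set req.http.x-product-id = regsub(req.url, "^/products/([0-9]+)/.*$", "\1");
    }
}
```

For a URL like /products/42/details, the header would contain 42.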

Regsuball

The only difference between regsub and regsuball is that the latter replaces all occurrences, whereas the former only replaces the first occurrence.

When you have to perform a find and replace on a string that has multiple occurrences of the pattern you’re looking for, regsuball is the function you need!

Example? Sure! Check out Example 4-1.

You might remember this example from “Custom Subroutines”. If you use Google Analytics, there will be some tracking cookies in your cookie header. These cookies are controlled by JavaScript, not by the server. Because the built-in VCL doesn’t cache requests that carry cookies, they hurt our hit rate and we want them gone.

The regsuball function is going to look for all occurrences of these patterns and remove them.

The very first line is responsible for removing the following cookies:

- __utma
- __utmb
- __utmc
- __utmt
- __utmv
- __utmz

We really need regsuball to do this, because regsub would only remove the first cookie that is matched.
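To see the difference for yourself, here is a sketch that applies both functions to the same Cookie header and echoes the results back as response headers. The x-regsub and x-regsuball header names are made up for this demonstration:

```vcl
sub vcl_recv {
    # Given "Cookie: __utma=1; __utmz=2; lang=nl":
    # regsub removes only the first match, leaving "__utmz=2; lang=nl"
    set req.http.x-regsub = regsub(req.http.Cookie, "__utm.=[^;]+(; )?", "");
    # regsuball removes every match, leaving "lang=nl"
    set req.http.x-regsuball = regsuball(req.http.Cookie, "__utm.=[^;]+(; )?", "");
}

sub vcl_deliver {
    # Copy the results to the response so the client can inspect them
    set resp.http.x-regsub = req.http.x-regsub;
    set resp.http.x-regsuball = req.http.x-regsuball;
}
```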

Hash_data

The hash_data function is used in the vcl_hash subroutine and adds data to the hash that is used to identify objects in the cache.

The following example is the same one we used in the regsub example: it adds the language cookie to the hash. Because we didn’t explicitly mention a return statement, the hostname and the URL will also be added after the execution of vcl_hash:

```vcl
sub vcl_hash {
    if(req.http.Cookie ~ "language=(nl|fr|en|de|es)"){
        hash_data(regsub(req.http.Cookie,
            "^.*;? ?language=(nl|fr|en|de|es)( ?|;| ;).*$","\1"));
    }
}
```
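Cookies are just one option; any request data can be added to the hash. Here is a sketch, assuming a TLS terminator in front of Varnish that sets an X-Forwarded-Proto header, which caches the HTTP and HTTPS versions of a page separately:

```vcl
sub vcl_hash {
    # Store HTTP and HTTPS variants of the same URL as separate objects
    if (req.http.X-Forwarded-Proto) {
        hash_data(req.http.X-Forwarded-Proto);
    }
    # No return statement: the built-in vcl_hash still adds the URL and host
}
```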

Ban

The ban function is used to ban objects from the cache. All objects that match a specific pattern are invalidated by the internal ban mechanism of Varnish.

We will go into more detail on banning and purging in Chapter 5. But just for the fun of it, I’ll show you an example of a ban function:

```vcl
sub vcl_recv {
    if(req.method == "BAN") {
        ban("req.http.host == " + req.http.host + " && req.url == " + req.url);
        return(synth(200, "Ban added"));
    }
}
```

What this example does is remove objects from the cache when they’re called via the BAN HTTP method.

Warning

I know, the BAN method isn’t an official HTTP method. Don’t worry, it’s only for internal use.

Be sure to put this piece of VCL before any other code that checks for HTTP methods. Otherwise, your request might end up getting piped to the backend. This will also happen if you don’t return the synthetic response.

The ban function takes a string argument: an expression that is matched against the metadata of cached objects. The expression itself is added to the ban list, and cached objects are tested against it the next time they are requested. Objects that match are considered invalidated and are fetched again from the backend.

Synthetic

The synthetic function returns a synthetic HTTP response in which the body is the value of the argument that was passed to this function. The input argument for this function is a string. Both normal and long strings are supported.

Synthetic means that the response is not the result of a backend fetch. The response is 100% artificial and was composed through the synthetic function. You can execute the synthetic function multiple times and upon each execution the output will be added to the HTTP response body.

The actual status code of such a response is set by resp.status in the vcl_synth subroutine. The default value is, of course, 200.

The synthetic function is restricted to two subroutines:

- vcl_synth
- vcl_backend_error

These are the two contexts where no backend response is available and where a synthetic response makes sense.

Here’s a code example of synthetic responses:

```vcl
sub vcl_recv {
    return(synth(201,"I created something"));
}

sub vcl_backend_error {
    set beresp.http.Content-Type = "text/html; charset=utf-8";
    synthetic("An error occurred: " + beresp.reason + "<br />");
    synthetic("HTTP status code: " + beresp.status + "<br />");
    return(deliver);
}

sub vcl_synth {
    set resp.http.Content-Type = "text/html; charset=utf-8";
    synthetic("Message of the day: " + resp.reason + "<br />");
    synthetic("HTTP status code: " + resp.status + "<br />");
    return(deliver);
}
```

Note

Synthetic output doesn’t have to be a string literal; you can also use variables. As you can see in the preceding examples, we use the reason phrase and the HTTP status code to compose the body.

Mind you, in vcl_synth we get these variables through the resp object. This means we’re directly intercepting it from the response that will eventually be sent to the client.

In vcl_backend_error, we don’t use the resp object, but the beresp object. beresp means backend response. So an attempt has been made to fetch data from the backend, but it failed. Instead, the error message is added to the beresp.reason variable.
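Long strings come in handy here, because HTML tends to contain double quotes and newlines. Here is a sketch of a vcl_synth that renders a small HTML error page; the markup itself is just an example:

```vcl
sub vcl_synth {
    set resp.http.Content-Type = "text/html; charset=utf-8";
    # Long strings ({" ... "}) may contain double quotes and newlines,
    # and can be concatenated with variables using +
    synthetic({"<!DOCTYPE html>
<html>
  <head><title>"} + resp.status + " " + resp.reason + {"</title></head>
  <body>
    <h1 class="error">"} + resp.reason + {"</h1>
  </body>
</html>"});
    return(deliver);
}
```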

Includes

Although you will try to rely on the built-in VCL as much as possible, in some cases you’ll still end up with lots of VCL code.

After a while, the sheer amount of code makes it hard to keep an overview. Includes help you organize your code. The VCL compiler processes an include statement by loading the contents of the included file inline, at the exact spot where the statement appears.

In the example below we just load the someFile.vcl file. The contents of that file will be placed within the vcl_recv subroutine:

```vcl
sub vcl_recv {
    include "someFile.vcl";
}
```

And this is what the someFile.vcl file could look like:

```vcl
if ((req.method != "GET" && req.method != "HEAD")
    || req.http.Authorization || req.http.Cookie) {
    return (pass);
}
```

Importing Varnish Modules

Imports allow you to load Varnish modules (VMODs). These modules are written in C and they extend the behavior of Varnish and enrich the VCL syntax.

Varnish ships with a couple of VMODs that you can enable by importing them.

Here’s an example where we import the std VMOD:

```vcl
import std;

sub vcl_recv {
    set req.url = std.querysort(req.url);
}
```

The example above executes the querysort function, which returns the URL with its query string parameters sorted. This is important because the URL is part of the cache key: when clients send the same query string parameters in a different order, each variation would otherwise trigger a cache miss and be stored as a separate object.

Go to the vmod_std documentation page to learn all about this very useful VCL extension.
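The std VMOD offers more than querysort. As another sketch, std.tolower can normalize the Host header, so that Example.com and example.com end up as the same cache object (the built-in vcl_hash adds the Host header to the hash):

```vcl
import std;

sub vcl_recv {
    if (req.http.host) {
        # Hostnames are case-insensitive, so normalize them before hashing
        set req.http.host = std.tolower(req.http.host);
    }
    # Sort the query string parameters as well
    set req.url = std.querysort(req.url);
}
```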

Backends and Health Probes

All the VCL we covered so far has been restricted to the hooks that allow us to change Varnish’s behavior. But let’s not forget that Varnish is all about caching backend responses. So it’s about time we deal with the VCL aspect of backends.

In general, it looks like this:

```vcl
backend name {
    .attribute = "value";
}
```

Note

Varnish automatically connects to the backend that was defined first. Other backends can only be used by assigning them in VCL using the req.backend_hint variable.
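Here is a sketch of such an assignment, assuming a hypothetical second application server on port 8081 that serves everything under /images/:

```vcl
backend default {
    .host = "127.0.0.1";
    .port = "8080";
}

# Hypothetical second backend for image traffic
backend images {
    .host = "127.0.0.1";
    .port = "8081";
}

sub vcl_recv {
    # Requests that don't match keep using the first backend, default
    if (req.url ~ "^/images/") {
        set req.backend_hint = images;
    }
}
```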

Here’s a list of supported backend attributes:

- host: A mandatory attribute that represents the hostname or the IP address of the backend.
- port: The backend port that will be used. The default is port 80.
- connect_timeout: The amount of time Varnish waits for a connection with the backend. The default value is 3.5 seconds.
- first_byte_timeout: The amount of time Varnish waits for the first byte to be returned from the backend after the initial connection. The default value is 60 seconds.
- between_bytes_timeout: The amount of time Varnish waits between each received byte to ensure an even flow of data. The default value is 60 seconds.
- max_connections: The maximum number of simultaneous connections to the backend. When the limit is reached, new connections are dropped. Make sure your backend can handle this many connections.
- probe: The backend probe that will be used to check the health of the backend. It can be defined inline, or refer to a named probe.

Let’s throw in a code example:

```vcl
backend default {
    .host = "127.0.0.1";
    .port = "8080";
    .connect_timeout = 2s;
    .first_byte_timeout = 5s;
    .between_bytes_timeout = 1s;
    .max_connections = 150;
}
```

In the preceding example, a connection is made to the local machine on port 8080. We’re willing to wait two seconds for an initial connection. Once the connection is established, we’re going to wait up to five seconds to get the first byte. After that we want to receive bytes with a regular frequency. We’re willing to wait one second between each byte. We will allow up to 150 simultaneous connections to the backend.

Note

If more requests to the backend are queued than what is allowed by the max_connections setting, they will fail.

If any of these criteria are not met, a backend error is thrown and the execution is passed to vcl_backend_error with an HTTP 503 status code.

Without the use of a backend probe, an unhealthy backend can only be spotted when the connection fails. A so-called backend probe will poll the backend on a regular basis and check its health based on certain assertions. Probes can be defined similarly to backends.

In general, it looks like this:

```vcl
probe name {
    .attribute = "value";
}
```

Here’s a list of supported probe attributes:

- url: The URL that is requested by the probe. This defaults to /.
- request: A custom HTTP request that can be sent to the backend.
- expected_response: The expected HTTP status code that is returned by the backend. This defaults to 200.
- timeout: The amount of time the probe waits for a backend response. The default value is 2 seconds.
- interval: How often the probe is run. The default value is 5 seconds.
- window: The number of polls that are examined to determine the backend health. The default value is 8.
- threshold: The number of polls in the window that should succeed before a backend is considered healthy. This defaults to 3.
- initial: The number of polls in the window that are considered successful when Varnish starts. Defaults to threshold - 1.
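When a plain URL isn’t enough, for example because the health check needs a specific Host header, the request attribute lets you spell out the raw request line by line. Here is a sketch, assuming a hypothetical /health endpoint and www.example.com as the virtual host:

```vcl
probe health_check {
    # Each string becomes one line of the raw HTTP request sent to the backend
    .request =
        "HEAD /health HTTP/1.1"
        "Host: www.example.com"
        "Connection: close";
    .expected_response = 200;
    .interval = 5s;
    .timeout = 2s;
}

backend default {
    .host = "127.0.0.1";
    .port = "8080";
    .probe = health_check;
}
```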

Here’s an example of a backend probe that is defined inline:

```vcl
backend default {
    .host = "127.0.0.1";
    .port = "8080";
    .probe = {
        .url = "/";
        .expected_response = 200;
        .timeout = 1s;
        .interval = 1s;
        .window = 5;
        .threshold = 3;
        .initial = 2;
    }
}
```

This is what happens: Varnish connects to the backend on the local host on port 8080. A backend probe determines the backend health by polling the root URL of that same backend. For a poll to succeed, an HTTP response with a 200 status code is expected, and this response should arrive within one second.

Every second, the probe polls the backend, and the results of the last five polls are used to determine health. Of those five polls, at least three should succeed before we consider the backend to be healthy. At startup, two polls are already considered successful, so a single successful poll is enough to mark the backend as healthy.

We can also define a probe explicitly, name it, and reuse that probe for multiple backends. Here’s a code example that does that:

```vcl
probe myprobe {
    .url = "/";
    .expected_response = 200;
    .timeout = 1s;
    .interval = 1s;
    .window = 5;
    .threshold = 3;
    .initial = 2;
}

backend default {
    .host = "my.primary.backend.com";
    .probe = myprobe;
}

backend backup {
    .host = "my.backup.backend.com";
    .probe = myprobe;
}
```

This example has two backends, both running on port 80, but on separate machines. We use the myprobe probe to check the health of both machines, but we only define the probe once.
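A backup backend only pays off if your VCL actually switches to it. Here is a minimal failover sketch that uses the healthy function of the std VMOD together with the default and backup backends from the preceding example:

```vcl
import std;

sub vcl_recv {
    # Start with the primary backend
    set req.backend_hint = default;
    # If its probe reports it as sick, route the request to the backup instead
    if (!std.healthy(req.backend_hint)) {
        set req.backend_hint = backup;
    }
}
```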

In Chapter 6 we’ll cover some more advanced backend and probing topics.

Access Control Lists

Access control lists, or ACLs as we like to call them, are language constructs in VCL that contain IP addresses, IP ranges, or hostnames. Each ACL has a name, and you can match IP addresses against it in VCL.

ACLs are mostly used to restrict access to certain parts of your content or logic based on the IP address. The match can be done by using the match operator (~) in an if-clause.

Here’s a code example:

```vcl
acl allowed {
    "localhost";    # myself
    "192.0.2.0"/24; # and everyone on the local network
    ! "192.0.2.23"; # except for one specific IP
}

sub vcl_recv {
    if(!client.ip ~ allowed) {
        return(synth(403,"You are not allowed to access this page."));
    }
}
```

In the preceding example, only local connections are allowed, or connections that come from the 192.0.2.0/24 IP range, with one exception: connections from 192.0.2.23 aren’t allowed. No other IP addresses are allowed access, either—they will get an HTTP 403 error if they do try.

In Chapter 5, we’ll be using ACLs to restrict access to the cache invalidation mechanism.

VCL Variables

You’re probably already familiar with req.url and client.ip. Yes, these are VCL variables, and let me tell you, there are a lot of them.

Here’s a list of the different variable objects:

- bereq: The backend request data structure.
- beresp: The backend response variable object.
- client: The variable object that contains information about the client connection.
- local: Information about the local TCP connection.
- now: Information about the current time.
- obj: The variable object that contains information about an object that is stored in cache.
- remote: Information about the remote TCP connection. This is either the client or a proxy that sits in front of Varnish.
- req: The request variable object.
- req_top: Information about the top-level request in a tree of ESI requests.
- resp: The response variable object.
- server: Information about the Varnish server.
- storage: Information about the storage engine.

Table 4-1 lists a couple of useful variables and explains what they do. For a full list of variables, go to the variables section in the VCL part of the Varnish documentation site.

| Variable | Returns | Meaning | Readable from | Writeable from |

|---|---|---|---|---|

beresp.do_esi |

boolean |

Process the Edge Side Includes after fetching it. Defaults to false. Set it to true to parse the object for ESI directives. Will only be honored if req.esi is true. |

vcl_backend_response, vcl_backend_error |

vcl_backend_response, vcl_backend_error |

beresp.do_stream |

boolean |

Deliver the object to the client directly without fetching the whole object into varnish. If this request is passed it will not be stored in memory. |

vcl_backend_response, vcl_backend_error |

vcl_backend_response, vcl_backend_error |

beresp.grace |

duration |

Set to a period to enable grace. |

vcl_backend_response, vcl_backend_error |

vcl_backend_response, vcl_backend_error |

beresp.http. |

header |

The corresponding HTTP header. |

vcl_backend_response, vcl_backend_error |

vcl_backend_response, vcl_backend_error |

beresp.keep |

duration |

Set to a period to enable conditional backend requests. The keep time is the cache lifetime in addition to the time-to-live. |

vcl_backend_response, vcl_backend_error |

vcl_backend_response, vcl_backend_error |

beresp.reason |

string |

The HTTP status message returned by the server. |

vcl_backend_response, vcl_backend_error |

vcl_backend_response, vcl_backend_error |

beresp.status | integer | The HTTP status code returned by the server. | vcl_backend_response, vcl_backend_error | vcl_backend_response, vcl_backend_error
beresp.ttl | duration | The object’s remaining time-to-live, in seconds. | vcl_backend_response, vcl_backend_error | vcl_backend_response, vcl_backend_error
beresp.uncacheable | boolean | Setting this variable to true makes the object uncacheable; it may then be stored in the cache as a hit-for-pass object. | vcl_backend_response, vcl_backend_error | vcl_backend_response, vcl_backend_error
client.identity | string | Identification of the client, used to load balance in the client director. Defaults to | client | client
client.ip | IP | The client’s IP address. | client |
local.ip | IP | The IP address of the local end of the TCP connection. | client |
obj.age | duration | The age of the object. | vcl_hit |
obj.grace | duration | The object’s remaining grace period, in seconds. | vcl_hit |
obj.hits | integer | The number of cache hits on this object. A value of 0 indicates a cache miss. | vcl_hit, vcl_deliver |
obj.ttl | duration | The object’s remaining time-to-live, in seconds. | vcl_hit |
remote.ip | IP | The IP address of the other end of the TCP connection. This is either the client’s IP address or, when the client connects through a proxy server, that proxy’s outgoing IP address. | client |
req.backend_hint | backend | Sets bereq.backend to this value when a backend fetch is required. | client | client
req.hash_always_miss | boolean | Forces a cache miss for this request. If set to true, Varnish disregards any existing object and always (re)fetches it from the backend. This allows you to update the value of an object without having to purge or ban it. | vcl_recv | vcl_recv
req.http. | header | The corresponding HTTP request header. | client | client
req.method | string | The request method (e.g., GET, HEAD, POST, …). | client | client
req.url | string | The requested URL. | client | client
resp.reason | string | The HTTP status message returned. | vcl_deliver, vcl_synth | vcl_deliver, vcl_synth
resp.status | integer | The HTTP status code returned. | vcl_deliver, vcl_synth | vcl_deliver, vcl_synth
server.ip | IP | The IP address of the server on which the client connection was received. | client |
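To make the readable and writable columns concrete, here is a minimal sketch, assuming a backend listening on 127.0.0.1:8080. The X-Refresh request header, the X-Cache response header, and the refreshers ACL are hypothetical names chosen for illustration. The sketch writes req.hash_always_miss in vcl_recv, the only subroutine where that variable is writable, and reads obj.hits in vcl_deliver.

```vcl
vcl 4.0;

# Assumed backend address; adjust to your own setup.
backend default {
    .host = "127.0.0.1";
    .port = "8080";
}

# Hypothetical list of hosts that may force a refresh.
acl refreshers {
    "127.0.0.1";
}

sub vcl_recv {
    # req.hash_always_miss is writable in vcl_recv only. A trusted client
    # sending the hypothetical X-Refresh header forces a fresh backend fetch,
    # and the new response replaces the cached object.
    if (req.http.X-Refresh && client.ip ~ refreshers) {
        set req.hash_always_miss = true;
    }
}

sub vcl_deliver {
    # obj.hits is readable in vcl_deliver; 0 means the response was not
    # served from cache.
    if (obj.hits > 0) {
        set resp.http.X-Cache = "HIT";
    } else {
        set resp.http.X-Cache = "MISS";
    }
}
```

Because neither subroutine returns, the built-in vcl_recv and vcl_deliver still run afterward.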

Varnish’s Built-In VCL

Remember “Varnish Built-In VCL Behavior” in Chapter 3, where I described how Varnish behaves out of the box? Now that you know how VCL works, we can translate that behavior into a full-blown VCL file.

Even if you don’t register a VCL file, or if your VCL file only contains a backend definition, Varnish will behave as follows:¹

/*-
 * Copyright (c) 2006 Verdens Gang AS
 * Copyright (c) 2006-2015 Varnish Software AS
 * All rights reserved.
 *
 * Author: Poul-Henning Kamp <phk@phk.freebsd.dk>
 *
 * Redistribution and use in source and binary forms, with or without
 * modification, are permitted provided that the following conditions
 * are met:
 * 1. Redistributions of source code must retain the above copyright
 *    notice, this list of conditions and the following disclaimer.
 * 2. Redistributions in binary form must reproduce the above copyright
 *    notice, this list of conditions and the following disclaimer in the
 *    documentation and/or other materials provided with the distribution.
 *
 * THIS SOFTWARE IS PROVIDED BY THE AUTHOR AND CONTRIBUTORS ``AS IS'' AND
 * ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE
 * IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE
 * ARE DISCLAIMED. IN NO EVENT SHALL AUTHOR OR CONTRIBUTORS BE LIABLE
 * FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL
 * DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS
 * OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION)
 * HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT
 * LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY
 * OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF
 * SUCH DAMAGE.
 *
 *
 * The built-in (previously called default) VCL code.
 *
 * NB! You do NOT need to copy & paste all of these functions into your
 * own vcl code, if you do not provide a definition of one of these
 * functions, the compiler will automatically fall back to the default
 * code from this file.
 *
 * This code will be prefixed with a backend declaration built from the
 * -b argument.
 */

vcl 4.0;

#######################################################################
# Client side

sub vcl_recv {
    if (req.method == "PRI") {
        /* We do not support SPDY or HTTP/2.0 */
        return (synth(405));
    }
    if (req.method != "GET" &&
      req.method != "HEAD" &&
      req.method != "PUT" &&
      req.method != "POST" &&
      req.method != "TRACE" &&
      req.method != "OPTIONS" &&
      req.method != "DELETE") {
        /* Non-RFC2616 or CONNECT which is weird. */
        return (pipe);
    }

    if (req.method != "GET" && req.method != "HEAD") {
        /* We only deal with GET and HEAD by default */
        return (pass);
    }
    if (req.http.Authorization || req.http.Cookie) {
        /* Not cacheable by default */
        return (pass);
    }
    return (hash);
}

sub vcl_pipe {
    # By default Connection: close is set on all piped requests, to stop
    # connection reuse from sending future requests directly to the
    # (potentially) wrong backend. If you do want this to happen, you can undo
    # it here.
    # unset bereq.http.connection;
    return (pipe);
}

sub vcl_pass {
    return (fetch);
}

sub vcl_hash {
    hash_data(req.url);
    if (req.http.host) {
        hash_data(req.http.host);
    } else {
        hash_data(server.ip);
    }
    return (lookup);
}

sub vcl_purge {
    return (synth(200, "Purged"));
}

sub vcl_hit {
    if (obj.ttl >= 0s) {
        // A pure unadultered hit, deliver it
        return (deliver);
    }
    if (obj.ttl + obj.grace > 0s) {
        // Object is in grace, deliver it
        // Automatically triggers a background fetch
        return (deliver);
    }
    // fetch & deliver once we get the result
    return (miss);
}

sub vcl_miss {
    return (fetch);
}

sub vcl_deliver {
    return (deliver);
}

/*
 * We can come here "invisibly" with the following errors: 413, 417 & 503
 */
sub vcl_synth {
    set resp.http.Content-Type = "text/html; charset=utf-8";
    set resp.http.Retry-After = "5";
    synthetic( {"<!DOCTYPE html>
<html>
  <head>
    <title>"} + resp.status + " " + resp.reason + {"</title>
  </head>
  <body>
    <h1>Error "} + resp.status + " " + resp.reason + {"</h1>
    <p>"} + resp.reason + {"</p>
    <h3>Guru Meditation:</h3>
    <p>XID: "} + req.xid + {"</p>
    <hr>
    <p>Varnish cache server</p>
  </body>
</html>
"} );
    return (deliver);
}

#######################################################################
# Backend Fetch

sub vcl_backend_fetch {
    return (fetch);
}

sub vcl_backend_response {
    if (beresp.ttl <= 0s ||
      beresp.http.Set-Cookie ||
      beresp.http.Surrogate-control ~ "no-store" ||
      (!beresp.http.Surrogate-Control &&
        beresp.http.Cache-Control ~ "no-cache|no-store|private") ||
      beresp.http.Vary == "*") {
        /*
        * Mark as "Hit-For-Pass" for the next 2 minutes
        */
        set beresp.ttl = 120s;
        set beresp.uncacheable = true;
    }
    return (deliver);
}

sub vcl_backend_error {
    set beresp.http.Content-Type = "text/html; charset=utf-8";
    set beresp.http.Retry-After = "5";
    synthetic( {"<!DOCTYPE html>
<html>
  <head>
    <title>"} + beresp.status + " " + beresp.reason + {"</title>
  </head>
  <body>
    <h1>Error "} + beresp.status + " " + beresp.reason + {"</h1>
    <p>"} + beresp.reason + {"</p>
    <h3>Guru Meditation:</h3>
    <p>XID: "} + bereq.xid + {"</p>
    <hr>
    <p>Varnish cache server</p>
  </body>
</html>
"} );
    return (deliver);
}

#######################################################################
# Housekeeping

sub vcl_init {
}

sub vcl_fini {
    return (ok);
}
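The license comment in this listing notes that you never need to copy these functions. In fact, Varnish appends the built-in subroutines to your own, so a custom subroutine that ends without a return statement falls through to the built-in logic. Here is a minimal sketch of that pattern, assuming a backend on 127.0.0.1:8080 and an illustrative list of static file extensions:

```vcl
vcl 4.0;

# Assumed backend address; adjust to your own setup.
backend default {
    .host = "127.0.0.1";
    .port = "8080";
}

sub vcl_recv {
    # Static assets rarely depend on cookies, so drop the Cookie header for
    # them. The extension list is an illustrative assumption.
    if (req.url ~ "\.(css|js|png|jpg|jpeg|gif|svg|ico|woff2?)(\?.*)?$") {
        unset req.http.Cookie;
    }
    # No return statement: execution continues into the built-in vcl_recv,
    # which still pipes unknown methods, passes non-GET/HEAD requests, and
    # passes requests that still carry a Cookie or Authorization header.
}
```

Returning explicitly (for example, with return (hash)) would skip the built-in vcl_recv entirely, so only do that when your own code covers the same safety checks.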

As a quick reminder, this is what the preceding code does:

- It does not support the PRI method and returns an HTTP 405 error when it is used.
- Request methods other than GET, HEAD, PUT, POST, TRACE, OPTIONS, and DELETE are not considered valid and are piped directly to the backend.
- Only GET and HEAD requests can be cached; other requests are passed to the backend and are not served from cache.
- When a request contains a cookie or an authorization header, the request is passed to the backend and the response is not cached.
- If the request has not been piped or passed at this point, it is considered cacheable and a cache lookup key is composed.
- The cache lookup key is a hash composed of the URL and the Host header, or the server IP address when the request has no Host header.
- Objects that aren’t stale are served from cache.
- Stale objects that still have some grace time are also served from cache, while a background fetch refreshes them.
- All other objects trigger a miss and are fetched from the backend.
- Backend responses that do not have a positive TTL are deemed uncacheable and are stored in the hit-for-pass cache.
- Backend responses that send a Set-Cookie header are also considered uncacheable and are stored in the hit-for-pass cache.
- Backend responses with no-store in the Surrogate-Control header will not be stored in cache either.
- Backend responses containing no-cache, no-store, or private in the Cache-Control header will not be stored in cache, unless a Surrogate-Control header is present.
- Backend responses with a Vary: * header, which signals that the response can vary on anything, are not considered cacheable.
- When objects are stored in the hit-for-pass cache, they remain in that blacklist for 120 seconds.
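Several of these rules can be relaxed in your own vcl_backend_response before the built-in one runs. The sketch below assumes a backend on 127.0.0.1:8080; the extension list and the one-hour grace period are illustrative values, not recommendations from this book.

```vcl
vcl 4.0;

# Assumed backend address; adjust to your own setup.
backend default {
    .host = "127.0.0.1";
    .port = "8080";
}

sub vcl_backend_response {
    # Illustrative rule: static assets should never set cookies, so drop
    # Set-Cookie before the built-in checks mark the response hit-for-pass.
    if (bereq.url ~ "\.(css|js|png|jpg|jpeg|gif|svg|ico|woff2?)(\?.*)?$") {
        unset beresp.http.Set-Cookie;
    }

    # Keep objects for an hour past their TTL, so the built-in vcl_hit can
    # deliver them in grace while a background fetch refreshes them.
    set beresp.grace = 1h;

    # No return statement: the built-in vcl_backend_response still applies
    # the TTL, Surrogate-Control, Cache-Control, and Vary checks.
}
```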

A Real-World VCL File

The VCL file that you see in “Varnish’s Built-In VCL” represents the desired behavior of Varnish. In an ideal world, this VCL code should suffice to make any website bulletproof. The reality is that modern-day websites, applications, and APIs don’t conform 100% to these rules.

The built-in VCL assumes that cacheable websites do not have cookies. Let me tell you: websites without cookies are few and far between. The VCL template I showcase in this section deals with these real-world cases and can dramatically increase your hit rate.
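One common way to deal with cookies is to keep only the ones that actually change the response. This sketch assumes, hypothetically, that logged-in users are identified by a cookie named sessionid and that every other cookie is safe to ignore; the backend address is also an assumption.

```vcl
vcl 4.0;

# Assumed backend address; adjust to your own setup.
backend default {
    .host = "127.0.0.1";
    .port = "8080";
}

sub vcl_recv {
    # Hypothetical: only the "sessionid" cookie changes the page content.
    # Anonymous visitors lose all their cookies, so the built-in vcl_recv
    # no longer passes their requests.
    if (req.http.Cookie && req.http.Cookie !~ "(^|;\s*)sessionid=") {
        unset req.http.Cookie;
    }
    # Falling through to the built-in vcl_recv: requests that still carry a
    # Cookie header (logged-in users) are passed to the backend.
}
```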

Warning

Although this real-world VCL file increases your hit rate, it is not tuned for any specific CMS or framework. If you need a VCL file tailored to a specific CMS or framework, one usually comes with that application’s Varnish module.

There are plenty of good VCL templates out there. I could also write one myself, but I’d just be reinventing the wheel. In the spirit of open source, I’d much rather showcase one of the most popular VCL templates out there.

The author of the VCL file is Mattias Geniar, a fellow Belgian, a fellow member of the hosting industry, a friend, and a true Varnish ambassador. Go to his GitHub repository to see the code.

This VCL template primarily sanitizes the request, optimizes backend connections, facilitates purges, adds caching stats, and adds ESI support.
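The following is not Mattias Geniar’s code; it is a minimal sketch of how three of those features (purging, request sanitization, and ESI support) are commonly expressed in VCL 4.0. The purgers ACL, the backend address, and the utm_ parameter pattern are assumptions for illustration.

```vcl
vcl 4.0;

# Assumed backend address; adjust to your own setup.
backend default {
    .host = "127.0.0.1";
    .port = "8080";
}

# Hypothetical list of hosts allowed to purge.
acl purgers {
    "127.0.0.1";
}

sub vcl_recv {
    # Purging: accept PURGE requests only from trusted addresses.
    # return (purge) invalidates the object and then runs vcl_purge.
    if (req.method == "PURGE") {
        if (client.ip !~ purgers) {
            return (synth(405, "Not allowed"));
        }
        return (purge);
    }

    # Sanitizing: strip utm_* tracking parameters so URLs that differ only
    # in those parameters share one cache object.
    set req.url = regsuball(req.url, "&utm_[a-z]+=[^&]*", "");
    set req.url = regsub(req.url, "\?utm_[a-z]+=[^&]*&?", "?");
    set req.url = regsub(req.url, "\?$", "");

    # ESI: tell the backend that Varnish can process ESI tags.
    set req.http.Surrogate-Capability = "key=ESI/1.0";
}

sub vcl_backend_response {
    # Only parse responses whose backend asked for ESI processing.
    if (beresp.http.Surrogate-Control ~ "ESI/1.0") {
        unset beresp.http.Surrogate-Control;
        set beresp.do_esi = true;
    }
}
```

With this sketch, /page?utm_source=x&id=1 and /page?id=1 both end up as /page?id=1 before vcl_hash runs, so they hit the same object.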

Note

Room for improvements? Just send Mattias a pull request and explain why.

Conclusion

By now you should know the syntax, functions, different language constructs, return types, variable objects, and execution flow of VCL. Use this chapter as a reference when in doubt.

Don’t forget that the Varnish Cache project has a pretty decent documentation site. If you don’t find the answer to your question in this book, you’ll probably find it there.

At this point, I expect you to be comfortable with the VCL syntax and be able to read and interpret pieces of VCL you come across. In Chapter 7, we’ll dive deeper into some common scenarios in which custom VCL is required.

¹ This is the built-in VCL that ships with Varnish when you install it.
