This section gives a brief introduction to each piece of the enterprise system.

The persistence layer is where you store your business's data. As the name implies, data here sticks around for a long time; it persists until you explicitly change or remove it. Most frequently, the persistence layer is a Relational Database Management System (RDBMS).

Because protecting your data is critical, the persistence layer should provide certain guarantees, collectively referred to as ACID: atomicity, consistency, isolation, and durability. Each of these properties plays a different role in maintaining the integrity of your data:

- Atomicity

The ability to group a number of operations together into a single transaction: either they all succeed, or they all fail. The RDBMS should ensure that a failure midway through the transaction does not leave the data in an intermediary, invalid state. For example, a bank account transfer requires debiting funds from one account and crediting funds in another. If one of the operations fails, the other should be rolled back as well; otherwise, one account may be debited without making the corresponding credit in the other account.

Consider the following instructions:

    account1.debit(50)
    # power failure happens here
    account2.credit(50)

If the database fails between the two statements, at the point marked by the comment, the user of the ATM system will likely see an error on-screen and assume no transaction took place. When the database comes back up, though, the bank customer would be short $50 in account one and none the richer in account two. Atomicity provides the ability to group statements together into single, atomic units. In Rails, this is accomplished by invoking the method `transaction` on a model class. The `transaction` method accepts a block to be executed as a single, atomic unit:

    Account.transaction do
      account1.debit(50)
      # power failure happens here
      account2.credit(50)
    end

Now, if the power goes out where the comment suggests, the database will ignore the first statement when it boots back up. For all intents and purposes, the first statement in the transaction never occurred.

- Consistency

The guarantee that constraints you define for your data are satisfied before and after each transaction. Different RDBMS systems may have different allowances for inconsistency within a transaction. For example, a complex set of bank transfers may, if executed in the wrong order, allow an account to drop to a balance below zero. However, if by the end of all the transfers, all balances are positive, the consistency check that all balances are positive has been guaranteed.

- Isolation

The guarantee that while a transaction is in process, no other transaction can access its intermediary (and possibly inconsistent) data. For example, a bank deposit requires checking an account's existing balance, adding the deposit amount to this balance, and then updating the account record with the new balance. If you are transferring $100 from one account to another, with one statement to debit $100 from the first account, and another statement to add $100 to the second account, isolation prevents your total balance from ever appearing to be $100 less between the two statements. Figure 1-5 illustrates this. Without the transaction in thread 1, the output at time 3 would have been 0 + 100.

- Durability

The guarantee that once your database accepts data and declares your transaction successful, the data you inserted or modified will persist in the database until you explicitly remove or modify it again. Similarly, data you deleted will be gone forever. There is no code example to demonstrate durability. It is a property of how the RDBMS interacts with the operating system and hardware. Short of a disk failure that actually destroys data, if the database returns control to you after a statement, you can assume the effects of the statement are permanently stored on disk, and a reset of the database or some other activity that clears system memory will not affect this assumption.

Note that many databases do allow you to relax the durability restriction to increase speed at the expense of reliability, but doing so is not generally recommended, unless your data is not very important to you.
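The atomicity and consistency guarantees described above can be imitated in plain Ruby. The following is only a sketch under stated assumptions: `Ledger` is a hypothetical in-memory stand-in for an accounts table, a raised exception stands in for the power failure, and the rule that balances may not be negative is checked once, when the transaction block finishes. A real RDBMS achieves the same effect with very different machinery.

```ruby
# Hypothetical in-memory stand-in for a table of account balances.
class Ledger
  class ConstraintViolation < StandardError; end

  def initialize(balances)
    @balances = balances.dup
  end

  def balance(name)
    @balances.fetch(name)
  end

  def debit(name, amount)
    @balances[name] = balance(name) - amount
  end

  def credit(name, amount)
    @balances[name] = balance(name) + amount
  end

  # Runs the block as one unit. If the block raises, or if a constraint
  # is violated once the block has finished, every change is undone.
  def transaction
    snapshot = @balances.dup
    yield self
    check_constraints!
  rescue StandardError
    @balances = snapshot
    raise
  end

  private

  # Consistency rule, checked at commit time rather than per statement.
  def check_constraints!
    overdrawn = @balances.select { |_, amount| amount.negative? }
    return if overdrawn.empty?

    raise ConstraintViolation, "overdrawn: #{overdrawn.keys.join(', ')}"
  end
end

ledger = Ledger.new({ "account1" => 100, "account2" => 0 })
power_failure = -> { raise "simulated power failure" }

begin
  ledger.transaction do |t|
    t.debit("account1", 50)
    power_failure.call
    t.credit("account2", 50)
  end
rescue RuntimeError
  # The debit was rolled back together with the rest of the transaction.
end

puts ledger.balance("account1") # prints 100
```

Because the constraint is checked only at the end of the block, a balance may dip below zero midway through a transaction and still commit, which mirrors the allowance for inconsistency within a transaction mentioned under Consistency.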

Of course, having a database that is ACID-compliant is not enough to guarantee your data's integrity. Armed with this set of guarantees, it is now up to you, the database designer, to properly set up the database schema to do so. In Chapters 4 through 9, we will build up a complex schema, and then provably guarantee our data's integrity.

The application layer represents everything that operates between the data layer and the outside world. This layer is responsible for interpreting user requests, acting on them, and returning results, usually in the form of web pages. When you start your first Rails project by invoking `rails {projectname}`, what you have created is the application layer.

Depending on what your application is, the application layer can have different relationships with the data layer. If, for example, the purpose of your website is to provide Flash games for visitors to play, the application layer, and most developer effort, will focus on the games users play. However, the application layer may also facilitate user login, as well as storage and retrieval of high scores in the database.

More commonly, though, websites present information to users and allow them to act upon it in some way; for example, online news sites that display articles, or movie ticket vendor sites that provide movie synopses and show times in theatres nationwide. In these cases, the application layer is the interface into the data stored in the database.

In its simplest form, a single Rails application comprises the whole of the application layer. When a user requests a web page, an instance of the full application handles the request. The entry point into the code base is determined by the requested URL, which translates into a controller class and action method pair. Code executes, usually retrieving data from the database, culminating in the rendering of a web page. At this point, the handling of the request is complete. This is the simplest of architectures (it was shown in Figure 1-1).
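The translation from a requested URL to a controller class and action method pair can be sketched in a few lines of plain Ruby. This is an illustration of the idea only, not Rails's actual router: `ArticlesController`, `resolve`, and `handle_request` are hypothetical names, and the convention assumed here is that a path of the form `/controller/action` names both parts directly.

```ruby
# Hypothetical controller. A real Rails controller would inherit from
# ActionController::Base and render a template instead of returning HTML.
class ArticlesController
  def index
    "<h1>All articles</h1>"
  end

  def show
    "<h1>One article</h1>"
  end
end

# Translates a path such as "/articles/show" into a controller class and
# an action name, defaulting to the index action when none is given.
def resolve(path)
  controller_name, action_name = path.split("/").reject(&:empty?)
  action_name ||= "index"
  class_name = "#{controller_name.capitalize}Controller"
  [Object.const_get(class_name), action_name.to_sym]
end

# Handles one request: find the entry point, run it, return the page.
def handle_request(path)
  controller_class, action = resolve(path)
  unless controller_class.public_instance_methods(false).include?(action)
    raise ArgumentError, "no action #{action} on #{controller_class}"
  end
  controller_class.new.public_send(action)
end

puts handle_request("/articles/show") # prints <h1>One article</h1>
```

Restricting dispatch to methods defined directly on the controller keeps inherited methods from being reachable through a URL.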

The configuration above can take you quite far, but only so far.

As your company grows, the complexity of your business needs may become too large to be managed well within a single application. The complexity can come either in the user interface, or in the back-end logic that powers it.

If you operate a blog site that is wildly successful, you may want a variety of different user interfaces and feature sets based on the target audience. For example, the young-adult site may have a feature set geared toward building discussion within a social network, while the adult-targeted site might be devoid of the social aspect altogether, and instead include spell-checking and other tools to make the content appear more polished. Or you might even spin off a completely different application that uses the same underlying content structure, but with a completely different business model. For example, a website for submitting writing assignments, where students are able to read and comment on other students' work, could easily share the same underlying data structures as the blog sites. Figure 1-6 illustrates.

The first front-end may be the teen-targeted site, the second the adult-targeted site, and the third the homework-submission site. All three contain only the user-interface and the workflow logic. They communicate over the network with a single content management service, which is responsible for storing and retrieving the actual content from the database and providing the correct content to each site.

The opposite type of complexity is perhaps even more common. As your website grows in leaps and bounds, with each new feature requiring as much code as the originally launched website in its entirety, it often becomes beneficial to split up the application into smaller, more manageable pieces. Each major piece of functionality, along with the corresponding portion of the data layer, is carved out into its own service, which publishes a specific API for its specialized feature set. The front-end, then, consumes these service APIs and weaves a user interface around them. Based on the level of complexity and the need to manage it, services can even consume the APIs of other services as well.

In this configuration, shown in Figure 1-7, the front-end is a very manageable amount of code, unconcerned with the complex implementations of the services it consumes behind the scenes. Each service is likewise a manageable piece of code, unconcerned with the inner workings of other services or even the front-end itself.

Services, as in service-oriented architecture, and web services are distinct, but oft-confused concepts. The former variety live within your firewall (described later in this chapter) and are the building blocks of your larger application. The latter, web services, straddle the firewall and provide third parties access to your services. One way to think of this distinction is that the services have been placed "on the public Web." Functionally, a service and a web service may be equivalent. Or, the web service may impose usage restrictions, require authentication or encryption, and so on. Figure 1-8 shows a web service backed by the same two services as the front-end HTML-based web application. Users equipped with a web browser visit the front-end HTML site, while third-party developers can integrate their own applications with the web service.

This was the briefest introduction to SOA and web services possible. In Chapter 13, we'll look much more closely at what a service is, as well as how one fits into a service-oriented architecture. We'll also examine a variety of circumstances to see when moving to SOA makes sense, organizationally or technically. In Chapter 14, we'll go over best practices for creating your own services and service APIs, and in Chapters 15 and 16, we'll build a service-oriented application using XML-RPC. Chapter 17 will provide an introduction to building web services RESTfully. In Chapter 18, we'll build a RESTful web service.

All of your data lives in databases in its most up-to-date, accurate form. However, there are two shortcomings to retrieving a piece of data from the database every time you need it.

First, it's hard to scale databases linearly with traffic. What does this mean? Imagine your database system and application can comfortably support 10 concurrent users' requests, as in Figure 1-9.

Now imagine the number of requests doubles. If your application adheres to the share-nothing principle encouraged in Dave Thomas's Agile Web Development with Rails (Pragmatic Bookshelf), you can easily add another application server and load balance the traffic. However, you cannot simply add another database, because the database itself is still a shared resource. In Figure 1-10, the database is no better off than it would have been with 20 connections to the same application server. The database still must deal with all 20 concurrent requests.

The second shortcoming with requesting data from the database each time you need it is due to the fact that the format of information in the database does not always exactly match the format of data your application needs; sometimes a transformation or two is required to get data from a fully normalized format into objects your application can work with directly.

This doesn't mean there's a problem with your database design. The format you chose for the database might very well be the format you need to preserve your data's integrity, which is extremely important. Unfortunately, it may be costly to transform the data from the format in the database to the format your application wants each time you need it. This is where caching layers come in.

There are many different types of, and uses for, caches. Some, such as disk caches and query plan caches, require little or no effort on your part before you can take advantage of them. Others you need to implement yourself. These fall into two categories: pre-built and real-time.

For data that changes infrequently or is published on a schedule, a pre-built cache is simple to create and can reduce database load dramatically. Every night, or on whatever schedule you define, all of the data to be cached is read from the database, transformed into a format that is immediately consumable by your application, and written into a scalable, redundant caching system. This can be a Memcache cluster or a Berkeley DB (BDB) file that is pushed directly onto the web servers for fastest access (Figure 1-11).
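The read, transform, and write steps of a pre-built cache can be sketched as follows. Everything here is an assumption made for illustration: the `ARTICLE_ROWS` and `AUTHOR_ROWS` arrays stand in for normalized database tables, a plain Ruby Hash stands in for the Memcache cluster or BDB file, and `build_article_cache` is a hypothetical name for the job you would run on a schedule.

```ruby
require "json"

# Hypothetical normalized rows, as they might come back from the database.
ARTICLE_ROWS = [
  { id: 1, title: "Caching basics", author_id: 10 },
  { id: 2, title: "Scaling Rails",  author_id: 11 }
].freeze

AUTHOR_ROWS = [
  { id: 10, name: "Ada" },
  { id: 11, name: "Grace" }
].freeze

# Reads everything once, joins it into the shape the application wants,
# and returns a Hash that stands in for the external cache store.
def build_article_cache(articles, authors)
  authors_by_id = authors.to_h { |row| [row[:id], row[:name]] }
  articles.to_h do |row|
    document = {
      "title"  => row[:title],
      "author" => authors_by_id.fetch(row[:author_id])
    }
    ["article:#{row[:id]}", JSON.generate(document)]
  end
end

# Built on a schedule (nightly, say); requests then read only the cache.
CACHE = build_article_cache(ARTICLE_ROWS, AUTHOR_ROWS)

def cached_article(id)
  JSON.parse(CACHE.fetch("article:#{id}"))
end

puts cached_article(2)["author"] # prints Grace
```

Storing the already-joined document as a serialized string reflects the point that the cached form should be immediately consumable, with no further queries or joins at request time.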

Real-time caches fall into three main categories. The first and simplest real-time cache is a physical model cache. In its most basic incarnation, this is an in-memory copy of the results of select queries, which is cleared whenever the data is updated or deleted. When you need a piece of data, you check the cache before making a database request. If the data isn't in the cache, you get it from the database, and then store the value in the cache for next time. There is a Rails plugin for simple model caching called cached_model, but often you will have to implement caching logic yourself to get the most out of it.
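The check-the-cache-first behavior of a physical model cache might look like the sketch below. It does not show how cached_model works internally; `FakeDatabase` and `PhysicalModelCache` are hypothetical classes, and the fake database counts its reads only so that the effect of the cache is visible.

```ruby
# Hypothetical stand-in for a database table. It counts reads so the
# effect of the cache can be observed.
class FakeDatabase
  attr_reader :reads

  def initialize(rows)
    @rows = rows
    @reads = 0
  end

  def select_row(id)
    @reads += 1
    @rows[id]
  end

  def update_row(id, row)
    @rows[id] = row
  end
end

# Read-through cache: check memory first, fall back to the database, and
# clear the cached entry whenever the underlying record changes.
class PhysicalModelCache
  def initialize(database)
    @database = database
    @store = {}
  end

  def find(id)
    return @store[id] if @store.key?(id)

    @store[id] = @database.select_row(id)
  end

  def update(id, row)
    @database.update_row(id, row)
    @store.delete(id)
  end
end

db = FakeDatabase.new({ 1 => { name: "Alice" } })
cache = PhysicalModelCache.new(db)

cache.find(1)
cache.find(1)                 # served from memory
puts db.reads                 # prints 1

cache.update(1, { name: "Alicia" })
puts cache.find(1)[:name]     # prints Alicia
```

Deleting the entry on update, rather than overwriting it, keeps the cache from ever holding a value the database did not return.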

The next type of real-time cache is a logical model cache. While you may get quite a bit of mileage out of a physical model cache, if your application objects are complex, it may still take quite a bit of processing to construct your objects from the smaller constituent pieces, whether those smaller pieces are in a physical model cache or not. Caching your logical models post-processed from physical models can give your application a huge performance boost. However, knowing when to expire logical model caches can become tricky, as they are often made up of large numbers of records originating from several different database tables. A real-time logical model cache is essentially the same as a pre-built cache, but with the added complexity of expiry. For maximum benefit, a physical model cache should sit underneath and feed the logical model cache rebuilding process.
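One way to approach the expiry problem for logical models is to record, for each cached object, which physical records it was built from, and to drop the object when any of them changes. The sketch below assumes that approach; `LogicalModelCache`, `fetch`, and `expire_record` are hypothetical names, and an in-process Hash again stands in for a shared cache.

```ruby
require "set"

class LogicalModelCache
  def initialize
    @entries = {} # logical key => fully built object
    # [table, id] => set of logical keys built from that record
    @dependents = Hash.new { |hash, record| hash[record] = Set.new }
  end

  # Returns the cached object, or builds it with the block and records
  # which physical records it was assembled from.
  def fetch(key, depends_on:)
    return @entries[key] if @entries.key?(key)

    depends_on.each { |record| @dependents[record] << key }
    @entries[key] = yield
  end

  # Call whenever a physical record is updated or deleted.
  def expire_record(table, id)
    @dependents.delete([table, id])&.each { |key| @entries.delete(key) }
  end

  def cached?(key)
    @entries.key?(key)
  end
end

cache = LogicalModelCache.new
builds = 0
order_page = lambda do
  cache.fetch("order_page:7", depends_on: [[:orders, 7], [:customers, 3]]) do
    builds += 1
    { order_id: 7, customer: "Alice", items: ["book"] }
  end
end

order_page.call
order_page.call                     # served from the cache
cache.expire_record(:customers, 3)  # a constituent record changed
order_page.call                     # rebuilt from its pieces
puts builds                         # prints 2
```

In a fuller version, the block passed to `fetch` would assemble the object from a physical model cache rather than from literals, which is the layering the paragraph above recommends.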

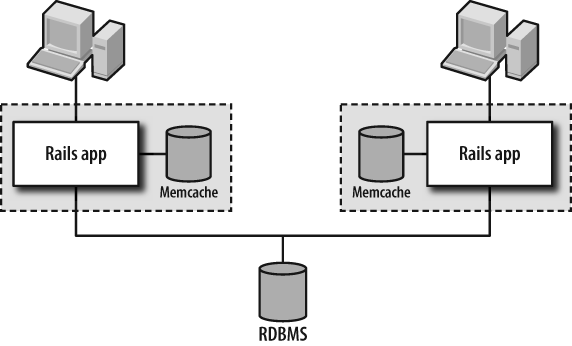

Note that both physical and logical model caches must be shared in some way between all application servers. Because the data in the cache can be invalidated due to actions on any individual application server, there either needs to be a single shared cache, or otherwise the individual caches on each application server need to notify each other of the expiry somehow. The most common way this is implemented in Rails is via Memcache, with the configuration shown in Figure 1-12.

The final type of real-time cache is a local, user-level cache. In most load-balanced setups, it's possible to have "sticky sessions," which guarantee that a visitor to your site will have all of her requests handled by the same server. With this in mind and some understanding of user behavior, you can preload information that's likely to be useful to the visitor's next request and store it in a local in-memory cache on the web server where it will be needed. This can be information about the user herself, such as her name and any required authentication information. If the last request was a search, it could be the next few pages of search results. Figure 1-13 shows a user-level cache, local to each application server, backed by Memcache.

Depending on the nature of your application, as well as where your bottlenecks are, you may find you need one type of cache, or you may find you need all of them.

A messaging system, such as an Enterprise Service Bus (ESB), allows independent pieces of your system to operate asynchronously. An event may occur on your front-end website, or perhaps even in the service layer, requiring actions to be taken that do not affect the results to be presented to the user during his current request. For example, placing an order on a website requires storing a record of the transaction in the database and processing credit card validation while the user is still online. But it also may require additional actions, such as emailing a confirmation note or queuing up the order in the fulfillment center's systems. These additional actions take time, so rather than making the user wait for the processing in each system to complete, your application can send a single message into the ESB: "User X purchased Y for $Z." Any external process that needs this information can listen for the message and operate accordingly when it sees a message it's interested in.

Using a messaging system like this, which allows many subscribers to listen for any given message, enables you to add additional layers of processing to data without having to update your main application. This cuts down on the number of systems that need to be retested when you add additional functionality. It also cuts down on the number of issues the application responsible for user flow needs to be aware of. That application can concentrate on its specific function, user flow, and other parts of your application can take over and manage other tasks asynchronously.
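The publish-and-subscribe pattern described above can be sketched in-process. A real ESB delivers messages across processes and machines; the `MessageBus` class here is a hypothetical, single-process stand-in, and the `order.placed` topic name is an assumption made for the example. Publishing only enqueues the message, so the publisher never waits on its subscribers.

```ruby
# Minimal in-process publish/subscribe bus, for illustration only.
class MessageBus
  def initialize
    @subscribers = Hash.new { |hash, topic| hash[topic] = [] }
    @queue = []
  end

  # Any number of handlers may listen for the same topic.
  def subscribe(topic, &handler)
    @subscribers[topic] << handler
  end

  # Publishing only enqueues; the caller does not wait for subscribers.
  def publish(topic, payload)
    @queue << [topic, payload]
  end

  # Delivers queued messages. In a real system this work would happen in
  # other processes, after the user's request has already completed.
  def drain
    until @queue.empty?
      topic, payload = @queue.shift
      @subscribers.fetch(topic, []).each { |handler| handler.call(payload) }
    end
  end
end

bus = MessageBus.new
log = []

bus.subscribe("order.placed") { |order| log << "email confirmation to #{order[:user]}" }
bus.subscribe("order.placed") { |order| log << "queue #{order[:item]} for fulfillment" }

bus.publish("order.placed", { user: "X", item: "Y", total: 25 })
puts log.size # prints 0, because nothing has been delivered yet

bus.drain
puts log
```

Adding a third subscriber, for example one that updates sales reports, requires no change to the code that publishes the message.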

The web server has a relatively simple role in the context of a Rails application. The purpose of the server is simply to accept connections from clients and pass them along to the application.

The one subtle point to keep in mind is that users visiting your site are not the only clients of your application who will interact with the web server. When you break up your application into separate services with separate front-ends, each piece is a full Rails application stack, including the web server.

The firewall is the barrier of trust. More than protecting against malicious hacking attempts, the firewall should be an integral part of large systems design. Within the firewall, you can simplify application logic by assuming access comes from a trusted source. Except for the most sensitive applications (e.g., controlled government systems that must protect access to secret information), you can eliminate authentication between different pieces of your application.

On the other hand, any piece of the system that accepts requests from outside of the firewall (your application layer, front-end, or web services) may need to authenticate the client to ensure that client has the correct level of access to make the request.
