Chapter 4. Understanding and Deciding

OUR ABILITY TO UNDERSTAND and decide stems from our need to delegate the effort and attention it takes to get by. So we store, organize, and sequence everything we've learned and done to make it as accessible and usable to ourselves as possible. This allows us to instantly respond to unfolding experiences. One metaphor that illustrates this is the culinary concept of mise en place. A French phrase, it roughly translates to "everything in its place." It describes the way chefs arrange their tools, ingredients, tableware, and working spaces in anticipation of the number of orders and how they need to be prepared (see Figure 4-1). There might be a dessert station, where all of the desserts are assembled. In this case, all of the dessert ingredients, dessert plates, and preparation tools are arranged in one area of the kitchen, within reach of the dessert chef. This allows the chef to fulfill orders rapidly as they come in; he or she can assemble any one of the menu items without leaving the station or rummaging for that darned scraper.

Figure 4-1. Mise en place describes a well-staged working area, here with prepped vegetables and condiments

Human cognition is organized around the present moment like mise en place; mostly we need to understand what is going on and what is going to happen next. Our memory, sensory and motor skills, reflexes, and attention are structured to support that. We protect ourselves from the amount of information we experience, minimize and optimize our ongoing efforts, and prepare for new information and experiences as we go along. Like mise en place, we create efficient and repeatable patterns in thought and activity (the schemas and models described earlier) to help us get a handle on our experiences.

The term satisficing, coined by the economist and scientist Herbert Simon in 1956, neatly combines two words, "satisfy" and "suffice," to describe how we do just enough to meet our needs. Cognitive processing in the brain is one of the most resource-intensive biological processes that our bodies can perform. So to conserve energy, we default to using the bare minimum. As usability expert Steve Krug noted in the title of his book, "Don't make me think!" isn't just a good usability rule; it's a core survival instinct. We experience a complete and contiguous reality, but in fact, our minds try to filter out as much as possible on two levels: from our conscious awareness or from any awareness at all. And unlike in the London Underground, we don't seem to mind the gaps at all.

Because individual senses are imprecise and limited, cognition allows us to make decisions and act, despite imperfect or incomplete information in a highly dynamic environment. We can contextualize, filter, and prioritize information rapidly, then apply it meaningfully, with the least amount of effort possible. Simply put, it makes our behaviors adaptive and responsive. We are also capable of processing information in an astonishing variety of ways. Rough calculations put our memory at somewhere upward of 1 petabyte, or 1,000 terabytes, of information. For comparison, the entire Library of Congress is 250 terabytes. Despite being built to use our informational and analytical capabilities as frugally as possible, individual cognition is still a pretty impressive feat. One person's brain can store information at the same order of magnitude as the entire internet.1

The Foundations of Understanding: Recognition, Knowledge, Skills, and Narratives

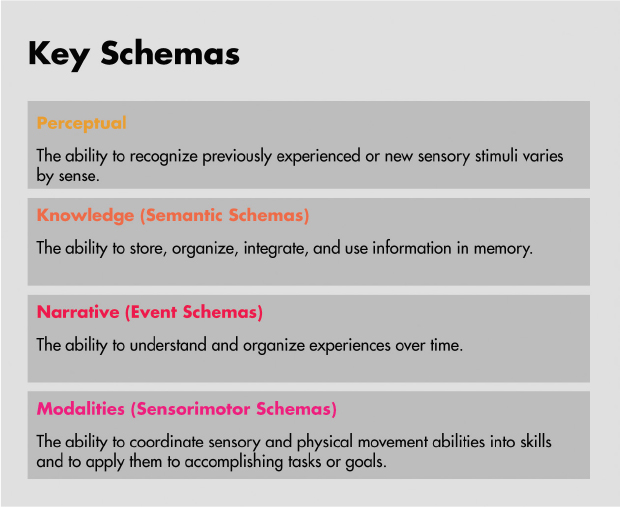

There are several theories about the schemas and models that people develop: how we structure and internalize information and experiences. Each is a different kind of mise en place: a way to organize our knowledge and abilities to fit our needs. We are not born with these abilities; rather, we start to develop them at a very early age, and continue through the rest of our lives. There are four that are most relevant to product design (see Figure 4-2).

Figure 4-2. While there are other schemas, these four are key to product design

Perceptual schemas exist across all of our senses and help us extract meaningful information from sensory data. For example, we experience sound as a big undifferentiated mess, all the air waves funneled together onto our eardrum. We automatically try to separate that one big audio stream into individual sources by listening for similarities in sound as well as spatializing the position with our binaural hearing. We can instantaneously recognize and tell apart the wind blowing, a police siren, and puppy hiccups.2 We can visually recognize individual objects, even when their forms overlap. We automatically organize distance into foreground and background, which helps us understand which objects are in front of, or behind, one another. We can calculate the trajectory of moving objects and follow them with our eyes. These are just a few examples of the organizational systems that exist within all of our sensory modalities.

Semantic models help us organize meaning into knowledge. These kinds of models include linguistics and the use of symbols, like iconography or data visualization. Navigations, color codes, and information architectures are kinds of semantic systems that designers create to help people understand how to use an interface and how to accomplish their goals through its use.

Modalities and multimodalities encompass the way we develop sensory and physical skills, coordinating them to be able to interact with physical objects and environments. They are also known as sensorimotor schemas. In product design, these schemas inform the creation of interaction models, gesture systems, controller elements, and product behaviors.

Event or operational models help us figure out continuity (the consistency of experiences over time) and causality (the relationship between cause and effect). We are born storytellers, and we experience events over time as narratives. As Donald Norman observed, "People are innately disposed to look for causes of events, to form explanations and stories."3 In design, these models are employed to help create feedback loops, structure task models and flows, identify design patterns, and define the beginning, middle, and end of experiences.

There are many other kinds of schemas and models that work together. People have varying aptitudes across abilities: some people don't have a very good sense of direction, some are much stronger with math than words, and others are very good at reading the emotional states of others. Two things to keep in mind are that we are constantly developing or refining models and schemas, and we are mostly doing it without being aware of it at all.

One important integration for designers to understand is tool use: this activity integrates our mental model of objects, our body schema, and our modalities. As cognitive researchers have noted, our minds experience tools as an extension of our bodies, as if they were our fingers, arms, or toes:

After using a mechanical grabber that extended their reach, people behaved as though their arm really was longer ... It's a phenomenon each of us unconsciously experiences every day, the researchers said. The reason you were able to brush your teeth this morning without necessarily looking at your mouth or arm is because your toothbrush was integrated into your brain's representation of your arm ... What's more, study participants perceived touches delivered on the elbow and middle fingertip of their arm as if they were farther apart after their use of the grabbing tool.4 5

When we use tools, our minds treat them as if they were a part of us. We imbue them with our own abilities to sense the world around us, to perform tasks, and to express ourselves. We feel the roughness of a piece of paper through the vibration of a pencil. We extend prosody to our musical instruments, and it feels a bit like singing or speaking, infusing sound with emotion, humor, urgency, or calm. Designers may believe that devices extend human capabilities with useful features. The reverse is also true: tools become more useful when we can extend ourselves and our body schemas into them.

Aware and Non-Aware: Fast and Slow Thinking

Life fluctuates between the familiar and the unknown, which is why we have two types of understanding and deciding, described by the Nobel Prize-winning psychologist and behavioral economist Daniel Kahneman as System 1 and System 2 (fast and slow thinking, respectively):

System 1 operates automatically and quickly, with little or no effort and no sense of voluntary control. System 2 allocates attention to the effortful mental activities that demand it, including complex computations. The operations of System 2 are often associated with the subjective experience of agency, choice, and concentration.6

We try to experience as much as possible through System 1, fast thinking. It encompasses most of the experiences in life that could be described as automatic, effortless, or routine. Once we understand how our front door opens, we just open it. After we have learned how to brush our teeth, we can do it while thinking about what shoes we are going to wear for our client meeting. System 2, or slow thinking, activates in situations that introduce uncertainty, unpredictability, complexity, risk, or novelty. It also allows for higher-level decision making, like problem solving, setting goals, challenging physical tasks, or planning future activities. These take more concentration and effort.

When designers think of engagement, what they picture probably resembles System 2. However, most of human experience falls within System 1. As Kahneman writes, "In the unlikely event of this book being made into a film, System 2 would be a supporting character who believes herself to be the hero."7 Similar to Kahneman's supporting character, much of interaction design has elevated the status of aware attention to the hero of product design, and much of the performance of interaction design is measured to that level. This seems odd, since we are built to actively avoid as many of those kinds of experiences as possible. Some of our most successful experiences, the ones that are foundational to our daily lives, are the ones that most quickly and easily become invisible to us. As experience designers, we must recognize that most of what we create should not demand awareness from users.

We experience products and devices using both fast and slow thinking. Physical typing skills, knowledge of the alphabet, and click or touch commands become a part of non-aware behaviors, and we can take them for granted. When our focus is freed up, it allows higher-level goals to become achievable, like writing an email, snapping that hilarious photo, or beating a personal best on a favorite game level. New interfaces that feel "intuitive" and easy to use often tap into this non-aware knowledge and ability. In addition, what we perceive as a single task is very often already multitasking, combining multiple non-aware tasks together, or combining non-aware tasks with a single aware task. From that perspective, much of daily human activity would really be considered multitasking. Multitasking between two aware tasks is very difficult and quickly impairs the performance and success of each task significantly. Thus, the level of awareness required by each task is an important consideration when integrating them.

Agency: Balancing Self-Control and Problem Solving

Self-control and problem solving are related forms of cognition, though this may not be immediately obvious. "Several psychological studies have shown that people who are simultaneously challenged by a demanding cognitive task and by a temptation are more likely to yield to temptation."8 One of these studies asked its participants to memorize a list of seven digits for a few minutes. They were then given the choice between "a sinful chocolate cake" and a "virtuous fruit salad" (see Figure 4-3). Those who had performed the memorization task were much more likely to choose the chocolate cake. Other studies in this vein demonstrated the interdependence of self-control, problem solving, and personal choice.9

Figure 4-3. Reading about psychology and interaction design is challenging. Now would you prefer this chocolate cake or watermelon?

Self-control is often described as the ability to regulate attention, emotion, and effort. Performing a physically strenuous task requires suppressing the feelings of pain or aches we feel in our body. Solving an analytic geometry problem requires maintaining focus. Choosing healthy foods for dinner requires resisting temptation. While these tasks seem unrelated, they draw from the same pool of cognitive energy. This is not a metaphor. They all cause similar drops in blood glucose.10 Like physical energy, mental energy can be depleted and restored. This phenomenon, known as ego depletion, is linked to cognitive load and to our ability to solve problems and make decisions.

It's strange to think of it this way, but our senses of personal agency and free will come from the same part of ourselves as our ability to complete a physics assignment. When we aren't appropriately challenged, we may also not feel very fulfilled. When we cannot plan goals for ourselves, we feel aimless. Our sense of identity, of who we are, emerges from the kinds of problems we solve, the kinds of choices we make, when we show determination, and when we give in to our impulses. Like sunken treasure, we find what is valuable, rewarding, and meaningful within the fluid depths of reasoning, desire, and choice.

There is, however, a subtle difference between external reward and what people find intrinsically rewarding. Gamification taps into this, by giving structure to the ways people align self-control with problem solving and goal completion. Companies are delving deeper into behavioral economics (the psychology of motivation and habit formation) to understand how technology and products become a part of users' lives. Tying longer-term personal goals more directly to immediate tasks empowers users to complete tasks more quickly and to feel a greater sense of reward.

Motivation, Delight, Learning, and Reward: Creating Happiness

How will users react to a product? How will they learn to use it? What will persuade them to keep using it after the first moments of novelty wear off? Motivation and learning play a large role.

Psychologist B. J. Fogg's behavioral model stresses three core motivators (sensation, anticipation, and belonging), each with a pair of opposites: pleasure and pain, hope and fear, and acceptance and rejection.11 By coordinating them, and focusing on smaller, easier behaviors, more sustained changes can happen over time.

A common mistake is to expect devices to move us toward longer-term changes, or even continued use, when they are not equipped to do so. They are much better at creating immediate motivations and delight and facilitating flow states. Through these, habits may change and more permanent behaviors may be established. Toe dippers may become regular users.

The notion of happiness quickly gets into philosophical questions, and Morten L. Kringelbach and Kent C. Berridge use philosophy as an opening to discuss the topic in The Neuroscience of Happiness and Pleasure: "Since Aristotle, happiness has been usefully thought of as consisting of at least two aspects: hedonia (pleasure) and eudaimonia (a life well lived). In contemporary psychology, these aspects are usually referred to as pleasure and meaning, and positive psychologists have recently proposed adding a third distinct component of engagement related to feelings of commitment and participation in life (Seligman et al. 2005)."12

Therefore, there are three levels of happiness: immediate delight (usually something based on senses, aesthetic stimulation, or interest); flow states (around activities and our ability to stay focused and achieve them); and gratifying longer-term changes (like changing behaviors to become better at a skill or developing a good habit).

The delight that we get from sensory and mental stimulation is usually short term. Flourishes like a cool animation, an interesting sound, or a clever phrase are fun and fleeting. That stimulation can, however, be used as a path to deeper change, like flow states and habits. Of course, those habits can be good or bad, and result in changes that range from better health to the epidemic of continuous partial attention caused by twitch-checking our phones and Facebook hundreds of times a day.

Learning is also a form of deeper change, creating cognitive patterns, rather than behavioral ones. To design learning experiences, it is important to motivate action that results in a valuable reward. The trick is to clearly demonstrate that the action caused the reward. Psychologists believe that learning works like memory, with the added factor of contingencies. As psychologists Peter Lindsay and Donald Norman put it, "The problem facing the learner is to determine the conditions that are relevant to the situation, to determine what the appropriate actions are, and to record that information properly."13

Summary

Patterns in sensing, thought, and behavior result in the development of models and schemas. These allow us to allocate our limited attention and cognitive resources to the experiences that really matter. There are multiple theories about the kinds of models and schemas we develop, and how we develop them.

Cognition can be split between non-aware and aware, which align with psychologist Daniel Kahneman's System 1 and System 2 thinking. Many designs aim to create engaging experiences (i.e., ones that are very aware), but non-aware experiences are much more common and may be more appropriate. The relentless grab for attention has succeeded in sapping users' focus. Designing for reliable non-aware experiences that restore focus is ripe for exploration.

1 "Memory capacity of brain is 10 times more than previously thought," Salk News, January 2016, https://www.salk.edu/news-release/memory-capacity-of-brain-is-10-times-more-than-previously-thought/.

2 Matthew Kennelly, "Buck Has the Hiccups," YouTube video, 0:15, April 27, 2015, https://www.youtube.com/watch?v=6QslV86odco.

3 Donald Norman, The Design of Everyday Things (New York: Basic Books, 2013), 55.

4 Angelo Maravita and Atsushi Iriki, "Tools for the body," Trends in Cognitive Sciences 8, no. 2 (2004): 79-86. http://dx.doi.org/10.1016/j.tics.2003.12.008.

5 "Brain Sees Tools as Extensions of Body," Live Science, June 22, 2009, https://www.livescience.com/9664-brain-sees-tools-extensions-body.html.

6 Daniel Kahneman, Thinking, Fast and Slow (New York: Farrar, Straus and Giroux, 2011), 20.

7 Kahneman, Thinking, Fast and Slow, 31.

8 Kahneman, Thinking, Fast and Slow, 41.

9 Kahneman, Thinking, Fast and Slow, 40.

10 Kahneman, Thinking, Fast and Slow, 43.

11 B. J. Fogg, "B. J. Fogg's Behavior Model," accessed January 20, 2018, http://www.behaviormodel.org.

12 Morten L. Kringelbach and Kent C. Berridge, "The Neuroscience of Happiness and Pleasure," Social Research 77, no. 2 (2010): 659.

13 Peter H. Lindsay and Donald A. Norman, Human Information Processing (New York: Academic Press, 1977), 499.
