Chapter 4. Concurrent Programming

The future of programming is concurrent programming. Not too long ago, sequential, command-line programming gave way to graphical, event-driven programming, and now single-threaded programming is yielding to multithreaded programming.

Whether you are writing a web server that must handle many clients simultaneously or writing an end-user application such as a word processor, concurrent programming is for you. Perhaps the word processor checks for spelling errors while the user types. Maybe it can print a file in the background while the user continues to edit. Users expect more today from their applications, and only concurrent programming can deliver the necessary power and flexibility.

Delphi Pascal includes features to support concurrent programming—not as much support as you find in languages such as Ada, but more than in most traditional programming languages. In addition to the language features, you can use the Windows API and its semaphores, threads, processes, pipes, shared memory, and so on. This chapter describes the features that are unique to Delphi Pascal and explains how to use Delphi effectively to write concurrent programs. If you want more information about the Windows API and the details of how Windows handles threads, processes, semaphores, and so on, consult a book on Windows programming, such as Inside Windows NT, second edition, by David Solomon (Microsoft Press, 1998).

Threads and Processes

This section provides an overview of multithreaded programming in Windows. If you are already familiar with threads and processes in Windows, you can skip this section and continue with the next section, “The TThread Class.”

A thread is a flow of control in a program. A program can have many threads, each with its own stack, its own copy of the processor’s registers, and related information. On a multiprocessor system, each processor can run a separate thread. On a uniprocessor system, Windows creates the illusion that threads are running concurrently, though only one thread at a time gets to run.

A process is a collection of threads all running in a single address space. Every process has at least one thread, called the main thread. Threads in the same process can share resources such as open files and can access any valid memory address in the process’s address space. You can think of a process as an instance of an application (plus any DLLs that the application loads).

Threads in a process can communicate easily because they can share variables. Critical sections protect threads from stepping on each other’s toes when they access shared variables. (Read the section “Synchronizing Threads,” later in this chapter, for details about critical sections.)

You can send a Windows message to a particular thread, in which case the receiving thread must have a message loop to handle the message. In most cases, you will find it simpler to let the main thread handle all Windows messages, but feel free to write your own message loop for any thread that needs it.

Separate processes can communicate in a variety of ways, such as messages, mutexes (short for mutual exclusions), semaphores, events, memory-mapped files, sockets, pipes, DCOM, CORBA, and so on. Most likely, you will use a combination of methods. Separate processes do not share ordinary memory, and you cannot call a function or procedure from one process to another, although several remote procedure call mechanisms exist, such as DCOM and CORBA. Read more about processes and how they communicate in the section “Processes,” later in this chapter.

Delphi has built-in support for multithreaded programming—writing applications and DLLs that work with multiple threads in a process. Whether you work with threads or processes, you have the full Windows API at your disposal.

In a multithreaded application or library, you must be sure that the global variable IsMultiThread is True. Most applications do this automatically by calling BeginThread or using the TThread class. If you write a DLL that might be called from a multithreaded application, though, you might need to set IsMultiThread to True manually.
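As a minimal sketch of the manual case, the following library sets the flag in its initialization block. The library name WorkerLib and the exported AddOne routine are hypothetical, chosen only for illustration; the only point is where the assignment to IsMultiThread goes.

```pascal
library WorkerLib;  // hypothetical library name, for illustration only

// A hypothetical exported routine that callers may invoke from any thread.
function AddOne(Value: Integer): Integer; stdcall;
begin
  Result := Value + 1;
end;

exports
  AddOne;

begin
  // This DLL never calls BeginThread or uses TThread itself, so it tells
  // the runtime explicitly that multiple threads might be active.
  IsMultiThread := True;
end.
```

Setting the flag when the library loads costs little and covers the case where the host application creates its threads without Delphi's help.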

Scheduling and States

Windows schedules threads according to their priorities. Higher priority threads run before lower priority threads. At the same priority, Windows schedules threads so that each thread gets a fair chance to run. Windows can stop a running thread (called preempting the thread) to give another thread a chance to run. Windows defines several different states for threads, but they fall into one of three categories:

- Running

A thread is running when it is active on a processor. A system can have as many running threads as it has processors, one thread per processor. A thread remains in the running state until it blocks because it must wait for some operation (such as the completion of I/O), until Windows preempts it to allow another thread to run, or until the thread suspends itself.

- Ready

A thread is ready to run if it is not running and is not blocked. A thread that is ready can preempt a running thread at the same priority, but not a thread at a higher priority.

- Blocked

A thread is blocked if it is waiting for something: an I/O or similar operation to complete, access to a shared resource, and so on. You can explicitly block a thread by suspending it. A suspended thread will wait forever until you resume it.

The essence of multithreaded programming is knowing when to block a thread and when to unblock it, and how to write your program so its threads spend as little time as possible in the blocked state and as much time as possible in the running state.

If you have many threads that are ready (but not running), that means you might have a performance problem. The processor is not able to keep up with the threads that are ready to run. Perhaps your application is creating too many active threads, or the problem might simply be one of resources: the processor is too slow or you need to switch to a multiprocessor system. Resolving resource problems is beyond the scope of this book—read almost any book on Windows NT administration to learn more about analyzing and handling performance issues.

Synchronizing Threads

The

biggest concern in multithreaded programming is preserving data

integrity. Threads that access a common variable can step on each

others’ toes. Example 4-1 shows a simple class

that maintains a global counter. If two threads try to increment the

counter at the same time, it’s possible for the counter to get

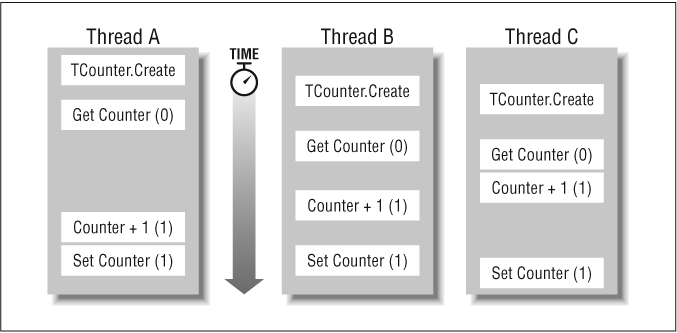

the wrong value. Figure 4-1 illustrates this

problem: Counter starts at

and should become 3 after creating three TCounter

objects. The three threads compete for the shared variable, and as a

result, Counter ends up with the incorrect value

of 1. This is known as a race condition because

each thread races to finish its job before a different thread steps

in and bungles things.

    var
      Counter: Integer;

    type
      TCounter = class
      public
        constructor Create;
        function GetCounter: Integer;
      end;

    constructor TCounter.Create;
    begin
      inherited;
      Counter := Counter + 1;
    end;

    function TCounter.GetCounter: Integer;
    begin
      Result := Counter;
    end;

This example uses an integer counter because integers are simple. Reading an integer value is an atomic operation, that is, the operation cannot be interrupted by another thread. If the counter were, say, a Variant, reading its value would involve multiple instructions, and another thread could interrupt at any time, so even reading the Variant counter is not safe in a multithreaded program without some way to limit access to one thread at a time.

Critical sections

To preserve the integrity of the Counter variable, every thread must cooperate and agree that only one thread at a time should change the variable’s value. Other threads can look at the variable and get its value, but when a thread wants to change the value, it must prevent all other threads from also trying to change the variable’s value. The standard technique for ensuring single-thread access is a critical section.

A critical section is a region of code that is reserved for single-thread access. When one thread enters a critical section, all other threads are kept out until the first thread leaves the critical section. While the first thread is in the critical section, all other threads can continue to run normally unless they also want to enter the critical section. Any other thread that tries to enter the critical section blocks and waits until the first thread is done and leaves the critical section. Then the next thread gets to enter the critical section. The Windows API defines several functions to create and use a critical section, as shown in Example 4-2.

    var
      Counter: Integer;
      CriticalSection: TRtlCriticalSection;

    type
      TCounter = class
      public
        constructor Create;
        function GetCounter: Integer;
      end;

    constructor TCounter.Create;
    begin
      inherited;
      EnterCriticalSection(CriticalSection);
      try
        Counter := Counter + 1;
      finally
        LeaveCriticalSection(CriticalSection);
      end;
    end;

    function TCounter.GetCounter: Integer;
    begin
      // Does not need a critical section because integers
      // are atomic.
      Result := Counter;
    end;

    initialization
      InitializeCriticalSection(CriticalSection);
    finalization
      DeleteCriticalSection(CriticalSection);
    end.

The new TCounter class is thread-safe, that is, you can safely share an object of that type in multiple threads. Most Delphi classes are not thread-safe, so you cannot share a single object in multiple threads, at least, not without using critical sections to protect the object’s internal state.

One advantage of object-oriented programming is that you often don’t need a thread-safe class. If each thread creates and uses its own instance of the class, the threads avoid stepping on each other’s data. Thus, for example, you can create and use TList and other objects within a thread. The only time you need to be careful is when you share a single TList object among multiple threads.

Because threads wait for a critical section to be released, you should keep the work done in a critical section to a minimum. Otherwise, threads are waiting needlessly for the critical section to be released.

Multiple simultaneous readers

In the TCounter class, any thread can safely examine the counter at any time because the Counter variable is atomic. The critical section affects only threads that try to change the counter. If the variable you want to access is not atomic, you must protect reads and writes, but a critical section is not the proper tool. Instead, use the TMultiReadExclusiveWriteSynchronizer class, which is declared in the SysUtils unit. The unwieldy name is descriptive: it is like a critical section, but it allows many threads to have read-only access to the critical region. If any thread wants write access, it must wait until all other threads are done reading the data. Complete details on this class are in Appendix B.
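The following unit is a minimal sketch of how the synchronizer might guard a composite value. The unit name SharedStats, the TStats record, and the ReadStats and AddSample routines are hypothetical, invented for this illustration; BeginRead, EndRead, BeginWrite, and EndWrite are the methods of TMultiReadExclusiveWriteSynchronizer.

```pascal
unit SharedStats;  // hypothetical unit name

interface

type
  // A composite value: reading or writing it takes several instructions,
  // so both kinds of access need protection.
  TStats = record
    Count: Integer;
    Total: Double;
  end;

function ReadStats: TStats;
procedure AddSample(Value: Double);

implementation

uses
  SysUtils;

var
  Lock: TMultiReadExclusiveWriteSynchronizer;
  Stats: TStats;

function ReadStats: TStats;
begin
  Lock.BeginRead;   // many readers can hold the lock at the same time
  try
    Result := Stats;
  finally
    Lock.EndRead;
  end;
end;

procedure AddSample(Value: Double);
begin
  Lock.BeginWrite;  // waits until every reader has finished
  try
    Stats.Count := Stats.Count + 1;
    Stats.Total := Stats.Total + Value;
  finally
    Lock.EndWrite;
  end;
end;

initialization
  Lock := TMultiReadExclusiveWriteSynchronizer.Create;

finalization
  Lock.Free;

end.
```

The try-finally blocks mirror the critical-section pattern: the lock is released even if the protected code raises an exception.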

Exceptions

Exceptions in a thread cause the application to terminate, so you should catch exceptions in the thread and find another way to inform the application’s main thread about the exception. If you just want the exception message, you can catch the exception, get the message, and pass the message string to the main thread so it can display the message in a dialog box, for example.

If you want the main thread to receive the actual exception object, you need to do a little more work. The problem is that when a try-except block finishes handling an exception, Delphi automatically frees the exception object. You need to write your exception handler so it intercepts the exception object, hands it off to the main thread, and prevents Delphi from freeing the object prematurely. Modify the exception frame on the runtime stack to trick Delphi and prevent it from freeing the exception object. Example 4-3 shows one approach, where a thread procedure wraps a try-except block around the thread’s main code block. The parameter passed to the thread function is a pointer to an object reference where the function can store an exception object or nil if the thread function completes successfully.

    type
      PObject = ^TObject;
      PRaiseFrame = ^TRaiseFrame;
      TRaiseFrame = record
        NextFrame: PRaiseFrame;
        ExceptAddr: Pointer;
        ExceptObject: TObject;
        ExceptionRecord: PExceptionRecord;
      end;

    // ThreadFunc catches exceptions and stores them in Param^, or nil
    // if the thread does not raise any exceptions.
    function ThreadFunc(Param: Pointer): Integer;
    var
      RaiseFrame: PRaiseFrame;
    begin
      Result := 0;
      PObject(Param)^ := nil;
      try
        DoTheThreadsRealWorkHere;
      except
        // RaiseList is nil if there is no exception; otherwise, it
        // points to a TRaiseFrame record.
        RaiseFrame := RaiseList;
        if RaiseFrame <> nil then
        begin
          // When the thread raises an exception, store the exception
          // object in the parameter's object reference. Then set the
          // object reference to nil in the exception frame, so Delphi
          // does not free the exception object prematurely.
          PObject(Param)^ := RaiseFrame.ExceptObject;
          RaiseFrame.ExceptObject := nil;
        end;
      end;
    end;

Deadlock

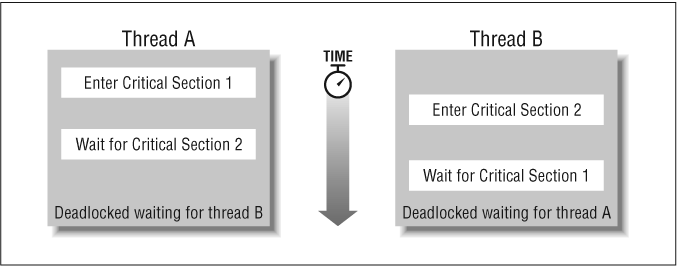

Deadlock occurs when threads wait endlessly for each other. Thread A waits for thread B, and thread B waits for thread A, and neither thread accomplishes anything. Whenever you have multiple threads, you have the possibility of creating a deadlock situation. In a complex program, it can be difficult to detect a potential deadlock in your code, and testing is an unreliable technique for discovering deadlock. Your best option is to prevent deadlock from ever occurring by taking preventive measures when you design and implement the program.

A common source of deadlock is when a thread must wait for multiple resources. For example, an application might have two global variables, and each one has its own critical section. A thread might need to change both variables and so it tries to enter both critical sections. This can cause deadlock if another thread also wants to enter both critical sections, as depicted in Figure 4-2.

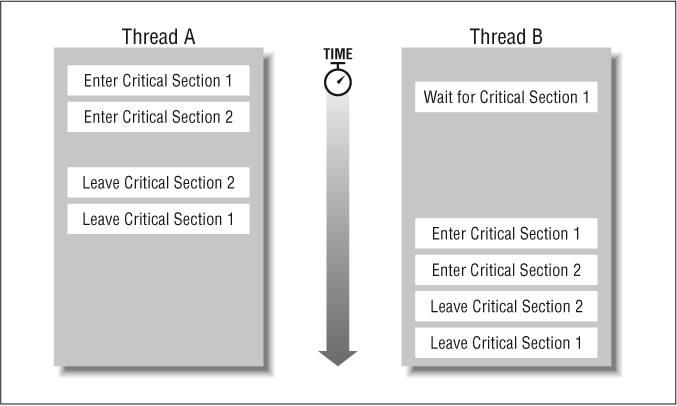

You can easily avoid deadlock in this situation by ensuring that both threads wait for the critical sections in the same order. When thread A enters critical section 1, it prevents thread B from entering the critical section, so thread A can proceed to enter critical section 2. Once thread A is finished with both critical sections, thread B can enter critical section 1 and then critical section 2. Figure 4-3 illustrates how the two threads can cooperate to avoid deadlock.
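The consistent ordering can be sketched as follows. The unit name, the two balance variables, and the Transfer and TotalBalance routines are hypothetical; the point of the sketch is only that every routine enters LockA before LockB and leaves them in the reverse order.

```pascal
unit OrderedLocks;  // hypothetical unit name

interface

procedure Transfer(Amount: Integer);
function TotalBalance: Integer;

implementation

uses
  Windows;

var
  BalanceA, BalanceB: Integer;      // two shared variables...
  LockA, LockB: TRTLCriticalSection; // ...each with its own critical section

// Every routine that needs both locks enters LockA first, then LockB.
procedure Transfer(Amount: Integer);
begin
  EnterCriticalSection(LockA);
  try
    EnterCriticalSection(LockB);
    try
      BalanceA := BalanceA - Amount;
      BalanceB := BalanceB + Amount;
    finally
      LeaveCriticalSection(LockB);
    end;
  finally
    LeaveCriticalSection(LockA);
  end;
end;

// Same order here, so this routine cannot deadlock against Transfer.
function TotalBalance: Integer;
begin
  EnterCriticalSection(LockA);
  try
    EnterCriticalSection(LockB);
    try
      Result := BalanceA + BalanceB;
    finally
      LeaveCriticalSection(LockB);
    end;
  finally
    LeaveCriticalSection(LockA);
  end;
end;

initialization
  InitializeCriticalSection(LockA);
  InitializeCriticalSection(LockB);

finalization
  DeleteCriticalSection(LockB);
  DeleteCriticalSection(LockA);

end.
```

If TotalBalance entered LockB first, one thread could hold LockA while another holds LockB, and each would wait forever for the other.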

Another way to prevent deadlock when dealing with multiple resources is to make sure a thread gets all its required resources or none of them. If a thread needs exclusive access to two files, for example, it must be able to open both files. If thread A opens file 1, but cannot open file 2 because thread B owns file 2, then thread A closes file 1 and waits until both files are free.

Deadlock—causes and prevention—is a standard topic in computer science curricula. Many computer science textbooks cover the classic strategies for handling deadlock. Delphi does not have any special deadlock detection or prevention features, so you have only your wits to protect you.

Multithreaded Programming

The key to effective multithreaded programming is to know when you must use critical sections, and when you should not. Any variable that can be changed by multiple threads must be protected, but you don’t always know when a global variable can be changed. Any composite data must be protected for read and write access. Something as simple as reading a property value might result in changes to global variables in another unit, for example. Windows itself might store data that must be protected. Following is a list of areas of concern:

- Any call to the Windows GDI (graphics device interface) must be protected. Usually, you will use Delphi’s VCL instead of calling the Windows API directly.

- Some references to a VCL component or control must be protected. Each release of Delphi increases the thread safety of the VCL, and in Delphi 5, most of the VCL is thread-safe. Of course, you cannot modify a VCL object from multiple threads, and any VCL property or method that maps to the Windows GDI is not thread-safe. As a rule, anything visual is not thread-safe, but behind-the-scenes work is usually safe. If you aren’t sure whether a property or method is safe, assume it isn’t.

- Reading long strings and dynamic arrays is thread-safe, but writing is not. Referring to a string or dynamic array might change the reference count, but Delphi protects the reference count to ensure thread safety. When changing a string or dynamic array, though, you should use a critical section, just as you would when changing any other variable. (Note that Delphi 4 and earlier did not protect strings and dynamic arrays this way.)

- Allocating memory (with GetMem or New) and freeing memory (with FreeMem and Dispose) is thread-safe. Delphi automatically protects its memory allocator for use in multiple threads (if IsMultiThread is True, which is one reason to stick with Delphi’s BeginThread function instead of using the Windows API CreateThread function).

- Creating or freeing an object is thread-safe only if the constructor and destructor are thread-safe. The memory-management aspect of creating and destroying objects is thread-safe, but the rest of the work in a constructor or destructor is up to the programmer. Unless you know that a class is thread-safe, assume it isn’t. Creating a VCL control, for example, is not thread-safe. Creating a TList or TStringList object is thread-safe.

When you need to call a Windows GDI function or access the VCL, a critical section is not the best tool. Delphi provides another mechanism that works better: the TThread class and its Synchronize method, which you can read about in the next section.

The TThread Class

The easiest way to create a multithreaded application in Delphi is to write a thread class that inherits from TThread. The TThread class is not part of the Delphi language, but is declared in the Classes unit. This section describes the class because it is so important in Delphi programming.

Override the Execute method to perform the thread’s work. When Execute finishes, the thread finishes. Any thread can create any other thread simply by creating an instance of the custom thread class. Each instance of the class runs as a separate thread, with its own stack.
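As a minimal sketch of this pattern, the console program below defines a hypothetical TSumThread class whose Execute method adds the integers from 1 to a limit. The class name, its Limit and Sum properties, and the program name are invented for illustration, and the sketch assumes a Delphi 5-era TThread in which Resume starts a thread that was created suspended.

```pascal
program SumThreadSketch;  // hypothetical program name
{$APPTYPE CONSOLE}

uses
  Classes;

type
  // A hypothetical worker thread that sums the integers 1..Limit.
  TSumThread = class(TThread)
  private
    fLimit: Integer;
    fSum: Integer;
  protected
    procedure Execute; override;
  public
    property Limit: Integer read fLimit write fLimit;
    property Sum: Integer read fSum;
  end;

// Execute runs in the new thread; the thread ends when Execute returns.
procedure TSumThread.Execute;
var
  I: Integer;
begin
  fSum := 0;
  for I := 1 to fLimit do
    fSum := fSum + I;
end;

var
  Thread: TSumThread;
begin
  // Create the thread suspended so its fields can be set before it runs.
  Thread := TSumThread.Create(True);
  try
    Thread.Limit := 100;
    Thread.Resume;   // start the thread
    Thread.WaitFor;  // block until Execute has finished
    WriteLn('Sum = ', Thread.Sum);
  finally
    Thread.Free;
  end;
end.
```

The main thread reads Sum only after WaitFor returns, so the two threads never touch the field at the same time and no critical section is needed.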

When you need to protect a VCL access or call to the Windows GDI, you can use the Synchronize method. This method takes a procedure as an argument and calls that procedure in a thread-safe manner. The procedure takes no arguments. Synchronize suspends the current thread and has the main thread call the procedure. When the procedure finishes, control returns to the current thread. Because all calls to Synchronize are handled by the main thread, they are protected against race conditions. If multiple threads call Synchronize at the same time, they must wait in line, and one thread at a time gets access to the main thread. This process is often called serializing because parallel method calls are changed to serial method calls.

When writing the synchronized procedure, remember that it is called from the main thread, so if you need to know the true thread ID, read the ThreadID property instead of calling the Windows API GetCurrentThreadID function.

For example, suppose you want to write a text editor that can print in the background. That is, the user asks to print a file, and the program copies the file’s contents (to avoid race conditions when the user edits the file while the print operation is still active) and starts a background thread that formats the file and queues it to the printer.

Printing a file involves the VCL, so you must take care to synchronize every VCL access. At first this seems problematic, because almost everything the thread does accesses the VCL’s Printer object. Upon closer inspection, you can see that you have several ways to reduce the interaction with the VCL.

The first step is to copy some information from the Printer object to the thread’s local fields. For example, the thread keeps a copy of the printer’s resolution, so the thread does not have to ask the Printer object for that information. The next step is to break down the task of printing a file into basic steps, and to isolate the steps that involve the VCL. Printing a file involves the following steps:

1. Start the print job.

2. Initialize the margins, page number, and page position.

3. If this is a new page, print the page header and footer.

4. Print a line of text and increment the page position.

5. If the page position is past the end of the page, start a new page.

6. If there is no more text, end the print job; otherwise go back to step 3.

The basic operations that must be synchronized with the VCL are:

- Start the print job.

- Print a header.

- Print a footer.

- Print a line of text.

- Start a new page.

- End the print job.

Each synchronized operation needs a parameterless procedure, so any information these procedures need must be stored as fields in the thread class. The resulting class is shown in Example 4-4.

    type
      TPrintThread = class(TThread)
      private
        fText: TStrings;         // fields to support properties
        fHeader: string;
        fExceptionMessage: string;
        fPrinter: TPrinter;
        PixelsPerInch: Integer;  // local storage for synchronized thunks
        LineHeight: Integer;
        YPos: Integer;
        Line: string;
        LeftMargin, TopMargin: Integer;
        PageNumber: Integer;
      protected
        procedure Execute; override;
        procedure PrintText;
        procedure EndPrinting;   // procedures for Synchronize
        procedure PrintLine;
        procedure PrintHeader;
        procedure PrintFooter;
        procedure StartPrinting;
        procedure StartNewPage;
        property Header: string read fHeader;
        property Printer: TPrinter read fPrinter;
        property Text: TStrings read fText;
      public
        constructor Create(const Text, Header: string;
          OnTerminate: TNotifyEvent);
        destructor Destroy; override;
        property ExceptionMessage: string read fExceptionMessage;
      end;

If the print job raises an exception, the exception message is stored as the ExceptionMessage property. The main thread can test this property when the thread finishes.

The TThread.Create constructor takes a Boolean argument: if it is True, the thread is initially suspended until you explicitly resume it. If the argument is False, the thread starts immediately. Whenever you override the constructor, you usually want to pass True to the inherited constructor. Complete the initialization your constructor requires, and as the last step, call Resume. By starting the thread in a suspended state, you avoid race conditions where the thread might start working before your constructor is finished initializing the thread’s necessary fields. Example 4-5 shows the TPrintThread.Create constructor.

    constructor TPrintThread.Create(const Text, Header: string;
      OnTerminate: TNotifyEvent);
    begin
      inherited Create(True);
      fHeader := Header;
      // Save the text as lines, so they are easier to print.
      fText := TStringList.Create;
      fText.Text := Text;
      // Save a reference to the current printer in case
      // the user prints a different file to a different printer.
      fPrinter := Printers.Printer;
      // Save the termination event handler.
      Self.OnTerminate := OnTerminate;
      // The thread will free itself when it terminates.
      FreeOnTerminate := True;
      // Start the thread.
      Resume;
    end;

The overridden Execute method calls PrintText to do the real work, but it wraps the call to PrintText in an exception handler. If printing raises an exception, the exception message is saved for use by the main thread. The Execute method is shown in Example 4-6.

    // Run the thread.
    procedure TPrintThread.Execute;
    begin
      try
        PrintText;
      except
        on Ex: Exception do
          fExceptionMessage := Ex.Message;
      end;
    end;

The PrintText method manages the main print loop, calling the synchronized print procedures as needed. The thread manages the bookkeeping details, which do not need to be synchronized, as you can see in Example 4-7.

    // Print all the text, using the default printer font.
    procedure TPrintThread.PrintText;
    const
      Leading = 120; // 120% of the nominal font height
    var
      I: Integer;
      NewPage: Boolean;
    begin
      Synchronize(StartPrinting);
      try
        LeftMargin := PixelsPerInch;
        TopMargin := PixelsPerInch;
        YPos := TopMargin;
        NewPage := True;
        PageNumber := 1;
        for I := 0 to Text.Count-1 do
        begin
          if NewPage then
          begin
            Synchronize(PrintHeader);
            Synchronize(PrintFooter);
            NewPage := False;
          end;
          // Print the current line.
          Line := Text[I];
          Synchronize(PrintLine);
          YPos := YPos + LineHeight * Leading div 100;
          // Has the printer reached the end of the page?
          if YPos > Printer.PageHeight - TopMargin then
          begin
            if Terminated then
              Printer.Abort;
            if Printer.Aborted then
              Break;
            Synchronize(StartNewPage);
            YPos := TopMargin;
            NewPage := True;
            PageNumber := PageNumber + 1;
          end;
        end;
      finally
        Synchronize(EndPrinting);
      end;
    end;

PrintText seems to spend a lot of time in synchronized methods, and it does, but it also spends time in its own thread, letting Windows schedule the main thread and the print thread optimally. Each synchronized print method is as small and simple as possible to minimize the amount of time spent in the main thread. Example 4-8 gives examples of these methods, with PrintLine, StartNewPage, and StartPrinting. The remaining methods are similar.

    // Save the printer resolution so the print thread can use that
    // information without needing to access the Printer object.
    procedure TPrintThread.StartPrinting;
    begin
      Printer.BeginDoc;
      PixelsPerInch := Printer.Canvas.Font.PixelsPerInch;
    end;

    // Print the current line of text and save the height of the line so
    // the work thread can advance the Y position on the page.
    procedure TPrintThread.PrintLine;
    begin
      Printer.Canvas.TextOut(LeftMargin, YPos, Line);
      LineHeight := Printer.Canvas.TextHeight(Line);
    end;

    // Start a new page. The caller resets the Y position.
    procedure TPrintThread.StartNewPage;
    begin
      Printer.NewPage;
    end;

To start a print process, just create an instance of TPrintThread. As the last argument, pass an event handler, which the thread calls when it is finished. A typical application might display some information in a status bar, or prevent the user from exiting the application until the print operation is complete. One possible approach is shown in Example 4-9.

    // The user chose the File>Print menu item, so print the file.
    procedure TMDIChild.Print;
    begin
      if PrintThread <> nil then
        MessageDlg('File is already being printed.', mtWarning, [mbOK], 0)
      else
      begin
        PrintThread := TPrintThread.Create(Editor.Text, FileName,
          DonePrinting);
        MainForm.SetPrinting(True);
      end;
    end;

    // When the file is done printing, check for an exception,
    // and clear the printing status.
    procedure TMDIChild.DonePrinting(Sender: TObject);
    begin
      if PrintThread.ExceptionMessage <> '' then
        MessageDlg(PrintThread.ExceptionMessage, mtError, [mbOK], 0);
      PrintThread := nil;
      MainForm.SetPrinting(False);
    end;

The BeginThread and EndThread Functions

If you don’t want to write a class, you can use BeginThread and EndThread. They are wrappers for the Win32 API functions CreateThread and ExitThread, but you should use Delphi’s functions instead of calling the Win32 API directly. Delphi keeps a global flag, IsMultiThread, which is True if your program calls BeginThread or starts a thread using TThread. Delphi checks this flag to ensure thread safety when allocating memory. If you call the CreateThread function directly, be sure to set IsMultiThread to True.

Note that using the BeginThread and EndThread functions does not give you the convenience of the Synchronize method. If you want to use these functions, you must arrange for your own serialized access to the VCL.

The BeginThread function is almost exactly the same as CreateThread, but the parameters use Delphi types. The thread function takes a Pointer parameter and returns an Integer result, which is the exit code for the thread. The EndThread function is just like the Windows ExitThread function: it terminates the current thread. See Chapter 5 for details about these functions. For an example of using BeginThread, see the section “Futures” at the end of this chapter.
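In the meantime, here is a minimal sketch of a BeginThread call. The program name and the DoubleIt thread function are hypothetical, and the variable types assume the Delphi 5-era declaration of BeginThread, whose last parameter is a LongWord variable that receives the thread ID; later releases may declare these types differently.

```pascal
program BeginThreadSketch;  // hypothetical program name
{$APPTYPE CONSOLE}

uses
  Windows;

// Thread function: Param points to an Integer, which the thread doubles.
function DoubleIt(Param: Pointer): Integer;
begin
  PInteger(Param)^ := PInteger(Param)^ * 2;
  Result := 0;  // the result becomes the thread's exit code
end;

var
  Value: Integer;
  ThreadID: LongWord;
  ThreadHandle: THandle;
begin
  Value := 21;
  // nil security attributes, default stack size, no creation flags:
  // the thread starts running immediately.
  ThreadHandle := BeginThread(nil, 0, DoubleIt, @Value, 0, ThreadID);
  if ThreadHandle = 0 then
    Halt(1);
  // Wait for the thread to finish before reading the shared variable.
  WaitForSingleObject(ThreadHandle, INFINITE);
  CloseHandle(ThreadHandle);
  WriteLn(Value);
end.
```

Because the main thread waits on the thread handle before it reads Value, the two threads never access the variable at the same time.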

Thread Local Storage

Windows has a feature where each thread can store limited information that is private to the thread. Delphi makes it easy to use this feature, called thread local storage, without worrying about the limitations imposed by Windows. Just declare a variable using threadvar instead of var. Ordinarily, Delphi creates a single instance of a unit-level variable and shares that instance among all threads. If you use threadvar, however, Delphi creates a unique, separate instance of the variable in each thread.

You must declare threadvar variables at the unit level, not local to a function or procedure. Each thread has its own stack, so local variables are local to a thread anyway. Because threadvar variables are local to the thread and that thread is the only thread that can access the variables, you don’t need to use critical sections to protect them.
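A unit-level threadvar declaration might look like the following sketch. The unit name CallCounter and its two routines are hypothetical; the sketch shows only that the variable is declared with threadvar at the unit level and then used like any other variable, with no locking.

```pascal
unit CallCounter;  // hypothetical unit name

interface

procedure CountCall;
function CallsInThisThread: Integer;

implementation

threadvar
  // Declared at the unit level: every thread gets its own private copy.
  CallCount: Integer;

// Increments the calling thread's copy only. No critical section is
// needed because no other thread can see this instance.
procedure CountCall;
begin
  CallCount := CallCount + 1;
end;

// Returns the number of CountCall calls made by the calling thread.
function CallsInThisThread: Integer;
begin
  Result := CallCount;
end;

end.
```

Two threads that each call CountCall never interfere with each other, because each one reads and writes a different instance of CallCount.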

If you use the TThread class, you should use fields in the class for thread local variables because they incur less overhead than using threadvar variables. If you need thread local storage outside the thread object, or if you are using the BeginThread function, use threadvar.

Be careful when using the threadvar variables in a DLL. When the DLL is unloaded, Delphi frees all threadvar storage before it calls the DllProc or the finalization sections in the DLL.

Processes

Delphi has some support for multithreaded applications, but if you want to write a system of cooperating programs, you must resort to the Windows API. Each process runs in its own address space, but you have several choices for how processes can communicate with each other:

- Events

An event is a trigger that one thread can send to another. The threads can be in the same or different processes. One thread waits on the event, and another thread sets the event, which wakes up the waiting thread. Multiple threads can wait for the same event, and you can decide whether setting the event wakes up all waiting threads or only one thread.

- Semaphores

A semaphore shares a count among multiple processes. A thread in a process waits for the semaphore, and when the semaphore is available, the thread decrements the count. When the count reaches zero, threads must wait until the count is greater than zero. A thread can release a semaphore to increment the count. Where a mutex lets one thread at a time gain access to a shared resource, a semaphore gives access to multiple threads, where you control the number of threads by setting the semaphore’s maximum count.

- Pipes

A pipe is a special kind of file, where the file contents are treated as a queue. One process writes to one end of the queue, and another process reads from the other end. Pipes are a powerful and simple way to send a stream of information from one process to another—on one system or in a network.

- Memory-mapped files

The most common way to share data between processes is to use memory-mapped files. A memory-mapped file, as the name implies, is a file whose contents are mapped into a process’s virtual address space. Once a file is mapped, the memory region is just like normal memory, except that any changes are stored in the file, and any changes in the file are seen in the process’s memory. Multiple processes can map the same file and thereby share data. Note that each process maps the file to a different location in its individual address space, so you can store data in the shared memory, but not pointers.
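As a small illustration of one of these mechanisms, the sketch below creates a named event with the Windows API, sets it, and waits on it. The program name and the event name are hypothetical. To keep the sketch self-contained, one process plays both roles; in a real system, one process would call SetEvent and another would wait, each obtaining the event by the same name.

```pascal
program EventSketch;  // hypothetical program name
{$APPTYPE CONSOLE}

uses
  Windows, SysUtils;

const
  EventName = 'Example.EventSketch.Ready';  // hypothetical event name

var
  Event: THandle;
begin
  // The second argument chooses the wake-up policy: False creates an
  // auto-reset event, where each SetEvent wakes one waiting thread;
  // True creates a manual-reset event, which wakes all waiting threads.
  // The third argument is the initial state (False = not signaled).
  Event := CreateEvent(nil, False, False, EventName);
  Win32Check(Event <> 0);
  try
    // One side sets the event...
    Win32Check(SetEvent(Event));
    // ...and the other side waits for it, here with a 1-second timeout.
    if WaitForSingleObject(Event, 1000) = WAIT_OBJECT_0 then
      WriteLn('Event was signaled');
  finally
    CloseHandle(Event);
  end;
end.
```

Because the event has a name, any process that calls CreateEvent with the same name receives a handle to the same event object, which is what lets threads in different processes signal each other.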

Many books on advanced Windows programming cover these topics, but you have to map the C and C++ code examples given in these books to Delphi. To help you, this section presents an example that uses many of these features.

Suppose you are writing a text editor and you want a single process that can edit many files. When the user runs the editor, the program first checks whether another process is already running it, and if so, forwards the request to the existing process. The forwarded request must include the command-line arguments so the existing process can open the requested files. If the existing process is slow to respond for any reason, the user might run the program several more times, and each run adds another request for the existing process. This problem clearly calls for a robust system for communicating between processes.

The single application functions as client and server. The first time the program runs, it becomes the server. Once a server exists, subsequent invocations of the program become clients. If multiple processes start at once, there is a race condition to see who becomes the server, so the architecture must have a clean and simple way to decide who gets to be server. To do this, the program uses a mutex.

A mutex (short for mutual exclusion) is a critical section that works across processes. The program always tries to create a mutex with a specific name. The first process to succeed becomes the server. If the mutex already exists, the process becomes a client. If any error occurs, the program raises an exception, as you can see in Example 4-10.

const
  MutexName = 'Tempest Software.Threaditor mutex';

var
  SharedMutex: THandle;

// Create the mutex that all processes share. The first process
// to create the mutex is the server. Return True if the process is
// the server, False if it is the client. The server starts out
// owning the mutex, so you must release it before any client
// can grab it.
function CreateSharedMutex: Boolean;
begin
  SharedMutex := CreateMutex(nil, True, MutexName);
  Win32Check(SharedMutex <> 0);
  Result := GetLastError <> Error_Already_Exists;
end;

The mutex also protects the memory-mapped file the clients use to send a list of filenames to the server. Using a memory-mapped file requires two steps:

1. Create a mapped file. The mapped file can be a file on disk or it can reside in the system page file.

2. Map a view of the file into the process’s address space. The view can be the entire file or a contiguous region of the file.

After mapping the view of the memory-mapped file, the process has a pointer to a shared memory region. Every process that maps the same file shares a single memory block, and changes one process makes are immediately visible to other processes. The shared file might have a different starting location in each process, so you should not store pointers (including long strings, wide strings, dynamic arrays, and complex Variants) in the shared file.

The program uses the TSharedData record for storing and retrieving the data. Note that TSharedData uses short strings to avoid the problem of storing pointers in the shared file. Example 4-11 shows how to create and map the shared file.

const
  MaxFileSize = 32768; // 32K should be more than enough.
  MaxFileCount = MaxFileSize div SizeOf(ShortString);
  SharedFileName = 'Tempest Software.Threaditor shared file';

type
  PSharedData = ^TSharedData;
  TSharedData = record
    Count: Word;
    FileNames: array[1..MaxFileCount] of ShortString;
  end;

var
  IsServer: Boolean;
  SharedFile: THandle;
  SharedData: PSharedData;

// Create the shared, memory-mapped file and map its entire contents
// into the process's address space. Save the pointer in SharedData.
procedure CreateSharedFile;
begin
  // Invalid_Handle_Value asks Windows to back the mapping with the
  // system page file instead of a file on disk. Use the named constant
  // rather than $FFFFFFFF, which is not a valid THandle on 64-bit Windows.
  SharedFile := CreateFileMapping(Invalid_Handle_Value, nil, Page_ReadWrite,
    0, SizeOf(TSharedData), SharedFileName);
  Win32Check(SharedFile <> 0);
  // Map the entire file into the process address space.
  SharedData := MapViewOfFile(SharedFile, File_Map_All_Access, 0, 0, 0);
  Win32Check(SharedData <> nil);
  // The server created the shared data, so make sure it is
  // initialized properly. You don't need to clear everything,
  // but make sure the count is zero.
  if IsServer then
    SharedData.Count := 0;
end;

The client writes its command-line arguments to the memory-mapped file and notifies the server that it should read the filenames from the shared data in the memory-mapped file. Multiple clients might try to write to the memory-mapped file at the same time, or a client might want to write when the server wants to read. The mutex protects the integrity of the shared data.
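The arithmetic behind the TSharedData declaration can be checked with a short sketch. It repeats the constants and record from Example 4-11; the variable names and the 300-character test string are hypothetical, introduced only for this illustration. It is meant to show why short strings are safe to share (their characters are stored inline) and what that safety costs (a fixed capacity of 255 characters).

```pascal
program SharedRecordSketch;

{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}
{$APPTYPE CONSOLE}

const
  MaxFileSize  = 32768;
  MaxFileCount = MaxFileSize div SizeOf(ShortString);

type
  // Same declaration as in Example 4-11: inline data only, no pointers.
  TSharedData = record
    Count: Word;
    FileNames: array[1..MaxFileCount] of ShortString;
  end;

var
  LongName: string;
  Slot: ShortString;
begin
  // A ShortString is one length byte followed by 255 characters,
  // all stored inside the variable itself.
  Writeln('SizeOf(ShortString) = ', SizeOf(ShortString));
  Writeln('MaxFileCount        = ', MaxFileCount);

  // The record is the array plus the Count field, so it is slightly
  // larger than MaxFileSize.
  Writeln('SizeOf(TSharedData) = ', SizeOf(TSharedData));

  // A long string variable holds only a pointer to heap memory that
  // belongs to one process, which is why it must not be shared.
  Writeln('SizeOf(string)      = ', SizeOf(string));

  // Converting a long string to a ShortString silently truncates it.
  LongName := StringOfChar('x', 300);
  Slot := ShortString(LongName);
  Writeln('Length(LongName)    = ', Length(LongName));
  Writeln('Length(Slot)        = ', Length(Slot));
end.
```

The truncation in the last step is worth remembering: a path longer than 255 characters would reach the server cut short, so a production version might reject such names before copying them.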

The client calls EnterMutex, which waits until the mutex is available, then grabs it. Once a client owns the mutex, no other thread in any process can grab the mutex. The client is free to write to the shared memory without fear of data corruption. The client copies its command-line arguments to the shared data, then releases the mutex by calling LeaveMutex. Example 4-12 shows these two functions.

// Enter the critical section by grabbing the mutex.
// This procedure waits until it gets the mutex or it
// raises an exception.
procedure EnterMutex;
resourcestring
  sNotResponding = 'Threaditor server process is not responding';
  sNoServer = 'Threaditor server process has apparently died';
const
  TimeOut = 10000; // 10 seconds
begin
  case WaitForSingleObject(SharedMutex, TimeOut) of
    Wait_Timeout:
      raise Exception.Create(sNotResponding);
    Wait_Abandoned:
      raise Exception.Create(sNoServer);
    Wait_Failed:
      RaiseLastWin32Error;
  end;
end;

// Leave the critical section by releasing the mutex so another
// process can grab it.
procedure LeaveMutex;
begin
  Win32Check(ReleaseMutex(SharedMutex));
end;

The client wakes up the server by setting an event. An event is a way for one process to notify another without passing any additional information. The server waits for the event to be triggered, and after a client copies a list of filenames into the shared file, the client sets the event, which wakes up the server. Example 4-13 shows the code for creating the event.

const
  EventName = 'Tempest Software.Threaditor event';

var
  SharedEvent: THandle;

// Create the event that clients use to signal the server.
procedure CreateSharedEvent;
begin
  SharedEvent := CreateEvent(nil, False, False, EventName);
  Win32Check(SharedEvent <> 0);
end;
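The second argument to CreateEvent in Example 4-13 is False, which requests an auto-reset event: a successful wait consumes the signal and the event returns to the nonsignaled state. The following minimal sketch, assuming Delphi on Windows, illustrates that behavior with an unnamed event in a single thread; it is an illustration only and not part of the Threaditor program.

```pascal
program AutoResetEventSketch;

{$APPTYPE CONSOLE}

uses
  Windows, SysUtils;

var
  Event: THandle;
begin
  // Unnamed, auto-reset (bManualReset = False), initially nonsignaled.
  Event := CreateEvent(nil, False, False, nil);
  Win32Check(Event <> 0);
  try
    // Signal the event, as a client does after writing its filenames.
    Win32Check(SetEvent(Event));

    // The first wait succeeds and consumes the signal.
    if WaitForSingleObject(Event, 0) = Wait_Object_0 then
      Writeln('First wait: the event was signaled');

    // The event has reset itself, so a second wait times out.
    if WaitForSingleObject(Event, 0) = Wait_Timeout then
      Writeln('Second wait: timed out because the event reset itself');
  finally
    CloseHandle(Event);
  end;
end.
```

One consequence of this design is that several SetEvent calls made before the waiting thread runs collapse into a single wake-up. That is harmless here because the server reads every filename in the shared list each time it wakes up, not one filename per signal.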

The server grabs the mutex so it can copy the filenames from the shared file. To avoid holding the mutex too long, the server copies the filenames into a string list and immediately releases the mutex. Then the server opens each file listed in the string list. Network latency or other problems might slow down the server when opening a file, which is why the server copies the names and releases the mutex as quickly as it can.

Tip

Everyone has run into the infinite wait problem at some time. You click the button and wait, but the hourglass cursor never disappears. Something inside the program is waiting for an event that will never occur. So what do you do? Do you press Ctrl-Alt-Del and try to kill the program? But the program might not have saved your data yet. It could be waiting for a response from the server. If you kill the program now, you might lose all your work so far. It’s so frustrating to be faced with the hourglass and have no way to recover.

Such scenarios should never happen, and it’s up to us, the programmers, to make sure they don’t.

Windows will let your thread wait forever if you use Infinite as the time out argument to the wait functions, such as WaitForSingleObject. The only time you should use an Infinite time out, though, is when a server thread is waiting for a client connection. In that case, it is appropriate for the server to wait an indeterminate amount of time. In almost every other case, you should specify an explicit time out. Determine how long a user should wait before the program reports that something has gone wrong. If your thread is deadlocked, for example, the user has no way of knowing or stopping the thread, short of killing the process in the Task Manager. By setting an explicit time out, as shown in Example 4-12, the program can report a coherent message instead of leaving the bewildered user wondering what is happening.

The server opens each of these files as though the user had chosen File → Open in the text editor. Because opening a file involves the VCL, the server uses Synchronize to open each file. After opening the files, the server waits on the event again. The server spends most of its time waiting to hear from a client, so it must not let the waiting interfere with its normal work. The server does its work in a separate thread that loops forever: waiting for an event from a client, getting the filenames from the shared file, opening the files, then waiting again. Example 4-14 shows the code for the server thread.

type
  TServerThread = class(TThread)
  private
    fFileName: string;
    fFileNames: TStringList;
  public
    constructor Create;
    destructor Destroy; override;
    procedure OpenFile;
    procedure RestoreWindow;
    procedure Execute; override;
    property FileName: string read fFileName;
    property FileNames: TStringList read fFileNames;
  end;

var
  ServerThread: TServerThread;

{ TServerThread }

constructor TServerThread.Create;
begin
  inherited Create(True);
  fFileNames := TStringList.Create;
  FreeOnTerminate := True;
  Resume;
end;

destructor TServerThread.Destroy;
begin
  FreeAndNil(fFileNames);
  inherited;
end;

procedure TServerThread.Execute;
var
  I: Integer;
begin
  while not Terminated do
  begin
    // Wait for a client to wake up the server. This is one of the few
    // times where a wait with an INFINITE time out is appropriate.
    WaitForSingleObject(SharedEvent, INFINITE);
    EnterMutex;
    try
      for I := 1 to SharedData.Count do
        FileNames.Add(SharedData.FileNames[I]);
      SharedData.Count := 0;
    finally
      LeaveMutex;
    end;
    for I := 0 to FileNames.Count-1 do
    begin
      fFileName := FileNames[I];
      Synchronize(OpenFile);
    end;
    Synchronize(RestoreWindow);
    FileNames.Clear;
  end;
end;

procedure TServerThread.OpenFile;
begin
  // Create a new MDI child window
  TMDIChild.Create(Application).OpenFile(FileName);
end;

// Bring the main form forward, and restore it from a minimized state.
procedure TServerThread.RestoreWindow;
begin
  if FileNames.Count > 0 then
  begin
    Application.Restore;
    Application.BringToFront;
  end;
end;

The client is quite simple. It grabs the mutex, then copies its command-line arguments into the shared file. If multiple clients try to run at the same time, the first one to grab the mutex gets to run, while the others must wait. Multiple clients might run before the server gets to open the files, so each client appends its filenames to the list, as you can see in Example 4-15.

// A client grabs the mutex and appends the files named on the command
// line to the filenames listed in the shared file. Then it notifies
// the server that the files are ready.
procedure SendFilesToServer;
var
  I: Integer;
begin
  if ParamCount > 0 then
  begin
    EnterMutex;
    try
      for I := 1 to ParamCount do
        SharedData.FileNames[SharedData.Count + I] := ParamStr(I);
      SharedData.Count := SharedData.Count + ParamCount;
    finally
      LeaveMutex;
    end;
    // Wake up the server
    Win32Check(SetEvent(SharedEvent));
  end;
end;

When it starts, the application calls the StartServerOrClient procedure. This procedure creates or opens the mutex and so learns whether the program is the server or a client. If it is the server, it starts the server thread and sets IsServer to True. The server initially owns the shared mutex, so it can safely create and initialize the shared file. Then it must release the mutex. If the program is a client, it sends the command-line arguments to the server, and sets IsServer to False. The client doesn’t own the mutex initially, so it has nothing to release. Example 4-16 shows the StartServerOrClient procedure.

procedure StartServerOrClient;
begin
  IsServer := CreateSharedMutex;
  try
    CreateSharedFile;
    CreateSharedEvent;
  finally
    if IsServer then
      LeaveMutex;
  end;
  if IsServer then
    StartServer
  else
    SendFilesToServer;
end;

To start the server, simply create an instance of TServerThread. To stop the server, set the thread’s Terminated flag to True, and signal the event to wake up the thread. The thread wakes up, goes through its main loop, and exits because the Terminated flag is True. To make sure the thread is cleaned up properly, the main thread waits for the server thread to exit, but it doesn’t wait long. If something goes wrong, the main thread simply abandons the server thread so the application can close. Windows will clean up the thread when the application terminates. Example 4-17 shows the StartServer and StopServer procedures.

// Create the server thread, which will wait for clients
// to connect.
procedure StartServer;
begin
  ServerThread := TServerThread.Create;
end;

procedure StopServer;
begin
  ServerThread.Terminate;
  // Wake up the server so it can die cleanly.
  Win32Check(SetEvent(SharedEvent));
  // Wait for the server thread to die, but if it doesn't die soon
  // don't worry about it, and let Windows clean up after it.
  WaitForSingleObject(ServerThread.Handle, 1000);
end;

To clean up, the program must unmap the shared file, close the mutex, and close the event. Windows keeps a shared object alive as long as at least one handle to it remains open. When the last handle is closed, Windows destroys the mutex, event, or other object. When an application terminates, Windows closes all its open handles, but it’s always a good idea to close everything explicitly. Doing so helps the person who must read and maintain your code to know explicitly which handles should be open when the program ends. Example 4-18 lists the unit’s finalization section, where all the handles are closed. If a serious Windows error were to occur, the application might terminate before all the shared items have been properly created, so the finalization code checks for a valid handle before closing each one.

finalization
  if SharedMutex <> 0 then
    CloseHandle(SharedMutex);
  if SharedEvent <> 0 then
    CloseHandle(SharedEvent);
  if SharedData <> nil then
    UnmapViewOfFile(SharedData);
  if SharedFile <> 0 then
    CloseHandle(SharedFile);
end.

The final step is to edit the project’s source file. The first thing the application does is call StartServerOrClient. If the program is a client, it exits without starting the normal Delphi application. If it is the server, the application runs normally (with the server thread running in the background). Example 4-19 lists the new project source file.

program Threaditor;

uses
  Forms,
  Main in 'Main.pas' {MainForm},
  Childwin in 'ChildWin.pas' {MDIChild},
  About in 'About.pas' {AboutBox},
  Process in 'Process.pas';

{$R *.RES}

begin
  StartServerOrClient;
  if IsServer then
  begin
    Application.Initialize;
    Application.Title := 'Threaditor';
    Application.CreateForm(TMainForm, MainForm);
    Application.Run;
  end;
end.

The remainder of the application is the standard MDI project from Delphi’s object repository, with only a few modifications. Simply adding the Process unit and making one small change to the project source file is all you need to do. Different applications might need to take different actions when the clients run, but this example gives you a good framework for enhancements.
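One enhancement worth considering is a guard on the shared list. Example 4-15 assumes that the filenames always fit: it does not check Count against MaxFileCount, and a name longer than 255 characters is truncated when it is stored in a ShortString. The following is a minimal sketch of such a guard. TryAppendFileName and MaxNameLength are hypothetical names that are not part of the chapter's Process unit, and the sketch works on a local record so it can run without the mutex or the mapped file.

```pascal
program GuardedAppendSketch;

{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}
{$APPTYPE CONSOLE}

const
  MaxFileSize   = 32768;
  MaxFileCount  = MaxFileSize div SizeOf(ShortString);
  MaxNameLength = 255; // capacity of a ShortString

type
  TSharedData = record
    Count: Word;
    FileNames: array[1..MaxFileCount] of ShortString;
  end;

// Hypothetical helper. Append one filename to the list. Return False,
// leaving Data unchanged, if the list is full or if the name would not
// fit in a ShortString.
function TryAppendFileName(var Data: TSharedData;
  const FileName: string): Boolean;
begin
  Result := (Data.Count < MaxFileCount) and
            (Length(FileName) <= MaxNameLength);
  if Result then
  begin
    Inc(Data.Count);
    Data.FileNames[Data.Count] := ShortString(FileName);
  end;
end;

var
  Data: TSharedData;
  I: Integer;
begin
  Data.Count := 0;

  // Fill every slot; each call is expected to succeed.
  for I := 1 to MaxFileCount do
    if not TryAppendFileName(Data, 'notes.txt') then
      Writeln('Unexpected failure at entry ', I);
  Writeln('Entries stored: ', Data.Count);

  // The list is now full, so one more name is rejected.
  Writeln('Append to full list: ', TryAppendFileName(Data, 'extra.txt'));

  // An over-long name is rejected even when there is room.
  Data.Count := 0;
  Writeln('Append 300-character name: ',
    TryAppendFileName(Data, StringOfChar('x', 300)));
end.
```

In the real program, a client would call such a helper while it owns the mutex and could report an error to the user when the helper returns False.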

Futures

Writing a concurrent program can be more difficult than writing a sequential program. You need to think about race conditions, synchronization, shared variables, and more. Futures help reduce the intellectual clutter of using threads. A future is an object that promises to deliver a value sometime in the future. The application does its work in the main thread and calls upon futures to fetch or compute information concurrently. The future does its work in a separate thread, and when the main thread needs the information, it gets it from the future object. If the information isn’t ready yet, the main thread waits until the future is done. Programming with futures hides much of the complexity of multithreaded programming.
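Before looking at the general-purpose class, it may help to see the idea in miniature. The sketch below is not the TFuture class that this section develops; it is a hypothetical stand-in, TSumFuture, built on the standard TThread class. It computes a sum in a background thread, and the main thread blocks only when it asks for the value.

```pascal
program FutureSketch;

{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}
{$APPTYPE CONSOLE}

uses
  {$IFDEF UNIX}cthreads,{$ENDIF} // needed only by Free Pascal on Unix
  Classes;

type
  // Hypothetical miniature future: computes 1 + 2 + ... + Limit.
  TSumFuture = class(TThread)
  private
    fLimit: Integer;
    fSum: Int64;
  protected
    procedure Execute; override;
  public
    constructor Create(Limit: Integer);
    function Value: Int64;
  end;

constructor TSumFuture.Create(Limit: Integer);
begin
  // Set the field before the inherited constructor can start the thread.
  fLimit := Limit;
  inherited Create(False);
end;

// Runs in the background thread.
procedure TSumFuture.Execute;
var
  I: Integer;
  Sum: Int64;
begin
  Sum := 0;
  for I := 1 to fLimit do
  begin
    if Terminated then
      Exit; // stop early if the owner no longer wants the result
    Sum := Sum + I;
  end;
  fSum := Sum;
end;

// Called by the owning thread: wait for the computation, then
// return the result.
function TSumFuture.Value: Int64;
begin
  WaitFor;
  Result := fSum;
end;

var
  Future: TSumFuture;
begin
  Future := TSumFuture.Create(100);
  try
    // The main thread is free to do other work here.
    Writeln('Sum of 1..100 = ', Future.Value);
  finally
    Future.Free;
  end;
end.
```

The caller never touches a lock, an event, or a thread handle. All the waiting is hidden behind the Value function, which is the property the rest of this section generalizes.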

Define a future class by inheriting from TFuture and overriding the Compute method. The Compute method does whatever work is necessary and returns its result as a Variant. Try to avoid synchronization and accessing shared variables during the computation. Instead, let the main thread handle communication with other threads or other futures. Example 4-20 shows the declaration for the TFuture class.

type
  TFuture = class
  private
    fExceptObject: TObject;
    fExceptAddr: Pointer;
    fHandle: THandle;
    fTerminated: Boolean;
    fThreadID: LongWord;
    fTimeOut: DWORD;
    fValue: Variant;
    function GetIsReady: Boolean;
    function GetValue: Variant;
  protected
    procedure RaiseException;
  public
    constructor Create;
    destructor Destroy; override;
    procedure AfterConstruction; override;
    function Compute: Variant; virtual; abstract;
    function HasException: Boolean;
    procedure Terminate;
    property Handle: THandle read fHandle;
    property IsReady: Boolean read GetIsReady;
    property Terminated: Boolean read fTerminated write fTerminated;
    property ThreadID: LongWord read fThreadID;
    property TimeOut: DWORD read fTimeOut write fTimeOut;
    property Value: Variant read GetValue;
  end;

The constructor initializes the future object, but refrains from starting the thread. Instead, TFuture overrides AfterConstruction and starts the thread after all the constructors have run. That lets a derived class initialize its own fields before starting the thread.
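The ordering that TFuture relies on can be observed with a small sketch that involves no threads at all. TBase and TDerived are hypothetical classes introduced only for this illustration. The base class prints the object's state once from its constructor and once from AfterConstruction.

```pascal
program AfterConstructionSketch;

{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}
{$APPTYPE CONSOLE}

type
  // Hypothetical base class that reports the object's state at two
  // moments: inside its constructor and in AfterConstruction.
  TBase = class
  public
    constructor Create;
    procedure AfterConstruction; override;
    function Describe: string; virtual;
  end;

  TDerived = class(TBase)
  private
    fName: string;
  public
    constructor Create(const Name: string);
    function Describe: string; override;
  end;

constructor TBase.Create;
begin
  inherited Create;
  // The derived constructor has not yet assigned its fields.
  Writeln('In TBase.Create:      ', Describe);
end;

procedure TBase.AfterConstruction;
begin
  inherited;
  // Every constructor in the chain has finished by now.
  Writeln('In AfterConstruction: ', Describe);
end;

function TBase.Describe: string;
begin
  Result := '(base)';
end;

constructor TDerived.Create(const Name: string);
begin
  inherited Create;
  fName := Name;
end;

function TDerived.Describe: string;
begin
  Result := 'name = "' + fName + '"';
end;

var
  Obj: TDerived;
begin
  Obj := TDerived.Create('search');
  try
    Writeln('After Create returns: ', Obj.Describe);
  finally
    Obj.Free;
  end;
end.
```

The first line of output shows an empty name, because the base constructor runs before the derived constructor assigns fName. The second line shows the name already set. A thread started from the base constructor could therefore see a half-initialized object, which is the race that starting the thread in AfterConstruction avoids.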

When the application needs the future value, it reads the Value property. The GetValue method waits until the thread is finished. If the thread is already done, Windows returns immediately. If the thread raised an exception, the future object reraises the same exception object at the original exception address. This lets the calling thread handle the exception just as though the future was computed in the calling thread rather than in a separate thread. If everything goes as planned, the future value is returned as a Variant. Example 4-21 lists the implementation of the TFuture class.

// Each Future computes its value in ThreadFunc. Any number of
// ThreadFunc instances can be active at a time. Windows requires a
// thread to catch exceptions, or else Windows shuts down the
// application. ThreadFunc catches exceptions and stores them in the
// Future object.
function ThreadFunc(Param: Pointer): Integer;
var
  Future: TFuture;
  RaiseFrame: PRaiseFrame;
begin
  Result := 0;
  Future := TFuture(Param);
  // The thread must catch all exceptions within the thread.
  // Store the exception object and address in the Future object,
  // to be raised when the value is needed.
  try
    Future.fValue := Null;
    Future.fValue := Future.Compute;
  except
    RaiseFrame := RaiseList;
    if RaiseFrame <> nil then
    begin
      Future.fExceptObject := RaiseFrame.ExceptObject;
      Future.fExceptAddr := RaiseFrame.ExceptAddr;
      RaiseFrame.ExceptObject := nil;
    end;
  end;
end;

{ TFuture }

// Create the Future and start its computation.
constructor TFuture.Create;
begin
  inherited;
  // The default time out is Infinite because a general-purpose
  // future cannot know how long any concrete future should take
  // to finish its task. Derived classes should set a different
  // value that is appropriate to the situation.
  fTimeOut := Infinite;
end;

// The thread is started in AfterConstruction to let the derived class
// finish its constructor in the main thread and initialize the TFuture
// object completely before the thread starts running. This avoids
// any race conditions when initializing the TFuture-derived object.
procedure TFuture.AfterConstruction;
begin
  inherited;
  // Start the Future thread.
  fHandle := BeginThread(nil, 0, ThreadFunc, Self, 0, fThreadID);
  Win32Check(Handle <> 0);
end;

// If the caller destroys the Future object before the thread
// is finished, there is no nice way to clean up. TerminateThread
// leaves the stack allocated and might introduce all kinds of nasty
// problems, especially if the thread is in the middle of a kernel
// system call. A less violent solution is just to let the thread
// finish its job and go away naturally. The Compute function should
// check the Terminated flag periodically and return immediately when
// it is true.
destructor TFuture.Destroy;
begin
  if Handle <> 0 then
  begin
    if not IsReady then
    begin
      Terminate; // Tell the thread to stop.
      try
        GetValue; // Wait for it to stop.
      except
        // Discard any exceptions that are raised now that the
        // computation is logically terminated and the future object
        // is being destroyed. The caller is freeing the TFuture
        // object, and does not expect any exceptions from the value
        // computation.
      end;
    end;
    Win32Check(CloseHandle(Handle));
  end;
  inherited;
end;

// Return true if the thread is finished.
function TFuture.GetIsReady: Boolean;
begin
  Result := WaitForSingleObject(Handle, 0) = Wait_Object_0;
end;

// Wait for the thread to finish, and return its value.
// If the thread raised an exception, reraise the same exception,
// but now in the context of the calling thread.
function TFuture.GetValue: Variant;
resourcestring
  sAbandoned = 'Future thread terminated unexpectedly';
  sTimeOut = 'Future thread did not finish before timeout expired';
begin
  case WaitForSingleObject(Handle, TimeOut) of
    Wait_Abandoned: raise Exception.Create(sAbandoned);
    Wait_TimeOut: raise Exception.Create(sTimeOut);
  else
    if HasException then
      RaiseException;
  end;
  Result := fValue;
end;

function TFuture.HasException: Boolean;
begin
  Result := fExceptObject <> nil;
end;

procedure TFuture.RaiseException;
begin
  raise fExceptObject at fExceptAddr;
end;

// Set the terminate flag, so the Compute function knows to stop.
procedure TFuture.Terminate;
begin
  Terminated := True;
end;

To use a future, derive a class from TFuture and override the Compute method. Create an instance of the derived future class and read its Value property when you need to access the future’s value.

Suppose you want to add a feature to the threaded text editor: when the user is searching for text, you want the editor to search ahead for the next match. For example, the user opens the Find dialog box, enters a search string, and clicks the Find button. The editor finds the text and highlights it. While the user checks the result, the editor searches for the next match in the background.

This is a perfect job for a future. The future searches for the next match and returns the starting position of the match. The next time the user clicks the Find button, the editor gets the result from the future. If the future is not yet finished searching, the editor waits. If the future is done, it returns immediately, and the user is impressed by the speedy result, even when searching a large file.

The future keeps a copy of the file’s contents, so you don’t have to worry about multiple threads accessing the same file. This is not the best architecture for a text editor, but it demonstrates how a future can be used effectively. Example 4-22 lists the TSearchFuture class, which does the searching.

type
  TSearchFuture = class(TFuture)
  private
    fEditorText: string;
    fFindPos: LongInt;
    fFindText: string;
    fOptions: TFindOptions;
    procedure FindDown(out Result: Variant);
    procedure FindUp(out Result: Variant);
  public
    constructor Create(Editor: TRichEdit; Options: TFindOptions;
      const Text: string);
    function Compute: Variant; override;
    property EditorText: string read fEditorText;
    property FindPos: LongInt read fFindPos write fFindPos;
    property FindText: string read fFindText;
    property Options: TFindOptions read fOptions;
  end;

{ TSearchFuture }

constructor TSearchFuture.Create(Editor: TRichEdit;
  Options: TFindOptions; const Text: string);
begin
  inherited Create;
  TimeOut := 30000; // Expect the future to do its work in < 30 sec.
  // Save the basic search parameters.
  fEditorText := Editor.Text;
  fOptions := Options;
  fFindText := Text;
  // Start searching at the end of the current selection,
  // to avoid finding the same text over and over again.
  fFindPos := Editor.SelStart + Editor.SelLength;
end;

// Simple-minded search.
function TSearchFuture.Compute: Variant;
begin
  if not (frMatchCase in Options) then
  begin
    fFindText := AnsiLowerCase(FindText);
    fEditorText := AnsiLowerCase(EditorText);
  end;
  if frDown in Options then
    FindDown(Result)
  else
    FindUp(Result);
end;

procedure TSearchFuture.FindDown(out Result: Variant);
var
  Next: PChar;
begin
  // Find the next match.
  Next := AnsiStrPos(PChar(EditorText) + FindPos, PChar(FindText));
  if Next = nil then
    // Not found.
    Result := -1
  else
  begin
    // Found: return the position of the start of the match.
    FindPos := Next - PChar(EditorText);
    Result := FindPos;
  end;
end;

If the user edits the file, changes the selection, or otherwise invalidates the search, the editor frees the future because it is no longer valid. The next time the user starts a search, the editor must start up a brand-new future. Example 4-23 shows the relevant method for the TMDIChild form.

// If the user changes the selection, restart the background search.
procedure TMDIChild.EditorSelectionChange(Sender: TObject);
begin
  if Future <> nil then
    RestartSearch;
end;

// When the user closes the Find dialog, stop the background
// thread because it probably isn't needed any more.
procedure TMDIChild.FindDialogClose(Sender: TObject);
begin
  FreeAndNil(fFuture);
end;

// Restart the search thread after a search parameter changes.
procedure TMDIChild.RestartSearch;
begin
  FreeAndNil(fFuture);
  fFuture := TSearchFuture.Create(Editor, FindDialog.Options,
    FindDialog.FindText);
end;

When the user clicks the Find button, the TFindDialog object fires its OnFind event. The event handler first checks whether it has a valid future, and if not, it starts a new future. Thus, all searching takes place in a background thread. The TSearchFuture object returns the position of the next match or -1 if the text cannot be found. Most of the work of the event handler is scrolling the editor to make sure the selected text is visible; managing the search future is trivial in comparison, as you can see in Example 4-24.

// The user clicked the Find button in the Find dialog.
// Get the next match, which might already have been found
// by a search future.
procedure TMDIChild.FindDialogFind(Sender: TObject);
var
  FindPos: LongInt;
  SaveEvent: TNotifyEvent;
  TopLeft, BottomRight: LongWord;
  Top, Left, Bottom, Right: LongInt;
  Pos: TPoint;
  SelLine, SelChar: LongInt;
  ScrollLine, ScrollChar: LongInt;
begin
  // If the search has not yet started, or if the user changed
  // the search parameters, restart the search. Otherwise, the
  // future has probably already found the next match. In either
  // case the reference to Future.Value will wait until the search
  // is finished.
  if (Future = nil) or
     (Future.FindText <> FindDialog.FindText) or
     (Future.Options <> FindDialog.Options)
  then
    RestartSearch;
  FindPos := Future.Value;
  if FindPos < 0 then
    MessageBeep(Mb_IconWarning)
  else
  begin
    // Bring the focus back to the editor, from the Find dialog.
    Application.MainForm.SetFocus;
    // Temporarily disable the selection change event
    // to prevent the future from being restarted until after
    // the selection start and length have both been set.
    SaveEvent := Editor.OnSelectionChange;
    try
      Editor.OnSelectionChange := nil;
      Editor.SelStart := FindPos;
      Editor.SelLength := Length(FindDialog.FindText);
    finally
      Editor.OnSelectionChange := SaveEvent;
    end;
    // Start looking for the next match.
    RestartSearch;
    // Scroll the editor to bring the selection in view.
    // Start by getting the character and line index of the top-left
    // corner of the rich edit control.
    Pos.X := 0;
    Pos.Y := 0;
    TopLeft := Editor.Perform(Em_CharFromPos, 0, LParam(@Pos));
    Top := Editor.Perform(Em_LineFromChar, Word(TopLeft), 0);
    Left := Word(TopLeft) - Editor.Perform(Em_LineIndex, Top, 0);
    // Then get the line & column of the bottom-right corner.
    Pos.X := Editor.ClientWidth;
    Pos.Y := Editor.ClientHeight;
    BottomRight := Editor.Perform(Em_CharFromPos, 0, LParam(@Pos));
    Bottom := Editor.Perform(Em_LineFromChar, Word(BottomRight), 0);
    Right := Word(BottomRight) -
      Editor.Perform(Em_LineIndex, Bottom, 0);
    // Is the start of the selection in view?
    // If the line is not in view, scroll vertically.
    SelLine := Editor.Perform(Em_ExLineFromChar, 0, FindPos);
    if (SelLine < Top) or (SelLine > Bottom) then
      ScrollLine := SelLine - Top
    else
      ScrollLine := 0;
    // If the column is not visible, scroll horizontally.
    SelChar := FindPos - Editor.Perform(Em_LineIndex, SelLine, 0);
    if (SelChar < Left) or (SelChar > Right) then
      ScrollChar := SelChar - Left
    else
      ScrollChar := 0;
    Editor.Perform(Em_LineScroll, ScrollChar, ScrollLine);
  end;
end;

The major advantage of using futures is their simplicity. You can often implement the TFuture-derived class as a simple, linear subroutine (albeit one that checks Terminated periodically). Using a future is as simple as accessing a property. All the synchronization is handled automatically by TFuture.

Concurrent programming can be tricky, but with care and caution, you can write applications that use threads and processes correctly, efficiently, and effectively.
