Automated Testing Isn’t

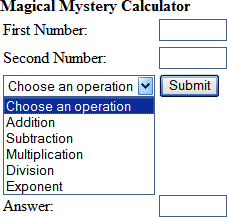

It’s very tempting to speak of “automated testing” as if it were “automated manufacturing,” where we have the robot doing the exact same thing as the thinking human. So we take an application like the one shown in Figure 16-1 with a simple test script like this:

Enter 4 in the first box.

Enter 4 in the second box.

Select the Multiply option from the Operations drop-down.

Press Submit.

Expect “16” in the answer box.

Figure 16-1. A very simple application

We get a computer to do all of those steps, and call it automation. The problem is that there is a hidden second expectation at the end of every test case documented this way: “And nothing else odd happened.”
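A minimal sketch of that automation in Python, assuming a hypothetical in-memory `CalculatorForm` that stands in for the real GUI (no actual UI-driver library is used, and the class and function names are illustrative only). Each statement in `multiply_script` mirrors one step of the written script, and the single comparison at the end is the only expectation the computer actually checks.

```python
from dataclasses import dataclass


@dataclass
class CalculatorForm:
    """Hypothetical in-memory stand-in for the form in Figure 16-1."""

    first: str = ""
    second: str = ""
    operation: str = "Plus"  # assumed default drop-down selection
    answer: str = ""

    def submit(self) -> None:
        # Apply the selected operation to the two entered values.
        operations = {
            "Plus": lambda a, b: a + b,
            "Multiply": lambda a, b: a * b,
        }
        result = operations[self.operation](int(self.first), int(self.second))
        self.answer = str(result)


def multiply_script(form: CalculatorForm) -> bool:
    """Replay the written test script; True if its one expectation holds."""
    form.first = "4"             # Enter 4 in the first box.
    form.second = "4"            # Enter 4 in the second box.
    form.operation = "Multiply"  # Select Multiply from the Operations drop-down.
    form.submit()                # Press Submit.
    return form.answer == "16"   # Expect "16" in the answer box.


print(multiply_script(CalculatorForm()))  # True
```

Nothing in `multiply_script` looks at the drop-down, the buttons, or anything else on the form after Submit; the hidden “nothing else odd happened” expectation is simply not encoded.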

The simplest way to deal with this “nothing else odd” is to capture the entire screen and compare it across runs, but then any time a developer moves a button, or you change the screen resolution, color scheme, or anything else, the test will throw a false error.
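A minimal sketch of whole-screen comparison, assuming a screenshot is nothing more than a grid of pixel values. `capture` and `screens_match` are hypothetical helpers written for illustration, not part of any real screenshot or image library. The baseline is compared against four later “runs,” only one of which is truly identical.

```python
Screen = list[list[int]]  # hypothetical screenshot: rows of pixel values


def capture(width: int, height: int, button_x: int, button_color: int = 255) -> Screen:
    """Hypothetical renderer: a blank screen with a 2-pixel-wide button
    drawn on the bottom row, starting at column button_x."""
    screen = [[0] * width for _ in range(height)]
    for x in range(button_x, button_x + 2):
        screen[height - 1][x] = button_color
    return screen


def screens_match(baseline: Screen, current: Screen) -> bool:
    # Whole-screen comparison: any pixel difference counts as a failure.
    return baseline == current


baseline = capture(width=8, height=4, button_x=2)

checks = {
    "identical rerun": capture(8, 4, button_x=2),
    "button moved one pixel": capture(8, 4, button_x=3),
    "new color scheme": capture(8, 4, button_x=2, button_color=200),
    "higher resolution": capture(10, 5, button_x=2),
}

for name, screen in checks.items():
    print(f"{name}: {'pass' if screens_match(baseline, screen) else 'FAIL'}")
```

Only the identical rerun passes; the other three report failures even though none of those changes is a defect in the calculator.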

These days it’s more common to check only the exact assertion, which means you miss things like the following:

An iconâs background color is not transparent.

After Submit is pressed, the Operations drop-down changes back to its default of Plus, so the form reads “4 + 4 = 16.”

After the second value is entered, the cancel button becomes disabled.

The Answer box is editable when it should be disabled (grayed out).

The operation took eight ...
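A minimal sketch contrasting the exact assertion with a broader check, assuming a hypothetical `FormState` snapshot of what a tester could observe after Submit. The field names are illustrative, and the expectation that the Cancel button stays enabled is an assumption made for the example. The buggy snapshot exhibits three of the oddities listed above.

```python
from dataclasses import dataclass


@dataclass
class FormState:
    """Hypothetical snapshot of what a tester could observe after Submit."""

    answer: str
    operation: str
    answer_editable: bool
    cancel_enabled: bool


def exact_assertion(state: FormState) -> list[str]:
    # The common style of check: only the answer is examined.
    return [] if state.answer == "16" else [f"answer is {state.answer!r}"]


def broader_check(state: FormState) -> list[str]:
    # Also encode some "nothing else odd" expectations explicitly.
    problems = exact_assertion(state)
    if state.operation != "Multiply":
        problems.append(f"operation reset to {state.operation!r}")
    if state.answer_editable:
        problems.append("answer box is editable")
    if not state.cancel_enabled:  # assumed: Cancel should stay enabled
        problems.append("cancel button is disabled")
    return problems


# A buggy run showing three of the oddities listed above.
observed = FormState(answer="16", operation="Plus",
                     answer_editable=True, cancel_enabled=False)

print(exact_assertion(observed))
print(broader_check(observed))
```

The exact assertion reports no problems, while the broader check reports three. Even so, the broader check knows only about the oddities someone thought to write down; it still says nothing about the icon background or how long the operation took.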
