3.6 ENTROPY CODING

It is worthwhile to consider the theoretical limits for the minimum number of bits required to represent an audio sample. Shannon, in his mathematical theory of communication [Shan48], proved that the minimum number of bits required to encode a message, X, is given by the entropy, He(X). The entropy of an input signal can be defined as follows. Let X = [x1, x2, … , xN] be the input data vector of length N and pi be the probability that i-th symbol (over the symbol set, V = [υ1, υ2, … , υK]) is transmitted. The entropy, He(X), is given by

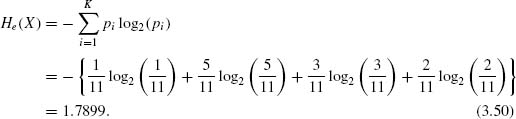

![]()

In simple terms, entropy is a measure of uncertainty of a random variable. For example, let the input bitstream to be encoded be X = [4 5 6 6 2 5 4 4 5 4 4], i.e., N = 11; symbol set, V = [2 4 5 6] and the corresponding probabilities are ![]() , respectively, with K = 4. The entropy, He(X), can be computed as follows:

, respectively, with K = 4. The entropy, He(X), can be computed as follows:

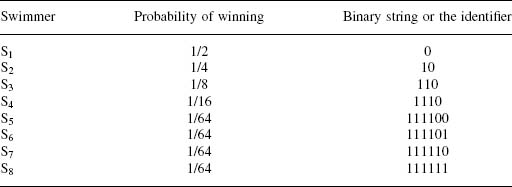

Table 3.3. An example entropy code for Example 3.2.

Example 3.2

Consider eight swimmers {S1, S2, S3, S4, S5, S6, S7, and S8} in a race with win probabilities {1/2, 1/4, 1/8, 1/16, 1/64, 1/64, 1/64, and 1/64}, respectively. ...

Get Audio Signal Processing and Coding now with the O’Reilly learning platform.

O’Reilly members experience books, live events, courses curated by job role, and more from O’Reilly and nearly 200 top publishers.