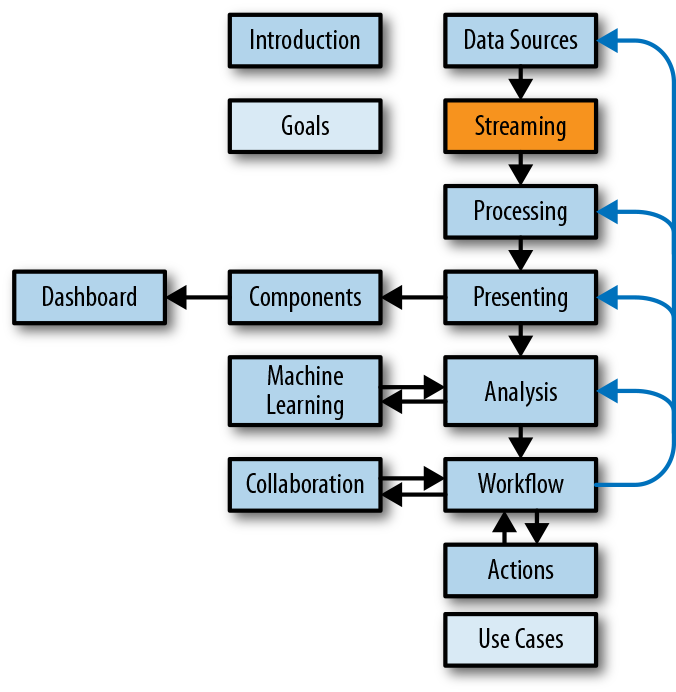

Chapter 4. Streaming Your Data

This chapter covers some specifics of how to stream data that you have identified. There are always more ways to stream data, but this covers the most common methods currently in use.

There are two important aspects to consider with any streaming data endeavor: publication and subscription. Both must be in place to effectively stream data for a visualization. Regardless of your data source, you will need to publish the data into a stream. Streams of data are often divided into channels and sometimes further divided into events. A service needs to exist to publish to, and a client needs to be connected to that service to publish events into it. This is an important detail to consider when evaluating data streaming services. First you need to get your data into the service; then you need to subscribe to a data stream. The exact method you use for this will depend on the service you choose. You will then receive the stream of data that is published, filtered by channels and events in some cases. This is the data you will be able to visualize.

Many publishers and many subscribers can be working within the same data stream. This is one of the advantages of streaming data: it lends itself well to a distributed architecture. You could have a large collection of servers publish their errors into a data stream and have multiple subscribers to that data stream for different purposes. Subscribers could include an operations dashboard, event-based fixit scripts, alert generators, and interactive analysis tools. It is also possible to have the service and publishing components combined. This can be easy to deploy but limits options on combining information from multiple sources. websocketd is a good example of a service combined with a publisher that is easy to set up and creates a single publisher to a data stream.

Key things to consider in choosing a service for streaming data are cost, scalability, maintenance, security, and any special requirements you have, like programming language support. Table 4-1 lists some popular examples.

| Service | Licensing | Notes |
|---|---|---|
| Socket.IO | Open source | A popular and easy standard for building your own streaming. |
| SocketCluster | Open source | A distributed version of Socket.IO for scalability. |
| websocketd | Open source | Utility to stream anything from a console. |
| WebSockets | Standard | A standard supported by most browsers. The current baseline for all streaming services. |
| Pusher | Commercial | A complete streaming server solution. Paid service. |
| PubNub | Commercial | A complete streaming server solution. Paid service. |

How to Stream Data

A few scenarios can cover most needs in streaming data. We'll look at each of these in turn:

Using a publish/subscriber channel or message queue

Streaming file contents

Emitting messages from something that is already streaming

Streaming from the console

Polling a service or API

A publish/subscriber channel is a mechanism for publishing events into a channel to be consumed by all those who subscribe to it. This is a pattern intended for streaming data and is also known as pub/sub. Pub/sub libraries will handle all of the connections for you, but you may need to buffer the data yourself.
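To make the pattern concrete, here is a minimal in-memory sketch of pub/sub with channels and events. It is not how any of the services in Table 4-1 are implemented; the `PubSubHub` class, the `server-errors` channel, and the event names are all hypothetical and exist only to show the shape of publishing and subscribing.

```javascript
// Minimal in-memory pub/sub hub (illustrative only; not a real service).
class PubSubHub {
  constructor() {
    // Map of channel name -> Set of subscriber callbacks
    this.channels = new Map();
  }

  // Register a callback for a channel; returns a function that unsubscribes.
  subscribe(channel, callback) {
    if (!this.channels.has(channel)) {
      this.channels.set(channel, new Set());
    }
    this.channels.get(channel).add(callback);
    return () => this.channels.get(channel).delete(callback);
  }

  // Deliver an event to every subscriber of the channel.
  // Returns the number of subscribers that received it.
  publish(channel, eventName, data) {
    const subscribers = this.channels.get(channel);
    if (!subscribers) return 0;
    for (const callback of subscribers) {
      callback({ channel, event: eventName, data });
    }
    return subscribers.size;
  }
}

// Usage: two subscribers with different purposes on the same channel.
const hub = new PubSubHub();
const dashboard = [];
const alerts = [];
hub.subscribe('server-errors', (msg) => dashboard.push(msg.data));
const stopAlerts = hub.subscribe('server-errors', (msg) => {
  if (msg.event === 'fatal') alerts.push(msg.data);
});

hub.publish('server-errors', 'warning', 'disk at 91%');
hub.publish('server-errors', 'fatal', 'out of memory');
stopAlerts(); // the alert subscriber leaves; the dashboard keeps listening
hub.publish('server-errors', 'fatal', 'kernel panic');
```

A real service adds the network connections, authentication, and delivery across machines; the subscribe and publish calls usually look similar from the client's point of view.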

Streaming file contents may sound odd because they're static and can be run through a program to analyze at will or in a batch. But you may still want to do this if your file is the beginning of a workflow of multiple components that you want to monitor as it occurs. For example, if you have a long list of URLs in a file to run against several APIs and services, it may take a while to get the results back. When you do, it would be a shame to find out that an error had occurred or you'd gathered the wrong information, or they'd all ended up redirecting to the same spot. These are things that would stand out if you were streaming the lines of the file to a service and seeing the results as they returned.

To stream the contents of a file, you can either have a service hold the file open and stream the contents at an interval per record or dump the contents of the file into a service and allow it to keep it in memory while it emits the records at an interval. If the file is below a record count that can comfortably fit into memory (maybe less than 1 GB of records), then the second method is preferred. If the file is much larger than you want to store in memory, keep it open and stream at the interval you need. If you are able to work with the file in memory, you have the added advantage of being able to do some sorts on the data before sending. Papa Parse is an easy-to-implement library that you can use to stream your files. It allows you to attach each line to an event so that you can stream it:

    Papa.parse(bigFile, {
      worker: true,
      step: function (results) {
        // results.data holds the row that was just parsed
        YourStreamingEventFunction(results.data);
      }
    });
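The in-memory approach described above (load the records, optionally sort them, then emit them at an interval) can be sketched without any library. This is only an illustration: `streamRecords`, its `schedule` parameter, and the sample file contents are hypothetical, and a real program would read the text from disk rather than from a string literal.

```javascript
// Emit in-memory records one at a time at a fixed interval.
// `schedule` defaults to setTimeout; it is injectable so the pacing
// mechanism can be swapped out or tested.
function streamRecords(records, intervalMs, emit, schedule = setTimeout) {
  let index = 0;
  function next() {
    if (index >= records.length) return;
    emit(records[index]);
    index += 1;
    // Only schedule another step while records remain.
    if (index < records.length) schedule(next, intervalMs);
  }
  next();
}

// Assumed file contents: one hostname per line.
const fileText = 'b.example.com\na.example.com\nc.example.com\n';

// Because everything is in memory, the records can be sorted before sending.
const lines = fileText
  .split('\n')
  .filter((line) => line.length > 0)
  .sort();

// Send one line every 250 ms; console.log stands in for a publish call.
streamRecords(lines, 250, (line) => console.log(line));
```

For a file too large to hold in memory, the same `emit` callback could instead be driven from a parser's per-row callback, at the cost of losing the ability to sort first.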

Many applications stream data between components. Many of them have a convenient spot to tap into and emit events as they occur. For example, you can stream a MySQL binlog that's used for replication by using an app that watches the binlog as if it were a replication server and emits the information as JSON. One such library for MySQL and Node.js is ZongJi:

    var zongji = new ZongJi({ /* ... MySQL Connection Settings ... */ });
    // Each change to the replication log results in an event
    zongji.on('binlog', function (objMsg) {
      fnOnMessage(objMsg);
    });

Similar libraries can be found for other data storage technologies and anything that has multiple components.

Streaming from a console gets back to the original streaming data example of watching tailed logs scroll by. By turning a scrolling console into a streaming data source, you can add a lot of processing and interaction to the data instead of just watching it fly by. websocketd is a convenient little open source application that will allow you to turn anything emitted in a console into a WebSocket with the lines sent to the console as messages. These will need to be parsed into some sort of structure to be useful. You can create multiple instances that run in the background or as services. This can be very effective to get a good idea of what's going on and what's useful, but may not be a long-term solution.

To start websocketd and create a stream, use a shell command like the following:

websocketd --port=8080 ./script-with-output.sh

Then connect to the websocketd stream in JavaScript:

    // Set up WebSocket with callbacks
    var ws = new WebSocket('ws://localhost:8080/');
    ws.onmessage = function (objMsg) {
      fnOnMessage(objMsg);
    };
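Each message from websocketd arrives as a plain line of text in the message's `data` property, so it needs to be parsed before it is useful. The sketch below assumes, purely for illustration, that the script prints lines of the form `<timestamp> <LEVEL> <message>`; the `parseLogLine` name and that line format are hypothetical, and your own output will need its own parser.

```javascript
// Parse one console line of the assumed form "<timestamp> <LEVEL> <message>".
// Returns null for lines that do not match so callers can skip them.
function parseLogLine(line) {
  const match = /^(\S+)\s+([A-Z]+)\s+(.*)$/.exec(line.trim());
  if (!match) return null;
  return { timestamp: match[1], level: match[2], message: match[3] };
}

// In the browser, the raw line is available as objMsg.data:
// ws.onmessage = function (objMsg) {
//   var record = parseLogLine(objMsg.data);
//   if (record) fnOnMessage(record);
// };

const example = parseLogLine('2024-05-01T12:00:00Z ERROR disk full on /dev/sda1');
console.log(example);
```

Returning `null` for unmatched lines keeps stray console output (banners, blank lines) from reaching the visualization as malformed records.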

Finally, polling an API is an attractive option because APIs are everywhere. They are the most prolific method of data exchange between systems. There are several types of APIs, but most of them will return either a list of results or details on a record returned in a previous response. Both of these work well in an interactive streaming data visualization client. The API polling workflow is as follows:

Call the API for a list.

Stream the list to the client at a reasonable interval.

Run any processing and filters that fit your goals.

For any records that pass the conditions to get details, make the details API call.

Show the results of the details API call as they return.

When you reach the end of the list, make another API call for the list.

Stream only the new items from the API call.

Repeat.
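The core of this workflow is remembering what has already been streamed so that each new list call only contributes new items. A minimal sketch follows, assuming every item carries a unique `id` field; `takeNewItems`, `pollOnce`, `fetchList`, `fetchDetails`, and `needsDetails` are hypothetical names standing in for your own API wrappers and filters, and pacing of the emitted items is left out for brevity.

```javascript
// Return only the items whose id has not been seen yet, and remember them.
function takeNewItems(seenIds, items) {
  const fresh = [];
  for (const item of items) {
    if (!seenIds.has(item.id)) {
      seenIds.add(item.id);
      fresh.push(item);
    }
  }
  return fresh;
}

// One pass of the workflow. fetchList and fetchDetails are hypothetical
// async functions wrapping your API; emit sends a record to the client.
async function pollOnce(seenIds, fetchList, fetchDetails, needsDetails, emit) {
  const list = await fetchList();
  for (const item of takeNewItems(seenIds, list)) {
    emit({ type: 'item', item });
    if (needsDetails(item)) {
      // Show details whenever they return, without blocking the list.
      fetchDetails(item.id)
        .then((details) => emit({ type: 'details', details }))
        .catch((err) => emit({ type: 'error', id: item.id, message: String(err) }));
    }
  }
}

// Usage of the deduplication step across two list calls.
const seen = new Set();
const firstCall = takeNewItems(seen, [{ id: 1 }, { id: 2 }]);
const secondCall = takeNewItems(seen, [{ id: 2 }, { id: 3 }]);
console.log(firstCall.length, secondCall); // second call yields only id 3
```

Calling `pollOnce` again after each pass completes (for example, from a timer) gives the repeat step; the `seen` set is what keeps repeated list calls from re-streaming old items.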

Using one of the options described here, you can stream just about anything you would like to and get a live view of what is transpiring.

Buffering

If we suppose that an analyst can understand up to 20 records per second while intently watching the screen, it may not make sense to fly past that limit when data is coming in faster than that. Buffering allows data to be cached before going to the next step; it means you can intelligently stream records to a downstream process instead of being at the mercy of the speed of the incoming data. This makes a big difference in the following instances:

When the data is streaming in much faster than it can be consumed

When the data source is unpredictable, and the data can come in large bursts

When the data comes in all at once but needs to be streamed out

Cross these scenarios with the likely data needs of an analyst, and you start to have some logic that maintains the position of the analyst's view within the buffer as well as the buffer itself. The analyst's views in the buffer are:

Newest data (data as it arrives)

Oldest data (data about to leave the buffer)

Samples of data (random selections from the buffer)

Any of these buffer views can be mixed with conditions on what records are worth viewing to filter out anything unneeded for the current task. To meet all of these conditions, the following rules can be applied in your buffering logic:

Put all incoming data into a large cache.

If the data is larger than the cache, decide what to do with it. You can

Replace the entire contents of the cache with the new data.

Accept a portion of the new data if you have not seen enough of the previous data.

Grow the cache.

Set the viewable items within the cache to correspond to the buffer view required by the analyst.

When new data is added at a rate that can be easily consumed, move it into the cache and the views where appropriate.

When data is added too quickly to be consumed intelligently, trickle it in at a pace that is suitable.

When data is not arriving at all, start streaming records that haven't been seen yet.
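The rules above can be sketched as a small class that holds a bounded cache, a queue of records waiting to be trickled in, and the three views. This is one possible reading of the rules, not a definitive design: the `StreamBuffer` name, its methods, and the choice to keep only the newest part of an oversized burst are assumptions made for this example.

```javascript
// Bounded cache with three views: newest, oldest, and a random sample.
class StreamBuffer {
  constructor(capacity) {
    if (capacity < 1) throw new RangeError('capacity must be at least 1');
    this.capacity = capacity;
    this.cache = [];   // oldest record first
    this.pending = []; // records waiting to be trickled into the cache
  }

  // Accept incoming records. A burst larger than the cache keeps only its
  // newest `capacity` records (one of the options listed above).
  add(records) {
    this.pending.push(...records.slice(-this.capacity));
  }

  // Move at most `maxRecords` pending records into the cache, evicting the
  // oldest when full. Calling this on a timer sets the viewing pace, and it
  // keeps feeding unseen records even when no new data is arriving.
  tick(maxRecords) {
    const batch = this.pending.splice(0, maxRecords);
    this.cache.push(...batch);
    if (this.cache.length > this.capacity) {
      this.cache.splice(0, this.cache.length - this.capacity);
    }
    return batch;
  }

  newest(count) {
    return count > 0 ? this.cache.slice(-count) : [];
  }

  oldest(count) {
    return this.cache.slice(0, Math.max(count, 0));
  }

  // Random selection without replacement; `random` is injectable for tests.
  sample(count, random = Math.random) {
    const pool = this.cache.slice();
    const picked = [];
    while (picked.length < count && pool.length > 0) {
      const i = Math.floor(random() * pool.length);
      picked.push(pool.splice(i, 1)[0]);
    }
    return picked;
  }
}

const buffer = new StreamBuffer(5);
buffer.add([1, 2, 3, 4, 5, 6, 7, 8]); // burst larger than the cache: keeps 4..8
buffer.tick(3); // trickle in 4, 5, 6
buffer.tick(3); // trickle in 7, 8
```

With the 20-records-per-second figure used earlier, calling `tick(20)` once per second would hold the display to a pace an analyst can follow, regardless of how fast `add` is called.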

Figure 4-1 shows the relationship between a changing cache and moving views within it.

Figure 4-1. Buffer views and movement in relation to a cache for streaming data

Streaming Best Practices

These are guidelines to consider implementing in your data streaming strategy:

Stream data as something that is fire-and-forget, not as blocking for any process or as a reliable record.

Use a protocol that encrypts the data in transport for anything sensitive. Without an encrypted transport such as WSS, a simple packet sniffer can see the data in plain text passively.

Create multiple channels or queues to organize streams of data. Each channel can be assigned a different purpose and set of processes.

Don't mix data schemas in the same channel. This makes it much easier to process anything within that channel.

Separate analyst feedback events into a separate channel. This helps with the processes of team collaboration and peer review, and can also help prevent feedback loops.

Consider a distributed and coordinated service if your scale goes beyond what a single server can handle. In some instances, having multiple clients connected and getting different results at different times causes confusion (and worse).

Use technologies that abstract the specific streaming protocol and allow multiple protocols to be used easily and interchangeably.
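As one illustration of the schema guideline above, a publisher could check each record against the shape already established for its channel before sending it. This is a rough sketch under a strong simplifying assumption: the "schema" is just the sorted list of top-level keys, and the `ChannelSchemas` class and channel names are hypothetical.

```javascript
// Guard that keeps each channel to a single record shape.
// The shape is the sorted list of top-level keys (an assumption made for
// brevity; a real schema check would usually also verify value types).
class ChannelSchemas {
  constructor() {
    this.shapes = new Map(); // channel name -> key signature
  }

  // Returns true if the record matches the channel's shape. The first
  // record seen on a channel defines that shape.
  accepts(channel, record) {
    const signature = Object.keys(record).sort().join(',');
    if (!this.shapes.has(channel)) {
      this.shapes.set(channel, signature);
      return true;
    }
    return this.shapes.get(channel) === signature;
  }
}

const schemas = new ChannelSchemas();
const results = [
  schemas.accepts('errors', { host: 'web1', code: 500 }),
  schemas.accepts('errors', { code: 404, host: 'web2' }),     // same keys, any order
  schemas.accepts('errors', { analyst: 'kim', note: 'dup' }), // feedback belongs elsewhere
  schemas.accepts('feedback', { analyst: 'kim', note: 'dup' })
];
console.log(results);
```

Rejected records are a hint that a new channel is needed, which also lines up with keeping analyst feedback in a channel of its own.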

Going from not streaming data to streaming data might be the largest obstacle in producing a working solution that you can show to others to get feedback. It will require some access to data or systems that are not typical. If you can get a plug-in or official feature to stream what you need, that's always your best bet. If not, you can still connect at the points mentioned here.
