Chapter 3. Breaking Entropy

Understanding Entropy

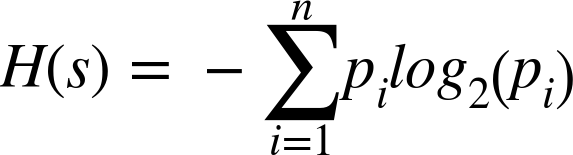

Because he had nothing better to do, Dr. Shannon called the LOG2 version of a number entropy, or the smallest number of bits required to represent a value. He further extended the concept of entropy (why not recycle terminology...) to entire data sets, where you could describe the smallest number of bits needed to represent the entire data set. He worked out all the math and gave us this lovely formula for H(s)1 as the Entropy of a Set:

This might look rather intimidating,2 so let’s pick it apart:3

- en·tro·py

-

(noun)

A thermodynamic quantity representing the unavailability of a system’s thermal energy for conversion into mechanical work, often interpreted as the degree of disorder or randomness in the system. (wrt physics)

Lack of order or predictability; gradual decline into disorder. (wrt H.P. Lovecraft)

A logarithmic measure of the rate of transfer of information in a particular message or language. (in information theory)

To be practical and concrete, let’s begin with a group of letters; for example:

G = [A,B,B,C,C,C,D,D,D,D]4

First, we calculate the set S of the data grouping G. (This is “set” in the mathematical sense: a group of numbers that occur only once, and whose ordering doesn’t matter.)

S = set(G) = [A,B,C,D]

This is the set of unique symbols in G.

Next, we calculate the probability of occurrence for each ...

Get Understanding Compression now with the O’Reilly learning platform.

O’Reilly members experience books, live events, courses curated by job role, and more from O’Reilly and nearly 200 top publishers.