Chapter 4. Conditional Promises—and Deceptions

Not all promises are made without attached conditions. For instance, we might promise to pay for something “cash on delivery” (i.e., only after a promised item has been received). Such promises will be of central importance in discussing processes, agreements, and trading.

The Laws of Conditional Promising

The truth or falsity of a condition may be promised as follows. An agent can promise that a certain fact is true (by its own assessment), but this is of little value if the recipient of the promise does not share this assessment.

- I promise X only if condition Y is satisfied.

A promise that is made subject to a condition that is not assessable cannot be considered a promise. However, if the state of the condition that predicates it has also been promised, completing a sufficient level of information to make an assessment, then it can be considered a promise. The trustworthiness of the promise is up to the assessing agent to decide. So there is at least as much trust required to assess conditionals as there is for unconditional promises.

We can try to state this as a rule:

A conditional promise cannot be assessed unless the assessor also sees that the condition itself is promised.

I call this quenching of the conditionals. Conversely:

If the condition is promised to be false, the remaining promise is rendered empty, or worthless.

A conditional promise is not a promise unless the condition itself is also promised.
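The rule and its converse can be sketched as a small three-way assessment. This is only an illustration, not notation from Promise Theory: the names `ConditionalPromise` and `assess`, and the three result strings, are assumptions introduced here. The assessor's view of the condition is `None` when it sees no promise about the condition at all.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ConditionalPromise:
    """'I promise `body` only if `condition` is satisfied' (hypothetical model)."""
    body: str
    condition: str


def assess(promise: ConditionalPromise, condition_promised: Optional[bool]) -> str:
    """Classify a conditional promise from the assessor's point of view.

    condition_promised is None when the assessor sees no promise about the
    condition, True when it is promised to hold, False when promised not to.
    """
    if condition_promised is None:
        return "not assessable"  # the condition itself has not been promised
    if condition_promised:
        return "assessable"      # quenched: the assessor may now judge trust
    return "empty"               # condition promised false: nothing remains


cod = ConditionalPromise(body="payment", condition="item delivered")
print(assess(cod, None))   # not assessable
print(assess(cod, True))   # assessable
print(assess(cod, False))  # empty
```

Note that "assessable" does not mean "trusted". Whether the promise is believed remains the assessor's own decision.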

Note that because promising the truth of a condition and promising some kind of service are two different types of promises, we must then deduce that both of these promises are (i) made to and from the same set of agents, and therefore (ii) calibrated to the same standards of trustworthiness. Thus, logically we must define it to be so.

We can generalize these thoughts for promises of a more general nature by using the following rules.

Local Quenching of Conditionals

If a promise is provided, subject to the provision of a prerequisite promise, then the provision of the prerequisite by the same agent is completely equivalent to the unconditional promise being made:

- I promise X if Y.
- I promise Y, too.

This combination is the same as “I promise X unconditionally.” The regular rules of good old simplistic Boolean logic now apply within the same agent. If they didn’t, we would consider the agent irrational.

This appeal to local logic gives us a rewriting rule for promises made by a single agent in the promise graph. The + is used to emphasize that X is being offered, and to contrast this with the next case.
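As a rough sketch, the local rewriting rule might be coded as follows. The `Promise` tuple and the function `quench_locally` are hypothetical names, and the sketch assumes that the prerequisite Y must itself be promised unconditionally by the same agent before X is rewritten.

```python
from typing import NamedTuple, Optional, Set


class Promise(NamedTuple):
    agent: str
    body: str
    condition: Optional[str] = None  # None means the promise is unconditional


def quench_locally(promises: Set[Promise]) -> Set[Promise]:
    """Rewrite 'X if Y' as plain 'X' when the SAME agent also promises Y.

    The rewrite repeats until nothing changes, so that chains such as
    'X if Y', 'Y if Z', 'Z' made by one agent are fully resolved.
    """
    current = set(promises)
    while True:
        unconditional = {(p.agent, p.body) for p in current if p.condition is None}
        rewritten = {
            Promise(p.agent, p.body) if (p.agent, p.condition) in unconditional else p
            for p in current
        }
        if rewritten == current:
            return current
        current = rewritten


same_agent = {Promise("A", "X", "Y"), Promise("A", "Y")}
print(quench_locally(same_agent) == {Promise("A", "X"), Promise("A", "Y")})  # True

# A different agent promising Y does not trigger the local rule.
two_agents = {Promise("A", "X", "Y"), Promise("B", "Y")}
print(quench_locally(two_agents) == two_agents)  # True
```

The second case is deliberately left untouched: when Y comes from another agent, ordinary Boolean reduction inside one agent no longer applies.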

Assisted Promises

Consider now a case in which one agent assists another in keeping a promise. According to our axioms, an agent can only promise its own behaviour; thus the promise being made comes only from the principal agent, not the assistant. The assistant might not even be known to the final promisee.1 Assistance is a matter of voluntary cooperation on the part of the tertiary agent.

So, if a promise is once again provided, subject to the promise of a prerequisite condition, then the quenching of that condition by a separate assistant agent might also be acceptable, provided the principal promiser also promises to acquire the service from the assistant.

- I promise X if Y.
- I have been promised Y.
- I promise to use Y.

This combination is now equivalent to “I can probably promise X.”

Notice that, when quenched by an assistant, the trustworthiness is now an assessment about multiple agents, not merely the principal. A recipient of such a promise might assess the trustworthiness of the promise differently, given that other agents are involved, so it would be questionable (even a lie or deception) to reduce the three promises to a single promise of X, without qualification. The promises are similar, but not identical, as the assessment takes into account the uncertainties in the information provided.

I call this an assisted promise.
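A minimal sketch of this reduction is shown below. The point is that the result is not a bare promise of X: it carries the set of agents whose trustworthiness the recipient has to weigh. All names here (`Promise`, `QualifiedPromise`, `assisted`) and the string encoding `"use Y"` for the promise to use the assistant's service are assumptions made for illustration.

```python
from typing import FrozenSet, NamedTuple, Optional, Set


class Promise(NamedTuple):
    promiser: str
    promisee: str
    body: str
    condition: Optional[str] = None


class QualifiedPromise(NamedTuple):
    promiser: str
    body: str
    relies_on: FrozenSet[str]  # every agent whose reliability matters


def assisted(principal: str, body: str,
             promises: Set[Promise]) -> Optional[QualifiedPromise]:
    """Return a qualified promise of `body` if all three promises are present."""
    for p in promises:
        if p.promiser != principal or p.body != body or p.condition is None:
            continue
        needed = p.condition
        # Assistants: other agents that promise the prerequisite to the principal.
        assistants = {
            q.promiser for q in promises
            if q.promisee == principal and q.body == needed
            and q.promiser != principal and q.condition is None
        }
        # The principal must also promise to use what the assistant provides.
        uses = any(q.promiser == principal and q.body == f"use {needed}"
                   for q in promises)
        if assistants and uses:
            return QualifiedPromise(principal, body,
                                    frozenset(assistants | {principal}))
    return None


promises = {
    Promise("shop", "customer", "X", condition="Y"),  # I promise X if Y
    Promise("courier", "shop", "Y"),                  # I have been promised Y
    Promise("shop", "courier", "use Y"),              # I promise to use Y
}
result = assisted("shop", "X", promises)
print(sorted(result.relies_on))  # ['courier', 'shop']
```

Dropping any one of the three promises makes `assisted` return `None`, which mirrors the requirement that the principal both receives and promises to use the assistant's service.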

Conditional Causation and Dependencies

If we are trying to engineer a series of beneficial outcomes, telling a story in a simple linear fashion, preconditions are a useful tool. Sometimes people refer to this kind of arrangement of promises as choreography or even orchestration.

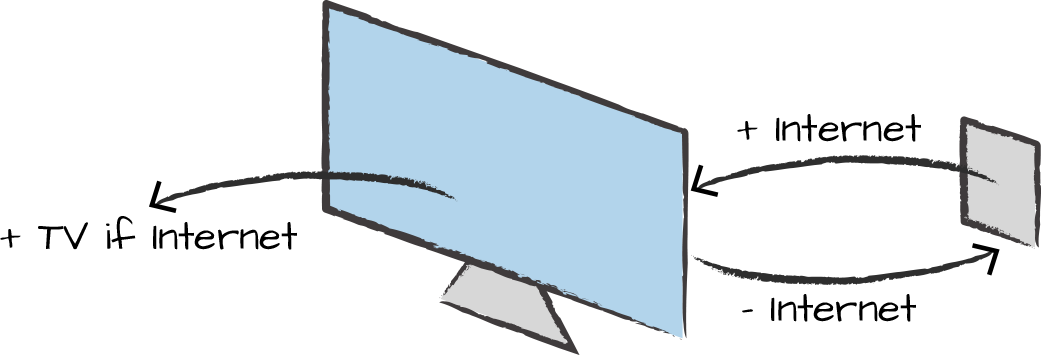

There are many examples of technology that make promises conditional on dependencies (Figure 4-1). Electrical devices that use batteries are an example. Software is often packaged in such a way as to rely on the presence of other components. Some packages do not even make it clear that their promises are conditional promises: they deceive users by not telling whether promises are conditional, so that one cannot judge how fragile they are before trying to install them.

Figure 4-1. A conditional promise, conditional on a dependency, such as a television whose service depends on the promise of an Internet connection from the wall socket.

Circular Conditional Bindings: The Deadlock Carousel

Deadlocks occur when agents make conditions on each other so that no actual promise is given without one of the two relaxing its constraints on the other. For instance:

- Agent 1 promises X if Agent 2 keeps promise Y.
- Agent 2 promises Y if Agent 1 keeps promise X.

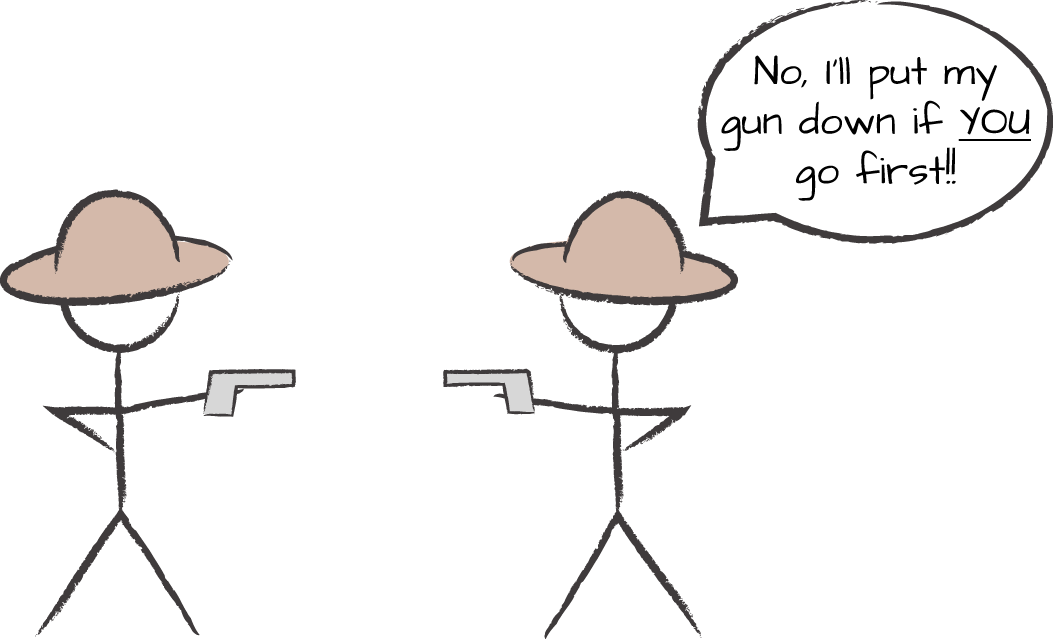

The dragon swallows its tail! This pair of promises represents a standoff or deadlock. One of the two agents has to go first. Someone has to break the symmetry to get things moving.

It is like two mistrusting parties confronting one another: hand over the goods, and I’ll give you the money! No, no, if you hand over the money, I’ll give you the goods (Figure 4-2).

Figure 4-2. A deadlock: I’ll go, if you go first!

Interestingly, if you are a mathematician, you might recognize that this deadlock is exactly like an equation. Does the right-hand side determine the left-hand side, or does the left-hand side determine the right-hand side? When we solve equations, we end up not with a solution but with a generator of solutions. It is only when we feed in a boundary condition that it starts to generate a stream of solutions like a never-ending motor.

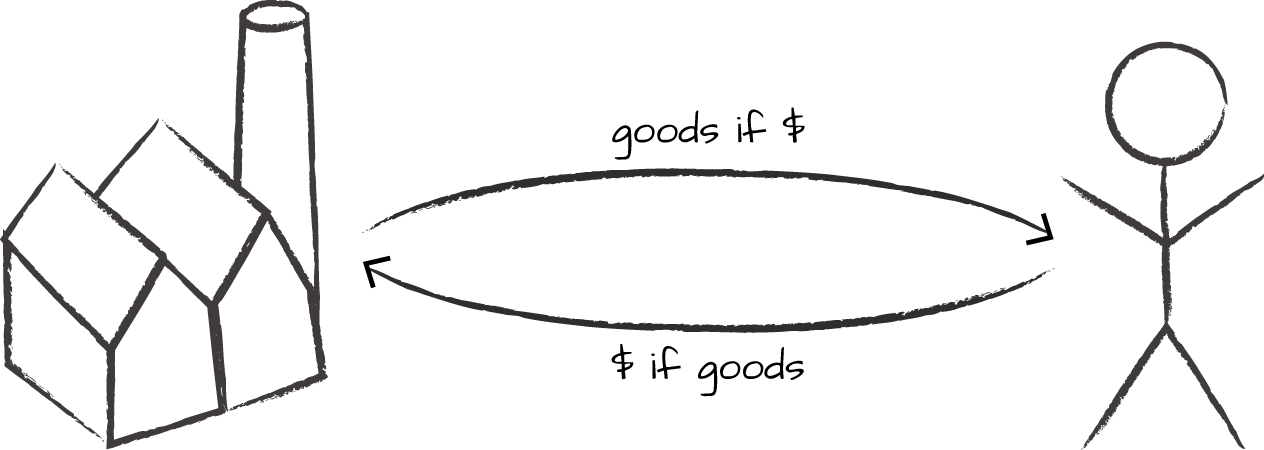

The same is true of these promises. Unless one of the agents simply goes first and removes its blocking condition, the cycle never generates the end result (see Figure 4-3). Once an agent does go first, the outcome depends on the kind of promise. If the promise is a one-off (a so-called idempotent operation)4, then it quickly reaches its final state. If the promise is repeatable, then Agent 1 will continue to give X to Agent 2, and Agent 2 (on receiving X) will respond with Y to Agent 1. Curiously, the deadlock is actually the generator of the desired behaviour, like a motor, poised to act.

Figure 4-3. Circular dependencies — trust issues: you get the goods when we see the money, or you’ll get the money when we see the merchandise!
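The standoff, and the effect of one agent going first, can be simulated in a few lines. This is a sketch for one-off promises only: the function `run` and its `goes_first` argument are hypothetical, and each promise is assumed to wait on at most one other promise.

```python
from typing import Dict, Iterable, Optional, Set


def run(conditions: Dict[str, Optional[str]],
        goes_first: Iterable[str] = ()) -> Set[str]:
    """Return the set of promises that end up kept.

    conditions maps each promise to the promise it waits for (None means
    it waits for nothing). goes_first lists promises that an agent keeps
    voluntarily, ignoring its own condition, to break the symmetry.
    """
    kept = set(goes_first)
    changed = True
    while changed:
        changed = False
        for body, needs in conditions.items():
            if body not in kept and (needs is None or needs in kept):
                kept.add(body)
                changed = True
    return kept


standoff = {"X": "Y", "Y": "X"}   # Agent 1: X if Y.  Agent 2: Y if X.

print(sorted(run(standoff)))                    # []
print(sorted(run(standoff, goes_first=["X"])))  # ['X', 'Y']
```

Nothing in the pair of conditions changes between the two calls. The only difference is the boundary condition supplied by the agent that goes first.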

The Curse of Conditions, Safety Valves

The matter of deadlocks shows us that conditions are a severe blocker to cooperative behaviour. Conditions are usually introduced into promises because an agent does not trust another agent to keep a promise it relies on. The first agent wants to hedge its own promise so that it does not appear responsible or liable if it is unable to keep that promise. Recall that a conditional promise is not really a promise at all, unless the condition has also been promised.

In the next chapter, we’ll see how work from cooperative game theory shows that agents can win over the long term when they remove conditions and volunteer their services without demanding up-front payment. Conditional strategies like “tit for tat” are successful once cooperation is ongoing, but if one agent fails to deliver for some reason, tolerance of the faults keeps the cooperation going, rather than letting it break down into a standoff again. Examples of this can be seen in everything from mechanical systems to international politics. The utility of Promise Theory here is in being able to see what is common across such a wide span of agency.

So what is the point of conditions? If they only hinder cooperation, why do we bother at all? One answer is that they act as a long-term safety valve (i.e., a kind of dead-man’s brake) if one agent in a loop stops keeping its promise. A condition would stop other agents from keeping their promise without reciprocation, creating an asymmetry that might be perceived as unfair, or even untenable. Thus, conditions become useful once a stable trade of promises is ongoing. First, a relationship needs to get started.

Other Circular Promises

The case above is the simplest kind of loop in a promise, but we can make loops with any number of agents. How can we tell if these promises can lead to a desired end state? For example:

- 1 promises to drive 2 if 3 fixes 1’s car.
- 2 promises to lend 3 a tool if 1 drives 2.
- 3 promises to fix 1’s car if 2 will lend a tool.

Such examples may or may not have a solution. In this case, there is another deadlock that can only be resolved by having one of the agents keep its promise without any condition. The conditions could be added back later.

Some circular promises can be consistent in the long run, but need to be started by breaking the deadlock with some initial voluntary offsetting of a condition. The three promises above are a case in point. Assuming all three agents are keeping these promises on a continuous basis, it all works out, but if someone is waiting out of mistrust for evidence of the other, it will never get started. Readers who are programmers, steeped in imperative thinking, should beware of thinking of promises with conditionals as being just like flowcharts.
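One way to spot such a loop before it bites is to follow the chain of conditions and see whether it returns to its starting point. The sketch below does this for the three promises above. The function name `find_cycle` and the short labels for the promises are assumptions, as is the simplification that each promise has at most one condition.

```python
from typing import Dict, List, Optional


def find_cycle(depends_on: Dict[str, Optional[str]]) -> List[str]:
    """Return the first loop of conditions found, or [] if there is none.

    depends_on maps each promise to the single promise it is conditional
    on, or to None if it is unconditional.
    """
    for start in depends_on:
        path: List[str] = []
        node: Optional[str] = start
        # Walk the chain of conditions until it ends or revisits a promise.
        while node is not None and node in depends_on and node not in path:
            path.append(node)
            node = depends_on[node]
        if node is not None and node in path:
            return path[path.index(node):]
    return []


loop = {
    "drive": "fix car",     # 1 drives 2 if 3 fixes 1's car
    "lend tool": "drive",   # 2 lends 3 a tool if 1 drives 2
    "fix car": "lend tool", # 3 fixes 1's car if 2 lends a tool
}
print(find_cycle(loop))  # ['drive', 'fix car', 'lend tool']

# Agent 1 drops its condition and simply drives: the loop is gone.
unblocked = dict(loop, drive=None)
print(find_cycle(unblocked))  # []
```

Finding a loop is not the same as finding a failure. It only tells us where someone will have to volunteer before the arrangement can get started.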

A simple answer to this is average consistency from graph theory. So-called eigenvalue solutions, or fixed points of the maps, are the way to solve these problems for self-consistency. Game theory’s Nash equilibrium is one such case. Not all problems of this kind have solutions, but many do. A set of promises that does not have a solution will not lead to promises kept, so it will be a failure. I don’t want to look at this matter in any more depth. Suffice it to say that there is a well-known method for answering this question using the method of adjacency matrices for the network of promise bindings. The fixed-point methodology allows us to frame bundles of promises as self-sustaining mathematical games. There are well-known tools for solving games.
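To give a flavour of what self-consistency means, here is a brute-force Boolean stand-in. It is not the eigenvalue method referred to above, only a toy assumed for illustration: it writes the promise bindings as an adjacency matrix, treats each promise as kept exactly when all of its conditions are kept, and lists every state that reproduces itself. The function name `fixed_points` and the ordering of the promises are assumptions.

```python
from itertools import product
from typing import List, Tuple


def fixed_points(adjacency: List[List[int]]) -> List[Tuple[bool, ...]]:
    """List every kept/not-kept assignment that is consistent with itself.

    adjacency[i][j] == 1 means promise i is conditional on promise j.
    A promise with no conditions is always kept in this toy model.
    """
    n = len(adjacency)

    def step(state: Tuple[bool, ...]) -> Tuple[bool, ...]:
        return tuple(
            all(state[j] for j in range(n) if adjacency[i][j])
            for i in range(n)
        )

    return [s for s in product((False, True), repeat=n) if step(s) == s]


# Order of promises: 0 = drive, 1 = lend tool, 2 = fix car.
loop = [
    [0, 0, 1],  # drive depends on fix car
    [1, 0, 0],  # lend tool depends on drive
    [0, 1, 0],  # fix car depends on lend tool
]
print(fixed_points(loop))
# [(False, False, False), (True, True, True)]

unblocked = [
    [0, 0, 0],  # drive is now unconditional
    [1, 0, 0],
    [0, 1, 0],
]
print(fixed_points(unblocked))
# [(True, True, True)]
```

The circular version has two self-consistent states: nobody keeps anything, or everybody keeps everything. Removing one condition leaves only the second. Enumeration like this grows as 2 to the power of the number of promises, so it is suitable only for tiny examples.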

Logic and Reasoning: The Limitations of Branching and Linear Thinking

Conditions like the ones above form the basis of Boolean reasoning. It is the way we learn to categorize outcomes and describe pathways. This form of reasoning grew up in the days of ballistic warfare, where branching outcomes from ricochets and cannonballs hitting their targets were the main concerns.

This “if-then-else” framework is the usual kind of reasoning we learn in school. It is a serial procedure, usually based on binary true/false questions. It leads to simple flowcharts and the kind of linear storyline that many find appealing.

Boolean logic is not the only kind of reasoning, however. Electronic circuits do not have to work with only two possible values; they can support a continuum of values, such as the volume control on your music player. In biology, DNA works with an alphabet of four states, and chemical signals work on cells by changing concentration gradients. In computer simulations, cellular automata perform computations and solve problems by emergent outcomes with no linear reasoning at all. Random, so-called Monte Carlo methods can answer questions by trial and error, like pinning a tail on the donkey.

Most of us are less well trained in the methods mentioned above, and thus we tend to neglect them when solving problems involving parallelism. I believe that is a mistake because the world at large is a parallel system.

The compelling thing about our ballistic reasoning is that it results in stories, like the kind that humans told around the fire for generations. Our attachment to stories is remarkable. The way we communicate is in linear narratives, so we tend to think in this way, too. If you have ever tried parallel programming or managing a team, you’ll know that even though you serialize the outcomes in your planning and reporting, the work does not have to be done as an exact facsimile of the story you tell. We muddle the parallel in serial story lines.3

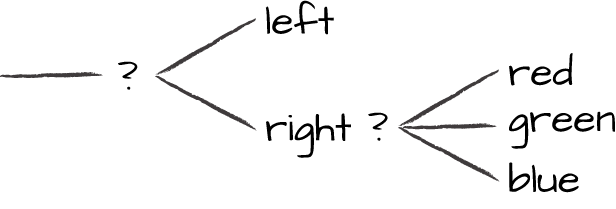

Serialization seems simpler than parallelization because we reason in that way as human beings. But the branching that is usually required in serial reasoning actually ends up increasing the final complexity by magnifying the number of possible outcomes (see Figure 4-4).

Whenever logical reasoning enters a problem, the complexity rises dramatically. Our present-day model of reasoning has its roots in ballistics, and often views unfolding processes as branches that diverge. You can see why in Figure 4-4.

Figure 4-4. Decisions lead to many possible worlds or outcomes, like a game of billiards. This is “ballistic reasoning” or deterministic reasoning.
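The growth in outcomes is easy to check with a little arithmetic: a chain of n independent true/false decisions has 2 to the power n possible paths. The following sketch, with the assumed helper name `outcomes`, simply enumerates them.

```python
from itertools import product
from typing import List, Tuple


def outcomes(decisions: int) -> List[Tuple[bool, ...]]:
    """Enumerate every path through a chain of binary decisions."""
    return list(product((True, False), repeat=decisions))


for n in (1, 2, 3, 10):
    print(n, len(outcomes(n)))
# 1 2
# 2 4
# 3 8
# 10 1024
```

Ten branch points already give over a thousand possible worlds to reason about.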

The idea of a process with diverging possibilities is somewhat like the opposite of a promise converging on a definite outcome. So logic is a sort of sleeping enemy that we often feel we cannot do without.

Complexity begins with conditionals (i.e., the answer to a question that decides the way forward). In a sequence of promises, we might want something to be conditional on a promise being made or on a promise being kept. This means we need to think about verification and trust as basic parts of the story.

You might think that conditional promises look just like if-then-else computer programming, but no. An imperative computer program is a sequence of impositions, not promises. Most programming languages do not normally promise that something will be kept persistently; they deal in only transient states, throwing out requests, and leave the verification to the programmer. A sequence of conditional promises is more like a logistics delivery chain, which we’ll discuss in the next chapter. Conditionals are the basis for cooperation.

Some Exercises

- If you know a promise is contingent on some other agent’s cooperation, how would you explain this to a promisee? If you make a promise without a condition, would this count as a deception?
- Software packages are often installed with dependencies: you must install X before you can install Y. Express this as promises instead. What is the agent that makes the promise? Who is the promisee?
- The data transfer rate indicated on a network connection is usually a promise about the maximum possible rate. By not declaring what that depends on, it can be deceptive. Suggest a more realistic promise for a network data rate, taking into account the possible obstacles to keeping the promise, like errors, the counterpart’s capabilities, and so on.

1 When a conditional promise is made and quenched by an assistant, the “contact” agent is directly responsible by default. We shall refine this view with alternative semantics later, since this is all a matter of managing the uncertainty of the promise being kept. As soon as we allow rewriting rules of this basic type, it is possible to support multiple solutions for bringing certainty with graded levels of complexity.

2 Some computer languages do have constructs called promises, but, although related, these are not precisely the same as the subject of this book.

3 This post hoc narrative issue is at the heart of many discussions about fault diagnosis. When things go wrong, it leads to a distorted sense of causation.

4 This is inaccurate usage of the term idempotence, but it has already become a cultural norm.
