Linear Predictor

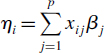

The structure of the model relates each observed y value to a predicted value. The predicted value is obtained by transforming the value that emerges from the linear predictor. The linear predictor, η (eta), is a linear sum of the effects of one or more explanatory variables, $x_j$:

$$\eta_i = \sum_{j=1}^{p} x_{ij}\,\beta_j$$

where the xs are the values of the p different explanatory variables, and the βs are the (usually) unknown parameters to be estimated from the data. The right-hand side of the equation is called the linear structure.
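
The linear sum described above can be sketched in a few lines of code. The example below is a minimal illustration in plain Python (not R, and not how `glm` is implemented). The function name `linear_predictor`, the design rows `X`, and the parameter values in `beta` are all made up for illustration. A leading column of 1s is assumed to carry the intercept.

```python
def linear_predictor(X, beta):
    """Return eta_i = sum over j of X[i][j] * beta[j], one value per row of X."""
    if any(len(row) != len(beta) for row in X):
        raise ValueError("each row of X needs exactly one value per parameter")
    return [sum(x * b for x, b in zip(row, beta)) for row in X]


# Hypothetical simple regression with p = 2 parameters.
# Column 0 is a constant 1 (intercept term); column 1 is the explanatory variable.
X = [
    [1.0, 0.0],
    [1.0, 1.0],
    [1.0, 2.0],
]
beta = [2.0, 0.5]  # assumed intercept and slope, chosen only for illustration

eta = linear_predictor(X, beta)
print(eta)  # one linear-predictor value per observation
```

With these assumed values each observation's η is simply the intercept plus the slope times x, which is the two-term case of the general sum.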

There are as many terms in the linear predictor as there are parameters, p, to be estimated from the data. Thus, with a simple regression, the linear predictor is the sum of two terms whose parameters are the intercept and the slope. With a one-way ANOVA with four treatments, the linear predictor is the sum of four terms leading to the estimation of the mean for each level of the factor. If there are covariates in the model, they add one term each to the linear predictor (the slope of each relationship). Interaction terms in a factorial ANOVA add one or more parameters to the linear predictor, depending upon the degrees of freedom of each factor (e.g. there would be three extra parameters for the interaction between a two-level factor and a four-level factor, because (2 – 1) × (4 – 1) = 3).
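
The parameter counting in the paragraph above can be checked with a little arithmetic. The sketch below is in plain Python, and the helper names `interaction_parameters` and `factorial_parameters` are hypothetical. It assumes a two-factor model parameterized as an intercept plus main-effect and interaction differences; other parameterizations arrange the terms differently but give the same total.

```python
def interaction_parameters(levels_a, levels_b):
    """Extra parameters added by the interaction of two factors."""
    return (levels_a - 1) * (levels_b - 1)


def factorial_parameters(levels_a, levels_b):
    """Total parameters in a two-factor model with interaction.

    Assumed breakdown: 1 intercept, (a - 1) and (b - 1) main-effect
    parameters, plus the interaction parameters.
    """
    main_effects = 1 + (levels_a - 1) + (levels_b - 1)
    return main_effects + interaction_parameters(levels_a, levels_b)


# The example from the text: a two-level factor crossed with a four-level factor.
extra = interaction_parameters(2, 4)
total = factorial_parameters(2, 4)
print(extra, total)
```

The total equals the number of factor-level combinations (2 × 4), which is a useful sanity check: a full factorial model has one parameter per cell.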

To determine the fit of a given model, a GLM evaluates the linear predictor for each ...
