If members of the newly empowered developer class really are the New Kingmakers, shaping their own destiny and increasingly setting the technical agenda, how could we tell? What would happen if developers could choose their technologies, rather than having them chosen for them?

- First, there would be greater technical diversity. Where enterprises tend to consolidate their investments in as few technologies as possible (according to the “one throat to choke” model), developers, as individuals, are guided by their own preferences rather than a corporate mandate. Because they’re more inclined to use the best tool for the job, a developer-dominated marketplace would demonstrate a high degree of fragmentation.

- Second, open source would grow and proliferate. Whether it’s because they enjoy the collaboration, because they abhor unnecessary duplication of effort, because they’re building a resume of code, because they find it easy to obtain, or because it costs them nothing, developers prefer open source over proprietary commercial alternatives in the majority of cases. If developers were calling the shots, we’d expect to see open source demonstrating high growth.

- Third, developers would ignore or bypass vendor-led, commercially oriented technical standards efforts. Corporate-led standards tend to be designed by committee, with consensus and buy-in from multiple parties required prior to sign off. Like any product of a committee, standards designed in this fashion tend to be over-complicated and over-architected. This complexity places an overhead on developers who must then learn the standard before they can leverage it. Given that developers would, like any of us, prefer the simplest path, a world controlled by developers would see simple, organic standards triumphing over vendor-backed, artificially constructed alternatives.

- Last, technology vendors would prostrate themselves in an effort to court developers. If developers were materially important to vendors’ businesses, those vendors would behave accordingly, making it easier for themselves to build relationships with technologists.

As it happens, all four of these things that would happen in this theoretical developer-led world have happened in the real world.

Not too long ago, conventional wisdom dictated that enterprises strictly limit themselves to one of two competing technology stacks: Java or .NET. But in truth, the world was never that simple. While the Sun vs Microsoft storyline supplied journalists with the sort of one-on-one rivalry they love to mine, the reality was never so black and white. Even as enterprises focused on the likes of J2EE, languages such as Perl and PHP were flowing like water around the “approved” platforms, servicing workloads where development speed and low barriers to entry were at a premium. It was similar to what had occurred years earlier, when Java and C# supplanted the platforms (C, C++, etc.) that preceded them.

Fragmentation in the language and platform space is nothing new: “different tools for different jobs” has always been the developers’ mantra, if not that of the buyers supplying them. But the pace of this fragmentation is accelerating, with the impacts downstream significantly less clear.

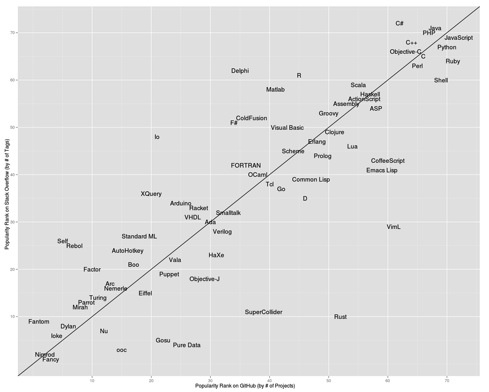

Today, Java and .NET remain widely used. But they’re now competing with a dozen other languages, not just one or two. Newly liberated developers are exercising their newfound freedoms, aggressively employing languages once considered “toys” compared to the more traditional and enterprise-approved environments. By my firm RedMonk’s metrics, Java and C# (the .NET stack’s primary development language) are but two of the languages our research considers Tier 1 (see below). JavaScript, PHP, Python, and Ruby in particular have exploded in popularity over the last few years and are increasingly finding a place even within conservative enterprises. Elsewhere, languages like CoffeeScript and Scala, which were designed to be more accessible versions of JavaScript and Java, respectively, are demonstrating substantial growth.

Nor are programming language stacks the only technology category experiencing fragmentation. Even the database market is decentralizing. Since their invention in the late 1960s and the subsequent popularization in the 1970s, relational databases have been the dominant form of persisting information. From an application-development perspective, relational databases were the answer regardless of the question. Oracle, IBM, and Microsoft left little oxygen for other would-be participants in the database space, and they collectively ensured that virtually every application deployed was backed by a relational database. This dominance, fueled in part by enterprise conservatism, was sustained for decades.

The first crack in the armor came with the arrival of open source alternatives. MySQL in particular leveraged free availability and an easier-to-use product to become the most-popular database in the world. But for all of its popularity, it was quite similar to the commercial products it competed with: it was, in the end, another relational database. And while the relational model is perfect for many tasks, it is obviously not perfect for every task.

When web-native firms like Facebook and Google helped popularize infrastructures composed of hundreds of small servers rather than a few very big ones, developers began to perceive some of the limitations of this relational database model. Some of them went off and created their own new databases that were distinctly non-relational in their design. The result today is a vibrant, diverse market of non-relational databases optimized for almost any business need.

CIOs choose software according to a number of different factors. Quality of technology is among them, but they are also concerned with the number of vendor relationships a business has to manage, the ability to get a vendor onto the approved-supplier lists, the various discounts offered for volume purchases, and more. A developer, by contrast, typically just wants to use the best tool for the job. Where a CIO might employ a single relational database because of non-technical factors, a developer might instead turn to a combination of eventually consistent key-value stores, in-memory databases, and caching systems for the same project. As developers have become more involved in the technology decision-making process, it has been no surprise to see the number of different technologies employed within a given business skyrocket.

Fragmentation is now the rule, not the exception.

In 2001, IBM publicly committed to spending $1 billion on Linux. To put this in context, that figure represented 1.2% of the company’s revenue that year and a fifth of its entire 2001 R&D spend. Between porting its own applications to Linux and porting Linux to its hardware platforms, IBM, one of the largest commercial technology vendors on the planet, was pouring a billion dollars into the ecosystem around an operating system originally written by a Finnish university student that no single entity, not even IBM, could ever own. By the time IBM invested in the technology, Linux was already the product of years of contributions from individual developers and businesses all over the world.

How did this investment pan out? A year later, Bill Zeitler, head of IBM’s server group, claimed that they’d made almost all of that money back. “We’ve recouped most of it in the first year in sales of software and systems. We think it was money well spent. Almost all of it, we got back.”

Linux has defied the predictions of competitors like Microsoft and traditional analyst firms alike to become a worldwide phenomenon, and a groundbreaking success. Today it powers everything from Android devices to IBM mainframes. All of that would mean little if it were the exception that proved the rule. Instead Linux has paved the way for enterprise acceptance of open source software. It’s difficult to build the case that open source isn’t ready for the enterprise when Linux is the default operating system of your datacenters.

Linux was the foundation of LAMP, a collection of operating system, web server, programming language, and database software that served as an alternative to solutions from Microsoft, among others. Lightweight, open source, and well suited to a variety of tasks, LAMP was propelled to worldwide fame by the developers who loved it. The effects of their affection can still be seen. The March 2012 Netcraft survey found that Apache was serving 65.24% of the more than 644 million sites surveyed worldwide. The next closest competitor was Microsoft’s Internet Information Server, at 13.81%. MySQL, meanwhile, was sufficiently ubiquitous that in order to acquire it with the EU’s regulatory approval, Oracle was compelled to make a series of promises, among them a commitment to continue developing the product. And while PHP is competing in an increasingly crowded landscape, it powers tens of millions of websites around the world, and is the fifth most-popular programming language as of March 2012 as measured by Ohloh, a code-monitoring site.

Beyond LAMP, open source is increasingly the default mode of software development. For example, Java, once closed source software, was strategically open sourced in an effort to both widen its appeal and remain competitive. Companies developing software, meanwhile, are open sourcing their internal development efforts at an accelerating rate. In new market categories, open source is the rule, proprietary software the exception. In the emerging non-relational database market, for example, the most popular and widely adopted projects are all open source. Mike Stonebraker, the founder of seven different database companies, told the GlueCon conference audience in 2010 that it was impossible to be a new project in the database space without being open source.

The ascendance of open source is not altruistic; it’s simply good business for contributors and consumers alike. But the reason it’s good business is that it makes developers happier, more productive, and more efficient.

When Mosaic Communications Corporation was renamed Netscape in November 1994, the Web was still mostly an idea. Google was four years from incorporating, ten away from its public offering, and twelve from becoming a verb in the Oxford English Dictionary. Not only was Facebook not yet invented, Mark Zuckerberg wasn’t even in high school yet. It’s difficult to even recall in these latter days what life was like before the largest information network the world has ever seen became available all the time, on devices that hadn’t been invented yet.

The large technology vendors may not have understood the potential—there were few who did—but they at least knew enough to hedge their bets with the World Wide Web. IBM obtained the IBM.com domain in March 1986. Oracle would follow with its own .com in December 1988, as would Microsoft in May of 1991. For businesses that had evolved to sell technology to businesses, it was difficult to grasp the wider implications of a public Internet. At Microsoft, in fact, it took an internal email from Bill Gates—subject line “The Internet Tidal Wave”—to wake up the software giant to the opportunity.

By the time Google followed in AltaVista’s footsteps, however, the desire of large vendors to extend their businesses to the Web was strong. Strong enough that they were willing to put their traditional animosity aside and collaborate on standards around what was being referred to as “web services.”

Eventually encompassing more than a hundred separate standards, web services pushed by the likes of IBM, Microsoft, and Sun Microsystems were an attempt by the technology industry to transform the public Internet into something that looked more like a corporate network. From SOAP to WS-Discovery to WS-Inspection to WS-Interoperability to WS-Notification to WS-Policy to WS Reliable Messaging to WS-ReliableMessaging (not a typo, Reliable Messaging and ReliableMessaging are different standards) to WS-Transfer, for every potential business use case, there was a standard, maintained by a standards body like OASIS or the W3C, and developed by large enterprise technology vendors.

It made perfect sense to the businesses involved: the Internet was a peerless network, but one they found lacking, functionally. And rather than have each vendor solve this separately, and worse—incompatibly—the vendors would collaborate on solutions that would be publicly available, and vendor agnostic by design.

There was just one problem: developers ignored the standards.

This development should have surprised no one. While the emerging standards made sense for specialized business use cases, they were generally irrelevant to individual developers. Worse, there were more than a hundred standards, each with its own set of documentation—and the documentation for each standard often exceeded a hundred pages. What made sense from the perspective of a business made no sense whatsoever to the legions of developers actually building the Web. Among developers, the web services efforts were often treated as a punchline. David Heinemeier Hansson, the creator of the popular Ruby on Rails web framework, referred to them as the “WS-Deathstar.”

Beyond the inherent difficulties of pushing dozens of highly specialized, business-oriented specifications onto an unwilling developer population, the WS-* set of standards had to contend with an alternative called Representational State Transfer (REST). Originally introduced and defined by Roy Fielding in 2000 in his doctoral dissertation, REST was everything that WS-* was not. Fielding, one of the authors of HTTP, the protocol that still powers the Internet today (you’ve probably typed http:// many times yourself), advocated for a simple style that both reflected and leveraged the way the Internet itself had been constructed. Unsurprisingly, this simpler approach proved popular.
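To make the contrast concrete, here is a minimal sketch in Python of the same lookup expressed in both styles. Everything service-specific in it is invented for illustration: the host `api.example.com`, the `/items` and `/soap` paths, the `GetItem` operation, and the `ItemId` parameter do not correspond to any real API. Only the SOAP 1.1 envelope namespace is real. The code makes no network calls; it simply builds the two requests so their shapes can be compared side by side.

```python
from urllib.parse import urlencode
import xml.etree.ElementTree as ET

# Hypothetical service details, invented for this illustration.
BASE_URL = "https://api.example.com"
SERVICE_NS = "http://api.example.com/catalog"
# The SOAP 1.1 envelope namespace (this one is real).
SOAP_ENV_NS = "http://schemas.xmlsoap.org/soap/envelope/"


def rest_request(item_id: str) -> tuple[str, str]:
    """Return (HTTP method, URL) for a REST-style lookup.

    The resource is named by the URL and the operation by the HTTP verb.
    """
    query = urlencode({"format": "json"})
    return "GET", f"{BASE_URL}/items/{item_id}?{query}"


def soap_request(item_id: str) -> tuple[str, str, str]:
    """Return (HTTP method, URL, XML body) for an equivalent SOAP-style call.

    The operation and its arguments travel inside an XML envelope that is
    POSTed to a single service endpoint.
    """
    envelope = ET.Element(f"{{{SOAP_ENV_NS}}}Envelope")
    body = ET.SubElement(envelope, f"{{{SOAP_ENV_NS}}}Body")
    operation = ET.SubElement(body, f"{{{SERVICE_NS}}}GetItem")
    ET.SubElement(operation, f"{{{SERVICE_NS}}}ItemId").text = item_id
    xml = ET.tostring(envelope, encoding="unicode")
    return "POST", f"{BASE_URL}/soap", xml


if __name__ == "__main__":
    method, url = rest_request("12345")
    print(method, url)

    method, url, xml = soap_request("12345")
    print(method, url)
    print(xml)
```

The REST-style request is something a developer can type into a browser or test with a one-line command, while the SOAP-style request generally needs tooling to assemble and parse the envelope. A real WS-* deployment would typically layer a WSDL description and further headers on top of this bare envelope, which this sketch omits.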

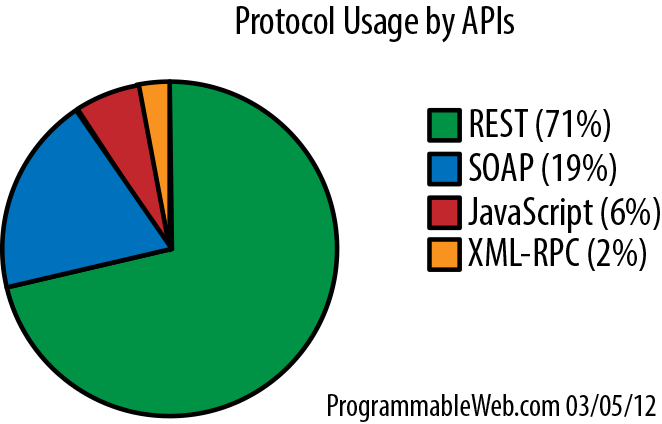

At Amazon, for example, developers were able to choose between the two different mechanisms to access data: SOAP or REST. Even in 2004, 80% of those leveraging Amazon Web Services did so via REST. Two years after that, Google deprecated their SOAP API for search. And they were just the beginning.

It’s hard to feel too sorry for SOAP’s creators, however—particularly if what one Microsoft developer told Tim O’Reilly is true: “It was actually a Microsoft objective to make [SOAP] sufficiently complex that only the tools would read and write this stuff,” he explained, “and not humans.”

But it’s important to understand the impact. By the latter half of the last decade, the writing was on the wall. Even conservative, CIO-oriented analyst firms were acknowledging REST’s role and the weaknesses of the vendor-led WS-* set of standards. The Gartner Group’s Nick Gall went so far as to attack the WS-* stack, saying the following in 2007:

Web Services based on SOAP and WSDL are “Web” in name only. In fact, they are a hostile overlay of the Web based on traditional enterprise middleware architectural styles that has fallen far short of expectations over the past decade.

Companies like eBay that were founded when SOAP looked as if it would persist (in spite of developer distaste for it) have largely continued to support these APIs alongside REST alternatives in order to avoid breaking the applications built on them. But few businesses that were founded after SOAP’s popularity peaked offer anything but REST or REST-style interfaces. From ESPN to Facebook to Twitter, these businesses’ APIs reject the complexity of the WS-* stack, favoring the more-basic mechanism of HTTP transport. The Programmable Web, a website that serves as a directory for APIs, reports that as of March 2012, 71% of the 5,287 APIs were REST based. Fewer than a fifth were SOAP.

The market spoke, and even the vendors pushing competitive protocols had to listen. The market-wide acceptance of REST was a major victory for developers. Besides the practical implications—a Web that remained free of over-architected web services interfaces—the success of REST was a symbol. For perhaps the first time in the history of the technology industry, the actual practitioners were able to subvert and ultimately sideline the product of a massive, cross-industry enterprise technology consortium.

Which is why today, REST or the even simpler approaches it gave rise to dominate the Web.

Historically, appreciation for the importance of developers has been uneven, as have been corporate efforts to court the developer population. Developers require a distinct, specific set of incentives and resources. Here are five businesses that, in at least one area, understood the importance of developers and engaged appropriately.

In August 2011, a month after reporting record earnings, Apple surpassed Exxon as the world’s biggest company by market capitalization. This benchmark was reached in part because of fluctuations in oil price that affected Exxon’s valuation, but there’s little debate that Apple is ascendant. By virtually any metric, Steve Jobs’s second tenure at Apple has turned into one of the most successful in history, in any industry.

Seamlessly moving from hit product to hit product, the Apple of 2012 looks nothing like the Apple of the late nineties, when Dell CEO Michael Dell famously suggested that Apple should be shut down, to “give the money back to the shareholders.” It is no longer the leader in smartphone shipments, but according to independent analyst Horace Dediu, Apple regularly collects two-thirds of the industry’s total available mobile phone profits. Meanwhile, its dominance of the tablet market is so overwhelming that it’s being called “unbeatable” by industry observers. Which isn’t surprising, since a standalone iPad revenue stream would place it in the top third of Fortune 500 businesses. Even its forgotten desktop business is showing steady if unspectacular growth.

There are many factors contributing to Apple’s remarkable success, from the brilliance of the late Steve Jobs to the supply chain sophistication of current CEO Tim Cook. And of course, Apple’s success is itself fueling more success. In the tablet market, for example, would-be competitors face not only the daunting task of countering Apple’s unparalleled hardware and software design abilities, but the economies of scale that allow Apple to buy components more cheaply than anyone else. The perfect storm of Apple’s success is such that some analysts are forecasting that it could become the world’s first trillion-dollar company.

Lost in the shuffle has been the role of developers in Apple’s success. But while the crucial role played by Apple’s developers in the company’s success might be lost on the mainstream media, it is not lost on Apple itself. In March 2012, Apple dedicated the real estate on its website to the following graphic.

Setting aside the 25 billion applications milestone for a moment, consider the value of the home page. For a major retailer to devote the front page of a website to anything other than product is unusual. This action implies that Apple expects to reap tangible benefits from thanking its developers—most notably when it comes to recruiting additional developers. Clothed in this humble “thank you” is a subliminal message aimed at those building for its platform: develop for Apple, and you can sell in great volume.

As a rule, developers want the widest possible market. If they’re selling software, a larger audience means more potential sales. But even if they’re developing for reasons other than profit, a larger market can mean better visibility for their code—and that, in turn, can translate into higher consulting rates, job offers, and more. By emphasizing their enormous volume, Apple is actively reminding developers that tens of thousands of other developers have already decided to build for iOS and been successful in doing it. If you’re going to market to developers, this is how you do it. Subtly, and based on success.

Apple obviously realizes just how important developers are to its success. It’s hard to remember now, but there was a time when there wasn’t “an app for that.” When the iPhone was first released, it didn’t run third-party applications: the only applications available were those that came standard on the phone. It wasn’t until a year later that Apple released a software development kit (SDK) for developers to begin building applications for the hardware. By 2009, a mere two years after the iPhone was launched, Apple was running the ads that would turn “there’s an app for that” into the cliché it is today.

While Apple’s application strategy has unquestionably been crucial to the success to date of the iPad and iPhone—not to mention the developers who’ve earned $4 billion via the App Store—it’s the higher switching costs created by Apple’s application strategy that might be of greater long-term importance. In their book Information Rules, Haas School of Business Professor Carl Shapiro and Google Chief Economist Hal Varian claim that “the profits you can earn from a customer—on a going forward, present-value basis—exactly equal the total switching costs.” This, more than Apple’s design abilities, and even more than its supply chain excellence, may be the real concern for would-be Apple competitors. Each application downloaded onto an iPad—particularly each app purchased—is one more powerful reason not to defect to a competitive platform like Android or Windows Mobile. An iPhone or iPad user contemplating a switch would have to evaluate whether they can get all of the same applications on a competitive platform, then decide whether they’re willing to purchase the commercial apps a second time for a different platform.

Apple has, with its success, created a virtuous cycle that will continue to reward it for years to come. Developers are attracted to its platform because of the size of the market…those developers create thousands of new applications…the new applications give consumers thousands of additional reasons to buy Apple devices rather than the competition…and those new Apple customers give even more developers reason to favor Apple. Apple not only profits from this virtuous cycle, it benefits from ever-increasing economies of scale, realizing lower component costs than competitors.

None of which would be possible without the developers Apple has recruited and, generally, retained.

The company that started the cloud computing craze was founded in 1994 as a bookstore. The quintessential Internet company, Amazon.com competed with the traditional brick-and-mortar model via an ever-expanding array of technical innovations: some brilliant, others mundane. The most-important of Amazon’s retail innovations co-opted its customers into contributors. From affiliate marketing programs to online reviews, Amazon used technology to enable its customers’ latent desire to more fully participate in the buying process. The world’s largest online retailer was, by design, a bottom-up story from the beginning. It was also, by necessity, a technology company. The combination is why Amazon is the most-underrated threat to the enterprise technology sector on the planet.

In 2006, Amazon’s Web Services (AWS) division introduced two new services called the Elastic Compute Cloud (EC2) and Simple Storage Service (S3). These services, elemental in their initial form, were difficult to use, limited in functionality, but breathtakingly cheap—my first bill from Amazon Web Services was for $0.12. From such humble beginnings came what we today call the cloud. Today, there are sizable businesses—a great many of them, in fact—that run their infrastructure off of machines they rent from Amazon.

Amazon’s insight seems obvious now, but was far less so at the time. As Microsoft’s then Chief Software Architect Ray Ozzie said in 2008, “[the cloud services model] really isn’t being taken seriously right now by anybody except Amazon.” Making the technology, expertise, and economies of scale that went into building Amazon.com available for sale, at rates even an individual could afford was, at the time, a move that baffled the market. No longer would developers need to purchase their own hardware: they could rent it from the cloud.

The decision to make its infrastructure available for pennies on the dollar (literally) did two things. First, it ensured that Amazon would have no immediate competition from major systems vendors. Major enterprise technology vendors are built on margin models; volume sales were of no interest to them. Why sell thousands of servers at ten cents an hour when you can sell a single mainframe for a few hundred thousand? As one senior executive put it, “I’m not interested in being in the hosting business.” Much later, these same vendors would come to see Amazon as a threat.

More importantly, Amazon’s cloud efforts gave it an unprecedented ability to recruit developers. Because it was both a volume seller and technology company at its core, Amazon realized the importance of recruiting developers early—moving its entire organization to services-based interfaces. At the time, this was revolutionary; while everyone was talking about “Service Oriented Architectures,” almost no one had built one. And certainly no one had built one at Amazon’s scale. While this had benefits for Amazon internally, its practical import was that, if Amazon permitted it, anyone from outside Amazon could interact with its infrastructure as if they were part of the company. Need to provision a server, spin up a database, or accept payments? Outside developers could now do this on Amazon’s infrastructure as easily as employees. Suddenly, external developers could not only extend Amazon’s own business using their services—they could build their own businesses on hardware they rented from the one-time bookstore, now a newly minted technology vendor.

In pioneering the cloud market, Amazon captured the attention of millions of developers worldwide. Its developer attention is such, in fact, that even vendors that might rightly regard Amazon as a threat are forced to partner with it, for fear of ignoring a vital, emerging market.

This is the power of developers.

In May 2009, at the conclusion of the second-annual Google I/O conference in San Francisco, then VP of Engineering Vic Gundotra, channeling Steve Jobs, had “one more thing” for attendees: a free phone. In something of a technology conference first, Google gave every attendee a brand new mobile phone, the HTC Magic. Even by industry standards, this was generous; the typical giveaway is a cheaply manufactured backpack drowning in sponsor logos.

Ostensibly a “thank you” to developers and the Android community, it is perhaps more accurate to characterize this as an audacious, expensive, developer-recruitment exercise. Seventeen months into its existence, Android was an interesting project, but an also-ran next to Apple’s iPhone OS (it was not renamed iOS until June 2010). Because Google understood that developers are more likely to build for themselves (what’s referred to in the industry as “scratching their own itch”), it made sure that several thousand developers motivated enough to attend its conference had an Android device to use for themselves.

The statistics axiom that correlation does not prove causation certainly applies here, but it’s impossible not to notice the timing of that handset giveaway. On the day that Google sent all of those I/O attendees home happy, the number of Android devices being activated per day was likely in the low tens of thousands (Google hasn’t made this data available). By the time the conference rolled around again a year later, the number was around 100,000. By 2011, it was around 400,000. Nine months later, in February of 2012, Google announced at the Mobile World Congress that it was lighting up 850,000 Android devices per day. And just ahead of the release of the iPhone 5, Google disclosed that it was currently activating 1.3 million Android devices daily. It is, by virtually any measure, the fastest-growing operating system in history.

Any number of factors contributed to this success. Google successfully borrowed the Microsoft strategy of working with multiple hardware partners to maximize penetration. It made opportunistic hires for its Android team, and it offered high-quality linked services like Gmail, to name just three. But in allocating its capital toward free hardware for developers—a tactic it has repeated multiple times since—Google is telegraphing its belief that developer recruitment was and is crucial to the platform’s success. This belief is backed by history: from Microsoft’s Windows to Apple’s competing iOS, the correlation between operating system and developer adoption has been immensely strong. Few companies, however, have grasped that as deeply as Google, which put its money where its mouth was in courting a developer audience.

In a May 2001 address at the Stern School of Business entitled “The Commercial Software Model,” Microsoft Senior Vice President Craig Mundie said that the GNU General Public License (GPL), which governs the Linux kernel among other projects, posed “a threat to the intellectual property of any organization making use of it.” A month later, in an interview with the Chicago Sun-Times, Microsoft CEO Steve Ballmer characterized Linux as a “cancer that attaches itself in an intellectual property sense to everything it touches.” Six years later, Ballmer and Microsoft were on the offensive, alleging in an interview with Fortune that the Linux kernel violates 42 Microsoft patents.

In 2009, Microsoft, calling it “the community’s preferred license,” released 20,000 lines of code under the GPL, intended for inclusion into the Linux kernel. By 2011, Microsoft was testing Linux running on top of its Azure cloud platform. By 2012, Microsoft was in the top 20 contributors to the release of the 3.2 Linux kernel.

While this remarkable about-face might seem embarrassing from a public relations perspective, it’s simply good business. Microsoft’s ability to set its reservations regarding open source aside may in fact be a pivotal moment in the company’s history. The epiphany reportedly came in a meeting with Bill Gates in the summer of 2008, a week before Gates retired. His conclusion? Microsoft had no choice but to participate in open source. Credit Gates for understanding that the world had changed around the Redmond software giant. As Tim O’Brien, the General Manager of its Platform Strategy Group, told my colleague in February:

We need to think more like the Web…. [O]ne stack to run them all has gone away. This stuff about single vendor stacks is behind us. The days of recruiting developers to where you are is over. You have to go to where they are.

Microsoft remains a firm believer in the virtues of intellectual property and proprietary software. Its strategic understanding of open source was, in a sense, skin deep, in that it did nothing to fundamentally alter the company’s DNA. But its understanding that the days of dictating to developers are over is evident in its product strategy.

In the mobile world, Microsoft has implicitly blessed Xamarin, a startup that commercially sells an open source version of Microsoft’s .NET stack. On the cloud, developers can employ Microsoft’s .NET stack or erstwhile competitors like Java, JavaScript, or PHP, and build software in the open source Eclipse development environment. And since 2008, Microsoft has been a sponsor of the Apache Software Foundation, an open source governance non-profit.

In other words, the once-dominant Microsoft is adjusting to the shifting landscape, one in which the developers, not the vendors, are in charge. Steve Ballmer, famous for jumping up and down on a stage screaming, “DEVELOPERS! DEVELOPERS! DEVELOPERS!” finally seems to be putting them front and center in the company’s strategy.

In an interview with Fortune in 2007, Netflix CEO Reed Hastings summed up his company’s future simply, saying “We named the company Netflix for a reason; we didn’t name it DVDs-by-mail. The opportunity for Netflix online arrives when we can deliver content to the TV without any intermediary device.” In other words, the company’s original, popular DVD-by-mail business model that vanquished once-mighty Blockbuster was always little more than an intermediate step toward a digital model.

In 1999, Netflix’s current path toward online delivery would have been impractical, if not impossible. Not only were content owners years away from accepting the reality that users would find ways to obtain their content online—legally or otherwise—the technology was not ready. As recently as 2005, the year YouTube was founded, the combination of slower home broadband connections and immature video codecs meant that even thirty-second videos were jerky, buffered affairs. The performance of streaming full-length feature films would have been unacceptable to most customers.

Two years later, however, Netflix was ready. In 2007, Netflix’s “Watch Now”—subsequently rebranded “Watch Instantly”—debuted. In a press release, Hastings acknowledged that adoption was a multi-year proposition, but one he felt was inevitable:

We believed Internet-based movie rental represented the future, first as a means of improving service and selection, and then as a means of movie delivery. While mainstream consumer adoption of online movie watching will take a number of years due to content and technology hurdles, the time is right for Netflix to take the first step.

The age of streaming—and the beginning of the end of DVDs—was here. Four years later, Netflix Watch Instantly would make up almost a third of US Internet traffic. In 2012, the number of movies streamed online is expected to exceed the number of DVD/Blu-Ray discs sold for the first time.

Hastings’ original vision was critical because it shaped how the company was built. Netflix wasn’t disrupted by a streaming business, because it always saw itself as a streaming business that was biding its time with a DVD rental business. And because a streaming business is, by definition, a technology business, the company has always understood the role that developers, both inside and outside the company, could play in its future.

Internally, Netflix oriented its business around its developers. As cloud architect Adrian Cockcroft put it:

The typical environment you have for developers is this image that they can write code that works on a perfect machine that will always work, and operations will figure out how to create this perfect machine for them. That’s the traditional dev-ops, developer versus operations contract. But then of course machines aren’t perfect and code isn’t perfect, so everything breaks and everyone complains to each other.

So we got rid of the operations piece of that and just have the developers, so you can’t depend on everybody and you have to assume that all the other developers are writing broken code that isn’t properly deployed.

Empowering developers would seem like a straightforward decision, but it is hardly the norm. To its credit, Netflix realized not only the potential of its own technical staff, but also what might be harnessed from those not on the Netflix payroll.

Although online retailers enjoy many advantages over brick-and-mortar alternatives, browsing typically isn’t one of them. Physical stores are more easily and efficiently navigated than websites limited to the size of a computer screen. As a result, sites like Amazon.com or Netflix rely heavily on algorithms to use the limited real estate of a computer screen to present users with content matched specifically to them. Netflix’s own algorithm, Cinematch, attempted to predict what rating a given user would assign to a given film. On October 2, 2006, Netflix announced the Netflix Prize: The first team of non-employees that could best the company’s in-house algorithm by 10% would claim $1,000,000. This prize had two major implications. First, it implied that the benefits of an improved algorithm would exceed one million dollars for Netflix, presumably through customer acquisition and improvements in retention. Second, it implied that crowd-sourcing had the potential to deliver better results than the organization could produce on its own.

In this latter assumption, Netflix was proven correct. On October 8—just six days after the prize was announced—an independent team bested the Netflix algorithm, albeit by substantially less than ten percent. The 10% threshold was finally reached in 2009. In September of that year, Netflix announced that the team “BellKor’s Pragmatic Chaos”—composed of researchers from AT&T Labs, Pragmatic Theory, and Yahoo!—had won the Netflix Prize, taking home a million dollars for their efforts.
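To make the 10% threshold concrete, here is a minimal sketch of how such a comparison could be scored. It assumes accuracy is measured as root mean squared error (RMSE) between predicted and actual ratings, which is the metric the contest used. The function names and the tiny ratings lists are hypothetical, invented purely for illustration; they are not Netflix’s code or data.

```python
import math


def rmse(predicted, actual):
    """Root mean squared error between predicted and actual ratings."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("need two non-empty lists of equal length")
    squared_errors = [(p - a) ** 2 for p, a in zip(predicted, actual)]
    return math.sqrt(sum(squared_errors) / len(actual))


def improvement_pct(baseline_rmse, challenger_rmse):
    """Percentage by which the challenger lowers the baseline's error."""
    return 100.0 * (baseline_rmse - challenger_rmse) / baseline_rmse


def clears_threshold(baseline_rmse, challenger_rmse, threshold_pct=10.0):
    """True if the challenger beats the baseline by at least threshold_pct."""
    return improvement_pct(baseline_rmse, challenger_rmse) >= threshold_pct


# Hypothetical star ratings: what users actually gave, and two sets of guesses.
actual = [4, 3, 5, 2, 4]
in_house = [3.0, 4.0, 4.0, 3.0, 5.0]    # stand-in for the incumbent algorithm
challenger = [3.5, 3.5, 4.5, 2.5, 4.5]  # stand-in for an outside team's entry

baseline_error = rmse(in_house, actual)
challenger_error = rmse(challenger, actual)
print(f"in-house RMSE:   {baseline_error:.3f}")
print(f"challenger RMSE: {challenger_error:.3f}")
print(f"improvement:     {improvement_pct(baseline_error, challenger_error):.1f}%")
print(f"clears 10% bar:  {clears_threshold(baseline_error, challenger_error)}")
```

Seen this way, the team that bested Cinematch six days in would have registered a positive improvement that still fell short of the bar, which is why the prize went unclaimed until 2009.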

A year earlier, meanwhile, Netflix had enabled the recruitment of millions of other developers by providing official APIs. In September 2008, Netflix launched developer.netflix.com, where developers could independently register with Netflix to get access to APIs that would enable them to build applications that would manage users’ video queues, check availability, and access account details. Just as Netflix believed that the wider world might be able to build a better algorithm, so too did it believe that out of the millions of developers in the world, one of them might be able to build a better application than Netflix itself.

Why get in the way of those who would improve your business?
