When choosing a loss function, we must also choose a corresponding learning rate that will work with our problem. Here, we will illustrate two situations, one in which L2 is preferred and one in which L1 is preferred.

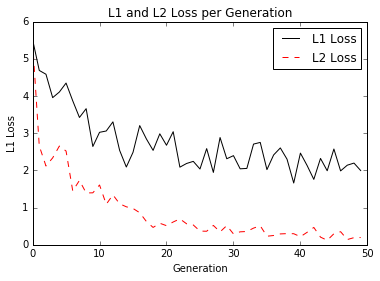

If our learning rate is too small, convergence will take more time. But if our learning rate is too large, the algorithm may overshoot and never converge. Here is a plot of the L1 and L2 losses for the iris linear regression problem when the learning rate is 0.05:

With a learning rate of 0.05, ...
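To make the interaction between loss function and learning rate concrete, here is a minimal sketch in plain NumPy. It is not the recipe's own code: the synthetic data (a stand-in for the iris features), the `train` helper, full-batch updates, and the step count are all assumptions chosen so the example is self-contained.

```python
import numpy as np

# Hypothetical stand-in for the iris regression data:
# one feature x, one target y with a roughly linear relationship.
rng = np.random.default_rng(0)
x = rng.uniform(0.1, 2.5, size=150)
y = 0.9 * x + 4.8 + rng.normal(0.0, 0.4, size=150)


def train(x, y, loss="l2", lr=0.05, steps=500):
    """Full-batch gradient descent on y ~ a*x + b; returns the loss at each step."""
    a, b = 0.0, 0.0
    history = []
    for _ in range(steps):
        residual = a * x + b - y
        if loss == "l2":
            history.append(np.mean(residual ** 2))
            grad = 2.0 * residual        # derivative of residual**2
        else:
            history.append(np.mean(np.abs(residual)))
            grad = np.sign(residual)     # subgradient of |residual|
        # Chain rule: prediction = a*x + b
        a -= lr * np.mean(grad * x)
        b -= lr * np.mean(grad)
    return np.array(history)


l1_hist = train(x, y, loss="l1", lr=0.05)
l2_hist = train(x, y, loss="l2", lr=0.05)
print("final L1 loss:", l1_hist[-1])
print("final L2 loss:", l2_hist[-1])
```

Rerunning `train` with a larger `lr` is a quick way to compare the two losses. The L2 gradient scales with the size of the residual, so its steps grow when the fit is poor, whereas the L1 subgradient has bounded magnitude, so its steps stay roughly proportional to `lr`.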