Introduction: The Rise of Modern Data Architectures

Data is creating massive waves of change and giving rise to a new data-driven economy that is only beginning. Organizations in all industries are changing their business models to monetize data, understanding that doing so is critical to competition and even survival. There is tremendous opportunity as applications, instrumented devices, and web traffic are throwing off reams of 1s and 0s, rich in analytics potential.

These analytics initiatives can reshape sales, operations, and strategy on many fronts. Real-time processing of customer data can create new revenue opportunities. Tracking devices with Internet of Things (IoT) sensors can improve operational efficiency, reduce risk, and yield new analytics insights. New artificial intelligence (AI) approaches such as machine learning can accelerate and improve the accuracy of business predictions. Such is the promise of modern analytics.

However, these opportunities change how data needs to be moved, stored, processed, and analyzed, and it’s easy to underestimate the resulting organizational and technical challenges. From a technology perspective, to achieve the promise of analytics, underlying data architectures need to efficiently process high volumes of fast-moving data from many sources. They also need to accommodate evolving business needs and multiplying data sources.

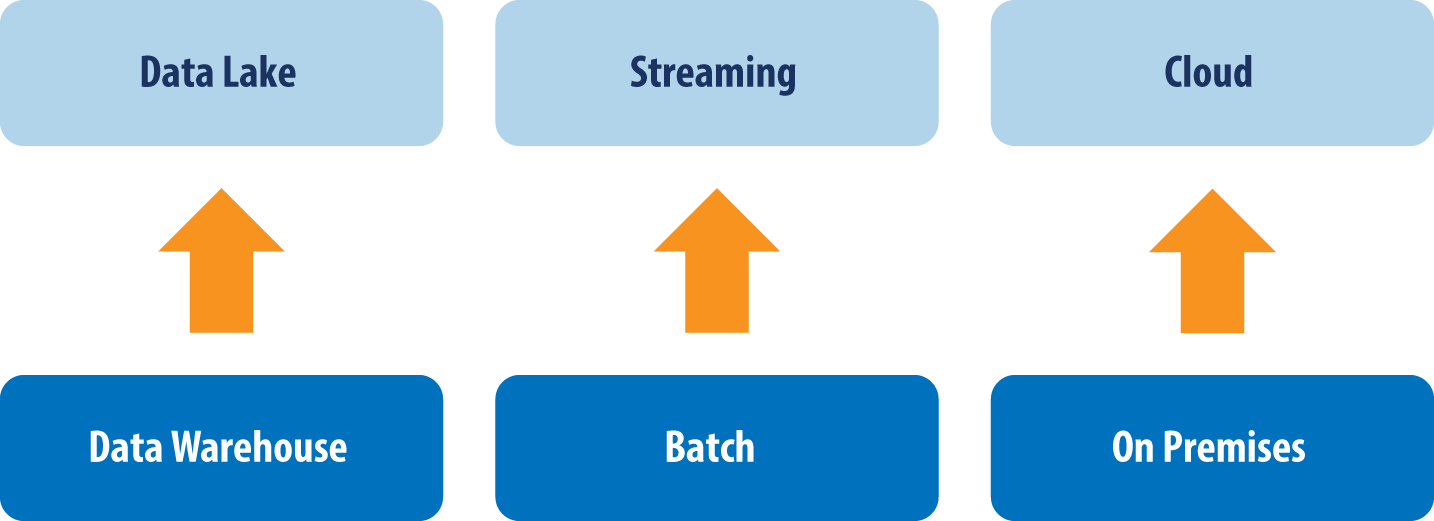

To adapt, IT organizations are embracing data lake, streaming, and cloud architectures. These platforms are complementing and even replacing the enterprise data warehouse (EDW), the traditional structured system of record for analytics. Figure I-1 summarizes these shifts.

Figure I-1. Key technology shifts

Enterprise architects and other data managers know firsthand that we are in the early phases of this transition, and it is tricky stuff. A primary challenge is data integration, which a recent TDWI survey ranked as the second most likely barrier to Hadoop data lake implementations, right behind data governance (source: “Data Lakes: Purposes, Practices, Patterns and Platforms,” TDWI, 2017). IT organizations must copy data to analytics platforms, often continuously, without disrupting production applications (a trait known as zero-impact). Data integration processes must also be scalable and efficient, absorbing high data volumes from many sources without a prohibitive increase in labor or complexity. Table I-1 summarizes the key data integration requirements of modern analytics initiatives.

| Analytics initiative | Requirement |
|---|---|
| AI (e.g., machine learning), IoT | Scale: Use data from thousands of sources with minimal development resources and impact |
| Streaming analytics | Real-time transfer: Create real-time streams from database transactions |
| Cloud analytics | Efficiency: Transfer large data volumes from multiple datacenters over limited network bandwidth |
| Agile deployment | Self-service: Enable nondevelopers to rapidly deploy solutions |
| Diverse analytics platforms | Flexibility: Easily adopt and adapt new platforms and methods |

All this entails careful planning and new technologies, because traditional batch-oriented data integration tools do not meet these requirements. Batch replication jobs and manual extract, transform, and load (ETL) scripting procedures are slow, inefficient, and disruptive: they interrupt production, tie up talented ETL programmers, and create network and processing bottlenecks. They cannot scale sufficiently to support strategic enterprise initiatives. Batch is unsustainable in today’s enterprise.

Enter Change Data Capture

A foundational technology for modernizing your environment is change data capture (CDC) software, which enables continuous incremental replication by identifying and copying data updates as they take place. When designed and implemented effectively, CDC can meet today’s scalability, efficiency, real-time, and zero-impact requirements.
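To make "identifying and copying data updates as they take place" concrete, here is a minimal sketch, assuming a hypothetical in-memory source table that appends every insert, update, and delete to an ordered change log with gap-free sequence numbers. The names `ChangeEvent`, `SourceTable`, `Replica`, `apply_changes`, and `last_lsn` are illustrative only; real CDC tools often read the database's own transaction log rather than a log the application maintains. The point is the shape of the technique: the target remembers its position and applies only the changes recorded since then.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional

Row = Dict[str, Any]


@dataclass
class ChangeEvent:
    lsn: int               # hypothetical log sequence number: 1, 2, 3, ... with no gaps
    op: str                # "insert", "update", or "delete"
    key: int               # primary key of the affected row
    row: Optional[Row]     # new row image; None for deletes


@dataclass
class SourceTable:
    """Hypothetical production table that appends every change to an ordered log."""
    rows: Dict[int, Row] = field(default_factory=dict)
    log: List[ChangeEvent] = field(default_factory=list)

    def _record(self, op: str, key: int, row: Optional[Row]) -> None:
        self.log.append(ChangeEvent(len(self.log) + 1, op, key, row))

    def insert(self, key: int, row: Row) -> None:
        self.rows[key] = dict(row)
        self._record("insert", key, dict(row))

    def update(self, key: int, row: Row) -> None:
        self.rows[key] = dict(row)
        self._record("update", key, dict(row))

    def delete(self, key: int) -> None:
        del self.rows[key]
        self._record("delete", key, None)


@dataclass
class Replica:
    """Hypothetical analytics target kept in sync by applying change events."""
    rows: Dict[int, Row] = field(default_factory=dict)
    last_lsn: int = 0      # position of the last change event already applied

    def apply_changes(self, log: List[ChangeEvent]) -> int:
        """Apply only the events recorded after last_lsn; return how many were applied."""
        # Because LSNs start at 1 with no gaps, the unapplied events are log[last_lsn:].
        new_events = log[self.last_lsn:]
        for event in new_events:
            if event.op == "delete":
                self.rows.pop(event.key, None)
            else:  # insert and update both write the new row image
                self.rows[event.key] = dict(event.row)
            self.last_lsn = event.lsn
        return len(new_events)
```

For example, after two inserts and a first `apply_changes` call, a later update and delete are picked up by a second call that reads only those two new events, leaving the replica identical to the source. A batch job, by contrast, would re-read and re-copy every row on each run regardless of how little had changed.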

Without CDC, organizations usually fail to meet modern analytics requirements. They must stop or slow production activities for batch runs, which hurts efficiency. They cannot integrate enough data, fast enough, to meet analytics objectives. As a result, they lose business opportunities and customers, and they break operational budgets.
