In a previous section, we saw how K-means works, so we can dive directly into the implementation. Since the training is unsupervised, we need to drop the label column (that is, Region):

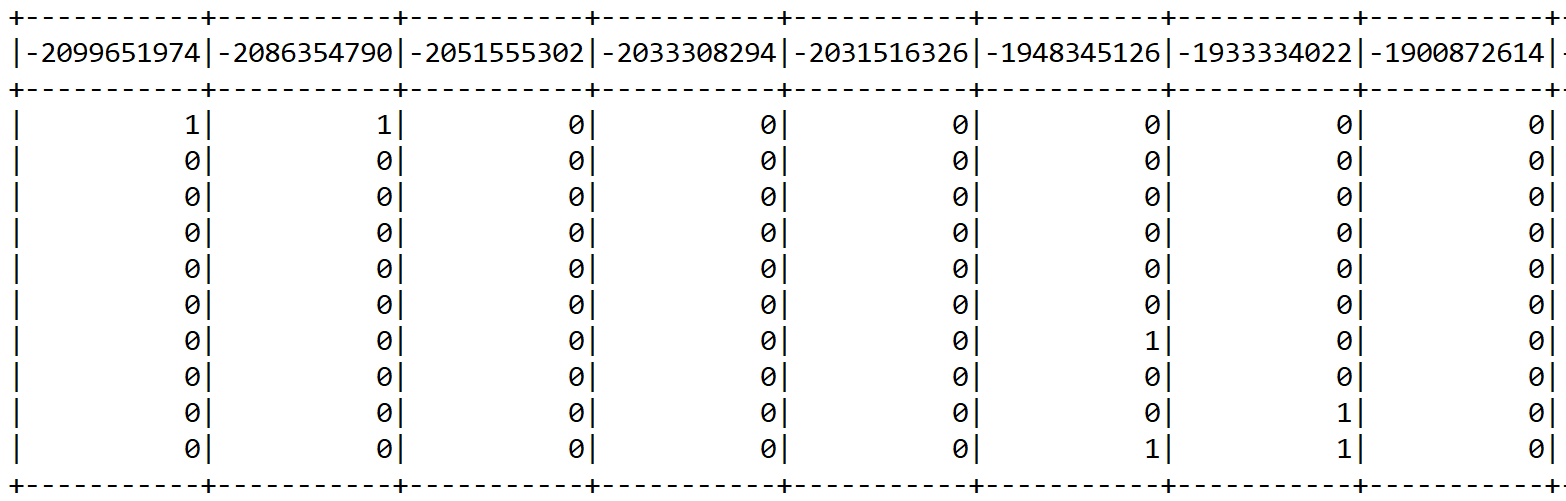

val sqlContext = sparkSession.sqlContextval schemaDF = sqlContext.createDataFrame(rowRDD, header).drop("Region")schemaDF.printSchema()schemaDF.show(10)>>>
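Conceptually, training on the label-free DataFrame amounts to the following minimal, library-free Scala sketch: discard the label, then run Lloyd's K-means iterations on the remaining numeric features. The toy rows, the `KMeansSketch` object, and its helpers are hypothetical and only illustrate the idea; the sketch naively initializes the centers with the first k points and runs a fixed number of iterations, whereas Spark ML's `KMeans` uses k-means|| initialization by default.

```scala
// Library-free sketch (hypothetical data and names): drop the label, then cluster with Lloyd's K-means.
object KMeansSketch {
  type Point = Vector[Double]

  // Hypothetical labelled rows: (region label, feature vector)
  val labelled: Vector[(String, Point)] = Vector(
    ("EUR", Vector(0.0, 0.1)),
    ("EUR", Vector(0.2, 0.0)),
    ("AFR", Vector(5.0, 5.1)),
    ("AFR", Vector(5.2, 4.9))
  )

  // Unsupervised training: keep only the features, drop the label
  val features: Vector[Point] = labelled.map(_._2)

  // Squared Euclidean distance between two points
  def sqDist(a: Point, b: Point): Double =
    a.zip(b).map { case (x, y) => (x - y) * (x - y) }.sum

  // Index of the nearest center (first one wins on ties)
  def closest(p: Point, centers: Vector[Point]): Int =
    centers.indices.minBy(i => sqDist(p, centers(i)))

  // Component-wise mean of a non-empty group of points
  def mean(ps: Vector[Point]): Point =
    ps.transpose.map(_.sum / ps.size)

  // Lloyd's algorithm with a fixed number of iterations
  def kmeans(data: Vector[Point], k: Int, iterations: Int): Vector[Point] = {
    var centers = data.take(k) // naive initialization: first k points (assumption)
    for (_ <- 1 to iterations) {
      // Assignment step: group points by their nearest center
      val clusters = data.groupBy(p => closest(p, centers))
      // Update step: move each center to the mean of its points; keep it if its cluster is empty
      centers = centers.indices.toVector.map { i =>
        clusters.get(i).map(mean).getOrElse(centers(i))
      }
    }
    centers
  }

  def main(args: Array[String]): Unit = {
    val centers = kmeans(features, k = 2, iterations = 10)
    val assignments = features.map(closest(_, centers))
    println(s"centers = $centers")
    println(s"assignments = $assignments")
  }
}
```

On this toy data the sketch assigns the two EUR rows to cluster 0 and the two AFR rows to cluster 1, even though the Region values never take part in training: that is exactly why the label column is dropped before fitting.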

Now, we have seen in Chapter 1, Analyzing Insurance Severity Claims, and Chapter 2, Analyzing and Predicting Telecommunication Churn, that Spark expects ...