To perform ordinary least squares (OLS) regression using the gradient descent (GD) algorithm, we have to minimize a cost function that measures how well a given regression line fits the data.

This cost function takes an (α, β) pair and returns an error value based on how well the corresponding line fits our data. To compute this error for a given line, we iterate through each (x, y) point in our dataset and sum the squared distances between each point's y value and the candidate line's y value (computed as αx + β).
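
The computation described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function name `compute_cost`, the `points` list, and the sample data are all hypothetical, and the sketch assumes a plain sum of squared errors with no normalization (some treatments divide by the number of points).

```python
def compute_cost(alpha, beta, points):
    """Sum of squared vertical distances between each point and the
    candidate line y = alpha * x + beta (alpha: slope, beta: intercept)."""
    total = 0.0
    for x, y in points:
        predicted = alpha * x + beta   # the candidate line's y value at x
        total += (y - predicted) ** 2  # squared distance for this point
    return total


# Made-up sample data lying exactly on the line y = 2x + 1.
points = [(1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

print(compute_cost(2.0, 1.0, points))  # 0.0  (perfect fit)
print(compute_cost(1.0, 0.0, points))  # 29.0 (errors 2, 3, 4 -> 4 + 9 + 16)
```

A lower return value means a better-fitting line, which is why gradient descent searches for the (α, β) pair that minimizes it.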

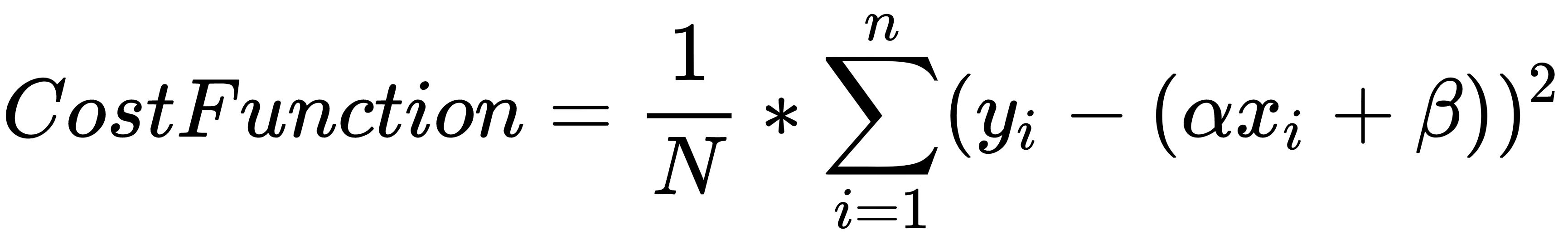

The cost function looks like the following:
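
Written out from the description above, one plausible form is the following. The symbols J and N are assumptions introduced here (N is the number of data points), and the expression assumes a plain sum with no 1/N normalization:

$$
J(\alpha, \beta) = \sum_{i=1}^{N} \bigl( y_i - (\alpha x_i + \beta) \bigr)^2
$$

Each term is the squared vertical distance between the observed value y_i and the line's prediction αx_i + β.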

If we plot the cost function (z-axis) against the linear regression coefficients α and β, the 3D plot looks like ...