As you might imagine, the benefits of parallel execution and Oracle Parallel Server do not come without a price. The next two sections discuss the various overhead issues that apply to parallel execution and Oracle Parallel Server.

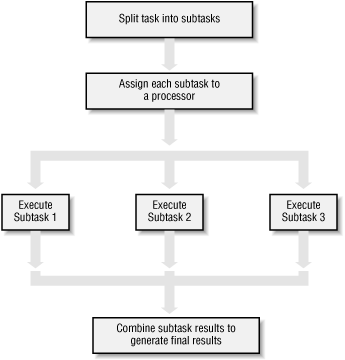

Parallel execution entails a cost in terms of the processing overhead necessary to break up a task into pieces, manage the execution of each of those pieces, and combine the results when the execution is complete. Figure 1.8 illustrates some of the steps involved in parallel execution.

Parallel execution overhead can be divided into three areas: startup cost, interference, and skew.

Startup cost refers to the time it takes to start parallel execution of a query or a DML statement. It takes time and resources to divide one large task into smaller subtasks that can be run in parallel. Time also is required to create the processes needed to execute those subtasks and to assign each subtask to one of these processes. For a large query, this startup time may not be significant in terms of the overall time required to execute the query. For a small query, however, the startup time may end up being a significant portion of the total time.
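The effect of startup cost on large and small queries can be illustrated with a rough model. The sketch below is only an illustration: the function name `startup_fraction`, the two-second startup time, and the assumption that the work divides evenly across the parallel processes are all hypothetical and do not describe how Oracle actually accounts for startup.

```python
def startup_fraction(startup_seconds, serial_seconds, degree):
    """Fraction of parallel elapsed time spent on startup.

    Assumes (hypothetically) that the serial work divides evenly
    across `degree` processes and that startup is a fixed cost.
    """
    work_seconds = serial_seconds / degree
    return startup_seconds / (startup_seconds + work_seconds)


# Hypothetical numbers: a 2-second startup cost, 4 parallel processes.
large_query = startup_fraction(2.0, 600.0, 4)  # 10-minute serial query
small_query = startup_fraction(2.0, 4.0, 4)    # 4-second serial query

print(f"large query: {large_query:.1%} of elapsed time is startup")
print(f"small query: {small_query:.1%} of elapsed time is startup")
```

Under these assumed numbers, startup is a little over one percent of the elapsed time for the large query but about two-thirds of the elapsed time for the small one.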

Interference refers to the slowdown that one process imposes on other processes when accessing shared resources. While the slowdown caused by any single process is small, the combined impact can be substantial when a large number of processors are in use.

Skew refers to the variance in execution time of parallel subtasks. As the number of parallel subtasks increases (perhaps as a result of using more processors), the amount of work performed by each subtask decreases. The result is a reduction in processing time required for each of those subtasks and also for the overall task. There are always variations, however, in the size of these subtasks. In some situations these variations may lead to large differences in execution time between the various subtasks. The net effect, when this happens, is that the processing time of the overall task becomes equivalent to that of the longest subtask.

Let’s suppose you have a query that takes ten minutes to execute without parallel processing. Let’s further suppose that, when parallel processing is used, the query is broken down into ten subtasks and that each subtask takes one minute to process. In this perfect situation, the overall query also completes in one minute, resulting in a speedup of ten. However, if one subtask takes two minutes, the response time of the overall query becomes two minutes, and the speedup achieved is only five. The skew, or the variation in execution time between subtasks, has reduced the efficiency of this particular parallel operation.
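The arithmetic in this example can be checked with a short sketch. The `speedup` function is a hypothetical helper, and the model is deliberately simplified: it assumes the parallel task finishes when its slowest subtask finishes and ignores startup cost and interference.

```python
def speedup(serial_minutes, subtask_minutes):
    """Speedup when the overall task ends with its slowest subtask."""
    parallel_minutes = max(subtask_minutes)
    return serial_minutes / parallel_minutes


balanced = [1.0] * 10        # ten subtasks of one minute each
skewed = [1.0] * 9 + [2.0]   # one subtask takes two minutes

print(speedup(10.0, balanced))  # 10.0
print(speedup(10.0, skewed))    # 5.0
```

A single slow subtask halves the speedup even though the other nine subtasks are unchanged.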

When Oracle Parallel Server is used, multiple instances need to synchronize access to the objects in the shared database. Synchronization is achieved by passing messages back and forth between the OPS nodes. A lock manager called the Integrated Distributed Lock Manager (IDLM) facilitates this synchronization. The amount of synchronization required depends on the data access requirements of the particular applications running on each of the OPS nodes. If multiple OPS nodes are trying to access the same object, they contend with one another for it. This contention increases the need for synchronization, resulting in a high amount of overhead. An OPS database needs to be designed and tuned to minimize this overhead. Otherwise, the required synchronization overhead may reduce, or even negate, the benefits of using OPS in the first place.

Oracle’s parallel execution features can be, and often are, used in an OPS environment. If you do use them in an OPS environment, then all the issues discussed in the previous section on parallel execution overhead also apply.
