Chapter 7. Intermediate Sequence Modeling for Natural Language Processing

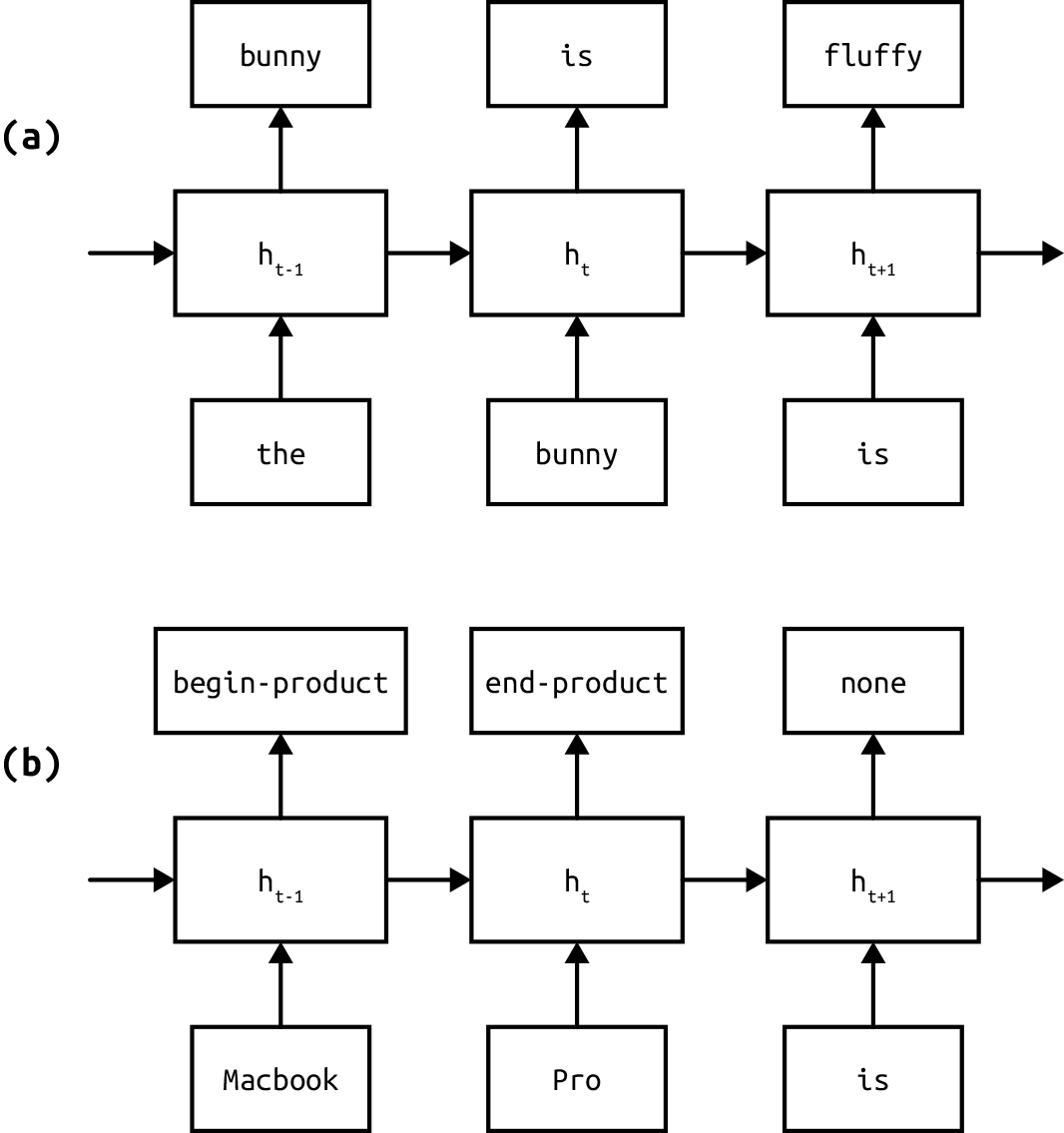

The goal of this chapter is sequence prediction. Sequence prediction tasks require us to label each item of a sequence. Such tasks are common in natural language processing (NLP). Examples include language modeling (see Figure 7-1), in which, at each step, we predict the next word given the sequence of words so far; part-of-speech tagging, in which we predict the grammatical part of speech of each word; and named entity recognition, in which we predict whether each word is part of a named entity, such as a Person, Location, Product, or Organization, among others. In the NLP literature, sequence prediction tasks are sometimes also referred to as sequence labeling.
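To make "label each item of a sequence" concrete, the following plain-Python sketch shows how the training pairs for two of these tasks might be laid out. For language modeling, the label at each position is simply the next token, so the targets are the inputs shifted by one. For tagging, every input word carries exactly one label. The `<BOS>`/`<EOS>` boundary markers, the helper names, and the tags themselves are illustrative assumptions, not the book's data format.

```python
def language_model_pairs(tokens):
    """Return (inputs, targets) for next-word prediction.

    The target at position t is the token at position t + 1, so the
    targets are the inputs shifted left by one step.
    """
    return tokens[:-1], tokens[1:]


def tagging_pairs(words, tags):
    """Pair each word with its label; tagging needs exactly one label per word."""
    if len(words) != len(tags):
        raise ValueError("sequence labeling requires one label per token")
    return list(zip(words, tags))


# Language modeling: <BOS>/<EOS> boundary markers are an assumed convention
sentence = ["<BOS>", "the", "cat", "sat", "<EOS>"]
inputs, targets = language_model_pairs(sentence)
# inputs  -> ['<BOS>', 'the', 'cat', 'sat']
# targets -> ['the', 'cat', 'sat', '<EOS>']

# Part-of-speech tagging: hand-written, illustrative tags
pairs = tagging_pairs(["the", "cat", "sat"], ["DET", "NOUN", "VERB"])
# pairs -> [('the', 'DET'), ('cat', 'NOUN'), ('sat', 'VERB')]
```

In both cases the model emits one prediction per input position, which is what distinguishes sequence prediction from classifying a whole sequence at once.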

Although in theory we can use the Elman recurrent neural networks (RNNs) introduced in Chapter 6 for sequence prediction tasks, they struggle to capture long-range dependencies and perform poorly in practice. In this chapter, we spend some time understanding why that is the case and learn about a new type of RNN architecture called the gated network.
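As a preview of what "gated" means, here is a minimal NumPy sketch of one widely used gated variant, the gated recurrent unit (GRU). Unlike an Elman cell, which overwrites its hidden state at every step, the GRU computes an update gate `z` that decides, per dimension, how much of the previous state to carry forward unchanged. The class and function names, the uniform initialization, and the placement of the bias outside the reset-gated term are assumptions for illustration; this is not the book's implementation, and real models would use a framework layer such as PyTorch's `nn.GRU`.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class GRUCell:
    """Minimal GRU cell (hypothetical helper) using one common gate formulation."""

    def __init__(self, input_size, hidden_size, seed=0):
        rng = np.random.default_rng(seed)
        scale = 1.0 / np.sqrt(hidden_size)
        self.hidden_size = hidden_size
        # Separate input weights (W), recurrent weights (U), and biases (b)
        # for the reset gate "r", update gate "z", and candidate state "n"
        self.W = {g: rng.uniform(-scale, scale, (hidden_size, input_size)) for g in "rzn"}
        self.U = {g: rng.uniform(-scale, scale, (hidden_size, hidden_size)) for g in "rzn"}
        self.b = {g: np.zeros(hidden_size) for g in "rzn"}

    def step(self, x, h):
        r = sigmoid(self.W["r"] @ x + self.U["r"] @ h + self.b["r"])  # reset gate
        z = sigmoid(self.W["z"] @ x + self.U["z"] @ h + self.b["z"])  # update gate
        # Candidate state: the reset gate controls how much history it sees
        n = np.tanh(self.W["n"] @ x + r * (self.U["n"] @ h) + self.b["n"])
        # Interpolate: z near 1 keeps the old state, z near 0 adopts the candidate
        return (1.0 - z) * n + z * h


def run_gru(cell, xs):
    """Run the cell over a sequence and return one hidden state per time step."""
    h = np.zeros(cell.hidden_size)
    states = []
    for x in xs:
        h = cell.step(x, h)
        states.append(h)
    return np.stack(states)


rng = np.random.default_rng(1)
xs = rng.normal(size=(5, 3))  # 5 time steps, 3 input features each
states = run_gru(GRUCell(input_size=3, hidden_size=4), xs)
print(states.shape)  # (5, 4): one hidden vector per input position
```

For a sequence prediction task, each row of `states` would typically be passed through a linear layer to score the possible labels at that position. The additive, gated path through `z * h` is what lets information (and gradients) survive across many steps more easily than in an Elman RNN.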

We also introduce the task of natural language generation as an application of sequence prediction and explore conditioned generation, in which the output sequence is constrained in some manner.
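Generation reuses the sequence prediction machinery in a loop: predict a distribution over the next token, pick one, and feed it back as the next input until an end marker appears. The sketch below illustrates that loop with a toy, hand-built bigram table standing in for a trained model. Conditioning is modeled crudely by choosing a different table per condition; in a neural model the condition would more typically be encoded as a vector, for example to initialize the RNN's hidden state. The vocabulary, the `MODELS` tables, and `generate` are all hypothetical.

```python
import numpy as np

VOCAB = ["<BOS>", "<EOS>", "the", "cat", "dog", "sat", "ran"]
IDX = {w: i for i, w in enumerate(VOCAB)}


def make_table(transitions):
    """Build a row-normalized next-token probability table from (prev, next) pairs."""
    table = np.full((len(VOCAB), len(VOCAB)), 1e-3)  # small mass on every transition
    for prev, nxt in transitions:
        table[IDX[prev], IDX[nxt]] = 1.0
    return table / table.sum(axis=1, keepdims=True)


# Hypothetical condition-specific "models": the condition constrains the output
MODELS = {
    "cat": make_table([("<BOS>", "the"), ("the", "cat"), ("cat", "sat"), ("sat", "<EOS>")]),
    "dog": make_table([("<BOS>", "the"), ("the", "dog"), ("dog", "ran"), ("ran", "<EOS>")]),
}


def generate(condition, max_len=10, greedy=True, rng=None):
    """Autoregressively generate tokens, feeding each prediction back as input."""
    if rng is None:
        rng = np.random.default_rng(0)
    table = MODELS[condition]
    token = IDX["<BOS>"]
    output = []
    for _ in range(max_len):
        probs = table[token]  # distribution over the next token
        if greedy:
            token = int(np.argmax(probs))  # most likely next token
        else:
            token = int(rng.choice(len(VOCAB), p=probs))  # sample from the distribution
        if token == IDX["<EOS>"]:
            break
        output.append(VOCAB[token])
    return output


print(generate("cat"))  # ['the', 'cat', 'sat']
print(generate("dog"))  # ['the', 'dog', 'ran']
```

Greedy decoding always picks the most probable token, while sampling (`greedy=False`) trades some likelihood for variety; the `max_len` cap guards against sequences that never produce `<EOS>`.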

Figure 7-1. Two examples of sequence prediction tasks: (a) language modeling, in which the task is to predict the next word in a sequence; ...
