Chapter 1. To Embed or Reference

When building a new application, often one of the first things you’ll want to do is to design its data model. In relational databases such as MySQL, this step is formalized in the process of normalization, focused on removing redundancy from a set of tables. MongoDB, unlike relational databases, stores its data in structured documents rather than the fixed tables required in relational databases. For instance, relational tables typically require each row-column intersection to contain a single, scalar value. MongoDB BSON documents allow for more complex structure by supporting arrays of values (where each array itself may be composed of multiple subdocuments).

This chapter explores one of the options that MongoDB’s rich document model leaves open to you: the question of whether you should embed related objects within one another or reference them by ID. Here, you’ll learn how to weigh performance, flexibility, and complexity against one another as you make this decision.

Relational Data Modeling and Normalization

Before jumping into MongoDB’s approach to the question of embedding documents or linking documents, we’ll take a little detour into how you model certain types of relationships in relational (SQL) databases. In relational databases, data modeling typically progresses by modeling your data as a series of tables, consisting of rows and columns, which collectively define the schema of your data. Relational database theory has defined a number of ways of putting application data into tables, referred to as normal forms. Although a detailed discussion of relational modeling goes beyond the scope of this text, there are two forms that are of particular interest to us here: first normal form and third normal form.

What Is a Normal Form, Anyway?

Schema normalization typically begins by putting your application data into the first normal form (1NF). Although there are specific rules that define exactly what 1NF means, that’s a little beyond what we want to cover here. For our purposes, we can consider 1NF data to be any data that’s tabular (composed of rows and columns), with each row-column intersection (“cell”) containing exactly one value. This requirement that each cell contains exactly one value is, as we’ll see later, a requirement that MongoDB does not impose, with the potential for some nice performance gains. Back in our relational case, let’s consider a phone book application. Your initial data might be of the following form, shown in Table 1-1.

| id | name | phone_number | zip_code |

1 | Rick | 555-111-1234 | 30062 |

2 | Mike | 555-222-2345 | 30062 |

3 | Jenny | 555-333-3456 | 01209 |

This data is actually already in first normal form. Suppose, however, that we wished to allow for multiple phone numbers for each contact, as in Table 1-2.

| id | name | phone_numbers | zip_code |

1 | Rick | 555-111-1234 | 30062 |

2 | Mike | 555-222-2345;555-212-2322 | 30062 |

3 | Jenny | 555-333-3456;555-334-3411 | 01209 |

Now we have a table that’s no longer in first normal form. If we were to

actually store data in this form in a relational database, we would have to

decide whether to store phone_numbers as an unstructured BLOB of text or as

separate columns (i.e., phone_number0, phone_number1). Suppose we decided

to store phone_numbers as a text column, as shown in

Table 1-2. If we needed to implement something like caller ID,

finding the name for a given phone number, our SQL query would look something

like the following:

SELECTnameFROMcontactsWHEREphone_numbersLIKE'%555-222-2345%';

Unfortunately, using a LIKE clause that’s not a prefix means that this query

requires a full table scan to be satisfied.

Alternatively, we can use multiple columns, one for each phone number, as shown in Table 1-3.

| id | name | phone_number0 | phone_number1 | zip_code |

1 | Rick | 555-111-1234 | NULL | 30062 |

2 | Mike | 555-222-2345 | 555-212-2322 | 30062 |

3 | Jenny | 555-333-3456 | 555-334-3411 | 01209 |

In this case, our caller ID query becomes quite verbose:

SELECTnameFROMcontactsWHEREphone_number0='555-222-2345'ORphone_number1='555-222-2345';

Updates are also more complicated, particularly deleting a phone number, since we

either need to parse the phone_numbers field and rewrite it or find and nullify

the matching phone number field. First normal form addresses these issues by

breaking up multiple phone numbers into multiple rows, as in Table 1-4.

| id | name | phone_number | zip_code |

1 | Rick | 555-111-1234 | 30062 |

2 | Mike | 555-222-2345 | 30062 |

2 | Mike | 555-212-2322 | 30062 |

2 | Jenny | 555-333-3456 | 01209 |

2 | Jenny | 555-334-3411 | 01209 |

Now we’re back to first normal form, but we had to introduce some redundancy into our data model. The problem with redundancy, of course, is that it introduces the possibility of inconsistency, where various copies of the same data have different values. To remove this redundancy, we need to further normalize the data by splitting it into two tables: Table 1-5 and Table 1-6. (And don’t worry, we’ll be getting back to MongoDB and how it can solve your redundancy problems without normalization really soon now.)

| contact_id | phone_number |

1 | 555-111-1234 |

2 | 555-222-2345 |

2 | 555-212-2322 |

3 | 555-333-3456 |

3 | 555-334-3411 |

As part of this step, we must identify a key column which uniquely identifies

each row in the table so that we can create links between the tables.

In the data model presented in Table 1-5 and Table 1-6, the contact_id forms the key of the contacts table,

and the (contact_id, phone_number) pair forms the key of the numbers table.

In this case, we have a data model that is free of redundancy, allowing us to

update a contact’s name, zip code, or various phone numbers without

having to worry about updating multiple rows.

In particular, we no longer need to worry about inconsistency in the data model.

So What’s the Problem?

As already mentioned, the nice thing about normalization is that it allows for

easy updating without any redundancy. Each fact about the application domain can

be updated by changing just one value, at one row-column intersection. The

problem arises when you try to get the data back out. For instance, in our

phone book application, we may want to have a form that displays a contact along

with all of his or her phone numbers.

In cases like these, the relational database programmer reaches for a JOIN:

SELECTname,phone_numberFROMcontactsLEFTJOINnumbersONcontacts.contact_id=numbers.contact_idWHEREcontacts.contact_id=3;

The result of this query? A result set like that shown in Table 1-7.

Indeed, the database has given us all the data we need to satisfy our screen design. The real problem is in what the database had to do to create this result set, particularly if the database is backed by a spinning magnetic disk. To see why, we need to briefly look at some of the physical characteristics of such devices.

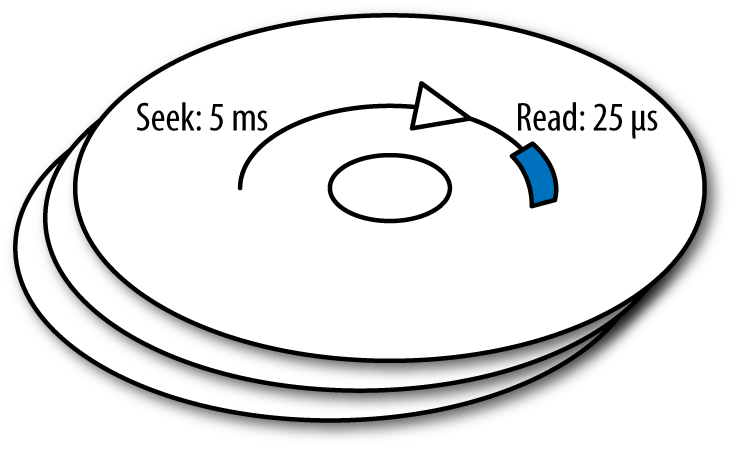

Spinning disks have the property that it takes much longer to seek to a particular location on the disk than it does, once there, to sequentially read data from the disk (see Figure 1-1). For instance, a modern disk might take 5 milliseconds to seek to the place where it can begin reading. Once it is there, however, it can read data at a rate of 40–80 MBs per second. For an application like our phone book, then, assuming a generous 1,024 bytes per row, reading a row off the disk would take between 12 and 25 microseconds.

The end result of all this math? The seek takes well over 99% of the time spent

reading a row. When it comes to disk access, random seeks are the enemy. The

reason why this is so important in this context is because JOINs typically require

random seeks. Given our normalized data model, a likely plan for our query

would be something similar to the following Python code:

fornumber_rowinfind_by_contact_id(numbers,3):yield(contact_row.name,number_row.number)

So there ends up being at least one disk seek for every contact in our

database. Of course, we’ve glossed over how find_by_contact_id works, assuming

that all it needs to do is a single disk seek. Typically, this is actually

accomplished by reading an index on numbers that is keyed by contact_id,

potentially resulting in even more disk seeks.

Of course, modern database systems have evolved structures to mitigate some of this, largely by caching frequently used objects (particularly indexes) in RAM. However, even with such optimizations, joining tables is one of the most expensive operations that relational databases do. Additionally, if you end up needing to scale your database to multiple servers, you introduce the problem of generating a distributed join, a complex and generally slow operation.

Denormalizing for Performance

The dirty little secret (which isn’t really so secret) about relational databases

is that once we have gone through the data modeling process to generate our nice

nth normal form data model, it’s often necessary to denormalize the model to

reduce the number of JOIN operations required for the queries we execute

frequently.

In this case, we might just revert to storing the name and

contact_id redundantly in the row. Of course, doing this results in the

redundancy we were trying to get away from, and leads to greater application

complexity, as we have to make sure to update data in all its redundant locations.

MongoDB: Who Needs Normalization, Anyway?

Into this mix steps MongoDB with the notion that your data doesn’t always have to be tabular, basically throwing most of traditional database normalization out, starting with first normal form. In MongoDB, data is stored in documents. This means that where the first normal form in relational databases required that each row-column intersection contain exactly one value, MongoDB allows you to store an array of values if you so desire.

Fortunately for us as application designers, that opens up some new possibilities in schema design. Because MongoDB can natively encode such multivalued properties, we can get many of the performance benefits of a denormalized form without the attendant difficulties in updating redundant data. Unfortunately for us, it also complicates our schema design process. There is no longer a “garden path” of normalized database design to go down, and the go-to answer when faced with general schema design problems in MongoDB is “it depends.”

MongoDB Document Format

Before getting into detail about when and why to use MongoDB’s array types, let’s review just what a MongoDB document is. Documents in MongoDB are modeled after the JSON (JavaScript Object Notation) format, but are actually stored in BSON (Binary JSON). Briefly, what this means is that a MongoDB document is a dictionary of key-value pairs, where the value may be one of a number of types:

In our example phone book application, we might store Jenny’s contact information in a document as follows:

{"_id":3,"name":"Jenny","zip_code":"01209","numbers":["555-333-3456","555-334-3411"]}

As you can see, we’re now able to store contact information in the initial

Table 1-2 format without going through the process of

normalization. Alternatively, we could “normalize” our model to remove the

array, referencing the contact document by its _id field:

// Contact document:{"_id":3,"name":"Jenny","zip_code":"01209"}// Number documents:{"contact_id":3,"number":"555-333-3456"}{"contact_id":3,"number":"555-334-3411"}

The remainder of this chapter is devoted to helping you decide whether referencing or embedding is the correct solution in various contexts.

Embedding for Locality

One reason you might want to embed your one-to-many relationships is data locality. As discussed earlier, spinning disks are very good at sequential data transfer and very bad at random seeking. And since MongoDB stores documents contiguously on disk, putting all the data you need into one document means that you’re never more than one seek away from everything you need.

MongoDB also has a limitation (driven by the desire for easy database partitioning)

that there are no JOIN operations available. For instance, if you used

referencing in the phone book application, your application might do something

like the following:

contact_info=db.contacts.find_one({'_id':3})number_info=list(db.numbers.find({'contact_id':3})

If we take this approach, however, we’re left with a problem that’s actually worse than a relational ‘JOIN` operation. Not only does the database still have to do multiple seeks to find our data, but we’ve also introduced additional latency into the lookup since it now takes two round-trips to the database to retrieve our data. Thus, if your application frequently accesses contacts’ information along with all their phone numbers, you’ll almost certainly want to embed the numbers within the contact record.

Embedding for Atomicity and Isolation

Another concern that weighs in favor of embedding is the desire for atomicity

and isolation in writing data.

When we update data in our database, we want to ensure that our

update either succeeds or fails entirely, never having a “partial success,” and

that any other database reader never sees an incomplete write operation.

Relational databases achieve this by using multistatement transactions. For

instance, if we want to DELETE Jenny from our normalized database, we might

execute code similar to the following:

BEGINTRANSACTION;DELETEFROMcontactsWHEREcontact_id=3;DELETEFROMnumbersWHEREcontact_id=3;COMMIT;

The problem with using this approach in MongoDB is that MongoDB is designed without multidocument transactions. If we tried to delete Jenny from our “normalized” MongoDB schema, we would need to execute the following code:

db.contacts.remove({'_id':3})db.numbers.remove({'contact_id':3})

Why no transactions?

MongoDB was designed from the ground up to be easy to scale to multiple distributed servers. Two of the biggest problems in distributed database design are distributed join operations and distributed transactions. Both of these operations are complex to implement, and can yield poor performance or even downtime in the event that a server becomes unreachable. By “punting” on these problems and not supporting joins or multidocument transactions at all, MongoDB has been able to implement an automatic sharding solution with much better scaling and performance characteristics than you’d normally be stuck with if you had to take relational joins and transactions into account.

Using this approach, we introduce the possibility that Jenny could be removed

from the contacts collection but have her numbers remain in the numbers

collection.

There’s also the possibility that another process reads the database

after Jenny’s been removed from the contacts collection, but before her numbers

have been removed.

On the other hand, if we use the embedded schema, we can remove Jenny

from our database with a single operation:

db.contacts.remove({'_id':3})

Note

One point of interest is that many relational database systems relax the requirement that transactions be completely isolated from one another, introducing various isolation levels. Thus, if you can structure your updates to be single-document updates only, you can get the effect of the serialized (most conservative) isolation level without any of the performance hits in a relational database system.

Referencing for Flexibility

In many cases, embedding is the approach that will provide the best performance and data consistency guarantees. However, in some cases, a more normalized model works better in MongoDB. One reason you might consider normalizing your data model into multiple collections is the increased flexibility this gives you in performing queries.

For instance, suppose we have a blogging application that contains posts and comments. One approach would be to use an embedded schema:

{"_id":"First Post","author":"Rick","text":"This is my first post","comments":[{"author":"Stuart","text":"Nice post!"},...]}

Although this schema works well for creating and displaying comments and posts, suppose we wanted to add a feature that allows you to search for all the comments by a particular user. The query (using this embedded schema) would be the following:

db.posts.find({'comments.author':'Stuart'},{'comments':1})

The result of this query, then, would be documents of the following form:

{"_id":"First Post","comments":[{"author":"Stuart","text":"Nice post!"},{"author":"Mark","text":"Dislike!"}]},{"_id":"Second Post","comments":[{"author":"Danielle","text":"I am intrigued"},{"author":"Stuart","text":"I would like to subscribe"}]}

The major drawback to this approach is that we get back much more data than we actually need. In particular, we can’t ask for just Stuart’s comments; we have to ask for posts that Stuart has commented on, which includes all the other comments on those posts as well. Further filtering would then be required in our Python code:

defget_comments_by(author):forpostindb.posts.find({'comments.author':author},{'comments':1}):forcommentinpost['comments']:ifcomment['author']==author:yieldpost['_id'],comment

On the other hand, suppose we decided to use a normalized schema:

// db.posts schema{"_id":"First Post","author":"Rick","text":"This is my first post"}// db.comments schema{"_id":ObjectId(...),"post_id":"First Post","author":"Stuart","text":"Nice post!"}

Our query to retrieve all of Stuart’s comments is now quite straightforward:

db.comments.find({"author":"Stuart"})

In general, if your application’s query pattern is well-known and data tends to

be accessed in only one way, an embedded approach works well. Alternatively, if

your application may query data in many different ways, or you are not able to

anticipate the patterns in which data may be queried, a more “normalized” approach

may be better.

For instance, in our “linked” schema, we’re able to sort the comments we’re

interested in, or restrict the number of comments returned from a query using

limit() and skip() operators, whereas in the embedded case, we’re stuck

retrieving all the comments in the same order they are stored in the post.

Referencing for Potentially High-Arity Relationships

Another factor that may weigh in favor of a more normalized model using document references is when you have one-to-many relationships with very high or unpredictable arity. For instance, a popular blog with a large amount of reader engagement may have hundreds or even thousands of comments for a given post. In this case, embedding carries significant penalties with it:

The problem with taking up too much RAM is that RAM is usually the most critical resource on a MongoDB server. In particular, a MongoDB database caches frequently accessed documents in RAM, and the larger those documents are, the fewer that will fit. The fewer documents in RAM, the more likely the server is to page fault to retrieve documents, and ultimately page faults lead to random disk I/O.

In the case of our blogging platform, we may only wish to display the first three comments by default when showing a blog entry. Storing all 500 comments along with the entry, then, is simply wasting that RAM in most cases.

The second point, that growing documents need to be copied, has to do with update performance. As you append to the embedded comments array, eventually MongoDB is going to need to move the document to an area with more space available. This movement, when it happens, significantly slows update performance.

The final point, about the size limit of MongoDB documents, means that if you have a potentially unbounded arity in your relationship, it is possible to run out of space entirely, preventing new comments from being posted on an entry. Although this is something to be aware of, you will usually run into problems due to memory pressure and document copying well before you reach the 16 MB size limit.

Many-to-Many Relationships

One final factor that weighs in favor of using document references is the case of many-to-many or M:N relationships. For instance, suppose we have an ecommerce system storing products and categories. Each product may be in multiple categories, and each category may contain multiple products. One approach we could use would be to mimic a relational many-to-many schema and use a “join collection”:

// db.product schema{"_id":"My Product",...}// db.category schema{"_id":"My Category",...}// db.product_category schema{"_id":ObjectId(...),"product_id":"My Product","category_id":"My Category"}

Although this approach gives us a nicely normalized model, our queries end up doing a lot of application-level “joins”:

defget_product_with_categories(product_id):product=db.product.find_one({"_id":product_id})category_ids=[p_c['category_id']forp_cindb.product_category.find({"product_id":product_id})]categories=db.category.find({"_id":{"$in":category_ids}})returnproduct,categories

Retrieving a category with its products is similarly complex. Alternatively, we can store the objects completely embedded in one another:

// db.product schema{"_id":"My Product","categories":[{"_id":"My Category",...}...]}// db.category schema{"_id":"My Category","products":[{"_id":"My Product",...}...]}

Our query is now much simpler:

defget_product_with_categories(product_id):returndb.product.find_one({"_id":product_id})

Of course, if we want to update a product or a category, we must update it in its own collection as well as every place where it has been embedded into another document:

defsave_product(product):db.product.save(product)db.category.update({'products._id':product['_id']},{'$set':{'products.*':product}},multi=True)

For many-to-many joins, a compromise approach is often best, embedding

a list of _id values rather than the full document:

// db.product schema{"_id":"My Product","category_ids":["My Category",...]}// db.category schema{"_id":"My Category"}

Our query is now a bit more complex, but we no longer need to worry about updating a product everywhere it’s included in a category:

defget_product_with_categories(product_id):product=db.product.find_one({"_id":product_id})categories=list(db.category.find({'_id':{'$in':product['category_ids']}}))returnproduct,categories

Conclusion

Schema design in MongoDB tends to be more of an art than a science, and one of

the earlier decisions you need to make is whether to embed a one-to-many

relationship as an array of subdocuments or whether to follow a more relational

approach and reference documents by their _id value.

The two largest benefits to embedding subdocuments are data locality within a document and the ability of MongoDB to make atomic updates to a document (but not between two documents). Weighing against these benefits is a reduction in flexibility when you embed, as you’ve “pre-joined” your documents, as well as a potential for problems if you have a high-arity relationship.

Ultimately, the decision depends on the access patterns of your application, and there are fewer hard-and-fast rules in MongoDB than there are in relational databases. Using wisely the flexibility that MongoDB gives you in schema design will help you get the most out of this powerful nonrelational database.

Get MongoDB Applied Design Patterns now with the O’Reilly learning platform.

O’Reilly members experience books, live events, courses curated by job role, and more from O’Reilly and nearly 200 top publishers.