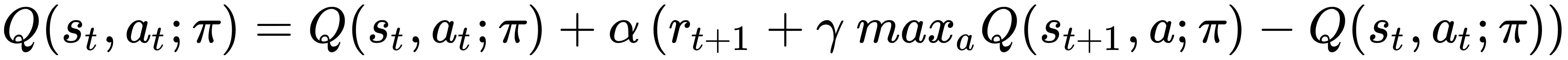

This algorithm was proposed by Watkins (in Watkins C.I.C.H., *Learning from Delayed Rewards*, Ph.D. Thesis, University of Cambridge, 1989; and further analyzed in Watkins C.I.C.H., Dayan P., *Technical Note: Q-Learning*, Machine Learning 8, 1992) and is commonly presented as a more efficient alternative to SARSA. The main feature of Q-learning is that the TD update rule is immediately greedy with respect to the $Q(s_{t+1}, a)$ function:
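
In its standard textbook form, the update can be written as follows. The symbols $\alpha$ (learning rate), $\gamma$ (discount factor), and $r_{t+1}$ (reward observed after taking $a_t$) are assumed here, since the excerpt does not fix them:

$$
Q(s_t, a_t) \leftarrow Q(s_t, a_t) + \alpha \left[ r_{t+1} + \gamma \max_{a} Q(s_{t+1}, a) - Q(s_t, a_t) \right]
$$

A minimal Python sketch of this single update step, assuming a tabular Q function stored as a list of lists indexed as `Q[state][action]`. The function name `q_learning_update` and the `terminal` flag are hypothetical, introduced only for illustration:

```python
def q_learning_update(Q, s, a, r, s_next, alpha=0.1, gamma=0.9, terminal=False):
    """Apply one tabular Q-learning update in place and return the TD error.

    Q is a list of lists, Q[state][action] (an assumed layout).
    """
    # Greedy bootstrap: the best value achievable from the successor state,
    # regardless of which action the behavior policy will actually pick there.
    best_next = 0.0 if terminal else max(Q[s_next])
    target = r + gamma * best_next

    # TD error: gap between the greedy target and the current estimate.
    td_error = target - Q[s][a]
    Q[s][a] += alpha * td_error
    return td_error


# Toy example: 3 states, 2 actions, all values start at zero.
Q = [[0.0, 0.0] for _ in range(3)]
Q[1] = [0.5, 2.0]  # pretend state 1 already has some estimates

delta = q_learning_update(Q, s=0, a=1, r=1.0, s_next=1, alpha=0.5, gamma=0.9)
```

Replacing `max(Q[s_next])` with the value of the action actually selected in `s_next` would give the SARSA update instead; the `max` is what makes the rule greedy with respect to the successor state.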

The key idea is to compare the current $Q(s_t, a_t)$ value with the maximum Q value achievable when the agent is in the successor state. In fact, as the policy must be GLIE, the convergence speed can be increased by avoiding wrong estimations ...