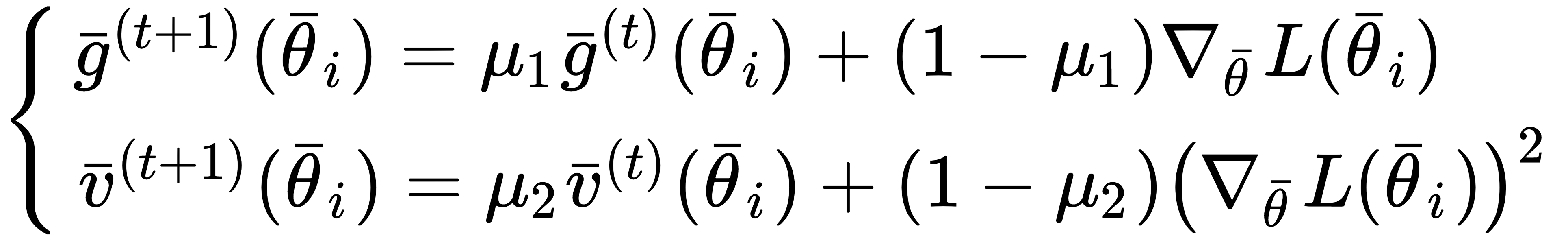

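Adam (short for Adaptive Moment Estimation) is an algorithm proposed by Kingma and Ba (in Adam: A Method for Stochastic Optimization, Kingma D. P., Ba J., arXiv:1412.6980 [cs.LG]) to further improve the performance of RMSProp. The algorithm determines an adaptive learning rate by computing, for every parameter θi, the exponentially weighted moving averages of both the gradient and its square (both averages are initialized to zero):

$$
m_i^{(t+1)} = \mu_1 m_i^{(t)} + (1 - \mu_1)\,\nabla_{\theta_i} L\left(\theta^{(t)}\right)
$$

$$
v_i^{(t+1)} = \mu_2 v_i^{(t)} + (1 - \mu_2)\left(\nabla_{\theta_i} L\left(\theta^{(t)}\right)\right)^2
$$

Here μ1 and μ2 (β1 and β2 in the paper) are decay factors in [0, 1); the paper suggests μ1 = 0.9 and μ2 = 0.999.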
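
The two moving averages can be sketched in a few lines of plain Python. This is only an illustration, assuming parameters and gradients are stored as lists of floats (a real implementation would typically use arrays); the helper name `update_moments` is hypothetical.

```python
def update_moments(m, v, grad, mu1=0.9, mu2=0.999):
    """One step of the exponentially weighted averages (hypothetical helper).

    m, v and grad are lists with one entry per parameter.
    """
    # First moment: moving average of the gradient
    new_m = [mu1 * mi + (1.0 - mu1) * gi for mi, gi in zip(m, grad)]
    # Second moment: moving average of the squared gradient
    new_v = [mu2 * vi + (1.0 - mu2) * gi * gi for vi, gi in zip(v, grad)]
    return new_m, new_v


# Two parameters, both averages initialized to zero
m, v = [0.0, 0.0], [0.0, 0.0]
m, v = update_moments(m, v, grad=[2.0, -1.0])
print(m, v)
```

After a single step, `m` holds only a tenth of the gradient (about 0.2 instead of 2.0) and `v` about a thousandth of its square: because the averages start from zero, they underestimate the true moments during the first iterations.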

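Because both averages start from zero, they are biased toward zero during the first iterations. In the aforementioned paper, the authors suggest correcting the two estimates (of the first and second moments) by dividing them by 1 - μi^t, where t is the number of updates performed so far, so the bias-corrected moving averages become:

$$
\hat{m}_i^{(t)} = \frac{m_i^{(t)}}{1 - \mu_1^t} \qquad \hat{v}_i^{(t)} = \frac{v_i^{(t)}}{1 - \mu_2^t}
$$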
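
Why the divisor is 1 - μ^t can be checked numerically: for a constant gradient g, the raw average after t steps equals (1 - μ1^t) g, so dividing by 1 - μ1^t recovers g (and likewise g² for the second moment). The sketch below assumes list-based parameters; `bias_correct` is a hypothetical helper name.

```python
def bias_correct(m, v, t, mu1=0.9, mu2=0.999):
    """Divide the raw averages by 1 - mu**t, where t >= 1 counts the updates so far."""
    c1 = 1.0 - mu1 ** t
    c2 = 1.0 - mu2 ** t
    return [mi / c1 for mi in m], [vi / c2 for vi in v]


# With a constant gradient, the raw averages stay biased toward zero,
# while the corrected ones recover the gradient and its square
# (up to floating-point rounding).
mu1, mu2 = 0.9, 0.999
grad = [2.0, -1.0]
m, v = [0.0, 0.0], [0.0, 0.0]
for t in range(1, 6):
    m = [mu1 * mi + (1.0 - mu1) * gi for mi, gi in zip(m, grad)]
    v = [mu2 * vi + (1.0 - mu2) * gi * gi for vi, gi in zip(v, grad)]
    m_hat, v_hat = bias_correct(m, v, t, mu1, mu2)
    print(t, m, m_hat)
```

After five steps the raw first moment is still only about 0.819 for a gradient of 2.0, whereas the corrected value is 2.0.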

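The weight update rule for Adam is then:

$$
\theta_i^{(t+1)} = \theta_i^{(t)} - \eta \frac{\hat{m}_i^{(t+1)}}{\sqrt{\hat{v}_i^{(t+1)}} + \varepsilon}
$$

where η is the learning rate (0.001 in the paper) and ε is a small constant (10^-8 in the paper) that prevents division by zero.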
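
Putting the moving averages, the bias correction, and the update rule together gives a complete, if minimal, Adam loop. This is a sketch under stated assumptions, not a production optimizer: parameters are a list of floats, `grad_fn` is a hypothetical user-supplied function returning one partial derivative per parameter, and the quadratic test problem is chosen only for illustration.

```python
import math


def adam_minimize(grad_fn, theta, lr=0.001, mu1=0.9, mu2=0.999, eps=1e-8, steps=1000):
    """Minimal Adam loop on a list of scalar parameters (illustrative sketch)."""
    theta = list(theta)
    m = [0.0] * len(theta)  # first-moment average, initialized to zero
    v = [0.0] * len(theta)  # second-moment average, initialized to zero
    for t in range(1, steps + 1):
        grad = grad_fn(theta)  # gradient evaluated at the current parameters
        for i, g in enumerate(grad):
            m[i] = mu1 * m[i] + (1.0 - mu1) * g
            v[i] = mu2 * v[i] + (1.0 - mu2) * g * g
            m_hat = m[i] / (1.0 - mu1 ** t)  # bias-corrected first moment
            v_hat = v[i] / (1.0 - mu2 ** t)  # bias-corrected second moment
            theta[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta


# Hypothetical test problem: f(x, y) = (x - 3)^2 + (y + 1)^2, minimum at (3, -1)
def quadratic_grad(theta):
    x, y = theta
    return [2.0 * (x - 3.0), 2.0 * (y + 1.0)]


result = adam_minimize(quadratic_grad, [0.0, 0.0], lr=0.1, steps=2000)
print(result)
```

Starting from (0, 0), the parameters should approach the minimizer (3, -1). Note that in the first iterations each step has a magnitude close to the learning rate, because the bias-corrected ratio m̂/√v̂ is roughly ±1 when the gradient changes slowly.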

Analyzing ...