3.4. Avoiding the Dreaded "Test Escape": Coverage and Regression Test Gaps

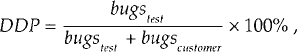

Whatever measurements your manager applies to your performance, one key criterion indicates whether you are doing your job well: the number of test escapes—that is, the number of field-reported bugs that your test team missed but could reasonably have detected during testing. This is quantifiable using the following metric:

where "DDP" stands for "defect detection percentage." We'll look at this metric again in a little more detail in Chapter 4 when we have a database in place for gathering the underlying data.

Note the word "reasonably." If your testers could have found the bug only through unusual and complicated hardware configurations or obscure operations, that bug should not count as a test escape. Moreover, the count of bugs found in the field does not include bugs previously detected by testing; in other words, if the testers found the bug during testing but it was not fixed because of a project management decision, it doesn't count. Finally, note that in many environments one can assume that most field bugs are found in the first three months to a year after deployment, so DDP can be estimated after that period.
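The counting rules above can be sketched in a few lines of Python. This is an illustration only, not the book's tooling: it assumes the commonly used definition of DDP (bugs found by the test team, divided by the sum of those bugs and the test escapes, expressed as a percentage), and the `FieldBug` record, its field names, and both function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class FieldBug:
    """A field-reported bug (hypothetical record layout, for illustration)."""
    bug_id: str
    reasonably_detectable: bool  # could testing reasonably have found it?
    found_earlier_by_test: bool  # already reported by the test team before release?


def count_test_escapes(field_bugs):
    """Count field bugs that qualify as test escapes.

    A field bug counts only if testing could reasonably have found it
    and the test team had not already reported it before release.
    """
    return sum(
        1
        for bug in field_bugs
        if bug.reasonably_detectable and not bug.found_earlier_by_test
    )


def defect_detection_percentage(bugs_found_by_test, test_escapes):
    """Assumed definition: 100 * test bugs / (test bugs + test escapes)."""
    total = bugs_found_by_test + test_escapes
    if total == 0:
        raise ValueError("no bugs recorded; DDP is undefined")
    return 100.0 * bugs_found_by_test / total


# Three field reports, of which only the first is a test escape.
field_bugs = [
    FieldBug("F-1", reasonably_detectable=True, found_earlier_by_test=False),
    FieldBug("F-2", reasonably_detectable=False, found_earlier_by_test=False),
    FieldBug("F-3", reasonably_detectable=True, found_earlier_by_test=True),
]

escapes = count_test_escapes(field_bugs)
ddp = defect_detection_percentage(bugs_found_by_test=99, test_escapes=escapes)
print(f"test escapes: {escapes}, DDP: {ddp:.1f}%")
```

In this sketch, F-2 is excluded because it could not reasonably have been detected, and F-3 is excluded because the test team had already reported it. In practice the field-bug count would be taken only after the post-deployment window described above has elapsed.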

Test escapes usually arise through one or a combination of the following types of problems:

A low-fidelity test system.

While a low-fidelity test system might cover a significant chunk ...

