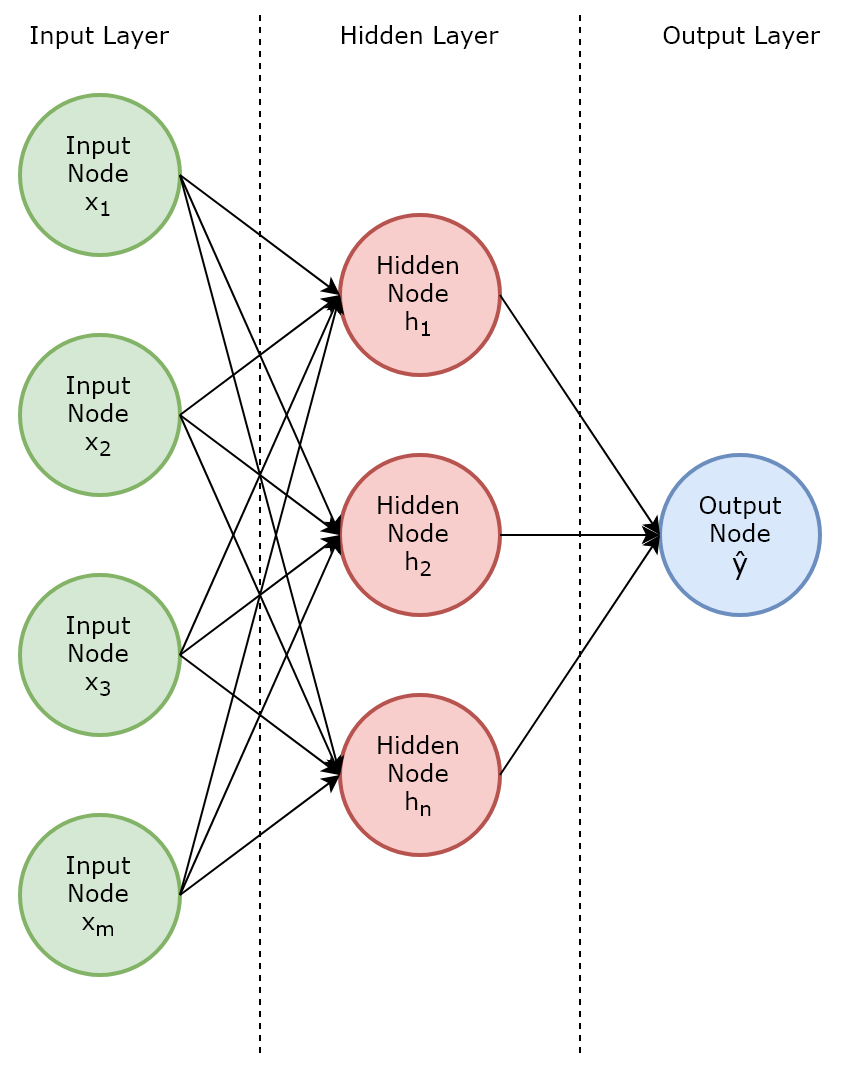

Multi-layer perceptrons differ from single-layer perceptrons in that they introduce one or more hidden layers between the input and output layers, which gives them the ability to learn non-linear functions. Figure 3.11 illustrates the architecture of a multi-layer perceptron containing one hidden layer.
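To make the role of the hidden layer concrete, the following minimal sketch shows a multi-layer perceptron with one hidden layer computing XOR, a function that is not linearly separable and therefore cannot be represented by a single-layer perceptron. The weights and biases here are hand-picked for illustration rather than learned, the step activation is an assumption chosen for simplicity, and the names `step`, `layer`, and `mlp_xor` are hypothetical helpers that do not come from any library.

```python
def step(z):
    """Threshold activation: 1 if the input is positive, else 0."""
    return 1 if z > 0 else 0


def layer(inputs, weights, biases):
    """Compute one fully connected layer followed by the step activation.

    Each row of `weights` holds the incoming weights of one neuron.
    """
    return [
        step(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


# Hand-picked (not learned) parameters, assumed purely for illustration.
# Hidden neuron 1 behaves like OR, hidden neuron 2 behaves like AND.
HIDDEN_W = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_B = [-0.5, -1.5]
# The output neuron fires when OR is on and AND is off, which is XOR.
OUTPUT_W = [[1.0, -1.0]]
OUTPUT_B = [-0.5]


def mlp_xor(x1, x2):
    """Forward pass: input layer -> hidden layer -> output layer."""
    hidden = layer([x1, x2], HIDDEN_W, HIDDEN_B)
    return layer(hidden, OUTPUT_W, OUTPUT_B)[0]


if __name__ == "__main__":
    for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
        print(a, b, mlp_xor(a, b))
```

In practice the weights would be learned rather than set by hand, and a differentiable activation such as the sigmoid would typically replace the step function so that gradients can be computed.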

Backpropagation is a supervised learning process by which multi-layer perceptrons and other ANNs learn, that is, derive an optimal set of weight coefficients. The first step in backpropagation is, in fact, forward propagation, whereby all weights are initially set to random values and the output from the network ...