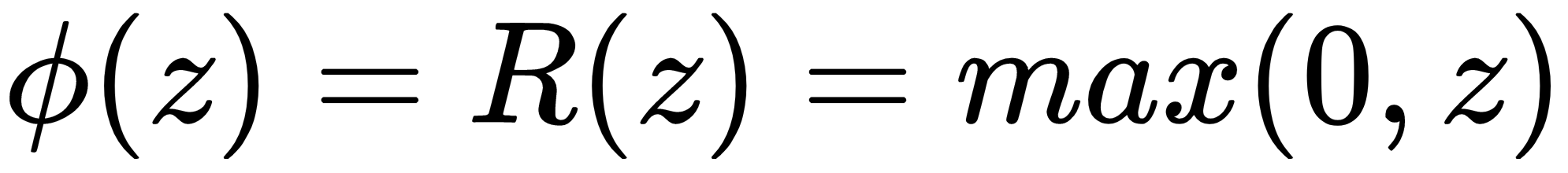

As in other neural networks, an activation function in a CNN defines the output of a node and allows the network to learn nonlinear functions. The relationship between our input data (the RGB pixels that make up the images) and the outputs we want to predict is nonlinear, so we need a nonlinear activation function; without one, any stack of layers would collapse into a single linear transformation. Rectified linear units (ReLUs) are commonly used in CNNs and are defined as follows:

$$f(x) = \max(0, x)$$

In other words, the ReLU function is applied element-wise: it returns 0 for every negative input value and returns the value itself for every positive input value. This is shown in Figure 7.12:

The ReLU function can be plotted as shown ...
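The element-wise behavior of ReLU can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the implementation used by any particular deep learning library; the function names `relu` and `relu_feature_map` and the sample feature map values are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def relu(x: float) -> float:
    """Rectified linear unit: f(x) = max(0, x)."""
    return max(0.0, x)


def relu_feature_map(feature_map: Matrix) -> Matrix:
    """Apply ReLU element-wise to a 2-D feature map given as a list of rows.

    Hypothetical helper for illustration only.
    """
    return [[relu(value) for value in row] for row in feature_map]


# Hypothetical 2x3 feature map, e.g. the output of a convolution.
feature_map = [
    [-3.0, 1.5, 0.0],
    [2.0, -0.5, 4.0],
]

print(relu_feature_map(feature_map))
# [[0.0, 1.5, 0.0], [2.0, 0.0, 4.0]]
```

Negative entries are replaced by 0 and non-negative entries pass through unchanged, so the output keeps the same shape as the input feature map.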
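To see why the activation must be nonlinear, the sketch below stacks two small layers with hypothetical weights `w1` and `w2` (no biases, chosen only for illustration). Without an activation, the two layers reduce to a single matrix `w2 @ w1`, and the network satisfies f(x) + f(-x) = 0, as any linear map must. Inserting ReLU between the layers breaks that property. All names and numbers here are illustrative assumptions.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Multiply matrix w by vector x (a linear layer without bias)."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply matrices a and b."""
    cols = list(zip(*b))
    return [[sum(aik * bkj for aik, bkj in zip(row, col)) for col in cols] for row in a]


def add(u: Vector, v: Vector) -> Vector:
    """Element-wise vector addition."""
    return [a + b for a, b in zip(u, v)]


def relu_vec(x: Vector) -> Vector:
    """Apply ReLU, max(0, x), to each element."""
    return [max(0.0, value) for value in x]


# Hypothetical weights for two tiny layers.
w1 = [[1.0, -2.0], [3.0, 0.5]]
w2 = [[0.5, 1.0], [-1.0, 2.0]]


def linear_net(x: Vector) -> Vector:
    """Two stacked layers with no activation in between."""
    return matvec(w2, matvec(w1, x))


def relu_net(x: Vector) -> Vector:
    """The same two layers with ReLU between them."""
    return matvec(w2, relu_vec(matvec(w1, x)))


x = [1.0, 2.0]
neg_x = [-v for v in x]

# Without an activation, the stack equals one layer with weights w2 @ w1.
print(linear_net(x), matvec(matmul(w2, w1), x))  # [2.5, 11.0] [2.5, 11.0]

# A linear map satisfies f(x) + f(-x) = 0 ...
print(add(linear_net(x), linear_net(neg_x)))  # [0.0, 0.0]

# ... but the ReLU network does not, so it is no longer linear.
print(add(relu_net(x), relu_net(neg_x)))  # [5.5, 5.0]
```

A single input where f(x) + f(-x) is nonzero is enough to show that the ReLU network is not a linear map, which is what lets stacked layers represent nonlinear relationships.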