Chapter 4. Routing Engine Protection and DDoS Prevention

This chapter builds upon the last by providing a concrete example of stateless firewall filter and policer usage in the context of a Routing Engine protection filter, and also demonstrates the new Trio-specific DDoS prevention feature that hardens the already robust Junos control plane with no explicit configuration required.

The RE protection topics discussed include:

IPv4 and IPv6 control plane protection filter case study

DDoS feature overview

DDoS protection case study

Mitigating DDoS with BGP flow specification

BGP flow-specification case study

RE Protection Case Study

This case study presents a current best practice example of a stateless filter to protect an MX router’s IPv4 and IPv6 control plane. In addition, the DDoS detection feature, available on Trio-based MX routers starting with release v11.2, is examined and then combined with RE filtering to harden the router against unauthorized access and resource depletion.

As networks become more critical, security and high availability become ever more crucial. The need for secure access to network infrastructure, from user-level authentication and authorization all the way to the configuration and use of secure access protocols like SSH, is a given, so much so that these topics have been covered in many recent books. To avoid rehashing the same information, readers interested in these topics are directed to Junos Enterprise Routing, Second Edition, by O’Reilly Media.

The goal of this section is to provide an up-to-date example of a strong RE protection filter for both IPv4 and IPv6, and to address the topic of why basic filters may not guard against resource depletion, which, if allowed to go unchecked, can halt a router’s operation just as effectively as any “hacker” who gains unauthorized access to the system with nefarious intent.

The topic of router security is complex and widespread. So much so that informational RFC 6192 was produced to outline IPv4 and IPv6 filtering best practices, along with example filters for both IOS- and Junos OS-based products. There is much overlap between the examples in this section and the RFC’s suggestions, which is a good sign, as you can never have too many smart people thinking about security. It’s good to see different approaches and techniques as well as a confirmation that many complex problems have common solutions that have been well tested.

IPv4 RE Protection Filter

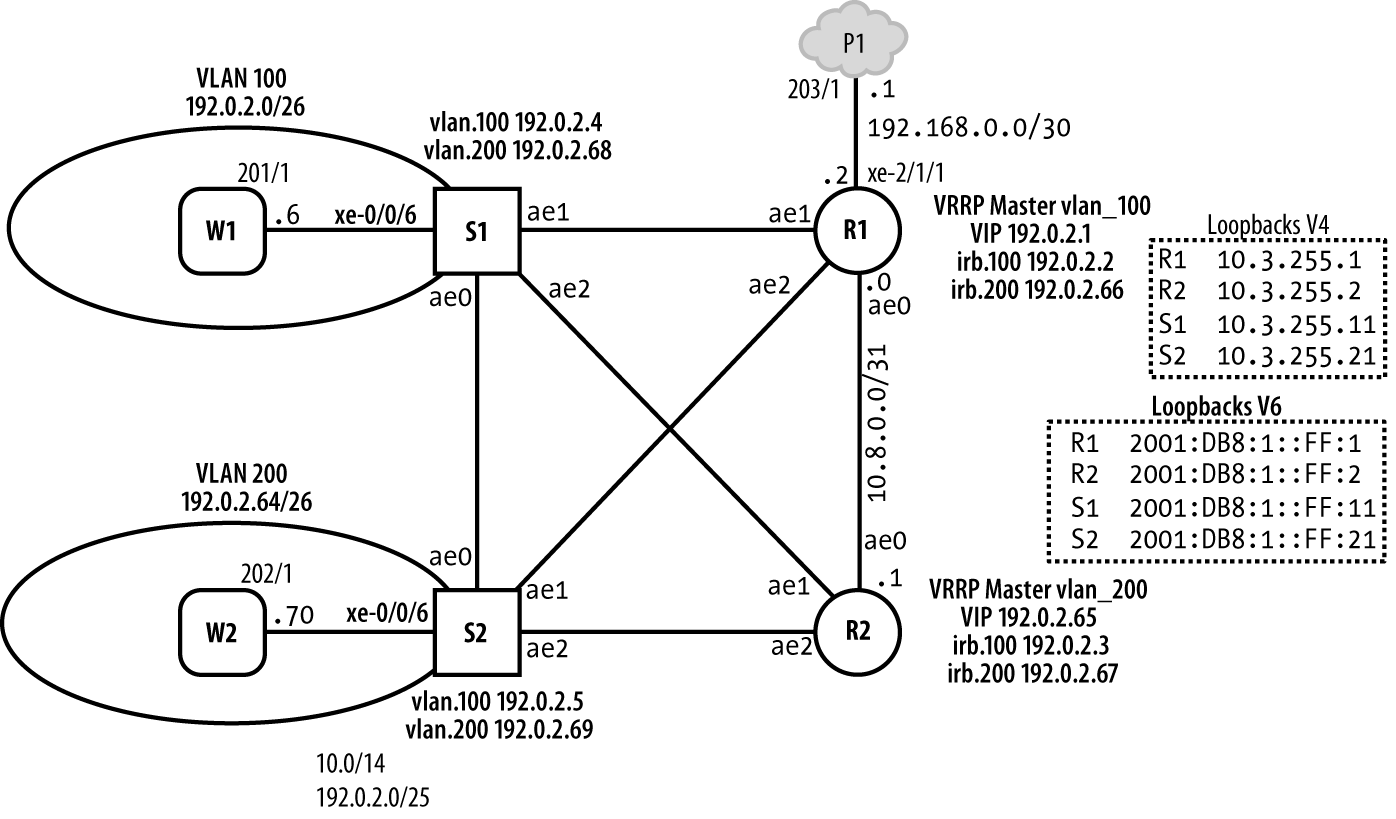

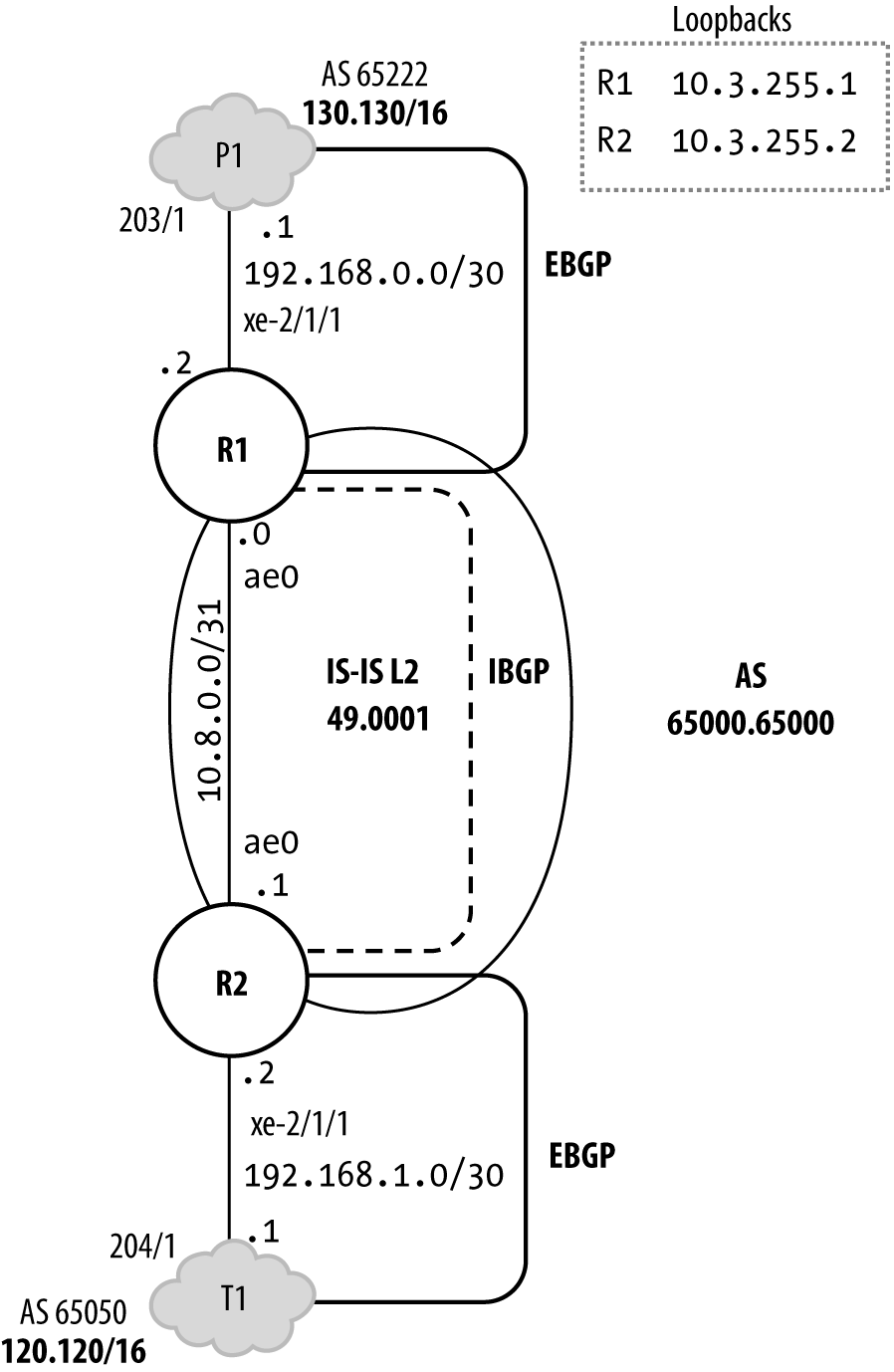

This section provides the reader with a current best practice example of an RE protection filter for IPv4 traffic. Protection filters are applied in the input direction to filter traffic arriving on PFE or management ports before it’s processed by the RE. Output filters are generally used for CoS marking of locally generated control plane traffic, as opposed to security-related reasons, as you generally trust your own routers and the traffic they originate. Figure 4-1 provides the topology details that surround this case study.

Figure 4-1. DDoS protection lab topology

The example, used with permission from Juniper Networks Books, is taken from Day One: Securing the Routing Engine by Douglas Hanks, also coauthor of this book.

Warning

Router security is no small matter. The reader is encouraged to examine the filter carefully before adapting it for use in his or her own network.

The principles behind the filter’s operation and the specific rationale behind its design framework are explained in the Day One book, and so are not repeated here in the interest of brevity. The filter is included here as a case study example for several reasons:

RE protection is important and needed, and this is a really good filter. There’s no point in recreating an already perfectly round wheel, and the Day One book is freely available as a PDF.

The example makes great use of some important Junos features that are not necessarily MX-specific, and so have not been covered in this chapter, including filter nesting (a filter calling another filter), apply-path, and prefix-list. All are powerful tools that can make managing and understanding a complex filter much simpler. The examples also make use of the apply-flags omit statement. This flag results in the related configuration block not being displayed in a show configuration command, unless you pipe the results to display omit. Again, while not a filter-specific feature, this is another cool Junos capability that can be utilized to make living with long filters that much easier.

It’s a good test of this chapter and the reader’s comprehension of the same. This is a real-world example of a complex filter that solves a real issue. While specific protocol nuances, such as the specific multicast addresses used by OSPF, may not be known, having arrived here, the reader should be able to follow the filter’s operation and use of policing with little guidance.

The example is comprehensive, providing support for virtually all known legitimate routing protocols and services; be sure to remove support for any protocols or services that are not currently used, either by deleting the filter in question or by simply not including that filter in the list of filters that you ultimately apply to the lo0 interface. For example, as IS-IS is used in the current lab, there is currently no need for any OSPF-specific filter. Also, be sure to confirm that the prefix lists contain all addresses that should be able to reach the related service or protocol.

When first applying the filter list, you should replace the final discard-all term with one that matches all with an accept and log action. This is done as a safeguard to prevent service disruption in the event that a valid service or protocol has not been accommodated by a previous term. After applying the filter, pay special attention to any log hits indicating traffic has made it to the final catch-all term, as this may indicate you have more filter work to do.

Warning

Before applying any RE filter, you should carefully evaluate both the filters/terms and their application order to confirm that all valid services and remote access methods are allowed. In addition, you must also edit the sample prefix lists to ensure they accurately reflect all internal and external addresses from where the related services should be reachable. Whenever making this type of change, console access should be available in the event that recovery is needed, and you should strongly consider the use of the commit confirmed command.

When your filter is correctly matched to the particulars of your network, the only traffic that should fall through to the final term should be that which is unsupported and therefore unneeded, and safe to drop. Once this is confirmed, you should make the discard-all filter the last in the chain; its ongoing count and logging actions simplify future troubleshooting when a new service is added and no one can figure out why it’s not working. Yes, true security is a pain, but far less so in the long run than the lack of, or worse yet, a false sense of security!

Let’s begin with the policy-related configuration where prefix lists are defined in such a way that they automatically populate with addresses assigned to the system itself, as well as well-known addresses associated with common protocols. This small bit of upfront work makes later address-based matches a snap and helps ensure that ongoing address and peer definition changes are painless, as the filter automatically keeps up. Note that the sample expressions catch all addresses assigned, including those on the management network and GRE tunnels, etc. The sample presumes some use of logical systems (a feature previously known as logical routers). Where not applicable you can safely omit the related prefix list.

{master}[edit]

user1@R1-RE0# show policy-options | no-more

prefix-list router-ipv4 {

apply-path "interfaces <*> unit <*> family inet address <*>";

}

prefix-list bgp-neighbors {

apply-path "protocols bgp group <*> neighbor <*>";

}

prefix-list ospf {

224.0.0.5/32;

224.0.0.6/32;

}

prefix-list rfc1918 {

10.0.0.0/8;

172.16.0.0/12;

192.168.0.0/16;

}

prefix-list rip {

224.0.0.9/32;

}

prefix-list vrrp {

224.0.0.18/32;

}

prefix-list multicast-all-routers {

224.0.0.2/32;

}

prefix-list router-ipv4-logical-systems {

apply-path "logical-systems <*> interfaces <*> unit <*> family inet address <*>";

}

prefix-list bgp-neighbors-logical-systems {

apply-path "logical-systems <*> protocols bgp group <*> neighbor <*>";

}

prefix-list radius-servers {

apply-path "system radius-server <*>";

}

prefix-list tacas-servers {

apply-path "system tacplus-server <*>";

}

prefix-list ntp-server {

apply-path "system ntp server <*>";

}

prefix-list snmp-client-lists {

apply-path "snmp client-list <*> <*>";

}

prefix-list snmp-community-clients {

apply-path "snmp community <*> clients <*>";

}

prefix-list localhost {

127.0.0.1/32;

}

prefix-list ntp-server-peers {

apply-path "system ntp peer <*>";

}

prefix-list dns-servers {

apply-path "system name-server <*>";

}

You can confirm your apply-path and prefix lists are doing what you expect by showing the list and piping the output to display inheritance. Again, it’s critical that your prefix lists contain all expected addresses from where a service should be reachable, so spending some time here to confirm the regular expressions work as expected is time well spent. Here, the results of the router-ipv4 apply-path regular expression are examined.

{master}[edit]

jnpr@R1-RE0# show policy-options prefix-list router-ipv4

apply-path "interfaces <*> unit <*> family inet address <*>";

{master}[edit]

jnpr@R1-RE0# show policy-options prefix-list router-ipv4 | display inheritance

##

## apply-path was expanded to:

## 192.168.0.0/30;

## 10.8.0.0/31;

## 192.0.2.0/26;

## 192.0.2.64/26;

## 10.3.255.1/32;

## 172.19.90.0/23;

##

apply-path "interfaces <*> unit <*> family inet address <*>";

If you do not see one or more commented prefixes, as in this example, then either the related configuration does not exist or there is a problem in your path statement. As additional confirmation, consider the sample BGP stanza added to R1, consisting of three BGP peer groups: two IPv6 and one IPv4:

{master}[edit]

jnpr@R1-RE0# show protocols bgp

group int_v4 {

type internal;

local-address 10.3.255.1;

neighbor 10.3.255.2;

}

group ebgp_v6 {

type external;

peer-as 65010;

neighbor fd1e:63ba:e9dc:1::1;

}

group int_v6 {

type internal;

local-address 2001:db8:1::ff:1;

neighbor 2001:db8:1::ff:2;

}

Once again, the related prefix lists are confirmed to contain all expected entries:

{master}[edit]

jnpr@R1-RE0# show policy-options prefix-list bgp-neighbors_v4 | display inheritance

##

## apply-path was expanded to:

## 10.3.255.2/32;

##

apply-path "protocols bgp group <*_v4> neighbor <*>";

{master}[edit]

jnpr@R1-RE0# show policy-options prefix-list bgp-neighbors_v6 | display inheritance

##

## apply-path was expanded to:

## fd1e:63ba:e9dc:1::1/128;

## 2001:db8:1::ff:2/128;

##

apply-path "protocols bgp group <*_v6> neighbor <*>";
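To make the expansion concrete, the following is a minimal Python sketch of how a wildcard path of this kind could be resolved against a configuration tree. It is an illustration only and not how Junos implements apply-path: the nested-dictionary CONFIG model and the expand_apply_path function are hypothetical, and shell-style wildcards from Python's fnmatch module stand in for the patterns inside the angle brackets. The sketch assumes the path ends on address identifiers.

```python
import ipaddress
from fnmatch import fnmatchcase

# Hypothetical, simplified model of the BGP stanza shown above (nested dicts).
CONFIG = {
    "protocols": {
        "bgp": {
            "group": {
                "int_v4": {"neighbor": {"10.3.255.2": {}}},
                "ebgp_v6": {"neighbor": {"fd1e:63ba:e9dc:1::1": {}}},
                "int_v6": {"neighbor": {"2001:db8:1::ff:2": {}}},
            }
        }
    }
}


def expand_apply_path(config, path):
    """Return the prefixes matched by the last element of an apply-path-like string."""
    nodes, matched = [config], []
    for element in path.split():
        is_wildcard = element.startswith("<") and element.endswith(">")
        next_nodes, matched = [], []
        for node in nodes:
            for key, child in node.items():
                # <pattern> elements match by wildcard; anything else must match exactly.
                hit = fnmatchcase(key, element[1:-1]) if is_wildcard else key == element
                if hit:
                    next_nodes.append(child)
                    matched.append(key)
        nodes = next_nodes
    # A bare host address becomes a /32 (IPv4) or /128 (IPv6) prefix.
    return [str(ipaddress.ip_network(key)) for key in matched]


v4_prefixes = expand_apply_path(CONFIG, "protocols bgp group <*_v4> neighbor <*>")
v6_prefixes = expand_apply_path(CONFIG, "protocols bgp group <*_v6> neighbor <*>")
```

In this model a path that matches nothing returns an empty list, which corresponds to seeing no commented prefixes in the display inheritance output.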

And now, the actual filter. It’s a long one, but security is never easy and is more an ongoing process than a one-point solution anyway. At least the comprehensive nature of the filter means it’s easy to grow into new services or protocols as you simply have to apply the related filters when the new service is turned up:

{master}[edit]

jnpr@R1-RE0# show firewall family inet | no-more

prefix-action management-police-set { /* OMITTED */ };

prefix-action management-high-police-set { /* OMITTED */ };

filter accept-bgp { /* OMITTED */ };

filter accept-ospf { /* OMITTED */ };

filter accept-rip { /* OMITTED */ };

filter accept-vrrp { /* OMITTED */ };

filter accept-ssh { /* OMITTED */ };

filter accept-snmp { /* OMITTED */ };

filter accept-ntp { /* OMITTED */ };

filter accept-web { /* OMITTED */ };

filter discard-all { /* OMITTED */ };

filter accept-traceroute { /* OMITTED */ };

filter accept-igp { /* OMITTED */ };

filter accept-common-services { /* OMITTED */ };

filter accept-sh-bfd { /* OMITTED */ };

filter accept-ldp { /* OMITTED */ };

filter accept-ftp { /* OMITTED */ };

filter accept-rsvp { /* OMITTED */ };

filter accept-radius { /* OMITTED */ };

filter accept-tacas { /* OMITTED */ };

filter accept-remote-auth { /* OMITTED */ };

filter accept-telnet { /* OMITTED */ };

filter accept-dns { /* OMITTED */ };

filter accept-ldp-rsvp { /* OMITTED */ };

filter accept-established { /* OMITTED */ };

filter accept-all { /* OMITTED */ };

filter accept-icmp { /* OMITTED */ };

filter discard-frags { /* OMITTED */ };

Not much to see, given the omit flag is in play. Easy enough to fix:

{master}[edit]

jnpr@R1-RE0# show firewall family inet | no-more | display omit

prefix-action management-police-set {

apply-flags omit;

policer management-1m;

count;

filter-specific;

subnet-prefix-length 24;

destination-prefix-length 32;

}

prefix-action management-high-police-set {

apply-flags omit;

policer management-5m;

count;

filter-specific;

subnet-prefix-length 24;

destination-prefix-length 32;

}

filter accept-bgp {

apply-flags omit;

term accept-bgp {

from {

source-prefix-list {

bgp-neighbors_v4;

bgp-neighbors-logical-systems_v4;

}

protocol tcp;

port bgp;

}

then {

count accept-bgp;

accept;

}

}

}

filter accept-ospf {

apply-flags omit;

term accept-ospf {

from {

source-prefix-list {

router-ipv4;

router-ipv4-logical-systems ;

}

destination-prefix-list {

router-ipv4;

ospf;

router-ipv4-logical-systems ;

}

protocol ospf;

}

then {

count accept-ospf;

accept;

}

}

}

filter accept-rip {

apply-flags omit;

term accept-rip {

from {

source-prefix-list {

router-ipv4;

router-ipv4-logical-systems ;

}

destination-prefix-list {

rip;

}

protocol udp;

destination-port rip;

}

then {

count accept-rip;

accept;

}

}

term accept-rip-igmp {

from {

source-prefix-list {

router-ipv4;

router-ipv4-logical-systems ;

}

destination-prefix-list {

rip;

}

protocol igmp;

}

then {

count accept-rip-igmp;

accept;

}

}

}

filter accept-vrrp {

apply-flags omit;

term accept-vrrp {

from {

source-prefix-list {

router-ipv4;

router-ipv4-logical-systems ;

}

destination-prefix-list {

vrrp;

}

protocol [ vrrp ah ];

}

then {

count accept-vrrp;

accept;

}

}

}

filter accept-ssh {

apply-flags omit;

term accept-ssh {

from {

source-prefix-list {

rfc1918;

}

destination-prefix-list {

router-ipv4;

router-ipv4-logical-systems ;

}

protocol tcp;

destination-port ssh;

}

then {

policer management-5m;

count accept-ssh;

accept;

}

}

}

filter accept-snmp {

apply-flags omit;

term accept-snmp {

from {

source-prefix-list {

snmp-client-lists;

snmp-community-clients;

}

destination-prefix-list {

router-ipv4;

router-ipv4-logical-systems ;

}

protocol udp;

destination-port snmp;

}

then {

policer management-5m;

count accept-snmp;

accept;

}

}

}

filter accept-ntp {

apply-flags omit;

term accept-ntp {

from {

source-prefix-list {

ntp-server;

}

destination-prefix-list {

router-ipv4;

router-ipv4-logical-systems ;

}

protocol udp;

port ntp;

}

then {

policer management-1m;

count accept-ntp;

accept;

}

}

term accept-ntp-peer {

from {

source-prefix-list {

ntp-server-peers;

}

destination-prefix-list {

router-ipv4;

router-ipv4-logical-systems ;

}

protocol udp;

destination-port ntp;

}

then {

policer management-1m;

count accept-ntp-peer;

accept;

}

}

term accept-ntp-server {

from {

source-prefix-list {

rfc1918;

}

destination-prefix-list {

router-ipv4;

router-ipv4-logical-systems ;

}

protocol udp;

destination-port ntp;

}

then {

policer management-1m;

count accept-ntp-server;

accept;

}

}

}

filter accept-web {

apply-flags omit;

term accept-web {

from {

source-prefix-list {

rfc1918;

}

destination-prefix-list {

router-ipv4;

router-ipv4-logical-systems ;

}

protocol tcp;

destination-port [ http https ];

}

then {

policer management-5m;

count accept-web;

accept;

}

}

}

filter discard-all {

apply-flags omit;

term discard-ip-options {

from {

ip-options any;

}

then {

count discard-ip-options;

log;

syslog;

discard;

}

}

term discard-TTL_1-unknown {

from {

ttl 1;

}

then {

count discard-all-TTL_1-unknown;

log;

syslog;

discard;

}

}

term discard-tcp {

from {

protocol tcp;

}

then {

count discard-tcp;

log;

syslog;

discard;

}

}

term discard-netbios {

from {

protocol udp;

destination-port 137;

}

then {

count discard-netbios;

log;

syslog;

discard;

}

}

term discard-udp {

from {

protocol udp;

}

then {

count discard-udp;

log;

syslog;

discard;

}

}

term discard-icmp {

from {

protocol icmp;

}

then {

count discard-icmp;

log;

syslog;

discard;

}

}

term discard-unknown {

then {

count discard-unknown;

log;

syslog;

discard;

}

}

}

filter accept-traceroute {

apply-flags omit;

term accept-traceroute-udp {

from {

destination-prefix-list {

router-ipv4;

router-ipv4-logical-systems ;

}

protocol udp;

ttl 1;

destination-port 33435-33450;

}

then {

policer management-1m;

count accept-traceroute-udp;

accept;

}

}

term accept-traceroute-icmp {

from {

destination-prefix-list {

router-ipv4;

router-ipv4-logical-systems ;

}

protocol icmp;

ttl 1;

icmp-type [ echo-request timestamp time-exceeded ];

}

then {

policer management-1m;

count accept-traceroute-icmp;

accept;

}

}

term accept-traceroute-tcp {

from {

destination-prefix-list {

router-ipv4;

router-ipv4-logical-systems ;

}

protocol tcp;

ttl 1;

}

then {

policer management-1m;

count accept-traceroute-tcp;

accept;

}

}

}

filter accept-igp {

apply-flags omit;

term accept-ospf {

filter accept-ospf;

}

term accept-rip {

filter accept-rip;

}

}

filter accept-common-services {

apply-flags omit;

term accept-icmp {

filter accept-icmp;

}

term accept-traceroute {

filter accept-traceroute;

}

term accept-ssh {

filter accept-ssh;

}

term accept-snmp {

filter accept-snmp;

}

term accept-ntp {

filter accept-ntp;

}

term accept-web {

filter accept-web;

}

term accept-dns {

filter accept-dns;

}

}

filter accept-sh-bfd {

apply-flags omit;

term accept-sh-bfd {

from {

source-prefix-list {

router-ipv4;

router-ipv4-logical-systems ;

}

destination-prefix-list {

router-ipv4;

router-ipv4-logical-systems ;

}

protocol udp;

source-port 49152-65535;

destination-port 3784-3785;

}

then {

count accept-sh-bfd;

accept;

}

}

}

filter accept-ldp {

apply-flags omit;

term accept-ldp-discover {

from {

source-prefix-list {

router-ipv4;

router-ipv4-logical-systems ;

}

destination-prefix-list {

multicast-all-routers;

}

protocol udp;

destination-port ldp;

}

then {

count accept-ldp-discover;

accept;

}

}

term accept-ldp-unicast {

from {

source-prefix-list {

router-ipv4;

router-ipv4-logical-systems ;

}

destination-prefix-list {

router-ipv4;

router-ipv4-logical-systems ;

}

protocol tcp;

port ldp;

}

then {

count accept-ldp-unicast;

accept;

}

}

term accept-tldp-discover {

from {

destination-prefix-list {

router-ipv4;

router-ipv4-logical-systems ;

}

protocol udp;

destination-port ldp;

}

then {

count accept-tldp-discover;

accept;

}

}

term accept-ldp-igmp {

from {

source-prefix-list {

router-ipv4;

router-ipv4-logical-systems ;

}

destination-prefix-list {

multicast-all-routers;

}

protocol igmp;

}

then {

count accept-ldp-igmp;

accept;

}

}

}

filter accept-ftp {

apply-flags omit;

term accept-ftp {

from {

source-prefix-list {

rfc1918;

}

destination-prefix-list {

router-ipv4;

router-ipv4-logical-systems ;

}

protocol tcp;

port [ ftp ftp-data ];

}

then {

policer management-5m;

count accept-ftp;

accept;

}

}

}

filter accept-rsvp {

apply-flags omit;

term accept-rsvp {

from {

destination-prefix-list {

router-ipv4;

router-ipv4-logical-systems ;

}

protocol rsvp;

}

then {

count accept-rsvp;

accept;

}

}

}

filter accept-radius {

apply-flags omit;

term accept-radius {

from {

source-prefix-list {

radius-servers;

}

destination-prefix-list {

router-ipv4;

router-ipv4-logical-systems ;

}

protocol udp;

source-port [ radacct radius ];

tcp-established;

}

then {

policer management-1m;

count accept-radius;

accept;

}

}

}

filter accept-tacas {

apply-flags omit;

term accept-tacas {

from {

source-prefix-list {

tacas-servers;

}

destination-prefix-list {

router-ipv4;

router-ipv4-logical-systems ;

}

protocol [ tcp udp ];

source-port [ tacacs tacacs-ds ];

tcp-established;

}

then {

policer management-1m;

count accept-tacas;

accept;

}

}

}

filter accept-remote-auth {

apply-flags omit;

term accept-radius {

filter accept-radius;

}

term accept-tacas {

filter accept-tacas;

}

}

filter accept-telnet {

apply-flags omit;

term accept-telnet {

from {

source-prefix-list {

rfc1918;

}

destination-prefix-list {

router-ipv4;

router-ipv4-logical-systems ;

}

protocol tcp;

destination-port telnet;

}

then {

policer management-1m;

count accept-telnet;

accept;

}

}

}

filter accept-dns {

apply-flags omit;

term accept-dns {

from {

source-prefix-list {

dns-servers;

}

destination-prefix-list {

router-ipv4;

router-ipv4-logical-systems ;

}

protocol [ udp tcp ];

source-port 53;

}

then {

policer management-1m;

count accept-dns;

accept;

}

}

}

filter accept-ldp-rsvp {

apply-flags omit;

term accept-ldp {

filter accept-ldp;

}

term accept-rsvp {

filter accept-rsvp;

}

}

filter accept-established {

apply-flags omit;

term accept-established-tcp-ssh {

from {

destination-prefix-list {

router-ipv4;

router-ipv4-logical-systems ;

}

source-port ssh;

tcp-established;

}

then {

policer management-5m;

count accept-established-tcp-ssh;

accept;

}

}

term accept-established-tcp-ftp {

from {

destination-prefix-list {

router-ipv4;

router-ipv4-logical-systems ;

}

source-port ftp;

tcp-established;

}

then {

policer management-5m;

count accept-established-tcp-ftp;

accept;

}

}

term accept-established-tcp-ftp-data-syn {

from {

destination-prefix-list {

router-ipv4;

router-ipv4-logical-systems ;

}

source-port ftp-data;

tcp-initial;

}

then {

policer management-5m;

count accept-established-tcp-ftp-data-syn;

accept;

}

}

term accept-established-tcp-ftp-data {

from {

destination-prefix-list {

router-ipv4;

router-ipv4-logical-systems ;

}

source-port ftp-data;

tcp-established;

}

then {

policer management-5m;

count accept-established-tcp-ftp-data;

accept;

}

}

term accept-established-tcp-telnet {

from {

destination-prefix-list {

router-ipv4;

router-ipv4-logical-systems ;

}

source-port telnet;

tcp-established;

}

then {

policer management-5m;

count accept-established-tcp-telnet;

accept;

}

}

term accept-established-tcp-fetch {

from {

destination-prefix-list {

router-ipv4;

router-ipv4-logical-systems ;

}

source-port [ http https ];

tcp-established;

}

then {

policer management-5m;

count accept-established-tcp-fetch;

accept;

}

}

term accept-established-udp-ephemeral {

from {

destination-prefix-list {

router-ipv4;

router-ipv4-logical-systems ;

}

protocol udp;

destination-port 49152-65535;

}

then {

policer management-5m;

count accept-established-udp-ephemeral;

accept;

}

}

}

filter accept-all {

apply-flags omit;

term accept-all-tcp {

from {

protocol tcp;

}

then {

count accept-all-tcp;

log;

syslog;

accept;

}

}

term accept-all-udp {

from {

protocol udp;

}

then {

count accept-all-udp;

log;

syslog;

accept;

}

}

term accept-all-igmp {

from {

protocol igmp;

}

then {

count accept-all-igmp;

log;

syslog;

accept;

}

}

term accept-icmp {

from {

protocol icmp;

}

then {

count accept-all-icmp;

log;

syslog;

accept;

}

}

term accept-all-unknown {

then {

count accept-all-unknown;

log;

syslog;

accept;

}

}

}

filter accept-icmp {

apply-flags omit;

term no-icmp-fragments {

from {

is-fragment;

protocol icmp;

}

then {

count no-icmp-fragments;

log;

discard;

}

}

term accept-icmp {

from {

protocol icmp;

ttl-except 1;

icmp-type [ echo-reply echo-request time-exceeded unreachable source-quench router-advertisement parameter-problem ];

}

then {

policer management-5m;

count accept-icmp;

accept;

}

}

}

filter discard-frags {

term 1 {

from {

first-fragment;

}

then {

count deny-first-frags;

discard;

}

}

term 2 {

from {

is-fragment;

}

then {

count deny-other-frags;

discard;

}

}

}

After all that work, don’t forget to actually apply all applicable filters as an input-list under family inet on the lo0 interface. Before making any changes, however, please carefully consider the following suggestions.

Before actually activating the lo0 application of the IPv4 protection filter, you should:

Confirm that all prefix lists are accurate for your networks and that they encompass the necessary address ranges.

Confirm that all valid services and remote access protocols are accepted in a filter, and that the filter is included in the input list; for example, in Day One: Securing the Routing Engine on M, MX, and T Series, the accept-telnet filter is not actually applied because Telnet is a nonsecure protocol, and frankly should never be used in a production network. While Telnet is used to access the testbed needed to develop this material, making the absence of the accept-telnet filter pretty obvious at time of commit . . . don’t ask me how I know this.

Make sure the filter initially ends in a match-all term with accept and log actions to make sure no valid services are denied.

Consider using commit confirmed for this type of change. Again, don’t ask me how I know, but there is a hint in the preceding paragraphs.

The final RE protection filters used in this case study were modified from the example used in the Day One book in the following ways:

The accept-telnet filter is applied in the list; as a lab, Telnet is deemed acceptable. The OSPF and RIP filters are omitted as not in use or planned in the near future.

The accept-icmp filter is modified to no longer match on fragments; this function is replaced with a global deny fragments filter that’s applied at the front of the filter list. See the related sidebar.

The list of filters applied to the lo0 interface of R1 for this example is shown; note that the list now begins with the discard-frags filter, includes the accept-telnet filter, and ends with the discard-all filter. Again, for initial application in a production network, consider using a final match-all filter with accept and log actions to first confirm that no valid services are falling through to the final term before switching over to a final discard action.

The filter list does not include the accept-ospf or accept-rip filters as the current test bed is using IS-IS, which cannot be affected by an inet family filter anyway. It’s worth noting that the accept-sh-bfd filter is so named because the port range specified allows single-hop BFD sessions only. According to draft-ietf-bfd-multihop-09.txt, “BFD for Multihop Paths” (now RFC 5883), multihop BFD sessions must use UDP destination port 4784:

{master}[edit]

user@R1-RE0# show interfaces lo0

unit 0 {

family inet {

filter {

input-list [ discard-frags accept-sh-bfd accept-bgp accept-ldp accept-rsvp accept-telnet accept-common-services discard-all ];

}

address 10.3.255.1/32;

}

family iso {

address 49.0001.0100.0325.5001.00;

}

family inet6 {

address 2001:db8:1::ff:1/128;

}

}
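The port-based distinction between single-hop and multihop BFD noted before the listing can be sketched in a few lines of Python. This is a simplified model of the accept-sh-bfd term only: the function and constant names are hypothetical, and the source and destination prefix-list matches are deliberately left out.

```python
# Port values as used in the accept-sh-bfd term and in RFC 5883.
SINGLE_HOP_BFD_PORTS = range(3784, 3786)   # 3784 (control) and 3785 (echo)
MULTIHOP_BFD_PORT = 4784                   # multihop BFD destination port
EPHEMERAL_PORTS = range(49152, 65536)      # source-port 49152-65535 in the term


def matches_accept_sh_bfd(protocol, source_port, destination_port):
    """Model of the port and protocol matches in the accept-sh-bfd term."""
    return (
        protocol == "udp"
        and source_port in EPHEMERAL_PORTS
        and destination_port in SINGLE_HOP_BFD_PORTS
    )


single_hop_ok = matches_accept_sh_bfd("udp", 49152, 3784)
multihop_ok = matches_accept_sh_bfd("udp", 49152, MULTIHOP_BFD_PORT)
```

In this model a multihop session falls through the term, so a network that relies on multihop BFD would need an additional term matching destination port 4784.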

Warning

Pay attention to the filter order in the input-list to avoid any side effects. In the case above, if you place the accept-common-services filter before the accept-bgp filter, you could rate-limit the eBGP sessions. This is because accept-common-services rate-limits TCP traceroute (TTL=1), and eBGP (non-multihop) sessions use TCP packets with the TTL set to one.
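The ordering effect is easier to see in a small simulation. The following Python sketch is a hypothetical model, not Junos behavior in full: each filter is reduced to a function that returns a (term, policer) pair on a match, only the accept-traceroute-tcp term of accept-common-services is modeled, destination prefix lists are ignored, and the eBGP peer address 198.51.100.1 is an assumption chosen from documentation address space.

```python
BGP_PORT = 179
BGP_NEIGHBORS = {"198.51.100.1"}  # hypothetical eBGP peer


def accept_bgp(pkt):
    """Model of accept-bgp: TCP to or from the BGP port, from a known neighbor."""
    if (
        pkt["protocol"] == "tcp"
        and pkt["src"] in BGP_NEIGHBORS
        and BGP_PORT in (pkt["sport"], pkt["dport"])
    ):
        return ("accept-bgp", None)  # accepted with no policer
    return None


def accept_common_services(pkt):
    """Model of only the accept-traceroute-tcp term reached via accept-common-services."""
    if pkt["protocol"] == "tcp" and pkt["ttl"] == 1:
        return ("accept-traceroute-tcp", "management-1m")  # accepted, but policed
    return None


def evaluate(input_list, pkt):
    """Walk the filters in order; the first matching term decides the packet's fate."""
    for filt in input_list:
        result = filt(pkt)
        if result is not None:
            return result
    return ("discard-all", None)


ebgp_packet = {"protocol": "tcp", "src": "198.51.100.1",
               "sport": 49152, "dport": 179, "ttl": 1}
as_applied = evaluate([accept_bgp, accept_common_services], ebgp_packet)
reordered = evaluate([accept_common_services, accept_bgp], ebgp_packet)
```

With the order used in this case study the packet is accepted by accept-bgp with no policer; with the two filters swapped, the same packet is claimed by the traceroute term and becomes subject to the management-1m policer.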

A syslog file is configured to catch and consolidate any filter-related syslog actions for easy debugging later. Remember, the log action writes to a kernel cache that is overwritten and lost in a reboot, while syslog can support file archiving and remote logging. Here, the local syslog is configured:

jnpr@R1-RE0# show system syslog

file re_filter {

firewall any;

archive size 10m;

}

After committing the filter, and breathing a sigh of relief as you confirm that remote access is still working (this time), let’s quickly look for any issues. To begin with, filter application is confirmed:

{master}[edit]

jnpr@R1-RE0# run show interfaces filters lo0

Interface Admin Link Proto Input Filter Output Filter

lo0 up up

lo0.0 up up inet lo0.0-i

iso

inet6

lo0.16384 up up inet

lo0.16385 up up inet

Next, examine the syslog to see what traffic is falling through unmatched to be discarded:

{master}[edit]

jnpr@R1-RE0# run show log re_filter

Dec 12 12:58:09 R1-RE0 fpc2 PFE_FW_SYSLOG_IP: FW: irb.200 D vrrp 192.0.2.67 224.0.0.18 0 0 (1 packets)

Dec 12 12:58:15 R1-RE0 last message repeated 7 times

Dec 12 12:58:16 R1-RE0 fpc2 PFE_FW_SYSLOG_IP: FW: irb.200 D vrrp 192.0.2.67 224.0.0.18 0 0 (2 packets)

Dec 12 12:58:17 R1-RE0 fpc2 PFE_FW_SYSLOG_IP: FW: irb.200 D vrrp 192.0.2.67 224.0.0.18 0 0 (1 packets)

Dec 12 12:58:21 R1-RE0 last message repeated 4 times

Dec 12 12:58:22 R1-RE0 fpc2 PFE_FW_SYSLOG_IP: FW: irb.200 D vrrp 192.0.2.67 224.0.0.18 0 0 (2 packets)

Dec 12 12:58:23 R1-RE0 fpc2 PFE_FW_SYSLOG_IP: FW: irb.200 D vrrp 192.0.2.67 224.0.0.18 0 0 (1 packets)

Dec 12 12:58:26 R1-RE0 last message repeated 3 times

Dec 12 12:58:27 R1-RE0 fpc2 PFE_FW_SYSLOG_IP: FW: irb.200 D vrrp 192.0.2.67 224.0.0.18 0 0 (2 packets)

Dec 12 12:58:28 R1-RE0 fpc2 PFE_FW_SYSLOG_IP: FW: irb.200 D vrrp 192.0.2.67 224.0.0.18 0 0 (1 packets)
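When the log is longer than a screenful, a quick tally by protocol and destination shows at a glance what is being dropped. The Python sketch below is one possible approach, under stated assumptions: the regular expression is inferred from the sample entries shown here (interface, action, protocol, source, destination, two port fields, packet count), the action letters A, D, and R are assumed to be the only ones that appear, and the "last message repeated" lines are simply ignored rather than multiplied out. The function name tally_discards is hypothetical.

```python
import re
from collections import Counter

# Field layout inferred from the sample PFE_FW_SYSLOG_IP entries.
LOG_RE = re.compile(
    r"PFE_FW_SYSLOG_IP: FW: (?P<interface>\S+)\s+(?P<action>[ADR])\s+"
    r"(?P<protocol>\S+)\s+(?P<source>\S+)\s+(?P<destination>\S+)\s+"
    r"(?P<sport>\d+)\s+(?P<dport>\d+)\s+\((?P<packets>\d+) packets\)"
)


def tally_discards(lines):
    """Sum discarded packet counts per (protocol, destination) pair."""
    totals = Counter()
    for line in lines:
        match = LOG_RE.search(line)
        if match and match["action"] == "D":
            key = (match["protocol"], match["destination"])
            totals[key] += int(match["packets"])
    return totals


sample = [
    "Dec 12 12:58:09 R1-RE0 fpc2 PFE_FW_SYSLOG_IP: FW: irb.200 D vrrp "
    "192.0.2.67 224.0.0.18 0 0 (1 packets)",
    "Dec 12 12:58:15 R1-RE0 last message repeated 7 times",
    "Dec 12 12:58:16 R1-RE0 fpc2 PFE_FW_SYSLOG_IP: FW: irb.200 D vrrp "
    "192.0.2.67 224.0.0.18 0 0 (2 packets)",
]
totals = tally_discards(sample)
```

For the sample entries, every discard is VRRP traffic sent to 224.0.0.18.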

D’oh! What was that warning, again, about confirming that the applied filter supports all needed services, and about using an accept-all in the final term until proper operation is confirmed? The syslog action in the final discard-all filter has quickly shown that VRRP is being denied by the filter, which readily explains why VRRP is down, and the phones are starting to ring. The applied filter list is modified by adding the accept-vrrp filter; note the use of the insert function to ensure the correct ordering of filters by making sure that the discard-all filter remains at the end of the list:

{master}[edit interfaces lo0 unit 0 family inet]

jnpr@R1-RE0# set filter input-list accept-vrrp

{master}[edit interfaces lo0 unit 0 family inet]

jnpr@R1-RE0# show

filter {

input-list [ discard-frags accept-sh-bfd accept-bgp accept-ldp accept-rsvp accept-telnet accept-common-services discard-all accept-vrrp ];

}

address 10.3.255.1/32;

{master}[edit interfaces lo0 unit 0 family inet]

jnpr@R1-RE0# insert filter input-list accept-vrrp before discard-all

{master}[edit interfaces lo0 unit 0 family inet]

jnpr@R1-RE0# show

filter {

input-list [ discard-frags accept-sh-bfd accept-bgp accept-ldp accept-rsvp accept-telnet accept-common-services accept-vrrp discard-all ];

}

address 10.3.255.1/32;
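The two-step sequence just shown, in which set appends the new filter to the end of the list and insert then moves it ahead of discard-all, can be modeled as plain list operations. This Python sketch is only an analogy for the CLI behavior seen in the output above; the function names set_member and insert_before are hypothetical.

```python
def set_member(input_list, name):
    """Model of 'set filter input-list NAME': a new member lands at the end."""
    return input_list if name in input_list else input_list + [name]


def insert_before(input_list, name, anchor):
    """Model of 'insert filter input-list NAME before ANCHOR': move NAME ahead of ANCHOR."""
    remaining = [member for member in input_list if member != name]
    position = remaining.index(anchor)
    return remaining[:position] + [name] + remaining[position:]


applied = ["discard-frags", "accept-sh-bfd", "accept-bgp", "accept-ldp",
           "accept-rsvp", "accept-telnet", "accept-common-services", "discard-all"]
after_set = set_member(applied, "accept-vrrp")
after_insert = insert_before(after_set, "accept-vrrp", "discard-all")
```

After the set step alone, accept-vrrp sits behind discard-all and can never match, which is why the insert step matters.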

After the change the log file is cleared, and after a few moments redisplayed:

{master}[edit interfaces lo0 unit 0 family inet]

jnpr@R1-RE0# run clear log re_filter

. . .

{master}[edit interfaces lo0 unit 0 family inet]

jnpr@R1-RE0# run show log re_filter

Dec 12 13:09:59 R1-RE0 clear-log[21857]: logfile cleared

{master}[edit interfaces lo0 unit 0 family inet]

jnpr@R1-RE0#

Perfect: the lack of syslog entries and the continued operation of existing services confirm proper operation of the IPv4 RE protection filter.

IPv6 RE Protection Filter

While we have IPv4 running, many networks are only now beginning to deploy IPv6. Given the lack of ubiquity, IPv6 control planes have not been the target of many attacks; many operators have not felt the need to deploy IPv6 RE protection, leading to a general lack of experience in IPv6 filtering best practices.

Next-header nesting, the bane of stateless filters

A significant issue with any IPv6 filtering scheme is IPv6’s use of next-header nesting, which makes some stateless filtering tasks tricky, if not downright impossible. IPv6, as defined in RFC 2460, states: “In IPv6, optional internet-layer information is encoded in separate headers that may be placed between the IPv6 header and the upper-layer header in a packet. . . . an IPv6 packet may carry zero, one, or more extension headers, each identified by the Next Header field of the preceding header.”

The net result is that there can be multiple extension headers placed between the IPv6 header and the upper layer protocol that you might want to match on (TCP, UDP, OSPF3, ICMP6, etc.). Stateless filters are designed to extract keys for matching packet fields using bit positions within a packet that are assumed to be found in the same location. Stateless IPv6 filters on Trio were historically only able to match on the first protocol (next header) identified in the IPv6 packet’s next-header field, and/or on bits within the actual payload, i.e., the transport protocol (TCP or UDP) ports. In the 14.2 release, Trio line cards are capable of matching on any extension header (regardless of whether it’s the first in the list), as well as a payload port. Note that the ability to match on any extension header is limited to nonfragmented packets and first fragments. Packets that are not a first fragment are still limited to matching only on the first extension header following the IPv6 header.

Note

Very long IPv6 header chains (i.e., with many extension headers) cannot be fully parsed by Junos. Remember that the lookup chip receives a chunk of the packet (the PARCEL) which includes the first 256 bytes. In other words, an IPv6 header chain larger than 256 bytes (which is a very rare case) will not be parsed entirely.

The ability to match both an extension header and a payload port is supported using the extension-header keyword. As of the Junos 14.2 release, the following extension header match types are supported:

jnpr@R1-RE0# ...t6 filter foo term 1 from extension-header ?
Possible completions:
  <range>              Range of values
  [                    Open a set of values
  ah                   Authentication header
  any                  Any extension header
  dstopts              Destination options
  esp                  Encapsulating security payload
  fragment             Fragment
  hop-by-hop           Hop by hop options
  mobility             Mobility
  routing              Routing

Note

Keep in mind that regardless of how many extension headers are present, Trio ASICs have the ability to extract the first 32 bits following the last extension header to facilitate Layer 4 (TCP or UDP) port-based matches, even when one or more extension headers are present. On a supported release, the ability to match on a payload protocol when extension headers are present is enabled by specifying the payload-protocol keyword in your match criteria.

First, note that the presence of extension headers can lead to unpredictable filter operation when the filter uses a next-header match condition because this option forces the match to occur immediately after the IPv6 header.

To show why such a match type can be problematic, consider a user who wants to filter out Multicast Listener Discovery (MLD) messages. MLD does for IPv6 what IGMP does for IPv4: namely, it allows multicast hosts to express interest in listening to a multicast group. In this case, the user knows that MLD is an extension of ICMP6, and happily proceeds to create (and commit) the filter shown, only to find MLD messages are not matched, and therefore still allowed to pass through the filter:

{master}[edit firewall family inet6]

jnpr@R1-RE0# show

filter count_mld {

term 1 {

from {

next-header icmpv6;

icmp-type [ membership-query membership-report membership-termination ];

}

then {

count mld_traffic;

discard;

}

}

term 2 {

then accept;

}

}

In this case, a quick look at the RFC for MLD (RFC 3810), combined with the previous restriction on being able to match only on a single next-header, makes the reason for the filter’s failure clear. MLD requires the inclusion of the hop-by-hop extension header (as shown in the following packet capture output), which must precede the ICMP6 header that the filter seeks to match:

Internet Protocol Version 6, Src: fe80::6687:88ff:fe63:47c1 (fe80::6687:88ff:fe63:47c1), Dst: ff02::1 (ff02::1)

0110 .... = Version: 6

.... 0000 0000 .... .... .... .... .... = Traffic class: 0x00000000

.... .... .... 0000 0000 0000 0000 0000 = Flowlabel: 0x00000000

Payload length: 32

Next header: IPv6 hop-by-hop option (0)

Hop limit: 1

Source: fe80::6687:88ff:fe63:47c1 (fe80::6687:88ff:fe63:47c1)

[Source SA MAC: JuniperN_63:47:c1 (64:87:88:63:47:c1)]

Destination: ff02::1 (ff02::1)

[Source GeoIP: Unknown]

[Destination GeoIP: Unknown]

Hop-by-Hop Option

Next header: ICMPv6 (58)

Length: 0 (8 bytes)

IPv6 Option (Router Alert)

IPv6 Option (PadN)

Internet Control Message Protocol v6

Type: Multicast Listener Query (130)

Code: 0

Checksum: 0x236c [correct]

Maximum Response Delay [ms]: 10000

Reserved: 0000

Multicast Address: :: (::)

This means if you want to filter MLD using a stateless filter you must, in fact, set the filter to match on the presence of the hop-by-hop header rather than the header you really wanted. The obvious issue here is that other protocols, like RSVP, can also make use of a hop-by-hop header (though Junos does not currently support IPv6-based MPLS signaling), so wholesale filtering based on hop-by-hop (or other extension) headers can lead to unexpected filtering actions.
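To make the difference between the two match styles concrete, here is a minimal Python sketch (an illustration only, not how the Trio firmware is implemented). It builds a packet shaped like the MLD query in the capture, then contrasts what a first-header match sees with what a walk of the extension header chain finds. The helper names are hypothetical; the header length rules follow the IPv6 specification, and ESP is deliberately left out because its contents cannot be walked.

```python
# Extension headers this sketch knows how to skip (IANA protocol numbers).
HOP_BY_HOP, ROUTING, FRAGMENT, AH, DSTOPTS, MOBILITY = 0, 43, 44, 51, 60, 135
ICMPV6 = 58
WALKABLE = (HOP_BY_HOP, ROUTING, FRAGMENT, AH, DSTOPTS, MOBILITY)

def first_next_header(packet):
    """What a next-header match sees: byte 6 of the fixed IPv6 header."""
    return packet[6]

def payload_protocol(packet):
    """Walk the extension header chain and return the upper-layer protocol."""
    next_header = packet[6]
    offset = 40  # length of the fixed IPv6 header
    while next_header in WALKABLE:
        if offset + 2 > len(packet):
            raise ValueError("truncated extension header")
        length_field = packet[offset + 1]
        if next_header == FRAGMENT:
            header_len = 8                       # fragment header is fixed size
        elif next_header == AH:
            header_len = (length_field + 2) * 4  # AH length is in 4-byte units
        else:
            header_len = (length_field + 1) * 8  # 8-byte units, first 8 implied
        next_header = packet[offset]             # Next Header field of this header
        offset += header_len
    return next_header

def build_mld_query():
    """IPv6 header + hop-by-hop (Router Alert, PadN) + 24-byte MLD query."""
    ipv6 = bytearray(40)
    ipv6[0] = 0x60                               # version 6
    ipv6[4:6] = (32).to_bytes(2, "big")          # payload length from the capture
    ipv6[6] = HOP_BY_HOP
    ipv6[7] = 1                                  # hop limit
    hbh = bytes([ICMPV6, 0, 0x05, 0x02, 0x00, 0x00, 0x01, 0x00])
    mld = bytes([130, 0]) + bytes(22)            # type 130, code 0, rest zeroed
    return bytes(ipv6) + hbh + mld

packet = build_mld_query()
print("first next header:", first_next_header(packet))
print("payload protocol: ", payload_protocol(packet))
```

The first value is the hop-by-hop header and the second is ICMPv6, which is why a filter keyed on the first header alone never reaches the ICMP6 match. Note that a non-first fragment carries no upper-layer header at all, so the walk is only meaningful for nonfragmented packets and first fragments.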

In theory, this issue could be avoided by removing the next header match and replacing it with an extension header match; again, the latter would allow for a match of the specified header anywhere in the list rather than forcing it to be immediately behind the IPv6 header. The problem is that, as shown above, the 14.2 Junos release does not support ICMP as an argument to the extension header match.

Given that currently Trio firmware cannot match ICMP, the next best option here is to use a payload match type. Here, the payload is defined as the first portion of the packet that the firmware does not recognize, which in this case should equate to the ICMP header. A modified filter is shown:

{master}[edit firewall family inet6]

jnpr@R1-RE0# show

filter count_mld {

term 1 {

from {

payload-protocol icmp6;

icmp-type [ membership-query membership-report membership-termination ];

}

then {

count mld_traffic;

discard;

}

}

term 2 {

then accept;

}

}

The sample IPv6 filter

As with the IPv4 filter example, it’s assumed that the reader is familiar with Junos firewall filter syntax and operation, as well as basic IPv6 protocol operation, header fields, and option extension headers. As always, when it comes to filters, no one size fits all, and the reader is encouraged to carefully consider the effects of the sample filter along with careful testing of its operation against the specific IPv6 protocols supported in their networks so that any necessary adjustments can be made before being placed into use on a production network.

Additional details on IPv6 protocol filtering specific to the broad range of possible ICMPv6 message types can be found in RFC 4890, “Recommendations for Filtering ICMPv6 Messages in Firewalls.”

To begin, the IPv6 prefix list definitions are displayed; the previous lists used for IPv4 remain in place, with the exception noted in the following:

jnpr@R1-RE0# show policy-options

prefix-list router-ipv4 {

. . .

prefix-list bgp-neighbors_v4 {

apply-path "protocols bgp group <*_v4> neighbor <*>";

}

prefix-list router-ipv6 {

apply-path "interfaces <*> unit <*> family inet6 address <*>";

}

prefix-list bgp-neighbors_v6 {

apply-path "protocols bgp group <*_v6> neighbor <*>";

}

prefix-list link_local {

fe80::/64;

}

prefix-list rfc3849 {

2001:db8::/32;

}

The IPv6-based prefix lists perform the same function as their IPv4 counterparts. IPv6’s use of link-local addressing for many routing protocols means you need to include support for those addresses, as well as your global IPv6 interface routes. Note that the previous bgp-neighbors prefix list, as originally used for IPv4, has been renamed and the apply-path regular expression modified, so as to not conflict with the same function in IPv6. This approach assumes that you place IPv4 and IPv6 peers in separate groups with a group name that ends in either _v4 or _v6. The IPv6 RE protection filters are displayed:

{master}[edit firewall family inet6]

jnpr@R1-RE0#

filter discard-extension-headers {

apply-flags omit;

term discard-extension-headers {

from {

# Beware - VRRPv3 with authentication or OSPFv3 with authentication enabled may use AH/ESP!

next-header [ ah dstopts egp esp fragment gre icmp igmp ipip ipv6

no-next-header routing rsvp sctp ];

}

then {

count discard-ipv6-extension-headers;

log;

syslog;

discard;

}

}

}

filter deny-icmp6-undefined {

apply-flags omit;

term icmp6-unassigned-discard {

from {

next-header icmpv6;

icmp-type [ 102-106 155-199 202-254 ];

}

then discard;

}

term rfc4443-discard {

from {

next-header icmpv6;

icmp-type [ 100-101 200-201 ];

}

then discard;

}

}

filter accept-icmp6-misc {

apply-flags omit;

term neighbor-discovery-accept {

from {

next-header icmpv6;

icmp-type 133-136;

}

then accept;

}

term inverse-neighbor-discovery-accept {

from {

next-header icmpv6;

icmp-type 141-142;

}

then accept;

}

term icmp6-echo-request {

from {

next-header icmpv6;

icmp-type echo-request;

}

then accept;

}

term icmp6-echo-reply {

from {

next-header icmpv6;

icmp-type echo-reply;

}

then accept;

}

term icmp6-dest-unreachable-accept {

from {

next-header icmpv6;

icmp-type destination-unreachable;

}

then accept;

}

term icmp6-packet-too-big-accept {

from {

next-header icmpv6;

icmp-type packet-too-big;

}

then accept;

}

term icmp6-time-exceeded-accept {

from {

next-header icmpv6;

icmp-type time-exceeded;

icmp-code 0;

}

then accept;

}

term icmp6-parameter-problem-accept {

from {

next-header icmpv6;

icmp-type parameter-problem;

icmp-code [ 1 2 ];

}

then accept;

}

}

filter accept-sh-bfd-v6 {

apply-flags omit;

term accept-sh-bfd-v6 {

from {

source-prefix-list {

router-ipv6;

}

destination-prefix-list {

router-ipv6;

}

next-header udp;

source-port 49152-65535;

destination-port 3784-3785;

}

then accept;

}

}

filter accept-MLD-hop-by-hop_v6 {

apply-flags omit;

term hop-by-hop_v6 {

from {

next-header hop-by-hop;

}

then {

count hop-by-hop-extension-packets;

accept;

}

}

}

filter accept-bgp-v6 {

apply-flags omit;

term bgp_v6 {

from {

prefix-list {

rfc3849;

bgp-neighbors_v6;

}

next-header tcp;

destination-port bgp;

}

then accept;

}

}

filter accept-ospf3 {

apply-flags omit;

term ospfv3 {

from {

source-prefix-list {

link_local;

}

next-header ospf;

}

then accept;

}

}

filter accept-dns-v6 {

apply-flags omit;

term dnsv6 {

from {

source-prefix-list {

rfc3849;

}

next-header [ udp tcp ];

port domain;

}

then accept;

}

}

filter accept-ntp-v6 {

apply-flags omit;

term ntpv6 {

from {

source-prefix-list {

rfc3849;

}

next-header udp;

destination-port ntp;

}

then accept;

}

}

filter accept-ssh-v6 {

apply-flags omit;

term sshv6 {

from {

source-prefix-list {

rfc3849;

}

next-header tcp;

destination-port ssh;

}

then {

policer management-5m;

count accept-ssh;

accept;

}

}

}

filter accept-snmp-v6 {

apply-flags omit;

term snmpv6 {

from {

source-prefix-list {

rfc3849;

}

next-header udp;

destination-port snmp;

}

then accept;

}

}

filter accept-radius-v6 {

apply-flags omit;

term radiusv6 {

from {

source-prefix-list {

rfc3849;

}

next-header udp;

port [ 1812 1813 ];

}

then accept;

}

}

filter accept-telnet-v6 {

apply-flags omit;

term telnetv6 {

from {

source-prefix-list {

rfc3849;

}

next-header tcp;

port telnet;

}

then {

policer management-5m;

count accept-telnet;

accept;

}

}

}

filter accept-common-services-v6 {

apply-flags omit;

term accept-icmp6 {

filter accept-icmp6-misc;

}

term accept-traceroute-v6 {

filter accept-traceroute-v6;

}

term accept-ssh-v6 {

filter accept-ssh-v6;

}

term accept-snmp-v6 {

filter accept-snmp-v6;

}

term accept-ntp-v6 {

filter accept-ntp-v6;

}

term accept-dns-v6 {

filter accept-dns-v6;

}

}

filter accept-traceroute-v6 {

apply-flags omit;

term accept-traceroute-udp {

from {

destination-prefix-list {

router-ipv6;

}

next-header udp;

destination-port 33435-33450;

hop-limit 1;

}

then {

policer management-1m;

count accept-traceroute-udp-v6;

accept;

}

}

term accept-traceroute-icmp6 {

from {

destination-prefix-list {

router-ipv6;

}

next-header icmpv6;

icmp-type [ echo-request time-exceeded ];

hop-limit 1;

}

then {

policer management-1m;

count accept-traceroute-icmp6;

accept;

}

}

term accept-traceroute-tcp-v6 {

from {

destination-prefix-list {

router-ipv6;

}

next-header tcp;

hop-limit 1;

}

then {

policer management-1m;

count accept-traceroute-tcp-v6;

accept;

}

}

}

filter discard-all-v6 {

apply-flags omit;

term discard-HOPLIMIT_1-unknown {

from {

hop-limit 1;

}

then {

count discard-all-HOPLIMIT_1-unknown;

log;

syslog;

discard;

}

}

term discard-tcp-v6 {

from {

next-header tcp;

}

then {

count discard-tcp-v6;

log;

syslog;

discard;

}

}

term discard-netbios-v6 {

from {

next-header udp;

destination-port 137;

}

then {

count discard-netbios-v6;

log;

syslog;

discard;

}

}

term discard-udp {

from {

next-header udp;

}

then {

count discard-udp-v6;

log;

syslog;

discard;

}

}

term discard-icmp6 {

from {

next-header icmpv6;

}

then {

count discard-icmp;

log;

syslog;

discard;

}

}

term discard-unknown {

then {

count discard-unknown;

log;

syslog;

discard;

}

}

}

Note

We used the next-header option in the preceding code to filter on the payload protocol. As discussed earlier, some protocols include extension headers; in those cases, you can use the payload-protocol match option instead. Remember that next-header always refers to the first header after the IPv6 header.

The IPv6 filters make use of the same policers defined previously for IPv4, and follow the same general modular approach, albeit with fewer counting actions in terms that accept traffic, their use already being demonstrated for IPv4. In this case, the discard-extension-headers filter discards all unused extension headers, including the fragmentation header, which ensures fragments are not subjected to any additional term processing where unpredictable results could occur given a fragment’s lack of a transport header. As per the filter’s comment, the discard action includes traffic with the AH and/or ESP headers, which can be used for legitimate traffic like OSPF3. As always, you need to carefully gauge the needs of each network against any sample filter and make adjustments accordingly.
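Because the filters are evaluated in list order and the first matching term wins, it can help to model the ICMPv6 handling before committing. The Python sketch below is a simplified model under stated assumptions: it covers only the deny-icmp6-undefined and accept-icmp6-misc terms shown above, it assumes ICMPv6 is the first header after the IPv6 header, and it ignores the traceroute terms and policers. The rule table and function name are hypothetical.

```python
# (filter name, ICMPv6 type ranges, allowed codes or None for any, action)
# Type numbers: 1 dest-unreachable, 2 packet-too-big, 3 time-exceeded,
# 4 parameter-problem, 128 echo-request, 129 echo-reply.
RULES = [
    ("deny-icmp6-undefined", [(102, 106), (155, 199), (202, 254)], None, "discard"),
    ("deny-icmp6-undefined", [(100, 101), (200, 201)], None, "discard"),
    ("accept-icmp6-misc", [(133, 136), (141, 142)], None, "accept"),
    ("accept-icmp6-misc", [(128, 129)], None, "accept"),
    ("accept-icmp6-misc", [(1, 2)], None, "accept"),
    ("accept-icmp6-misc", [(3, 3)], {0}, "accept"),
    ("accept-icmp6-misc", [(4, 4)], {1, 2}, "accept"),
]

def evaluate(icmp_type, icmp_code=0):
    """Return (filter, action) for the first rule matching type and code."""
    for filter_name, type_ranges, codes, action in RULES:
        type_ok = any(low <= icmp_type <= high for low, high in type_ranges)
        code_ok = codes is None or icmp_code in codes
        if type_ok and code_ok:
            return filter_name, action
    # Nothing matched: the packet falls through to the final catch-all.
    return "discard-all-v6", "discard"

for icmp_type, icmp_code in [(135, 0), (200, 0), (3, 0), (3, 1), (130, 0)]:
    print(icmp_type, icmp_code, evaluate(icmp_type, icmp_code))
```

Running a table of the message types you expect in your network through a model like this is a quick way to spot a type that would silently fall through to the discard-all action.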

As before, the relevant list of IPv6 filters is applied as an input list to the lo0 interface, this time under family inet6:

{master}[edit]

jnpr@R1-RE0# show interfaces lo0 unit 0

family inet {

filter {

input-list [ discard-frags accept-sh-bfd accept-bgp

accept-ldp accept-rsvp accept-telnet accept-common-services

accept-vrrp discard-all ];

}

address 10.3.255.1/32;

}

family iso {

address 49.0001.0100.0325.5001.00;

}

family inet6 {

filter {

input-list [ discard-extension-headers accept-MLD-hop-by-hop_v6 deny-icmp6-undefined accept-sh-bfd-v6 accept-bgp-v6 accept-telnet-v6 accept-ospf3 accept-radius-v6 accept-common-services-v6 discard-all-v6 ];

}

address 2001:db8:1::ff:1/128;

}

After applying the IPv6 filter, the syslog is cleared; after a few moments, it’s possible to display any new matches. Recall that at this stage only unauthorized traffic should be reaching the final discard-all action for both the IPv4 and IPv6 filter lists:

{master}[edit]

jnpr@R1-RE0# run show log re_filter

Dec 13 10:26:51 R1-RE0 clear-log[27090]: logfile cleared

Dec 13 10:26:52 R1-RE0 /kernel: FW: fxp0.0 D tcp 172.17.13.146 172.19.90.172 34788 21

Dec 13 10:26:55 R1-RE0 /kernel: FW: fxp0.0 D tcp 172.17.13.146 172.19.90.172 34788 21

Dec 13 10:26:55 R1-RE0 /kernel: FW: fxp0.0 D igmp 172.19.91.95 224.0.0.1 0 0

Dec 13 10:27:01 R1-RE0 /kernel: FW: fxp0.0 D tcp 172.17.13.146 172.19.90.172 34788 21

Dec 13 10:27:55 R1-RE0 /kernel: FW: fxp0.0 D igmp 172.19.91.95 224.0.0.1 0 0

. . .

Dec 13 10:34:41 R1-RE0 /kernel: FW: fxp0.0 D udp 172.19.91.43 172.19.91.255 138 138

Dec 13 10:34:55 R1-RE0 /kernel: FW: fxp0.0 D igmp 172.19.91.95 224.0.0.1 0 0

Dec 13 10:35:55 R1-RE0 /kernel: FW: fxp0.0 D igmp 172.19.91.95 224.0.0.1 0 0

Dec 13 10:36:55 R1-RE0 /kernel: FW: fxp0.0 D igmp 172.19.91.95 224.0.0.1 0 0
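When the log grows beyond a handful of lines, a small script makes it easier to see who is hitting the discard-all term. The following Python sketch is an illustration only; it assumes the field order seen in the entries above (interface, action letter, protocol, source, destination, source port, destination port) and that D marks a discard. The function name and the shortened sample are hypothetical.

```python
import re
from collections import Counter

LOG_PATTERN = re.compile(
    r"FW: (?P<ifname>\S+)\s+(?P<action>[ADR])\s+(?P<proto>\S+)\s+"
    r"(?P<src>\S+)\s+(?P<dst>\S+)\s+(?P<sport>\d+)\s+(?P<dport>\d+)"
)

def summarize(log_text):
    """Count discarded packets per (protocol, source, destination port)."""
    # Collapse whitespace first so entries wrapped across lines are rejoined.
    flat = " ".join(log_text.split())
    counts = Counter()
    for m in LOG_PATTERN.finditer(flat):
        if m.group("action") == "D":
            key = (m.group("proto"), m.group("src"), int(m.group("dport")))
            counts[key] += 1
    return counts

# A shortened sample in the same shape as the log shown above.
sample = """Dec 13 10:26:52 R1-RE0 /kernel: FW: fxp0.0 D tcp 172.17.13.146 172.19.90.172
34788 21
Dec 13 10:26:55 R1-RE0 /kernel: FW: fxp0.0 D igmp 172.19.91.95 224.0.0.1 0 0
Dec 13 10:34:41 R1-RE0 /kernel: FW: fxp0.0 D udp 172.19.91.43 172.19.91.255
138 138"""

for key, hits in summarize(sample).most_common():
    print(key, hits)
```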

The result shown here is good. The only traffic not being accepted by other terms is coming from unauthorized hosts at 172.17.13.0/24, an address not included in the official lab topology, which shows the filter is having the desired effect. All the discarded traffic arrives on the shared OoB management network via fxp0, and appears to be a mix of IGMP, FTP, and NetBIOS. As a final check, confirm BGP and BFD session status at R1:

{master}[edit]

jnpr@R1-RE0# run show bgp summary

Groups: 3 Peers: 3 Down peers: 1

Table Tot Paths Act Paths Suppressed History Damp State Pending

inet.0 0 0 0 0 0 0

inet6.0 0 0 0 0 0 0

Peer AS InPkt OutPkt OutQ Flaps Last Up/Dwn State|#Active/Received/Accepted/Damped...

10.3.255.2 65000 2010 2009 0 0 15:09:23 0/0/0/0

2001:db8:1::ff:2 65000 298 296 0 2 2:13:16 Establ

inet6.0: 0/0/0/0

fd1e:63ba:e9dc:1::1 65010 0 0 0 0 17:52:23 Active

At this point, the EBGP session to the external BGP P1 device is expected to be down, but both the IPv6 and IPv4 IBGP sessions are established, as is the BFD session between R1 and R2. This BFD session is IPv4-based and runs over the ae0.1 interface to provide the IS-IS protocol with rapid fault detection capabilities:

{master}[edit]

jnpr@R1-RE0# show protocols isis

reference-bandwidth 100g;

level 1 disable;

interface ae0.1 {

point-to-point;

bfd-liveness-detection {

minimum-interval 1000;

multiplier 3;

}

}

interface lo0.0 {

passive;

}

{master}[edit]

jnpr@R1-RE0# run show bfd session

Detect Transmit

Address State Interface Time Interval Multiplier

10.8.0.1 Up ae0.1 3.000 1.000 3

1 sessions, 1 clients

Cumulative transmit rate 1.0 pps, cumulative receive rate 1.0 pps
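The 3.000 second detect time in the display follows directly from the configured values. As a minimal sketch, assuming both ends use the same interval and multiplier (the function name is hypothetical):

```python
def bfd_detection_time(interval_ms, multiplier):
    """Detection time in seconds: interval multiplied by the multiplier."""
    return interval_ms * multiplier / 1000.0

# minimum-interval 1000 and multiplier 3, as configured under IS-IS above.
print(bfd_detection_time(1000, 3))
```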

The continued operation of permitted services coupled with the lack of unexpected log entries from the discard-all action of both RE protection filters confirms they are working as designed and concludes the RE protection case study.

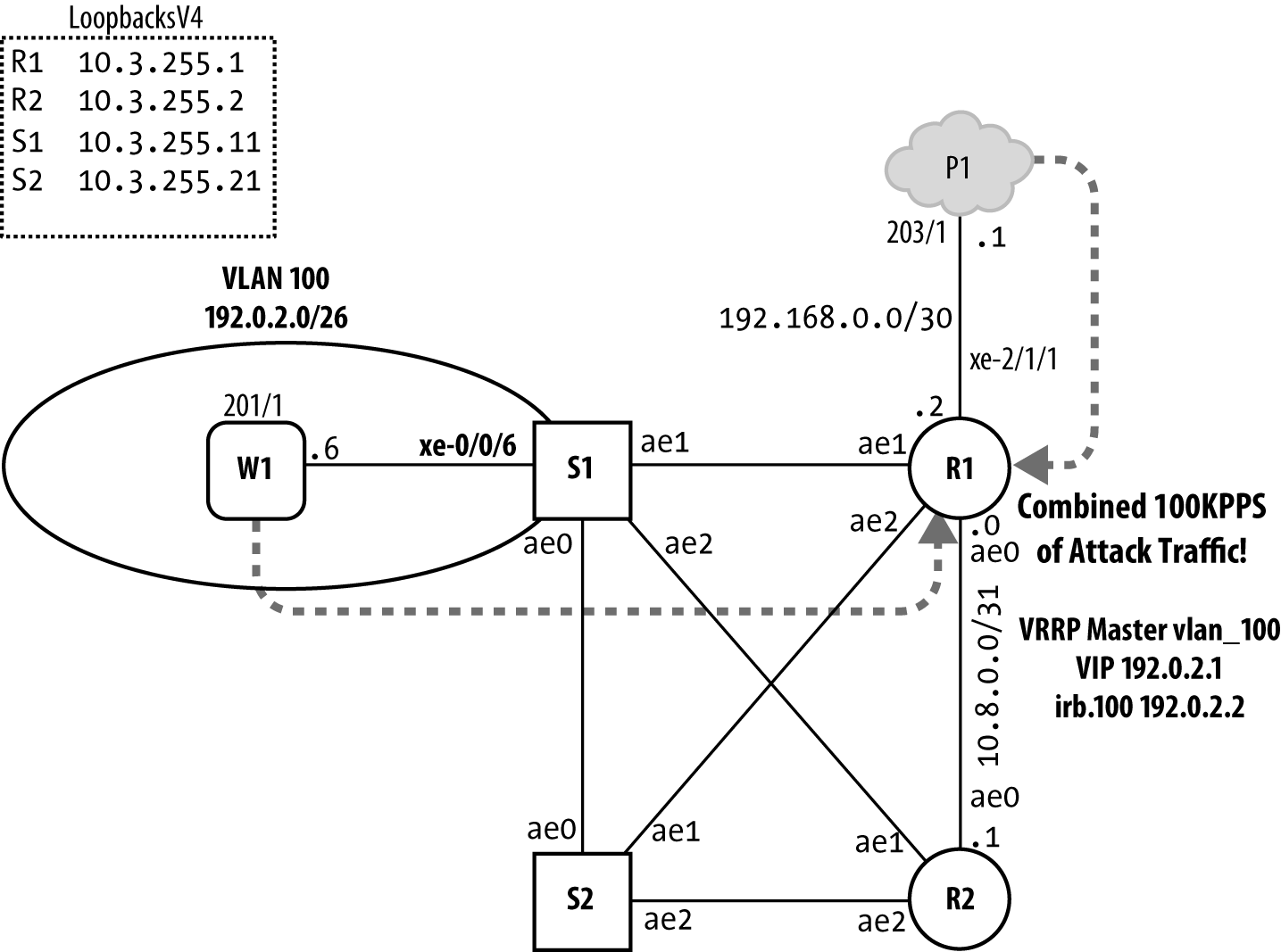

DDoS Protection Case Study

The MX Trio platforms began offering built-in DDoS protection starting with release v11.2. This feature makes use of the extensive host-bound traffic classification capabilities of the Trio chipset along with corresponding policers, implemented at various hierarchies within the system, to ensure the RE remains responsive in the event of excessive control plane exception traffic, such as can occur as the result of misconfigurations, excess scaling, or intentional DDoS types of attacks targeting a router’s control plane.

The new low-level DDoS protection provides great benefits right out of the box, so to speak, but does not in itself eliminate the need for an RE protection filter to deny traffic that is not allowed or needed. When the new DDoS protection is combined with a strong RE filter, you can eliminate the need for policing functions in the filter, or for added protection you can continue to use RE filter-based policing as an additional safeguard. In the latter case, you should ensure the RE filter-based policers have higher bandwidth values than the corresponding PFE and RE DDoS policers, or the DDoS policers will never have a chance to activate because the filter-based policers will perform all of the discard actions. This is because a policer called from an input filter on the loopback interface is downloaded to the Trio PFE, where it is executed before any DDoS policer functionality.
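The interaction between the two policing stages can be sketched with simple arithmetic. The Python below is a toy model only: it treats both policers as packet-per-second caps applied in series, with the lo0 filter policer first, and all rates are hypothetical values chosen for illustration.

```python
def police(offered_pps, limit_pps):
    """Return (passed, dropped) for a simple rate cap."""
    passed = min(offered_pps, limit_pps)
    return passed, offered_pps - passed

def series(offered_pps, filter_limit_pps, ddos_limit_pps):
    """Apply the lo0 filter policer first, then the DDoS policer."""
    after_filter, filter_drops = police(offered_pps, filter_limit_pps)
    to_re, ddos_drops = police(after_filter, ddos_limit_pps)
    return {"filter_drops": filter_drops, "ddos_drops": ddos_drops, "to_re": to_re}

# Filter policer lower than the DDoS policer: the DDoS stage never drops.
print(series(10000, filter_limit_pps=2000, ddos_limit_pps=5000))
# Filter policer higher than the DDoS policer: both stages see excess traffic.
print(series(10000, filter_limit_pps=8000, ddos_limit_pps=5000))
```

In the first case the DDoS policer records no violation at all, so the DDoS statistics and alarms would not reflect the attack.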

The Issue of Control Plane Depletion

As routers scale to provide service to more and more users with ever increasing numbers of services, it’s not uncommon to find them operating near their capacity, especially in periods of heavy load such as route flap caused by network failures. With each new service comes additional load, but also the potential for unexpected resource usage either due to intent or in many cases because of buggy software or configuration errors that lead to unexpected operation.

Resource exhaustion can occur in a number of different places, each with its own set of operational issues. Run short on RIB/FIB and you may blackhole destinations or start using default routes with possibly undesirable paths. Low memory can lead to crashes, or slow reconvergence, as processes start swapping to disk. Run low on CPU, or on the internal communications paths needed to send and receive session keepalive messages, and here comes even more trouble as BFD, BGP, and OSPF sessions begin flapping, which in turn only adds more churn to an already too busy system.

In this section, the focus is on protecting the processing path, and therefore the control plane resources. Those control plane resources are needed to process remote access, routing protocols, and network management traffic as they make their way from a network interface through the PFE and onto the RE during periods of unexpected control plane traffic. The goal is to allow supported services, at reasonable levels, without allowing any one service or protocol to overrun all resources, a condition that can easily lead to denial of service for other protocols and users. Such a service outage can easily extend into the remote access needed to access a router in order to troubleshoot and correct the issue. There is little else in life as frustrating as knowing how to fix a problem, only to realize that because of the problem, you’re unable to access the device to take corrective actions.

DDoS Operational Overview

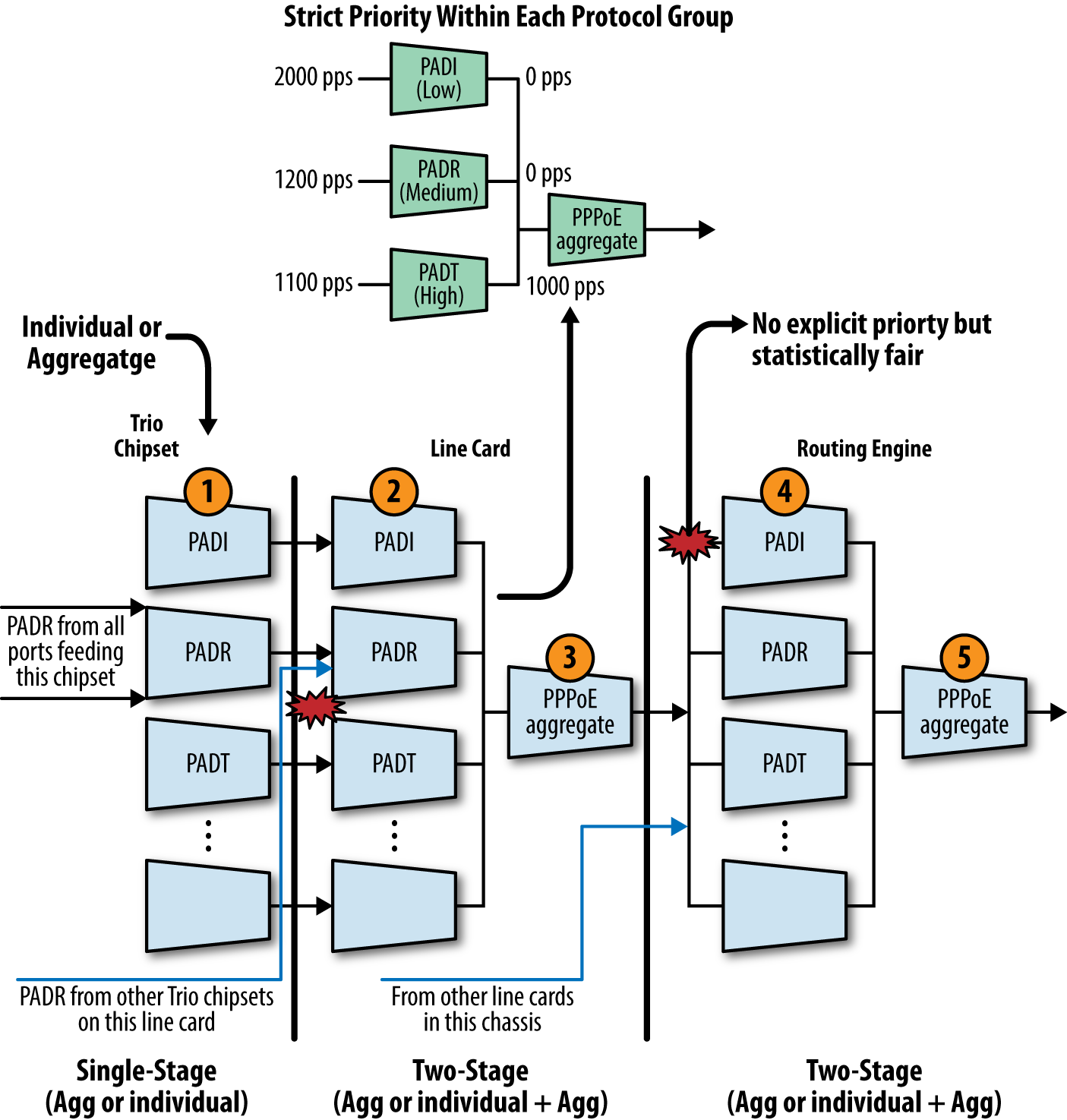

The Juniper DDoS protection feature is based on two main components: the classification of host-bound control plane traffic and a hierarchical set of individual- and aggregate-level policers that cap the volume of control plane traffic that each protocol type is able to send to the RE for processing.

These policers are organized to match the hierarchical flow of protocol control traffic. Control traffic arriving from all ports of a line card converges at the card’s Packet Forwarding Engine. Traffic from all PFEs converges into the line card/FPC. And lastly, control traffic from all line cards on the router converges on the Routing Engine. Similarly, the DDoS policers are placed hierarchically along the control paths so that excess packets are dropped as early as possible on the path. This design preserves system resources by removing excess malicious traffic so that the Routing Engine receives only the amount of traffic that it can actually process. In total, there can be as many as five levels of policing between ingress at the Trio PFE and processing at the RE, and that’s not counting any additional lo0-based filtering (with related policing) that can also be in effect.
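The convergence described above can be modeled as a cascade of caps. This Python sketch is a simplification under stated assumptions: it uses three levels (PFE, FPC, RE) rather than the full hierarchy, treats each policer as a plain packet-per-second cap, and uses hypothetical rates and limits.

```python
def police(offered_pps, limit_pps):
    """Pass at most limit_pps of the offered rate."""
    return min(offered_pps, limit_pps)

def to_routing_engine(offered, pfe_limit, fpc_limit, re_limit):
    """offered maps an FPC slot to a list of per-PFE offered rates (pps)."""
    fpc_totals = []
    for slot in sorted(offered):
        # Each PFE drops its own excess before traffic converges on the FPC.
        pfe_passed = [police(rate, pfe_limit) for rate in offered[slot]]
        fpc_totals.append(police(sum(pfe_passed), fpc_limit))
    # Traffic from all line cards converges on the Routing Engine.
    return police(sum(fpc_totals), re_limit)

# One PFE on slot 0 is under attack; the other PFEs carry normal load.
offered = {0: [50000, 100], 1: [200, 300]}
print(to_routing_engine(offered, pfe_limit=10000, fpc_limit=15000, re_limit=20000))
print(to_routing_engine(offered, pfe_limit=10000, fpc_limit=15000, re_limit=5000))
```

Most of the attack traffic is removed at the PFE where it arrives, so the FPC and RE stages only have to deal with what is left.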

In operation, control traffic is dropped when it violates a policer’s rate limit. Each violation generates a notification in the syslog to alert the operator about a possible attack. Each violation is counted and its start time is noted, and the system also maintains a pointer to the last observed violation start and end times. When the traffic rate drops below the bandwidth violation threshold, a recovery timer determines when the traffic flow is considered to have returned to normal. If no further violation occurs before the timer expires, the violation state is cleared and a notification is again generated to report clearing of the DDoS event.
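The violation and recovery behavior amounts to a small state machine. The following Python sketch models it under stated assumptions: the rate is sampled at discrete times, the limit and recovery time are hypothetical values, and the class and attribute names are invented for illustration rather than taken from Junos.

```python
class ViolationTracker:
    """Track violation state for one policer with a recovery timer."""

    def __init__(self, limit_pps, recover_time_s):
        self.limit_pps = limit_pps
        self.recover_time_s = recover_time_s
        self.in_violation = False
        self.violation_count = 0
        self.last_start = None
        self.last_end = None
        self._below_since = None
        self.events = []  # stand-in for syslog notifications

    def sample(self, now_s, rate_pps):
        if rate_pps > self.limit_pps:
            self._below_since = None  # any new excess restarts the timer
            if not self.in_violation:
                self.in_violation = True
                self.violation_count += 1
                self.last_start = now_s
                self.events.append((now_s, "violation set"))
        elif self.in_violation:
            if self._below_since is None:
                self._below_since = now_s
            if now_s - self._below_since >= self.recover_time_s:
                self.in_violation = False
                self.last_end = now_s
                self._below_since = None
                self.events.append((now_s, "violation cleared"))

tracker = ViolationTracker(limit_pps=100, recover_time_s=300)
for now_s, rate in [(0, 50), (10, 500), (20, 80), (200, 400), (210, 60), (400, 60), (510, 60)]:
    tracker.sample(now_s, rate)
print(tracker.events)
```

The burst at 200 seconds arrives before the timer expires, so it extends the existing violation instead of starting a new one; the state clears only after the rate has stayed under the limit for the full recovery time.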

Once notified, it’s the operator’s responsibility to analyze the nature of the event to make a determination if the traffic type and volume that triggered the DDoS event was expected or abnormal. There is no easy answer here, as each network is scaled to different values with a differing mix of protocols and rate of churn. If the analysis concludes the volume of traffic was normal, then the related policers should be increased to avoid false alarms and potential service disruptions in the future. In contrast, protocols that are not used, or which are known to generate low message volume, can have their policers decreased.

Note

The default policer settings are intentionally set high to ensure there are no unwanted side effects to preexisting installations as they are upgraded to newer code with DDoS protection support, which is enabled by default. In most cases, operators will want to characterize their network’s expected control plane load and then decrease the default policer values to ensure they gain robust DDoS protection from the feature.

Policer states and statistics from each line card are relayed to the Routing Engine and aggregated. The policer states are maintained during a switchover. Note that during a GRES/NSR event, line card statistics and violation counts are preserved but RE policer statistics are not.

Warning

At this time, DDoS protection is a Trio-only feature. You can configure and commit it on a system that has older, DPC-style line cards, but there will be no DDoS protection on those line cards. A chain is only as strong as its weakest link; a system with a single line card that does not support DDoS is still vulnerable to an attack.

Collect some figures

As mentioned, the default policers are intentionally set high to avoid any side effects in any configuration from the smallest to the largest with intensive protocol notifications. The DDoS protection feature provides real-time statistics at each level (PFE, line card, and RE) and for each protocol. Let’s take the example of the ICMP protocol:

jnpr@R1-RE0> show ddos-protection protocols icmp statistics

Packet types: 1, Received traffic: 1, Currently violated: 0

Protocol Group: ICMP

Packet type: aggregate

System-wide information:

Aggregate bandwidth is never violated

Received: 285 Arrival rate: 0 pps

Dropped: 0 Max arrival rate: 1 pps

Routing Engine information:

Aggregate policer is never violated

Received: 285 Arrival rate: 0 pps

Dropped: 0 Max arrival rate: 1 pps

Dropped by individual policers: 0

FPC slot 0 information:

Aggregate policer is never violated

Received: 185 Arrival rate: 0 pps

Dropped: 0 Max arrival rate: 1 pps

Dropped by individual policers: 0

Dropped by flow suppression: 0

FPC slot 1 information:

Aggregate policer is never violated

Received: 100 Arrival rate: 0 pps

Dropped: 0 Max arrival rate: 0 pps

Dropped by individual policers: 0

Dropped by flow suppression: 0

FPC statistics provide information for the entire line card, meaning that you don’t have per-PFE statistics, only aggregated information regarding passed or dropped traffic from an MPC point of view. The per-PFE statistics are displayed only by a PFE command, presented a little later. The Routing Engine information and the system-wide information shown in the device output should be the same in a single chassis configuration. As observed in this sample output, all ICMP traffic has been allowed without any policing: the RE received 285 ICMP packets, the sum of the 185 packets received on FPC 0 plus the 100 packets received on FPC 1.
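The consistency check described above (FPC counters adding up to the RE counter) is easy to automate when no packets are being dropped. The Python sketch below parses a trimmed copy of the display; the parsing rules are assumptions based only on the layout of this sample output, and the function name is hypothetical.

```python
import re

def received_by_section(output):
    """Map each '... information:' section heading to its Received counter."""
    result, section = {}, None
    for line in output.splitlines():
        line = line.strip()
        if line.endswith("information:"):
            section = line[: -len(" information:")]
        m = re.match(r"Received:\s+(\d+)", line)
        if m and section:
            result[section] = int(m.group(1))
    return result

# Trimmed copy of the ICMP statistics shown above.
sample = """System-wide information:
  Received: 285 Arrival rate: 0 pps
Routing Engine information:
  Received: 285 Arrival rate: 0 pps
FPC slot 0 information:
  Received: 185 Arrival rate: 0 pps
FPC slot 1 information:
  Received: 100 Arrival rate: 0 pps"""

counters = received_by_section(sample)
fpc_total = sum(v for k, v in counters.items() if k.startswith("FPC slot"))
print(counters)
print("FPC total matches RE:", fpc_total == counters["Routing Engine"])
```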

These statistics can be cleared, per-protocol, with the following operational command:

jnpr@R1-RE0> clear ddos-protection protocols <protocol> statistics

When attempting to tune the DDoS policers a logical first step is to collect up-to-date data corresponding to your current network’s baseline for all supported protocols. This data should reflect PFE, MPC, and RE aggregate statistics, as optimal tuning may involve altering policers at all these hierarchies. Once obtained, you can define a reasonable starting value for each policer by adding a small margin of safety to the observed baseline; a sample of this method is provided below.

Because DDoS is enabled by default for all supported protocols, it’s a trivial matter of simply looking at the resulting DDoS statistics to begin baseline analysis. Key among these statistics is the “Max arrival rate” information. By analyzing the peak rate of each protocol (which should be done at several points of your network and over a reasonably sized monitoring window that, of course, includes periods of peak activity), you will have an accurate (and current) view of the maximum per protocol rate for your network in normal operating state. Given that few networks remain the same over time, you should periodically revisit DDoS tuning to make sure your policers always reflect a reasonably current snapshot of expected protocol loads. If not obvious, you should retune DDoS policers whenever a new protocol or service is added to your network.

You can easily use the power of Junos scripting to simplify the monitoring of a particular DDoS counter at a given hierarchy level, for example, at the Routing Engine versus the MPC level. The sample operational mode script (op script) shown here is used to display the current and maximum observed rates at the Routing Engine on a per protocol/packet type basis:

version 1.0;

ns junos = "http://xml.juniper.net/junos/*/junos";

ns xnm = "http://xml.juniper.net/xnm/1.1/xnm";

ns jcs = "http://xml.juniper.net/junos/commit-scripts/1.0";

import "../import/junos.xsl";

match / {

<op-script-results> {

/* Take traces */

var $myrpc = <get-ddos-protocols-statistics> {};

var $myddos = jcs:invoke ($myrpc);

/* Now Display */

<output> "";

<output> "";

<output> "+----------------------------------------------------------------+";

<output> jcs:printf('|%-20s |%-20s |%-11s |%-10s\n',"Protocol","Packet Type",

"Current pps","Max pps Observed");

<output> "+----------------------------------------------------------------+";

for-each( $myddos/ddos-protocol-group/ddos-protocol/packet-type ) {

var $name = .;

if (../ddos-system-statistics/packet-arrival-rate-max != "0"){

<output> jcs:printf('|%-20s |%-20s |%-11s |%-10s\n',../../group-name,

$name,../ddos-system-statistics/packet-arrival-rate,

../ddos-system-statistics/packet-arrival-rate-max);

}

}

<output> "+----------------------------------------------------------------+";

}

}

Note

Only packet-types with a “max arrival rate” greater than 0 are displayed.

This code must be copied onto the router under some filename—here, the file is called checkddos.slax, which is in turn stored in the /var/db/scripts/op folder. Next, the op script must be declared and activated in the configuration:

jnpr@R1-RE0# set system scripts op file checkddos.slax

Next, all DDoS statistics are cleared on the various routers that are used to form your baseline; for example, choose your largest node in addition to at least one P router, one PE router, one access node, etc. To make things simple, this example focuses on statistics from just one node. In a real network, for optimal protection you must dimension and tune based on each node’s role and the resulting traffic load it experiences:

jnpr@R1-RE0> clear ddos-protection protocols statistics

Determine your baseline

Control plane traffic can vary dramatically based on time-of-day usage patterns. In addition, you should consider the effects of a network outage, as the period of reconvergence that follows is likely to represent a peak for some types of control plane traffic. As a result, you must allow the statistics to build up over a significant period of time, such as 24 hours or perhaps even an entire week. In effect, your goal should be to “Let the network live its life,” after which you collect the data via the op script on the routers being sampled in your baseline. As noted above, it’s a good idea to plan for some type of stress testing (during a maintenance window, of course) such as performing link flaps, restarting routing, or even a router reboot. It’s likely that during recovery, protocols like BGP will generate far higher pps rates, and corresponding RE load, than would be seen in steady state converged operation.

Note

In any tuning operation, there is no one-size-fits-all rule. You are always trading one thing for another. For example, if your goal is speed of reconvergence after a major outage, you will likely want to set a relatively high DDoS policer value to ensure that legitimate protocol traffic is not dropped, as that would prolong convergence. Then again, setting the policer to reflect an absolute worst-case load for all protocols tends to lessen the overall level of RE protection in the event of a real DDoS attack. Many will opt to take the difference between converged (normal) load and re-converging load (abnormal but legitimate), and use that as a compromise.
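The compromise described in this note can be sketched in a few lines of Python. This reads the note as splitting the difference between the two loads, that is, taking the midpoint. That reading is an interpretation, and the function name and input values are hypothetical rather than recommended settings.

```python
def compromise_pps(steady_pps: int, reconverge_pps: int) -> int:
    """Return the midpoint between steady-state and reconvergence load.

    The result is rounded up so the value never falls below the true midpoint.
    """
    if steady_pps < 0 or reconverge_pps < steady_pps:
        raise ValueError("expected 0 <= steady_pps <= reconverge_pps")
    return steady_pps + (reconverge_pps - steady_pps + 1) // 2


# Hypothetical loads: 50 pps when converged, 8000 pps while reconverging.
print(compromise_pps(50, 8000))  # 4025
```

A value chosen this way still drops some legitimate traffic at the very peak of reconvergence, which is the trade-off the note describes.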

Sample output of the op script is provided:

jnpr@R1-RE0> op checkddos
+-------------------------------------------------------------------------+
|Protocol         |Packet Type      |Current pps      |Max pps Observed
+-------------------------------------------------------------------------+
|resolve          |aggregate        |0                |67081
|resolve          |mcast-v4         |0                |67081
|ICMP             |aggregate        |0                |1
|IGMP             |aggregate        |0                |30
|PIM              |aggregate        |1                |63
|RIP              |aggregate        |15               |116
|LDP              |aggregate        |10               |71
|BGP              |aggregate        |43               |7834
|VRRP             |aggregate        |7                |15
|SSH              |aggregate        |3                |564
|SNMP             |aggregate        |0                |145
|BGPv6            |aggregate        |0                |22
|LACP             |aggregate        |21               |24
|ARP              |aggregate        |0                |10
|ISIS             |aggregate        |12               |643
|TCP-Flags        |aggregate        |2                |2
|TCP-Flags        |established      |2                |2
|TACACS           |aggregate        |0                |19
|Sample           |aggregate        |0                |3
|Sample           |syslog           |0                |1
|Sample           |pfe              |0                |1
+-------------------------------------------------------------------------+
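When several routers are sampled, it can help to load this output into a script for sorting and comparison. The Python sketch below is one possible off-box approach, not part of the op script. It assumes the output was saved as text with one table row per line, and the names DdosRow and parse_checkddos are hypothetical. The embedded sample reuses a few rows from the output above.

```python
from typing import List, NamedTuple


class DdosRow(NamedTuple):
    protocol: str
    packet_type: str
    current_pps: int
    max_pps: int


def parse_checkddos(text: str) -> List[DdosRow]:
    """Parse pipe-delimited rows, skipping borders, prompts, and the header."""
    rows = []
    for line in text.splitlines():
        line = line.strip()
        if not line.startswith("|"):
            continue  # border lines start with '+', the prompt with text
        fields = [f.strip() for f in line.strip("|").split("|")]
        if len(fields) != 4 or not (fields[2].isdigit() and fields[3].isdigit()):
            continue  # header row, or a row in an unexpected shape
        rows.append(DdosRow(fields[0], fields[1], int(fields[2]), int(fields[3])))
    return rows


SAMPLE = """\
+-------------------------------------------------------------------------+
|Protocol |Packet Type |Current pps |Max pps Observed
+-------------------------------------------------------------------------+
|resolve |aggregate |0 |67081
|BGP |aggregate |43 |7834
|ISIS |aggregate |12 |643
|SSH |aggregate |3 |564
+-------------------------------------------------------------------------+
"""

rows = parse_checkddos(SAMPLE)
busiest = sorted(rows, key=lambda r: r.max_pps, reverse=True)
for r in busiest:
    print(f"{r.protocol:<10}{r.packet_type:<12}{r.max_pps:>8}")
```

Sorting by the maximum observed rate puts the packet types that most need attention at the top, which is a convenient starting point when deciding which policers to adjust first.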

Host-bound traffic classification

A modern multiservice router has to support a myriad of protocols, and multiprotocol support inherently assumes a method of recognizing each protocol so it can be directed to the correct processing daemon. The DDoS protection feature latches on to the Trio chipset’s rich protocol classification capability to correctly recognize and bin a large number of subscriber access, routing, network management, and remote access protocols. The current list is already large and expected to grow:

{master}[edit system ddos-protection global]

jnpr@R1-RE0# run show ddos-protection version

DDOS protection, Version 1.0

Total protocol groups = 98

Total tracked packet types = 220

The display shows that in v1.0, there are 98 protocol groups with a total of 220 unique packet types that can be individually policed. The Junos CLI’s ? feature is used here to display the current list:

{master}[edit system ddos-protection]

jnpr@R1-RE0# set protocols ?

Possible completions:

> amtv4 Configure AMT v4 control packets

> amtv6 Configure AMT v6 control packets

> ancp Configure ANCP traffic

> ancpv6 Configure ANCPv6 traffic

+ apply-groups Groups from which to inherit configuration data

+ apply-groups-except Don't inherit configuration data from these groups

> arp Configure ARP traffic

> atm Configure ATM traffic

> bfd Configure BFD traffic

> bfdv6 Configure BFDv6 traffic

> bgp Configure BGP traffic

> bgpv6 Configure BGPv6 traffic

> demux-autosense Configure demux autosense traffic

> dhcpv4 Configure DHCPv4 traffic

> dhcpv6 Configure DHCPv6 traffic

> diameter Configure Diameter/Gx+ traffic

> dns Configure DNS traffic

> dtcp Configure dtcp traffic

> dynamic-vlan Configure dynamic vlan exceptions

> egpv6 Configure EGPv6 traffic

> eoam Configure EOAM traffic

> esmc Configure ESMC traffic

> fab-probe Configure fab out probe packets

> filter-action Configure filter action traffic

> frame-relay Configure frame relay control packets

> ftp Configure FTP traffic

> ftpv6 Configure FTPv6 traffic

> gre Configure GRE traffic

> icmp Configure ICMP traffic

> icmpv6 Configure ICMPv6 traffic

> igmp Configure IGMP traffic

> igmpv4v6 Configure IGMPv4-v6 traffic

> igmpv6 Configure IGMPv6 traffic

> inline-ka Configure inline keepalive packets

> inline-svcs Configure inline services

> ip-fragments Configure IP-Fragments

> ip-options Configure ip options traffic

> isis Configure ISIS traffic

> jfm Configure JFM traffic

> l2pt Configure Layer 2 protocol tunneling

> l2tp Configure l2tp traffic

> lacp Configure LACP traffic

> ldp Configure LDP traffic

> ldpv6 Configure LDPv6 traffic

> lldp Configure LLDP traffic

> lmp Configure LMP traffic

> lmpv6 Configure LMPv6 traffic

> mac-host Configure L2-MAC configured 'send-to-host'

> mcast-snoop Configure snooped multicast control traffic

> mlp Configure MLP traffic

> msdp Configure MSDP traffic

> msdpv6 Configure MSDPv6 traffic

> mvrp Configure MVRP traffic

> ndpv6 Configure NDPv6 traffic

> ntp Configure NTP traffic

> oam-lfm Configure OAM-LFM traffic

> ospf Configure OSPF traffic

> ospfv3v6 Configure OSPFv3v6 traffic

> pfe-alive Configure pfe alive traffic

> pim Configure PIM traffic

> pimv6 Configure PIMv6 traffic

> pmvrp Configure PMVRP traffic

> pos Configure POS traffic

> ppp Configure PPP control traffic

> pppoe Configure PPPoE control traffic

> ptp Configure PTP traffic

> pvstp Configure PVSTP traffic

> radius Configure Radius traffic

> redirect Configure packets to trigger ICMP redirect

> reject Configure packets via 'reject' action

> rejectv6 Configure packets via 'rejectv6' action

> resolve Configure resolve traffic

> rip Configure RIP traffic

> ripv6 Configure RIPv6 traffic

> rsvp Configure RSVP traffic

> rsvpv6 Configure RSVPv6 traffic

> sample Configure sampled traffic

> services Configure services

> snmp Configure SNMP traffic

> snmpv6 Configure SNMPv6 traffic

> ssh Configure SSH traffic

> sshv6 Configure SSHv6 traffic

> stp Configure STP traffic