Chapter 1. Introduction

Get the facts first. You can distort them later.

Let’s talk about I/O. No, no, come back. It’s not really all that dull. Input/output (I/O) is not a glamorous topic, but it’s a very important one. Most programmers think of I/O in the same way they do about plumbing: undoubtedly essential, can’t live without it, but it can be unpleasant to deal with directly and may cause a big, stinky mess when not working properly. This is not a book about plumbing, but in the pages that follow, you may learn how to make your data flow a little more smoothly.

Object-oriented program design is all about encapsulation. Encapsulation is a good thing: it partitions responsibility, hides implementation details, and promotes object reuse. This partitioning and encapsulation tends to apply to programmers as well as programs. You may be a highly skilled Java programmer, creating extremely sophisticated objects and doing extraordinary things, and yet be almost entirely ignorant of some basic concepts underpinning I/O on the Java platform. In this chapter, we’ll momentarily violate your encapsulation and take a look at some low-level I/O implementation details in the hope that you can better orchestrate the multiple moving parts involved in any I/O operation.

I/O Versus CPU Time

Most programmers fancy themselves software artists, crafting clever routines to squeeze a few bytes here, unrolling a loop there, or refactoring somewhere else to consolidate objects. While those things are undoubtedly important, and often a lot of fun, the gains made by optimizing code can be easily dwarfed by I/O inefficiencies. Performing I/O usually takes orders of magnitude longer than performing in-memory processing tasks on the data. Many coders concentrate on what their objects are doing to the data and pay little attention to the environmental issues involved in acquiring and storing that data.

Table 1-1 lists some hypothetical times for performing a task on units of data read from and written to disk. The first column lists the average time it takes to process one unit of data, the second column is the amount of time it takes to move that unit of data from and to disk, and the third column is the number of these units of data that can be processed per second. The fourth column is the throughput increase that will result from varying the values in the first two columns.

| Process time (ms) | I/O time (ms) | Throughput (units/sec) | Gain (%) |
|---|---|---|---|
| 5 | 100 | 9.52 | (benchmark) |
| 2.5 | 100 | 9.76 | 2.44 |
| 1 | 100 | 9.9 | 3.96 |
| 5 | 90 | 10.53 | 10.53 |
| 5 | 75 | 12.5 | 31.25 |
| 5 | 50 | 18.18 | 90.91 |
| 5 | 20 | 40 | 320 |
| 5 | 10 | 66.67 | 600 |

The first three rows show how increasing the efficiency of the processing step affects throughput. Cutting the per-unit processing time in half results in only a 2.44% increase in throughput. On the other hand, reducing I/O latency by just 10% results in a 10.53% throughput gain. Cutting I/O time in half nearly doubles throughput, which is not surprising when you see that time spent per unit doing I/O is 20 times greater than processing time.
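The figures in Table 1-1 follow from simple arithmetic: each unit costs its processing time plus its I/O time, and throughput is the number of such units that fit in one second. The sketch below recomputes the table under that assumption; the class and method names are invented for illustration.

```java
final class ThroughputTable {
    /** Units handled per second when each unit costs processMs + ioMs milliseconds. */
    static double unitsPerSecond(double processMs, double ioMs) {
        return 1000.0 / (processMs + ioMs);
    }

    /** Percentage gain of an improved throughput figure over the baseline. */
    static double gainPercent(double baseline, double improved) {
        return (improved - baseline) / baseline * 100.0;
    }

    public static void main(String[] args) {
        // {process time, I/O time} pairs, in the same order as the rows of Table 1-1
        double[][] rows = {
            {5, 100}, {2.5, 100}, {1, 100}, {5, 90}, {5, 75}, {5, 50}, {5, 20}, {5, 10}
        };
        double baseline = unitsPerSecond(5, 100);
        for (double[] row : rows) {
            double throughput = unitsPerSecond(row[0], row[1]);
            System.out.printf("%5.1f %6.1f %8.2f %8.2f%n",
                    row[0], row[1], throughput, gainPercent(baseline, throughput));
        }
    }
}
```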

These numbers are artificial and arbitrary (the real world is never so simple) but are intended to illustrate the relative time magnitudes. As you can see, I/O is often the limiting factor in application performance, not processing speed. Programmers love to tune their code, but I/O performance tuning is often an afterthought, or is ignored entirely. It’s a shame, because even small investments in improving I/O performance can yield substantial dividends.

No Longer CPU Bound

To some extent, Java programmers can be forgiven for their preoccupation with optimizing processing efficiency and not paying much attention to I/O considerations. In the early days of Java, the JVMs interpreted bytecodes with little or no runtime optimization. This meant that Java programs tended to poke along, running significantly slower than natively compiled code and not putting much demand on the I/O subsystems of the operating system.

But tremendous strides have been made in runtime optimization. Current JVMs run bytecode at speeds approaching that of natively compiled code, sometimes doing even better because of dynamic runtime optimizations. This means that most Java applications are no longer CPU bound (spending most of their time executing code) and are more frequently I/O bound (waiting for data transfers).

But in most cases, Java applications have not truly been I/O bound in the sense that the operating system couldn’t shuttle data fast enough to keep them busy. Instead, the JVMs have not been doing I/O efficiently. There’s an impedance mismatch between the operating system and the Java stream-based I/O model. The operating system wants to move data in large chunks (buffers), often with the assistance of hardware Direct Memory Access (DMA). The I/O classes of the JVM like to operate on small pieces: single bytes or lines of text. This means that the operating system delivers buffers full of data that the stream classes of java.io spend a lot of time breaking down into little pieces, often copying each piece between several layers of objects. The operating system wants to deliver data by the truckload. The java.io classes want to process data by the shovelful. NIO makes it easier to back the truck right up to where you can make direct use of the data (a ByteBuffer object).
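To make the truckload idea concrete, here is a minimal sketch that reads a file through a FileChannel into a ByteBuffer, one buffer-sized chunk at a time. It assumes a newer JDK than 1.4 (try-with-resources and java.nio.file arrived in Java 7), and the class name, method name, and buffer size are arbitrary choices for illustration.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

final class ChunkedRead {
    /** Reads the file in buffer-sized chunks and returns the number of bytes seen. */
    static long countBytes(Path file, int bufferSize) throws IOException {
        // bufferSize must be positive, otherwise read() could never make progress
        ByteBuffer buffer = ByteBuffer.allocateDirect(bufferSize);
        long total = 0;
        try (FileChannel channel = FileChannel.open(file, StandardOpenOption.READ)) {
            while (channel.read(buffer) != -1) {
                buffer.flip();               // switch the buffer from filling to draining
                total += buffer.remaining(); // a real program would process the bytes here
                buffer.clear();              // make the whole buffer available again
            }
        }
        return total;
    }

    public static void main(String[] args) throws IOException {
        Path file = Files.createTempFile("chunked-read", ".dat");
        try {
            Files.write(file, new byte[100_000]);
            System.out.println(countBytes(file, 8192) + " bytes read");
        } finally {
            Files.deleteIfExists(file);
        }
    }
}
```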

This is not to say that it was impossible to move large amounts of data with the traditional I/O model; it certainly was possible (and still is). The RandomAccessFile class in particular can be quite efficient if you stick to the array-based read( ) and write( ) methods. Even those methods entail at least one buffer copy, but are pretty close to the underlying operating-system calls.
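As a rough illustration of the array-based style, the sketch below moves data through RandomAccessFile a whole byte array at a time rather than byte by byte. The helper names are hypothetical, and try-with-resources assumes Java 7 or later.

```java
import java.io.EOFException;
import java.io.File;
import java.io.IOException;
import java.io.RandomAccessFile;
import java.util.Arrays;

final class ArrayBasedIo {
    /** Writes the payload at the given offset with one array-based write call. */
    static void writeAt(File file, long offset, byte[] payload) throws IOException {
        try (RandomAccessFile raf = new RandomAccessFile(file, "rw")) {
            raf.seek(offset);
            raf.write(payload, 0, payload.length);
        }
    }

    /** Reads exactly length bytes starting at offset; loops because read may return fewer. */
    static byte[] readAt(File file, long offset, int length) throws IOException {
        byte[] result = new byte[length];
        try (RandomAccessFile raf = new RandomAccessFile(file, "r")) {
            raf.seek(offset);
            int filled = 0;
            while (filled < length) {
                int n = raf.read(result, filled, length - filled);
                if (n == -1) {
                    throw new EOFException("file ended after " + filled + " bytes");
                }
                filled += n;
            }
        }
        return result;
    }

    public static void main(String[] args) throws IOException {
        File file = File.createTempFile("array-io", ".dat");
        file.deleteOnExit();
        writeAt(file, 0, new byte[] {1, 2, 3, 4, 5, 6, 7, 8});
        System.out.println(Arrays.toString(readAt(file, 2, 4)));
    }
}
```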

As illustrated by Table 1-1, if your code finds itself spending most of its time waiting for I/O, it’s time to consider improving I/O performance. Otherwise, your beautifully crafted code may be idle most of the time.

Getting to the Good Stuff

Most of the development effort that goes into operating systems is targeted at improving I/O performance. Lots of very smart people toil very long hours perfecting techniques for schlepping data back and forth. Operating-system vendors expend vast amounts of time and money seeking a competitive advantage by beating the other guys in this or that published benchmark.

Today’s operating systems are modern marvels of software engineering (OK, some are more marvelous than others), but how can the Java programmer take advantage of all this wizardry and still remain platform-independent? Ah, yet another example of the TANSTAAFL principle.[1]

The JVM is a double-edged sword. It provides a uniform operating environment that shelters the Java programmer from most of the annoying differences between operating-system environments. This makes it faster and easier to write code because platform-specific idiosyncrasies are mostly hidden. But cloaking the specifics of the operating system means that the jazzy, whiz-bang stuff is invisible too.

What to do? If you’re a developer, you could write some native code using the Java Native Interface (JNI) to access the operating-system features directly. Doing so ties you to a specific operating system (and maybe a specific version of that operating system) and exposes the JVM to corruption or crashes if your native code is not 100% bug free. If you’re an operating-system vendor, you could write native code and ship it with your JVM implementation to provide these features as a Java API. But doing so might violate the license you signed to provide a conforming JVM. Sun took Microsoft to court about this over the JDirect package which, of course, worked only on Microsoft systems. Or, as a last resort, you could turn to another language to implement performance-critical applications.

The java.nio package provides new abstractions to address this problem. The Channel and Selector classes in particular provide generic APIs to I/O services that were not reachable prior to JDK 1.4. The TANSTAAFL principle still applies: you won’t be able to access every feature of every operating system, but these new classes provide a powerful new framework that encompasses the high-performance I/O features commonly available on commercial operating systems today. Additionally, a new Service Provider Interface (SPI) is provided in java.nio.channels.spi that allows you to plug in new types of channels and selectors without violating compliance with the specifications.

With the addition of NIO, Java is ready for serious business, entertainment, scientific and academic applications in which high-performance I/O is essential.

The JDK 1.4 release contains many other significant improvements in addition to NIO. As of 1.4, the Java platform has reached a high level of maturity, and there are few application areas remaining that Java cannot tackle. A great guide to the full spectrum of JDK features in 1.4 is Java In A Nutshell, Fourth Edition by David Flanagan (O’Reilly).

I/O Concepts

The Java platform provides a rich set of I/O metaphors. Some of these metaphors are more abstract than others. With all abstractions, the further you get from hard, cold reality, the tougher it becomes to connect cause and effect. The NIO packages of JDK 1.4 introduce a new set of abstractions for doing I/O. Unlike previous packages, these are focused on shortening the distance between abstraction and reality. The NIO abstractions have very real and direct interactions with real-world entities. Understanding these new abstractions and, just as importantly, the I/O services they interact with, is key to making the most of I/O-intensive Java applications.

This book assumes that you are familiar with basic I/O concepts. This section provides a whirlwind review of some basic ideas just to lay the groundwork for the discussion of how the new NIO classes operate. These classes model I/O functions, so it’s necessary to grasp how things work at the operating-system level to understand the new I/O paradigms.

In the main body of this book, it’s important to understand the following topics:

Buffer handling

Kernel versus user space

Virtual memory

Paging

File-oriented versus stream I/O

Multiplexed I/O (readiness selection)

Buffer Handling

Buffers, and how buffers are handled, are the basis of all I/O. The very term “input/output” means nothing more than moving data in and out of buffers.

Processes perform I/O by requesting of the operating system that data be drained from a buffer (write) or that a buffer be filled with data (read). That’s really all it boils down to. All data moves in or out of a process by this mechanism. The machinery inside the operating system that performs these transfers can be incredibly complex, but conceptually, it’s very straightforward.
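Seen from Java, the fill-and-drain cycle might look something like the following sketch: a read fills a ByteBuffer, a write drains it, and the loop repeats until the source runs dry. The class name and the 4,096-byte buffer size are illustrative assumptions, and in-memory streams stand in for real devices so the example runs anywhere.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.Channels;
import java.nio.channels.ReadableByteChannel;
import java.nio.channels.WritableByteChannel;
import java.nio.charset.StandardCharsets;

final class FillAndDrain {
    /** Copies everything from source to target through one reusable buffer. */
    static long copy(ReadableByteChannel source, WritableByteChannel target) throws IOException {
        ByteBuffer buffer = ByteBuffer.allocate(4096);
        long copied = 0;
        while (source.read(buffer) != -1) {     // fill: data moves into the buffer
            buffer.flip();
            while (buffer.hasRemaining()) {
                copied += target.write(buffer); // drain: data moves out of the buffer
            }
            buffer.clear();
        }
        return copied;
    }

    public static void main(String[] args) throws IOException {
        byte[] data = "data flowing through a buffer".getBytes(StandardCharsets.US_ASCII);
        ByteArrayOutputStream sink = new ByteArrayOutputStream();
        long copied = copy(Channels.newChannel(new ByteArrayInputStream(data)),
                Channels.newChannel(sink));
        System.out.println(copied + " bytes copied");
    }
}
```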

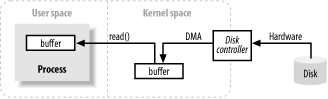

Figure 1-1 shows a simplified logical diagram of how block data moves from an external source, such as a disk, to a memory area inside a running process. The process requests that its buffer be filled by making the read( ) system call. This results in the kernel issuing a command to the disk controller hardware to fetch the data from disk. The disk controller writes the data directly into a kernel memory buffer by DMA without further assistance from the main CPU. Once the disk controller finishes filling the buffer, the kernel copies the data from the temporary buffer in kernel space to the buffer specified by the process when it requested the read( ) operation.

This obviously glosses over a lot of details, but it shows the basic steps involved.

Note the concepts of user space and kernel space in Figure 1-1. User space is where regular processes live. The JVM is a regular process and dwells in user space. User space is a nonprivileged area: code executing there cannot directly access hardware devices, for example. Kernel space is where the operating system lives. Kernel code has special privileges: it can communicate with device controllers, manipulate the state of processes in user space, etc. Most importantly, all I/O flows through kernel space, either directly (as described here) or indirectly (see Section 1.4.2).

When a process requests an I/O operation, it performs a system call, sometimes known as a trap, which transfers control into the kernel. The low-level open( ), read( ), write( ), and close( ) functions so familiar to C/C++ coders do nothing more than set up and perform the appropriate system calls. When the kernel is called in this way, it takes whatever steps are necessary to find the data the process is requesting and transfer it into the specified buffer in user space. The kernel tries to cache and/or prefetch data, so the data being requested by the process may already be available in kernel space. If so, the data requested by the process is copied out. If the data isn’t available, the process is suspended while the kernel goes about bringing the data into memory.

Looking at Figure 1-1, it’s probably occurred to you that copying from kernel space to the final user buffer seems like extra work. Why not tell the disk controller to send it directly to the buffer in user space? There are a couple of problems with this. First, hardware is usually not able to access user space directly.[2] Second, block-oriented hardware devices such as disk controllers operate on fixed-size data blocks. The user process may be requesting an oddly sized or misaligned chunk of data. The kernel plays the role of intermediary, breaking down and reassembling data as it moves between user space and storage devices.

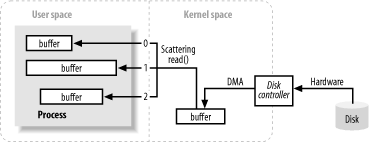

Scatter/gather

Many operating systems can make the assembly/disassembly process even more efficient. The notion of scatter/gather allows a process to pass a list of buffer addresses to the operating system in one system call. The kernel can then fill or drain the multiple buffers in sequence, scattering the data to multiple user space buffers on a read, or gathering from several buffers on a write (Figure 1-2).

This saves the user process from making several system calls (which can be expensive) and allows the kernel to optimize handling of the data because it has information about the total transfer. If multiple CPUs are available, it may even be possible to fill or drain several buffers simultaneously.
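NIO exposes scatter/gather through the channel methods that accept an array of buffers. The sketch below gathers a header and a body into a file, then scatters the file back into two separate buffers. The class, the method names, and the header/body split are made up for the example; whether the JVM turns each call into a single vectored system call depends on the platform.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

final class ScatterGather {
    /** Gathering write: drains both buffers, in order, into the file. */
    static void gatherWrite(Path file, ByteBuffer header, ByteBuffer body) throws IOException {
        ByteBuffer[] sources = {header, body};
        try (FileChannel channel = FileChannel.open(file, StandardOpenOption.CREATE,
                StandardOpenOption.WRITE, StandardOpenOption.TRUNCATE_EXISTING)) {
            while (header.hasRemaining() || body.hasRemaining()) {
                channel.write(sources);
            }
        }
    }

    /** Scattering read: fills the header buffer first, then the body buffer. */
    static void scatterRead(Path file, ByteBuffer header, ByteBuffer body) throws IOException {
        ByteBuffer[] targets = {header, body};
        try (FileChannel channel = FileChannel.open(file, StandardOpenOption.READ)) {
            while ((header.hasRemaining() || body.hasRemaining())
                    && channel.read(targets) != -1) {
                // keep reading until both buffers are full or the file ends
            }
        }
        header.flip();
        body.flip();
    }

    public static void main(String[] args) throws IOException {
        Path file = Files.createTempFile("scatter-gather", ".dat");
        try {
            gatherWrite(file,
                    ByteBuffer.wrap("HEAD".getBytes(StandardCharsets.US_ASCII)),
                    ByteBuffer.wrap("the body".getBytes(StandardCharsets.US_ASCII)));
            ByteBuffer header = ByteBuffer.allocate(4);
            ByteBuffer body = ByteBuffer.allocate(8);
            scatterRead(file, header, body);
            System.out.println(new String(header.array(), StandardCharsets.US_ASCII)
                    + " | " + new String(body.array(), StandardCharsets.US_ASCII));
        } finally {
            Files.deleteIfExists(file);
        }
    }
}
```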

Virtual Memory

All modern operating systems make use of virtual memory. Virtual memory means that artificial, or virtual, addresses are used in place of physical (hardware RAM) memory addresses. This provides many advantages, which fall into two basic categories:

1. More than one virtual address can refer to the same physical memory location.

2. A virtual memory space can be larger than the actual hardware memory available.

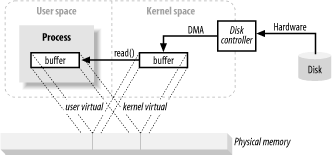

The previous section said that device controllers cannot do DMA directly into user space, but the same effect is achievable by exploiting item 1 above. By mapping a kernel space address to the same physical address as a virtual address in user space, the DMA hardware (which can access only physical memory addresses) can fill a buffer that is simultaneously visible to both the kernel and a user space process. (See Figure 1-3.)

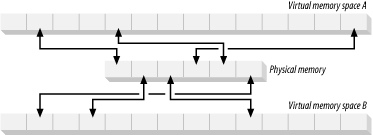

This is great because it eliminates copies between kernel and user space, but requires the kernel and user buffers to share the same page alignment. Buffers must also be a multiple of the block size used by the disk controller (usually 512-byte disk sectors). Operating systems divide their memory address spaces into pages, which are fixed-size groups of bytes. These memory pages are always multiples of the disk block size and are usually powers of 2 (which simplifies addressing). Typical memory page sizes are 1,024, 2,048, and 4,096 bytes. The virtual and physical memory page sizes are always the same. Figure 1-4 shows how virtual memory pages from multiple virtual address spaces can be mapped to physical memory.

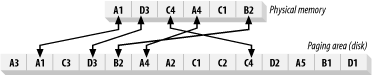

Memory Paging

To support the second attribute of virtual memory (having an addressable space larger than physical memory), it’s necessary to do virtual memory paging (often referred to as swapping, though true swapping is done at the process level, not the page level). This is a scheme whereby the pages of a virtual memory space can be persisted to external disk storage to make room in physical memory for other virtual pages. Essentially, physical memory acts as a cache for a paging area, which is the space on disk where the content of memory pages is stored when forced out of physical memory.

Figure 1-5 shows virtual pages belonging to four processes, each with its own virtual memory space. Two of the five pages for Process A are loaded into memory; the others are stored on disk.

Aligning memory page sizes as multiples of the disk block size allows the kernel to issue direct commands to the disk controller hardware to write memory pages to disk or reload them when needed. It turns out that all disk I/O is done at the page level. This is the only way data ever moves between disk and physical memory in modern, paged operating systems.

Modern CPUs contain a subsystem known as the Memory Management Unit (MMU). This device logically sits between the CPU and physical memory. It contains the mapping information needed to translate virtual addresses to physical memory addresses. When the CPU references a memory location, the MMU determines which page the location resides in (usually by shifting or masking the bits of the address value) and translates that virtual page number to a physical page number (this is done in hardware and is extremely fast). If there is no mapping currently in effect between that virtual page and a physical memory page, the MMU raises a page fault to the CPU.
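The shifting and masking is easy to mimic in software. The toy model below assumes 4,096-byte pages and uses a plain Map as the page table; a real MMU does this in hardware, and the names here (PageMath, PAGE_FAULT, translate) are invented for the illustration.

```java
import java.util.HashMap;
import java.util.Map;

final class PageMath {
    static final int PAGE_SHIFT = 12;               // assumes 4,096-byte pages (2^12)
    static final long PAGE_SIZE = 1L << PAGE_SHIFT;
    static final long OFFSET_MASK = PAGE_SIZE - 1;
    static final long PAGE_FAULT = -1L;             // sentinel standing in for a page fault

    /** Shifts away the offset bits to get the page number. */
    static long pageNumber(long virtualAddress) {
        return virtualAddress >>> PAGE_SHIFT;
    }

    /** Masks off the page bits to get the offset within the page. */
    static long pageOffset(long virtualAddress) {
        return virtualAddress & OFFSET_MASK;
    }

    /** Maps a virtual address to a physical one, or returns PAGE_FAULT if the page is unmapped. */
    static long translate(Map<Long, Long> pageTable, long virtualAddress) {
        Long physicalPage = pageTable.get(pageNumber(virtualAddress));
        if (physicalPage == null) {
            return PAGE_FAULT;                      // a real MMU would trap into the kernel here
        }
        return (physicalPage << PAGE_SHIFT) | pageOffset(virtualAddress);
    }

    public static void main(String[] args) {
        Map<Long, Long> pageTable = new HashMap<>();
        pageTable.put(0x12L, 0x7L);                 // virtual page 0x12 lives in physical page 0x7
        System.out.printf("0x%x%n", translate(pageTable, 0x12345L));
        System.out.println(translate(pageTable, 0x99000L));
    }
}
```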

A page fault results in a trap, similar to a system call, which vectors control into the kernel along with information about which virtual address caused the fault. The kernel then takes steps to validate the page. The kernel will schedule a pagein operation to read the content of the missing page back into physical memory. This often results in another page being stolen to make room for the incoming page. In such a case, if the stolen page is dirty (changed since its creation or last pagein) a pageout must first be done to copy the stolen page content to the paging area on disk.

If the requested address is not a valid virtual memory address (it doesn’t belong to any of the memory segments of the executing process), the page cannot be validated, and a segmentation fault is generated. This vectors control to another part of the kernel and usually results in the process being killed.

Once the faulted page has been made valid, the MMU is updated to establish the new virtual-to-physical mapping (and if necessary, break the mapping of the stolen page), and the user process is allowed to resume. The process causing the page fault will not be aware of any of this; it all happens transparently.

This dynamic shuffling of memory pages based on usage is known as demand paging. Some sophisticated algorithms exist in the kernel to optimize this process and to prevent thrashing, a pathological condition in which paging demands become so great that nothing else can get done.

File I/O

File I/O occurs within the context of a filesystem. A filesystem is a very different thing from a disk. Disks store data in sectors, which are usually 512 bytes each. They are hardware devices that know nothing about the semantics of files. They simply provide a number of slots where data can be stored. In this respect, the sectors of a disk are similar to memory pages; all are of uniform size and are addressable as a large array.

A filesystem is a higher level of abstraction. Filesystems are a particular method of arranging and interpreting data stored on a disk (or some other random-access, block-oriented device). The code you write almost always interacts with a filesystem, not with the disks directly. It is the filesystem that defines the abstractions of filenames, paths, files, file attributes, etc.

The previous section mentioned that all I/O is done via demand paging. You’ll recall that paging is very low level and always happens as direct transfers of disk sectors into and out of memory pages. So how does this low-level paging translate to file I/O, which can be performed in arbitrary sizes and alignments?

A filesystem organizes a sequence of uniformly sized data blocks. Some blocks store meta information such as maps of free blocks, directories, indexes, etc. Other blocks contain file data. The meta information about individual files describes which blocks contain the file data, where the data ends, when it was last updated, etc.

When a request is made by a user process to read file data, the filesystem implementation determines exactly where on disk that data lives. It then takes action to bring those disk sectors into memory. In older operating systems, this usually meant issuing a command directly to the disk driver to read the needed disk sectors. But in modern, paged operating systems, the filesystem takes advantage of demand paging to bring data into memory.

Filesystems also have a notion of pages, which may be the same size as a basic memory page or a multiple of it. Typical filesystem page sizes range from 2,048 to 8,192 bytes and will always be a multiple of the basic memory page size.

How a paged filesystem performs I/O boils down to the following:

Determine which filesystem page(s) (group of disk sectors) the request spans. The file content and/or metadata on disk may be spread across multiple filesystem pages, and those pages may be noncontiguous.

Allocate enough memory pages in kernel space to hold the identified filesystem pages.

Establish mappings between those memory pages and the filesystem pages on disk.

Generate page faults for each of those memory pages.

The virtual memory system traps the page faults and schedules pageins to validate those pages by reading their contents from disk.

Once the pageins have completed, the filesystem breaks down the raw data to extract the requested file content or attribute information.
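The first of the steps above is plain integer arithmetic. As a rough sketch, assuming a fixed filesystem page size and ignoring where those pages actually sit on disk, the logical pages touched by a request can be worked out like this (the class and method names are hypothetical):

```java
final class FilesystemPages {
    /** Index of the filesystem page containing the first requested byte. */
    static long firstPage(long offset, int pageSize) {
        return offset / pageSize;
    }

    /** Index of the filesystem page containing the last requested byte (length must be positive). */
    static long lastPage(long offset, long length, int pageSize) {
        return (offset + length - 1) / pageSize;
    }

    /** Number of filesystem pages a request of the given offset and length touches. */
    static long pagesSpanned(long offset, long length, int pageSize) {
        if (length <= 0) {
            return 0;
        }
        return lastPage(offset, length, pageSize) - firstPage(offset, pageSize) + 1;
    }

    public static void main(String[] args) {
        // A 6,000-byte read starting at byte 3,000, with 4,096-byte filesystem pages
        System.out.println(pagesSpanned(3000, 6000, 4096) + " pages");
    }
}
```

Note how a request smaller than two pages can still touch three of them when it is misaligned, which is one reason aligned, page-sized transfers tend to be cheaper.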

Note that this filesystem data will be cached like other memory pages. On subsequent I/O requests, some or all of the file data may still be present in physical memory and can be reused without rereading from disk.

Most filesystems also prefetch extra filesystem pages on the assumption that the process will be reading the rest of the file. If there is not a lot of contention for memory, these filesystem pages could remain valid for quite some time. In which case, it may not be necessary to go to disk at all when the file is opened again later by the same, or a different, process. You may have noticed this effect when repeating a similar operation, such as a grep of several files. It seems to run much faster the second time around.

Similar steps are taken for writing file data, whereby changes to files (via write( )) result in dirty filesystem pages that are subsequently paged out to synchronize the file content on disk. Files are created by establishing mappings to empty filesystem pages that are flushed to disk following the write operation.

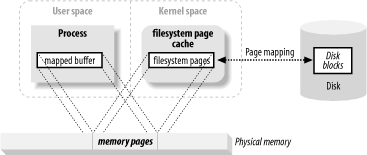

Memory-mapped files

For conventional file I/O, in which user processes issue read( ) and write( ) system calls to transfer data, there is almost always one or more copy operations to move the data between these filesystem pages in kernel space and a memory area in user space. This is because there is not usually a one-to-one alignment between filesystem pages and user buffers. There is, however, a special type of I/O operation supported by most operating systems that allows user processes to take maximum advantage of the page-oriented nature of system I/O and completely avoid buffer copies. This is memory-mapped I/O, which is illustrated in Figure 1-6.

Memory-mapped I/O uses the filesystem to establish a virtual memory mapping from user space directly to the applicable filesystem pages. This has several advantages:

The user process sees the file data as memory, so there is no need to issue read( ) or write( ) system calls.

As the user process touches the mapped memory space, page faults will be generated automatically to bring in the file data from disk. If the user modifies the mapped memory space, the affected page is automatically marked as dirty and will be subsequently flushed to disk to update the file.

The virtual memory subsystem of the operating system will perform intelligent caching of the pages, automatically managing memory according to system load.

The data is always page-aligned, and no buffer copying is ever needed.

Very large files can be mapped without consuming large amounts of memory to copy the data.
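In NIO terms, a mapping is obtained from a FileChannel and appears as a MappedByteBuffer. The sketch below changes one byte of a file by storing into mapped memory, with no explicit read or write call on the data itself. The class and method names are illustrative, and the call to force( ) is only a request that dirty pages be flushed; exactly when they reach the disk is up to the operating system.

```java
import java.io.IOException;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

final class MappedFileDemo {
    /** Changes one byte of the file by storing into mapped memory. */
    static void setByte(Path file, int index, byte value) throws IOException {
        try (FileChannel channel = FileChannel.open(file,
                StandardOpenOption.READ, StandardOpenOption.WRITE)) {
            MappedByteBuffer map =
                    channel.map(FileChannel.MapMode.READ_WRITE, 0, channel.size());
            map.put(index, value); // touching the memory dirties the underlying page
            map.force();           // ask for dirty pages to be written back to the file
        }
    }

    /** Reads one byte of the file by loading from mapped memory. */
    static byte getByte(Path file, int index) throws IOException {
        try (FileChannel channel = FileChannel.open(file, StandardOpenOption.READ)) {
            MappedByteBuffer map =
                    channel.map(FileChannel.MapMode.READ_ONLY, 0, channel.size());
            return map.get(index);
        }
    }

    public static void main(String[] args) throws IOException {
        Path file = Files.createTempFile("mapped", ".dat");
        file.toFile().deleteOnExit(); // some platforms refuse to delete a file that is still mapped
        Files.write(file, new byte[4096]);
        setByte(file, 100, (byte) 42);
        System.out.println(getByte(file, 100));
    }
}
```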

Virtual memory and disk I/O are intimately linked and, in many respects, are simply two aspects of the same thing. Keep this in mind when handling large amounts of data. Most operating systems are far more efficient when handling data buffers that are page-aligned and are multiples of the native page size.

File locking

File locking is a scheme by which one process can prevent others from accessing a file or restrict how other processes access that file. Locking is usually employed to control how updates are made to shared information or as part of transaction isolation. File locking is essential to controlling concurrent access to common resources by multiple entities. Sophisticated applications, such as databases, rely heavily on file locking.

While the name “file locking” implies locking an entire file (and that is often done), locking is usually available at a finer-grained level. File regions are usually locked, with granularity down to the byte level. Locks are associated with a particular file, beginning at a specific byte location within that file and running for a specific range of bytes. This is important because it allows many processes to coordinate access to specific areas of a file without impeding other processes working elsewhere in the file.

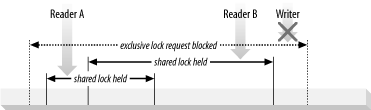

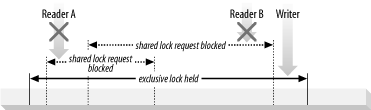

File locks come in two flavors: shared and exclusive. Multiple shared locks may be in effect for the same file region at the same time. Exclusive locks, on the other hand, demand that no other locks be in effect for the requested region.

The classic use of shared and exclusive locks is to control updates to a shared file that is primarily used for read access. A process wishing to read the file would first acquire a shared lock on that file or on a subregion of it. A second process wishing to read the same file region would also request a shared lock. Both could read the file concurrently without interfering with each other. However, if a third process wishes to make updates to the file, it would request an exclusive lock. That process would block until all locks (shared or exclusive) are released. Once the exclusive lock is granted, any reader processes asking for shared locks would block until the exclusive lock is released. This allows the updating process to make changes to the file without any reader processes seeing the file in an inconsistent state. This is illustrated by Figures 1-7 and 1-8.

File locks are either advisory or mandatory. Advisory locks provide information about current locks to those processes that ask, but such locks are not enforced by the operating system. It is up to the processes involved to cooperate and pay attention to the advice the locks represent. Most Unix and Unix-like operating systems provide advisory locking. Some can also do mandatory locking or a combination of both.

Mandatory locks are enforced by the operating system and/or the filesystem and will prevent processes, whether they are aware of the locks or not, from gaining access to locked areas of a file. Usually, Microsoft operating systems do mandatory locking. It’s wise to assume that all locks are advisory and to use file locking consistently across all applications accessing a common resource. Assuming that all locks are advisory is the only workable cross-platform strategy. Any application depending on mandatory file-locking semantics is inherently nonportable.
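Here is a minimal sketch of region locking through FileChannel: the writer takes an exclusive lock on just the bytes it is about to change, and the reader takes a shared lock on the bytes it wants to see. The helper names are hypothetical, and try-with-resources on a FileLock assumes Java 7 or later. In keeping with the advice above, the code treats the locks as advisory and relies on every cooperating program following the same protocol.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.channels.FileLock;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.util.Arrays;

final class RegionLockDemo {
    /** Overwrites a byte range while holding an exclusive lock on exactly that range. */
    static void updateRegion(Path file, long position, byte[] data) throws IOException {
        try (FileChannel channel = FileChannel.open(file,
                     StandardOpenOption.READ, StandardOpenOption.WRITE);
             FileLock lock = channel.lock(position, data.length, false)) { // false = exclusive
            ByteBuffer buffer = ByteBuffer.wrap(data);
            while (buffer.hasRemaining()) {
                channel.write(buffer, position + buffer.position());
            }
        }
    }

    /** Reads a byte range while holding a shared lock; bytes past end of file stay zero. */
    static byte[] readRegion(Path file, long position, int length) throws IOException {
        try (FileChannel channel = FileChannel.open(file, StandardOpenOption.READ);
             FileLock lock = channel.lock(position, length, true)) {       // true = shared
            ByteBuffer buffer = ByteBuffer.allocate(length);
            while (buffer.hasRemaining()
                    && channel.read(buffer, position + buffer.position()) != -1) {
                // keep reading until the region is filled or the file ends
            }
            return buffer.array();
        }
    }

    public static void main(String[] args) throws IOException {
        Path file = Files.createTempFile("region-lock", ".dat");
        try {
            Files.write(file, new byte[64]);
            updateRegion(file, 16, new byte[] {1, 2, 3, 4});
            System.out.println(Arrays.toString(readRegion(file, 16, 4)));
        } finally {
            Files.deleteIfExists(file);
        }
    }
}
```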

Stream I/O

Not all I/O is block-oriented, as described in previous sections. There is also stream I/O, which is modeled on a pipeline. The bytes of an I/O stream must be accessed sequentially. TTY (console) devices, printer ports, and network connections are common examples of streams.

Streams are generally, but not necessarily, slower than block devices and are often the source of intermittent input. Most operating systems allow streams to be placed into nonblocking mode, which permits a process to check if input is available on the stream without getting stuck if none is available at the moment. Such a capability allows a process to handle input as it arrives but perform other functions while the input stream is idle.
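Nonblocking mode is easy to see with a Pipe, which behaves like a stream. In the sketch below, a read on an idle nonblocking channel returns immediately with zero bytes instead of stalling the caller. The class and method names are illustrative only.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;

final class NonblockingPoll {
    /** Returns the number of bytes available right now (0 if none); never waits for input. */
    static int poll(Pipe.SourceChannel source, ByteBuffer buffer) throws IOException {
        source.configureBlocking(false); // from now on, read() returns instead of waiting
        return source.read(buffer);
    }

    public static void main(String[] args) throws IOException {
        Pipe pipe = Pipe.open();
        try {
            ByteBuffer buffer = ByteBuffer.allocate(64);
            System.out.println("idle stream: " + poll(pipe.source(), buffer));
            pipe.sink().write(ByteBuffer.wrap(new byte[] {1, 2, 3}));
            // On some platforms the data may take a moment to become visible to the reader.
            System.out.println("after a write: " + poll(pipe.source(), buffer));
        } finally {
            pipe.sink().close();
            pipe.source().close();
        }
    }
}
```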

A step beyond nonblocking mode is the ability to do readiness selection. This is similar to nonblocking mode (and is often built on top of nonblocking mode), but offloads the checking of whether a stream is ready to the operating system. The operating system can be told to watch a collection of streams and return an indication to the process of which of those streams are ready. This ability permits a process to multiplex many active streams using common code and a single thread by leveraging the readiness information returned by the operating system. This is widely used in network servers to handle large numbers of network connections. Readiness selection is essential for high-volume scaling.
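In NIO, readiness selection is the job of the Selector class. The following sketch registers several pipe sources with one selector and asks, in a single call, which of them have data waiting. The class and method names are assumptions made for the example; a real server would loop, read from each ready channel, and remove the handled keys from the selected set.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.util.Arrays;
import java.util.List;

final class ReadinessDemo {
    /**
     * Watches all sources with one selector and returns how many are readable.
     * timeoutMs must be positive: a timeout of zero means "wait indefinitely".
     */
    static int countReadable(List<Pipe.SourceChannel> sources, long timeoutMs)
            throws IOException {
        try (Selector selector = Selector.open()) {
            for (Pipe.SourceChannel source : sources) {
                source.configureBlocking(false); // channels must be nonblocking to register
                source.register(selector, SelectionKey.OP_READ);
            }
            selector.select(timeoutMs);          // the operating system does the watching
            int ready = 0;
            for (SelectionKey key : selector.selectedKeys()) {
                if (key.isReadable()) {
                    ready++;
                }
            }
            return ready;
        }
    }

    public static void main(String[] args) throws IOException {
        Pipe busy = Pipe.open();
        Pipe idle = Pipe.open();
        try {
            busy.sink().write(ByteBuffer.wrap(new byte[] {42}));
            int ready = countReadable(Arrays.asList(busy.source(), idle.source()), 1000);
            System.out.println(ready + " of 2 streams ready");
        } finally {
            busy.sink().close();
            busy.source().close();
            idle.sink().close();
            idle.source().close();
        }
    }
}
```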

Summary

This overview of system-level I/O is necessarily terse and incomplete. If you require more detailed information on the subject, consult a good reference — there are many available. A great place to start is the definitive operating-system textbook, Operating System Concepts, Sixth Edition, by my old boss Avi Silberschatz (John Wiley & Sons).

With the preceding overview, you should now have a pretty good idea of the subjects that will be covered in the following chapters. Armed with this knowledge, let’s move on to the heart of the matter: Java New I/O (NIO). Keep these concrete ideas in mind as you acquire the new abstractions of NIO. Understanding these basic ideas should make it easy to recognize the I/O capabilities modeled by the new classes.

We’re about to begin our Grand Tour of NIO. The bus is warmed up and ready to roll. Climb on board, settle in, get comfortable, and let’s get this show on the road.
