Chapter 1. King Canute and the Butterfly: How we create the illusion of being in control.

“If the doors of perception were cleansed every thing would appear to man as it is, infinite. For man has closed himself up, till he sees all things thro’ narrow chinks of his cavern.”

William Blake, The Marriage of Heaven and Hell

Imagine a scene in a movie. A quiet night on the ocean, on the deck of a magnificent ship, sailing dreamily into destiny. Moonlight reflects in a calm pond that stretches off into the distance, waves lap serenely against the bow of the ship, and – had there been crickets on the ocean, we would have heard that reassuring purring of the night to calm the senses.

The captain, replete with perfectly adjusted uniform, comes up to the night helmsman and asks: “How goes it, sailor?” To which the sailor replies: “No problem. All’s quiet, sir. Making a small course correction. Everything’s shipshape and under control.”

At this moment, the soundtrack stirs, swelling into darker tones, because we know that those famous last words are surely a sign of trouble in any Hollywood script.

At that very moment, the camera seems to dive into the helmsman’s body, swimming frantically along his arteries with his bloodstream to a cavernous opening, where we view a deadly parasite within him that will kill him within the hour. Then the camera pulls back out of him and pans out, rising above the ship, up into the air to an altitude at which the clear, still pond of the ocean seems to freckle and soon becomes obscured by clouds. The calm sea, it turns out, is just the trough of a massive wave that, miles away, reaches up to ten times the height of the ship, and is racing across the planet with imminent destruction written all over it. As we rise up, and zoom out of the detail, we see the edge of a massive storm, swirling with fierce intensity and wreaking havoc on what is now a hair’s breadth away on the screen. And then, pulling even farther out, just beyond the edge of the planet, is a swarm of meteorites, firing down onto the human realm, one of which is the size of Long Island (it’s always Long Island), and will soon wipe out all life on Earth. Picking up speed now, the camera zooms back and we see the solar system spinning around a fiery sun, and see stars and galaxies and we return to a calm serenity, where detail is mere shades of colour on a simple black canvas. Then the entire universe is swallowed into a black hole.

Shipshape and under control?

Well, don’t worry. This scene is not a prediction of anyone’s future, and hopefully you recognized the smirk of irony injected for humorous effect. What the imaginary scene is supposed to convey is that our perceptions of being ‘in control’ always have a lot to do with the scale at which we focus our attention—and, by implication, the information that is omitted. We sometimes think we are in control because we either don’t have or choose not to see the full picture.

Is this right or wrong?

That is one of the questions that I want to offer some perspectives on in this book. How much of the world can we really control and harness for our purpose? To make infrastructure, we need to make certain assurances. From the parody presented above, one has the sense that we can control some parts of the world, but that there are also forces beyond our control, ‘above’ and ‘below’ on the scale of things.

This makes eminent sense. The world, after all, is not a continuous and uniform space, it is made of bits and pieces, of enclosed regions and open expanses – and within this tangle of environments, there are many things going on about which we often have very limited knowledge. In technical language, we say that we have incomplete information about the world. This theme of missing information will be one of the central ideas in this book.

Some of the missing information is concealed by distance, some by obstacles standing in our way. Some is not available because it has not arrived yet, or it has since passed, and some of the information is just occurring on such a different scale that we are unable to comprehend it using the sensory apparatus that we are equipped with. Using a microscope we can see a little of what happens on a small scale, and using satellites and other remote tools we can capture imagery on a scale larger than ourselves, but when we are looking down the microscope we cannot see the clouds, and when we are looking through the satellite, we cannot perceive bacteria.

Truly, our capacity for taking in information is limited by scale in a number of ways, but we should not think for a moment that this is merely a human failing. There is more going on here.

Sometimes a lack of information doesn’t matter to predicting what comes next, but sometimes it does. Phenomena have differing sensitivities to information, and that information is passed on as an influence between things. So this sensitivity to information is not merely a weakness that applies to humans, like saying that we need better glasses: the ability to interact with the world varies hugely with different phenomena – and those interactions themselves are often sensitive to a preferred scale. We know this from physics itself, and it is fundamental, as we shall see in the chapters ahead.

Let’s consider for a moment why scale should play a role in how things interact. This will be important when we decide how to successfully build tools and systems for the world. Returning for a moment to our ship on the sea, we observe that the hull has a certain length and a certain width and a certain height, and the waves that strike it have a certain size. This is characterized by a wavelength (the distance between one wave and the next), and an amplitude (the mean height of the wave). Intuition alone should now tell us that waves that are high compared to the height of the ship will have a bigger impact on it when they hit the ship than waves that are only small compared to its size. Moreover, waves that are much shorter in length compared to the length and width of the ship will strike the ship more often than a long wave: bang bang bang instead of gradually rising and falling. Indeed, if a single wave were many kilometres in wavelength, the ship would scarcely notice that anything was happening as the wave passed it, even if the wave gradually lifted the ship a distance of three times its height. Relative scales in time and in space matter.

The ship is a moving, physical object, but could we not avoid the effects of scale by just watching a scene without touching anything? In fact, we can’t, because we cannot observe anything without interacting with it somehow. This was one of the key insights made by quantum theory founder Werner Heisenberg, at the beginning of the 20th century. He pointed out that, in order to transfer information from one object to another, we have to interact with it, and that we change it in the process. For instance, to measure the pressure of a tyre, you have to let some of the air out of the tyre into a pressure gauge, reducing the pressure slightly. There are no one-way interactions, only mutual interactions. In fact, the situation is quite similar to that of the waves on the ship, because all matter and energy in the universe has a wavelike nature.

Knowing your scales is a very practical problem for information infrastructure. To first create, and then maintain, information-rich services, we have to know how to extract accurate information and act on it cheaply, without making basic science errors. For example, to respond quickly to phone coverage demand in a flash crowd, you cannot measure the demand just twice daily and expect to capture what is going on. On the other hand, rechecking the catalogue of movies on an entertainment system every second would be a pointless excess.
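To make the point about sampling scales concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the `demand` function, its baseline of 100 and peak of 1000, the one-hour flash crowd at noon, and the chosen sampling times. It only shows that a probe which samples too coarsely can miss an event entirely.

```python
def demand(minute):
    """Hypothetical demand curve: flat baseline with a one-hour flash crowd at noon."""
    return 1000 if 12 * 60 <= minute < 13 * 60 else 100


def peak_seen(sample_interval_minutes, start=0):
    """Highest demand observed over one day when sampling at a fixed interval."""
    samples = range(start, 24 * 60, sample_interval_minutes)
    return max(demand(m) for m in samples)


# Sampling twice daily (06:00 and 18:00) never lands inside the flash crowd.
twice_daily_peak = peak_seen(12 * 60, start=6 * 60)

# Sampling every five minutes is fine enough to catch it.
five_minute_peak = peak_seen(5)

print(twice_daily_peak, five_minute_peak)
```

The sampling interval has to be comparable to, or shorter than, the duration of the thing we want to see. Sampling far more often than the signal changes adds cost without adding information.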

One has to use the right kind of probe to see the right level of detail. When scientists take pictures of atoms and microstructures, they use small wavelength waves like X-rays and electron waves, where the wavelength is comparable to the size of the atoms themselves. The situation is much like trying to feel the shape of tiny detail with your fingers. Think of a woollen sweater. Sweaters have many different patterns of stitching, but if you close your eyes and try to describe the shape of the stitching just by feeling it with your fat fingers, you would not be able to because each finger is wider than several threads. You can see the patterns with your eyes because light has a wavelength much smaller than the width of either your fingers or the wool.

Now suppose you could blow up the sweater to a much greater size (or equivalently shrink your fingers), so that the threads of wool were like ropes; then you would be able to feel the edges of each thread and sense when one thread goes under or over another. You would be able to describe a lot more information about it. Your interaction with the system of the sweater would be able to resolve detail and provide sufficient information4.

To develop a deep understanding of systems, we shall need to understand information in some depth, especially how it works at different scales. We’ll need to discuss control, expectation and stability, and how these things are affected by the incompleteness of the information we have about the world. We need to think bigger than computers, to the world itself as a computer, and we need to ask how complete and how certain is the information we rely on.

Information we perceive is limited by our ability to probe things as we interact with them—we are trapped between the characteristic scales of the observer and the observed. Infrastructure is limited in the same way. This is more than merely a biological limitation to be overcome, or a problem of a poorly designed instrument. In truth, we work around such limitations in ingenious ways all the time, but it is not just that. This limitation in our ability to perceive is also a benefit. We also use that limitation purposely as a tool to understand things, to form the illusion of mastery and control over a limited scale of things, because by being able to isolate only a part of the world, we reduce a hopeless problem to a manageable one.

We tune into a single frequency on the radio, we focus on a particular distance, zoom in or zoom out, looking at one scale at a time. We place things in categories, or boxes, files into folders, we divide up markets into stalls, and malls into shops, cities into buildings, and countries into counties, all to make our comprehension of the world more manageable by limiting the amount of information we have to interact with at any time. Our experience of the world can be made comprehensible or incomprehensible, by design.

Analogies will be helpful to us in understanding the many technical issues about information. Thinking back to the ship, our fateful helmsman, who reported that everything was under control, was sitting inside a ship, within a calm region of ocean with sensory devices that were unable to see the bigger picture. Without being distracted by the inner workings of his body, he was able to observe the ship and the ocean around him and steer the ship appropriately within this region. By being the size that he was, he could fit inside the safety of the ship, avoiding the cold of the night, and by fitting into the calm trough of the ocean’s mega-wave, the ship was able to be safely isolated from damage caused by the massive energies involved in lifting such an amount of water.

The mental model of the ship on the ocean seemed pretty stable to observers at that scale of things, with no great surprises. This allowed the ship to function in a controlled manner and for humans to perceive a sense of control in the local region around the ship, without being incapacitated by global knowledge of large scale reality. How would this experience translate into what we might expect for society’s information infrastructure?

Scale is thus both a limiter and a tool. By shutting out the bigger (or smaller) picture, we create a limited arena in which our actions seem to make a difference5. We say that our actions determine the outcome, or that the outcomes are deterministic. On a cosmic scale, this is pure hubris—matters might be wildly out of control in the grand scheme of things, indeed we have no way of even knowing what we don’t know; but that illusion of local order, free of significant threat, has a powerful effect on us. If fortune is the arrival of no unexpected surprises, then fortune is very much our ally, as humans, in surviving and manipulating the world around us.

The effect of limited information is that we perceive and build the world as a collection of containers, patches or environments, separated from one another by limited information flow. These structures define characteristic scales. In human society, we make countries, territories, shops, houses, families, tribes, towns, workplaces, parks, and recreation centres. They behave like units of organization, if not physically separated then at least de-marked from one another; and, within each, there are clusters of interaction, the molecules of human chemistry. Going beyond what humans have built, we have environments such as micro-climates, ponds, the atmosphere, the lithosphere, the magnetosphere, the atomic scale, the nuclear scale, the collision scale or mean free path, and so on. All of these features of the world that we identify can be seen as emerging from a simple principle: a finite range of influence, or limited transmission of information, relative to a certain phenomenon.

There are really two complementary issues at play here: perception and influence. We need to understand the effect that scale has upon these. The more details we can see, the less we have a sense of control. This is why layers of management in an organization tend to separate from the hands-on workers. It is not a class distinction, but a skill separation. In the semantic realm, this is called the separation of concerns, and it is not only a necessary consequence of loss of resolution due to scale, but also a strategy for staying sane6. Control seems then to be a combination of two things:

Control → Predictability + Interaction

To profit from interactions with the world, in particular the infrastructure we build, it has to be predictable enough to use to our advantage. If the keyboard I am typing on were continuously changing or falling apart, it would not be usable to me. I have to actually be able to interact with it—to touch it.

Rather than control, we may talk about certainty. How sure can we be of the outcomes of our actions? Later in the book, I will argue that we can say something like this:

Certainty → Knowledge + Information

where knowledge is a relationship to the history of what we’ve already observed in the past (i.e. an expectation of behaviour), and information is evidence of the present: that things are proceeding as expected.

Predictability and interaction: these foundations of control lie at the very heart of physics, and are the essence of information, but can we guarantee them in sufficient measure to build a world on top? Even supposing that one were able to arrange an island of calm, in which to assert sufficient force to change and manipulate the world, are we still guaranteed absolute control? Will infrastructure succeed in its purpose?

The age of the Enlightenment was the time of figures like Galileo Galilei (1564-1642) and Isaac Newton (1642-1727), philosophers who believed strongly in the idea of reason. During these times, there emerged a predominantly machine-like view of the world. This was in contrast with the views of Eastern philosophers. The world itself, Newton believed, existed essentially as a deterministic infrastructure for God’s own will. Man could merely aspire to understand and control using these perfect laws.

The concept of determinism captures the idea that cause and effect are tightly linked to bring certainty, i.e. that one action inevitably causes another action in a predictable fashion, to assure an outcome as if intended7. Before the upheavals of the 19th and 20th century discoveries, this seemed a reasonable enough view. After all, if you push something, it moves. If you hold it, it stops. Mechanical interaction itself was equated with control and perfectly deterministic outcome. Newton used his enormous skill and intellect to formalise these ideas.

The laws of geometry were amongst the major turning points of modern thinking that cheered on this belief in determinism. Seeing how simple geometric principles could be used to explain broad swathes of phenomena, many philosophers, including Newton, were inspired to mimic these properties to more general use. Thomas Hobbes (1588-1679) was one such man, and a figure whom we shall stumble across throughout this story of infrastructure. A secretary to Francis Bacon (1561-1626), one of the founders of scientific thinking, he attempted to codify principles to understand human cooperation, inspired by the power of such statements of truth as ‘two straight lines cannot enclose a space’. He dreamt not just of shaping technology, but society itself, by controlling it with law and reason.

Information, on the other hand, as an idea and as a commodity, played an inconspicuous role during this time of Enlightenment. It crept into science more slowly and circuitously than determinism, through bookkeeping ledgers of experimental observation, but also implicitly through the new theory for moving objects. Its presence, although incognito, was significant nonetheless in linking descriptive state with the behaviour of things. Information was the key to control, if only it could be mastered.

Laws, inspired by geometry then, began to enter science. Galileo’s law of inertia, which later became co-opted as Newton’s first law of motion, implicitly linked information and certainty with the physics of control, in a surprising way. It states that, unless acted upon by external forces, bodies continue in a state of rest or uniform motion in a straight line. This is basically a statement that bodies possess motional stability unless perturbed by external influences. Prior to this, it had seemed from everyday experience that one had to continually push something to make it move. The concepts of friction and dissipation were still unappreciated. Thus Newton’s insights took science from a general sense of motion being used up, like burned wood, to the idea that motion was a property that might be conserved and moved around, like money.

Newton’s first law claimed that, as long as no interactions were made with other bodies, there could be no payment, and thus motion would remain constant. Thus emerged a simple accounting principle for motion, which is what we now call energy. The concept of energy, as book-keeping information, was first used formally by German philosopher Gottfried Wilhelm Leibniz (1646-1716), Newton’s contemporary and rival in scientific thought, though Thomas Young is recorded as the first person to use the modern terminology in lectures to the Royal Society in 1802.

Newton’s second law of motion was a quantification of how these energy payments could be made between moving bodies. It provided the formula that described how transmission of a form of information altered a stable state of motion. The third law said that the transmission of influence between bodies had to result in a mutual change in both parties: what was given to one must be lost by the other. In other words, for every force given there is an equal and opposite back-reaction on the giver.

The notion of physical law emerging was that the world worked basically like a clockwork machine, with regular lawful and predictable behaviour, bookkeeping its transactions as if audited by God himself. It all worked through the constant and fixed infrastructure of a physical law. If one could only uncover all the details of that law, Man would have total knowledge of the future. Information could become a tool, a technology. This was Newton’s vision of God, and there is surely a clue in here to the search for certainty.

These thoughts were essential to our modern view of science. They painted a picture of a world happening, like a play, against a cosmic backdrop, with machine-like predictability. They still affect the way we view the separation of ‘system’ and ‘environment’, such as the activities of consumers or users from society’s background infrastructure. Universality of phenomena independent of environments allowed one to reason, infer and plan ahead.

When Galileo dropped balls of different mass from the Tower of Pisa in the 16th century, predicting that they would hit the ground at the same time, he codified this as a law of nature that all masses fall at the same rate8. The original experiment was said to have been a hammer and a feather9, which had not worked due to the air resistance of the feather. The experiment was repeatable and led to the promise that the same thing would happen again if the experiment were repeated. His experiment was repeated on the Moon by Apollo 15 astronaut David Scott, actually using a hammer and a feather. He proved that if one could eliminate the interfering factors, or separate the concerns, the rule remained true. (Note how the concept of promises seems to be relevant in forming expectations. This theme will return in Part III of the book.)

We might choose to take this continuity of physical behaviour as an axiom, but the example illustrates something else important: that what seems to be a law often needs to be clarified for the context in which it is made. On Earth, dropping a hammer and a feather would not have the same result because of the air resistance of the feather. In outer space, the lack of uniform gravitation would prevent the objects from falling at all. Had we simply made the promise (or more boldly, the ‘law’) that says hammers will fall at a constant acceleration, it would have been wrong. The way we isolate effects on a local scale and use them to infer general rules is a testament to the homogeneity of physical behaviour.
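The dependence of the falling 'law' on its context can be sketched numerically. The Python below is only an illustration under loud assumptions: air resistance is modelled as a drag force proportional to speed (a simplification), the masses, the drop height and the shared `drag_coeff` are invented values, and the function names are hypothetical. It compares the mass-independent vacuum result with a drag-affected fall.

```python
import math

G_EARTH = 9.81  # m/s^2, approximate surface gravity on Earth
G_MOON = 1.62   # m/s^2, approximate surface gravity on the Moon


def fall_time_vacuum(height, g):
    """Time to fall `height` metres from rest with no air: mass does not appear."""
    return math.sqrt(2.0 * height / g)


def fall_time_linear_drag(height, g, mass, drag_coeff):
    """Time to fall `height` metres from rest with drag force = drag_coeff * speed.

    Uses the closed-form distance y(t) = v_t * (t - tau * (1 - exp(-t / tau))),
    where tau = mass / drag_coeff and v_t = g * tau, and solves y(t) = height
    for t by bisection.
    """
    tau = mass / drag_coeff
    v_terminal = g * tau

    def distance(t):
        return v_terminal * (t - tau * (1.0 - math.exp(-t / tau)))

    lo, hi = 0.0, 1.0
    while distance(hi) < height:  # widen the bracket until it contains the answer
        hi *= 2.0
    for _ in range(100):          # bisect to well below any timing we care about
        mid = 0.5 * (lo + hi)
        if distance(mid) < height:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


HEIGHT = 1.5        # metres (assumed drop height)
HAMMER_MASS = 1.3   # kg (assumed)
FEATHER_MASS = 0.003  # kg (assumed)
DRAG = 0.01         # kg/s, assumed identical for both objects for simplicity

moon_time = fall_time_vacuum(HEIGHT, G_MOON)  # same for hammer and feather
hammer_time = fall_time_linear_drag(HEIGHT, G_EARTH, HAMMER_MASS, DRAG)
feather_time = fall_time_linear_drag(HEIGHT, G_EARTH, FEATHER_MASS, DRAG)

print(moon_time, hammer_time, feather_time)
```

In the vacuum case the mass never enters the formula, which is the idealized law. Once drag is included, the ratio of mass to drag sets a new characteristic time scale, and the light object falls noticeably more slowly than the heavy one.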

But how far does this go? Still there is the issue of scale. Is the world truly clockwork in its machinations, and at all scales, and in all contexts? During Newton’s time, many philosophers believed that it was10.

The desire to control is a compelling one that has seduced many minds over the years. A significant amount of effort is used to try to predict stock market prices, for instance, where millions of dollars in advantage can result from being ahead of competitors in the buying and selling of stocks. We desire an outcome, and we desire the ability to determine the outcome, so that we can control things. Yet this presupposes that the world is regular and predictable with the continuity of cause and effect. It presupposes that we can isolate multiple attempts to influence the behaviour of something so that the actual thing we do is the deciding factor for outcome, with no interference from outside. Noise and interference (radio noise, the weather, flocks of birds at airports, etc.) are constant forces to be reckoned with in building technologies for our use.

Shutting things into isolation, by separating concerns is the classic strategy to win apparent control from nature. This is reflected in the way laws of physics are formulated. For example: “A body continues in a state of rest or uniform motion in the absence of net external forces”. This is a very convenient idealization. In fact, there is no known place in the universe where a body could experience exactly no external force. The law is a fiction, or – as we prefer to say – a suitably idealized approximation to reality. Similarly, examples of Newtonian mechanics talk about perfectly smooth spheres, with massless wires and frictionless planes. Such things do not exist, but, had they existed, they would have made the laws so much simpler, separating out only the relevant or dominant concerns for a particular scale. Physics is thus a creative work of abstraction, far from the theory of everything that is sometimes claimed.

For about a century, then, determinism was assumed to exist and to be the first requirement to be able to exert precise control over the world. This has come to dominate our cultural attitudes towards control. Today, determinism is known to be fundamentally false, and yet the illusion of determinism is still clung onto with fervour in our human world of bulk materials, artificial environments, computers and information systems.

The impact of Newton and Leibniz on our modern day science, and everyday cultural understanding of the world, can hardly be overestimated. The clockwork universe they built is surely one of the most impressive displays of theoretical analysis in history. If we need further proof that science is culture, we only have to look at the way their ideas pervade every aspect of the way that we think about the world. Words like energy, momentum, force and reaction creep into everyday speech. But they are approximations.

The problem was this: the difficult problems in physics from Newton’s era were to do with the movements of celestial bodies, i.e. the physics of the very large. Seemingly deterministic methods could be developed to approximate these large scale questions, and today’s modern computers make possible astounding feats of prediction, like the Martian landings and the Voyager space probe’s journey through the solar system, with a very high degree of accuracy. What could be wrong with that?

The answer lay in the microscopic realm, and it would not be many years following Newton, before determinism began to show its cracks, and new methods of statistics had to be developed to deal with the uncertainties of observing even this large scale celestial world of tides and orbits. For a time science could ignore indeterminism. Two areas of physics defied this dominion of approximation, however, and shattered physicists’ views about the predictability of the natural world. The first was quantum theory, and the second was the weather.

The 20th century saw the greatest shift away from determinism and its clockwork view of the universe, towards explicitly non-deterministic ideas. It began with the development of statistical mechanics to understand the errors in celestial orbits, and the thermodynamics of gases and liquids that lay behind the ingenious innovations of the industrial revolution11. Following that, came the discovery that the physics of the very small could only be derived from a totally new kind of physical theory, one that did not refer to actual moving objects at all, but was instead about different states of information.

That matter is made up of atoms is probably known by everyone with even the most rudimentary education today. Many also know that the different atomic elements are composed of varying numbers of protons, neutrons and electrons, and that even more particles were discovered after these, to form part of a sizable menagerie of things in the microscopic world of matter. Although commonplace, this knowledge only emerged relatively recently in the 20th century, a mere hundred years ago, and yet its impact on our culture has been immense12. What is less well known is that these microscopic parts of nature are governed by the dynamics of states rather than smooth motion.

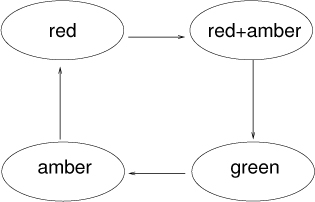

A state is a very peculiar idea, if you are used to thinking about particles and bodies in motion, but it is one that has, more recently, become central in information technology. A state describes something like a signal on a traffic light, i.e. its different colour combinations. We say that a traffic light can exist in a number of allowed states.

For example, in many countries, there are three allowed states: red, amber and green. In the UK and some European and Commonwealth countries, however, it can exist in one of four states: red, amber, red-amber, and green. The state of the system undergoes transitions: from green to amber to red, for instance.

Figure 1-1. The traffic light cycle is made up of four distinct states. It gives us a notion of orbital stability in a discrete system of states, to which we return in the next chapter.
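The idea of a system that moves between a fixed set of allowed states can be written down directly. The following Python sketch encodes the four-state cycle as a transition table. The names `UK_CYCLE` and `run` are invented for this illustration, and the table is one simple way of representing states and transitions, not the only one.

```python
# Each allowed state maps to the state that follows it in the cycle.
UK_CYCLE = {
    "red": "red-amber",
    "red-amber": "green",
    "green": "amber",
    "amber": "red",
}


def run(state, steps, transitions=UK_CYCLE):
    """Return the list of states visited, starting from `state`."""
    visited = [state]
    for _ in range(steps):
        state = transitions[state]  # a transition is just a table lookup
        visited.append(state)
    return visited


print(run("green", 2))  # green, then amber, then red
print(run("red", 4))    # one full cycle, ending back at red
```

Nothing in this description refers to smooth motion. The system is defined entirely by which states are allowed and which transitions connect them, and after four steps it returns to where it started.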

What quantum science tells us is that subatomic particles like electrons are things that exist in certain states. Their observable attributes are influenced (but not determined) by states that are hidden somewhere from view. Even attributes like the positions of the particles are unknown a priori, and seem to be influenced by these states. At the quantum level, particles seem to live everywhere and nowhere. Only when we observe them, do we see a snapshot outcome. Moreover, instead of having just three or four states, particles might have a very large number, and the changes in them only determine the probability that we might be able to observe the state in the particle.

It is as if we cannot see the colour of nature’s states directly, we can only infer them indirectly by watching when the traffic starts and stops. These are very strange ideas, nothing like the clockwork universe of Newton.

The history of these discoveries was of the greatest significance, gnawing at the very roots of our world view. It marked a shift from describing outcomes in terms of forces and inevitable laws, to using sampled information and chance. The classical notion of a localized point-like particle was obliterated leaving a view based just on information about observable states. The concept of a particle was still ‘something observable’, but we know little more than that. Amazingly, the genius of quantum mechanics has been that we also don’t need to understand the details to make predictions13. We can get along without knowing what might be happening ‘deep down’. Although a bit unnerving, knowing that we have to let go of determinism has not brought the world to a standstill.

There are lessons to be learnt from this, about how to handle the kind of uncertainties we find today in modern information infrastructure, as its complexity pushes it beyond the reach of simple methods of control.

The way ‘control’ emerges in a quantum mechanical sense is in the manipulation of guard-rails or constraining walls, forces called potentials: containers that limit the probable range of electrons to an approximately predictable region. This is not control, but loading the dice by throwing other dice at them. Similarly, when building technologies to deal with uncertainty, we must use similar ideas of constraint.

We don’t experience the strangeness of the quantum world in our lives because, at the coarse scale of the human eye, of many millions of atoms, the bizarre quantum behaviour evens out—just as the waves lapping onto our ship were dwarfed by the larger features of the ocean when zooming out. We can expect the same thing to happen in information systems too. As our technology has become miniaturized to increasingly atomic dimensions, the same scaling effect is happening effectively in our information infrastructure today, thanks to increased speed and traffic density.

Determinism is thus displaced, in the modern world, from being imagined as the clockwork mechanism of Newtonian ‘physical law’, to merely representing the intent to measure: the interaction with the observer14. This is a symptom of a much deeper shift that has shaken science from a description in terms of ballistic forces to one of message passing, i.e. an information based perspective. We’ll return further to this issue and describe what this means for infrastructure in Chapter 3.

Our modern theory of matter in the universe is a model that expressly disallows precise knowledge of its internal machinery. Quantum Mechanics, and its many founders15 throughout the first half of the 20th century, showed that our earlier understanding of the smallest pieces of the world was deeply flawed, and only seemed to be true at the scale of the macroscopic world. Once we zoom in to look more closely, things look very different. Nevertheless, we can live with this lack of knowledge and make progress. The same is true of modelling information infrastructure, if we only understand the scales in the right way. This presents a very different challenge to building technology: instead of dealing with absolute certainty, we are forced to make the best of unavoidable uncertainty16.

But there is more. As if the quantum theory of the very small weren’t enough, there was a further blow to determinism in the 1960s, not from quantum theory this time, but rather from the limits of information on a macroscopic scale. These limits revealed themselves during the solving of complicated hydrodynamic and fluid mechanical models, by the new generation of digital computers, for the more mundane purpose of the weather forecast.

What makes weather forecasting difficult is physics itself. The physics of the weather is considerably harder than the physics of planetary motion. Why? The simple answer is scale. In planetary motion, planets and space probes interact weakly by gravitational attraction. The force of attraction is very weak, which has the effect that planets experience a kind of calm ocean effect, like our imaginary ship. What each celestial body experiences is very predictable, and the time-scales over which major changes occur are long in most cases of interest.

The atmosphere of a planet is a thin layer of gases and water, frothing and swirling in constant motion. Gas and liquid are called fluids, because they flow (they are not solids). They experience collisions (pressure), random motion (temperature) and even internal friction and stickiness (viscosity). Fluids can be like water or like treacle, and unlike planets their properties change depending on how warm they are. The whole of thermodynamics and the industrial revolution depended on these qualities. The timescales of warming and cooling and movement of the atmosphere are similar to the times over which large bodies of fluid move. All of this makes the weather a very complex fluid dynamics problem.

Now, if we zoom in with a microscope, down to the level of molecules, the world looks a little bit like a bunch of planets in motion, but only superficially. A gas consists of point-like bodies flying around. So why is a fluid so much harder to understand? The answer is, again, the scales at work. In a gas, there are billions of times more bodies flying around than in a solar system, and they weigh basically nothing compared to a planet. Molecules in a gas are strongly impacted when sunlight shines onto them – because they are so light, they get a significant kick from a single ray of light. A planet, on the other hand, is affected only infinitesimally by the impact of light from the sun.

So when we model fluids, we have to use methods that can handle all of these complex, strong interactions. When we model planets, we can ignore all of those effects as if they were small waves lapping against a huge ship. The asteroid belt is the one feature of our solar system that one might consider modelling as a kind of fluid. It contains many bodies in constant, random motion, within a constrained gravitational field. However, even the asteroid belt is not affected by the kind of sudden changes of external force that our atmosphere experiences. All of these things conspire to make the weather an extremely difficult phenomenon to understand. We sometimes call this chaos.

The Navier-Stokes equations for fluid motion were developed between the 1820s and the 1840s by scientists Claude-Louis Navier (1785-1836) and George Gabriel Stokes (1819-1903), on the basis of a mechanical model for idealized incompressible fluids. They are used extensively in industry as well as in video games to simulate the flowing of simple and complex mixtures of fluids through pipes, as well as convection cells in houses and in the atmosphere. As you might imagine, the equations describing the dynamics of fluids and all of their strongly interacting parts are unlike those for planetary motion, and contain so-called non-linear parts. This makes solving problems in fluid mechanics orders of magnitude more difficult than for planetary motion, even with the help of computers. The reason for this is rather interesting.

The difficulty with fluid mechanics was that its tightly coupled parts made computational accuracy extremely important. The mixing of scales in the Navier-Stokes equations was the issue. When phenomena have strongly interacting parts, understanding their behaviour is hard, because the details at one scale can directly affect the details at another scale. The result is that answers become very sensitive to the accuracy of the calculations. If couplings in systems are only weak, then a small error in one part will not affect another part very strongly and approximations work well. But in a strongly connected system, small errors get amplified. For example, planets are only weakly coupled to one another, so an earthquake in California (which is a small scale phenomenon) does not affect the orbit of the Moon (a large scale phenomenon).

Suppose our ship was not floating on the water with a propeller, but being pushed across the water by a metal shaft from the land, like a piston engine. Then the ship and the land would have been strongly coupled, and what happened to one would be immediately transmitted back to the other. Another way of putting it is that what would have remained short-range and local to the ship, suddenly attained a long-range effect. So long- and short-scale behaviour would not separate.

The coupling in a fluid is not quite as strong as a metal shaft, between every atom, but it is somewhere in between that and a collection of weakly interacting planets. This means that the separation of scales does not happen in a neat, convenient way.

What does all this have to do with the weather forecast? Well, the simple answer is that it made calculations and modelling of the weather unreliable. In fact, the effects of all of this strong coupling came very graphically into the public consciousness when another scientist in the 1960s tried to use the Navier-Stokes equations to study convection of the atmosphere. American mathematician and meteorologist Edward Lorenz (1917-2008) developed a set of equations, naturally called the Lorenz equations, to model two dimensional convection, derived with the so-called Boussinesq approximation to Navier-Stokes. On the surface of the Earth, which is two dimensional, convection is responsible for driving many of the processes that lead to the weather.

The Lorenz equations, which look deceptively simple compared to the Navier-Stokes equations, are a set of mutually modulating differential equations which, through their coupling, become non-linear. Certain physical processes are referred to as having non-linear behaviour, which means that the graph of their response is not a straight line, giving them an amplifying effect. The response of such a process to a small disturbance is disproportionately larger than the disturbance itself, like when you whisper into a microphone that is connected to a huge public address system and blow back the hairstyles of your audience. Disproportionately means, again, not a proportional straight-line relationship, but a superlinear curve which is therefore ‘non-linear’.
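For reference, the Lorenz equations are usually written in the following form. The symbols are the conventional ones and do not come from this chapter: x, y and z are the three variables of the model, and σ, ρ and β are fixed parameters.

```latex
\begin{aligned}
\frac{dx}{dt} &= \sigma\,(y - x),\\
\frac{dy}{dt} &= x\,(\rho - z) - y,\\
\frac{dz}{dt} &= x\,y - \beta\,z.
\end{aligned}
```

The products xz and xy are the non-linear parts: each variable's rate of change depends on the others multiplied together, which is the mutual modulation described above.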

Non-linearity makes amplification of effect much harder to keep track of because it amplifies different quantities by different amounts, and makes computations inaccurate in inconsistent ways. When you combine those results later, the resulting error is even harder to gauge and so the problems pile up. Everything is deterministic all the way, but neither humans nor computers can work to unlimited accuracy, and the methods of computation involve approximation that gets steadily worse. The problem is not determinism but instability.
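This instability can be seen in a small numerical experiment. The sketch below integrates the Lorenz system twice, from starting points that differ by one part in a billion, and records how far apart the two runs drift. It is an illustration under stated assumptions, not Lorenz's original calculation: the parameter values (10, 28 and 8/3) are the ones conventionally used to show chaotic behaviour, the fourth-order Runge-Kutta stepping scheme, step size and starting point are arbitrary choices, and all function names are hypothetical.

```python
import math


def lorenz(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Right-hand side of the Lorenz equations (conventional parameters)."""
    x, y, z = state
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)


def rk4_step(state, dt):
    """Advance the state by one fourth-order Runge-Kutta step of size dt."""
    def shifted(s, k, h):
        return tuple(si + h * ki for si, ki in zip(s, k))

    k1 = lorenz(state)
    k2 = lorenz(shifted(state, k1, dt / 2))
    k3 = lorenz(shifted(state, k2, dt / 2))
    k4 = lorenz(shifted(state, k3, dt))
    return tuple(
        s + dt / 6 * (a + 2 * b + 2 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )


def separations(eps=1e-9, dt=0.01, steps=4000):
    """Distance between two runs whose starting points differ by eps."""
    a = (1.0, 1.0, 1.0)        # arbitrary starting point (assumption)
    b = (1.0 + eps, 1.0, 1.0)  # the same point, nudged very slightly
    out = [math.dist(a, b)]
    for _ in range(steps):
        a = rk4_step(a, dt)
        b = rk4_step(b, dt)
        out.append(math.dist(a, b))
    return out


if __name__ == "__main__":
    seps = separations()
    # Print the separation at a few moments during the run.
    for step in (0, 1000, 2000, 3000, 4000):
        print(step, seps[step])
```

Both runs are fully deterministic, yet the tiny initial difference grows until the two trajectories bear no resemblance to one another. A rounding error in a real calculation plays the same role as the nudge used here.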

When not much is happening in the weather, the calculational approximation is good and we can be more certain of an accurate answer, but when there is a lot of change, amplification of error gets worse and worse. That is why predicting the weather is hard. If it looks like there will be no change, that is a reliable prediction. But if it looks like change is afoot (which is what we are really interested in knowing), then our chances of getting the calculations right are much smaller.

Lorenz made popular the notion of the butterfly effect, which is well known these days as an illustration of chaos theory. It has appeared in popular culture in films and literature for many years17. The butterfly effect suggests somewhat whimsically that a delicate and tiny movement like the flapping of a butterfly wing in the Amazon rain forest could, through the non-linear amplification of the weather processes, be imagined to lead to a devastating storm or cataclysm18 on the other side of the planet. Such is the strength of the coupling – as if an earthquake could shake the Moon. Although his point was meant as an amusing parody of non-linearity, it made a compelling image that popularized the notion of the amplification of small effects into large ones.

Strong coupling turns out to be a particular problem in computer-based infrastructure, though this point needs further explanation in the chapters to come. Chaos is easily contained, given the nature of information systems, yet systems are often pushed beyond the brink of instability. We do not escape from uncertainty so easily.

Prediction is an important aspect of control, but what about our ability to influence the world? You can’t adjust the weather with a screwdriver, or tighten a screw with a fan. That tells us there are scale limitations to influence too. I would like to close this section with a story about the effect of scale on control.

The presumably apocryphal tale of King Canute, or Knut the Great, who was a king of Denmark, England, Norway, and parts of Sweden shortly after the close of the first millennium, is well known to many as a fable of futility in the face of a desire to control. Henry of Huntingdon, the 12th century chronicler, told how Canute set his throne on the beach and commanded the waves to stay back. Then, as the waves rolled relentlessly ashore, the tide unaffected by his thought processes, or the stamping of his feet, Canute declared: “Let all men know how empty and worthless is the power of kings!”

Told in this way, with a human pitting himself against a force of nature that we are all familiar with, the story has a certain power to capture our imaginations. It seems like an obvious lesson, and we laugh at the idea of King Canute and his audacious stunt, but we should hold our breaths. The same mistake is being made every day, somewhere in the world. Our need to control is visceral, and we go to any lengths to try it by brute force, before reason wins the day.

Many of us truly believe that brute force is the answer to such problems: that if we can just apply sufficient force, we will conquer any problem. The lessons of non-predictability count for little if you don’t know them. Force can be an effective tool in certain situations, but scale plays a more important role.

Canute could not hold back the ocean because he had no influential tools on the right scale. Mere thought does not interact with anything outside of our brains, naturally, so it had little hope of holding back the water. As a man, he could certainly have held back water. He had sufficient force—after all, we are all mostly made up of water, so holding back water requires about the same amount of force as holding back other people. However, unlike other people, water is composed of much smaller pieces that can easily flow around a man. Moreover, the sheer size of the body of water would have simply run over him as a finger slides over woollen threads – the water would barely have noticed his presence. A futile mismatch of scales – he could never even have used the force he was able to exert against an army because the interaction between a solid man and liquid water is rendered so weak as to be utterly ineffective.

As humans, we quickly forget natural disasters after they have happened and go back to believing we are in control19. Control is important to us. We talk about it a lot. It is ultimately connected with our notions of free will, of intent and of purpose. We associate it with our personal identity, and our very survival as a species. We have come to define ourselves as a creative species, and one that has learnt to master its environments. Much of that ingenuity gives us a sense of control.

A central theme of this chapter is that control is about information, and the scale or resolution at which we can perceive it. Surely, using today’s information systems, we could build a machine to do what Canute could not? If not actually hold back the tide, then at least adapt to it so quickly as to keep dry in some smart manner? Couldn’t windows clean themselves? Couldn’t smart streets in developing countries clear stagnant water and deploy agents to fight off malaria and other diseases? Couldn’t smart environments ward off forces on a scale that we as individuals cannot address? These things might be very possible indeed, but would they be stable? The bounds of human ingenuity are truly great, but if such things are possible, it still raises an important question: would such smart technologies be stable to such a degree that we could rely on them to be safe?20

Now think one step further than this, not to a modified natural world, but to the artificial worlds of our own creation: our software and information systems. These systems are coupled to almost every aspect of human survival today. They have woven themselves into the fabric of society in the so-called developed world and we now depend on them for our survival, just like our physical environment. Are these systems stable and reliable?

In a sense, they are precisely what King Canute could not accomplish: systems that hold back a deluge of communication and data records, on which the world floats, from overwhelming us, neutralizing information processing tasks that we might have tried to accomplish by brute force in earlier generations, or could not have done at all without their help. Even if it is a problem of our own making, without computers we would truly drown in the information that our modern way of living needs to function.

What can we say about the stability of our modern IT infrastructure? Software systems do not obey the laws of physics, even though they run on machines that do. The laws of behaviour in the realm of computers are not directly related to the physical laws just discussed. Nevertheless, the principles we’ve been describing here, of scale and determinism, must still apply, because those principles are about information. Only the realization of the principles will be different.

Scale matters.

Will our future, information-infused world be safe and reliable? Will it be a calm moonlit ocean or a chaotic mushroom cloud? The answer to these questions is: it will be what we make it, but subject to the limitations of scale and complexity. It will depend on how stable and reliable the environment around it is. So we must ask: are the circumstances around our IT systems (i.e. the dominant forces and flows of information at play) sufficiently predictable that we can maneuver reliably within their boundaries? If so, we will feel in control, but there are always surprises in store—the freak waves, the unexpected flapping of a butterfly’s wings in an unwittingly laid trap of our own making. How can we know?

Well, we go in search of certainty, and the quest begins with the key idea of the first part of this book: stability.
