AOL

Figure 17-6. http://www.aol.com

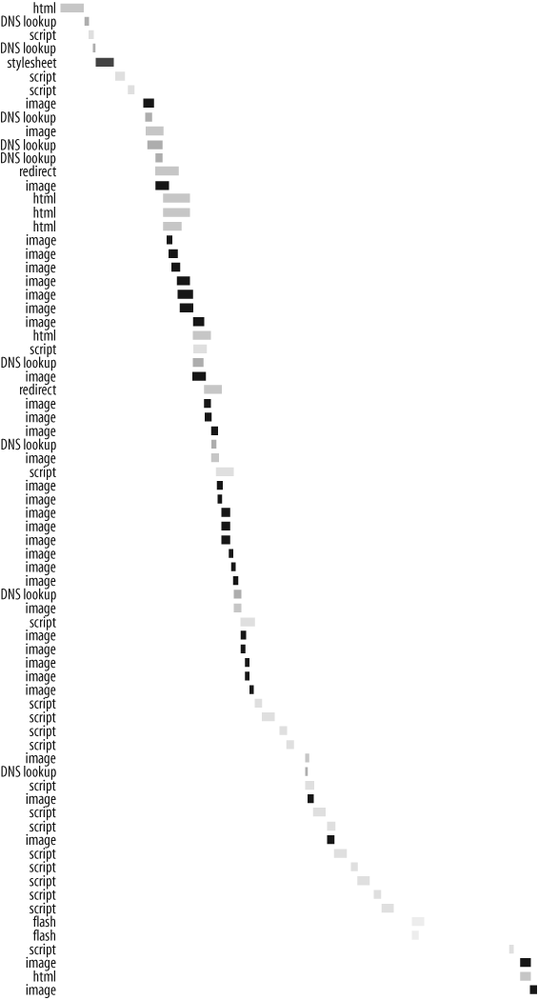

The HTTP requests for AOL (http://www.aol.com) show a high degree of parallelization in the first half of the page load, but in the second half the requests are made sequentially (see Figure 17-7), which increases the page load time. Two implementation details are interesting here: the downgrade to HTTP/1.0 and the use of multiple scripts.

Figure 17-7. AOL HTTP requests

In the first half, where there is greater parallelization, the responses have been downgraded from HTTP/1.1 to HTTP/1.0. I discovered this by looking at the HTTP headers, where the request line specifies HTTP/1.1, whereas the response status line states HTTP/1.0.

GET /_media/aolp_v21/bctrl.gif HTTP/1.1
Host: www.aolcdn.com

HTTP/1.0 200 OK
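The same comparison can be automated when scanning captured headers. The following is a minimal sketch in Python, not a tool used for this analysis: the function names `parse_version`, `request_version`, `response_version`, and `is_downgraded` are hypothetical, and the code assumes well-formed request and status lines like the ones shown above.

```python
from typing import Tuple


def parse_version(token: str) -> Tuple[int, int]:
    """Turn a token such as 'HTTP/1.1' into a comparable (major, minor) pair."""
    if not token.startswith("HTTP/"):
        raise ValueError(f"not an HTTP version token: {token!r}")
    major, _, minor = token[len("HTTP/"):].partition(".")
    return int(major), int(minor)


def request_version(request_line: str) -> Tuple[int, int]:
    # In a request line the version is the last field: METHOD PATH VERSION
    return parse_version(request_line.split()[-1])


def response_version(status_line: str) -> Tuple[int, int]:
    # In a status line the version is the first field: VERSION CODE REASON
    return parse_version(status_line.split()[0])


def is_downgraded(request_line: str, status_line: str) -> bool:
    """True when the response states a lower HTTP version than the request."""
    return response_version(status_line) < request_version(request_line)


# The request and response lines from the AOL example above.
print(is_downgraded("GET /_media/aolp_v21/bctrl.gif HTTP/1.1", "HTTP/1.0 200 OK"))
```

For the AOL headers this reports a downgrade, because the response version (1, 0) compares lower than the request version (1, 1).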

For HTTP/1.0 responses, browsers typically allow up to four parallel downloads per hostname, versus the HTTP/1.1 specification's guideline of two per hostname. Greater parallelization is achieved as a result of the web server downgrading the HTTP version in the response.
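To get a rough feel for what these limits mean, the sketch below counts how many sequential "rounds" of downloads a single hostname would need under each limit. It is a deliberately simplified model, not a measurement: it assumes every resource takes the same time, that each batch completes before the next begins, and that all resources come from one hostname. The names `PARALLEL_LIMIT` and `download_rounds` are hypothetical.

```python
import math

# Per-hostname parallel download limits quoted in the text above.
# Actual browser behavior varies; these values are only for illustration.
PARALLEL_LIMIT = {"HTTP/1.0": 4, "HTTP/1.1": 2}


def download_rounds(resource_count: int, response_version: str) -> int:
    """Number of sequential batches needed to fetch all resources from one hostname."""
    limit = PARALLEL_LIMIT[response_version]
    return math.ceil(resource_count / limit)


print(download_rounds(8, "HTTP/1.0"))  # 2
print(download_rounds(8, "HTTP/1.1"))  # 4
```

Under these assumptions, eight resources need two rounds at four per hostname but four rounds at two per hostname. The model ignores connection setup cost, which is where persistent connections change the picture.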

Typically, I've seen this result from outdated server configurations, but it's also possible that it's done intentionally to increase the amount of parallel downloading. At Yahoo!, we tested this, but determined that HTTP/1.1 had better overall performance because it supports persistent connections by default (see the ...
