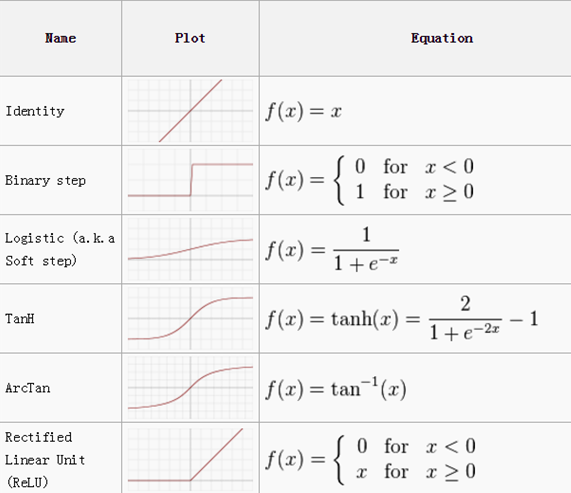

So far, we have used only the sigmoid activation function in the hidden layer. However, several other activation functions are useful when building a neural network. This chart gives the details of various activation functions:

The most commonly used activation functions are ReLU, tanh, and the logistic (sigmoid) function.
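For reference, these three functions are `sigmoid(x) = 1 / (1 + e^(-x))`, `tanh(x)`, and `relu(x) = max(0, x)`. The following is a minimal sketch in plain Python (the standard `math` module, not any particular deep-learning framework) of each function together with the derivative that backpropagation multiplies through a hidden layer:

```python
import math

def sigmoid(x: float) -> float:
    # Logistic function: maps any real input into (0, 1).
    # Split on the sign of x so math.exp never overflows for large |x|.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def tanh(x: float) -> float:
    # Hyperbolic tangent: maps input into (-1, 1) and is zero-centred
    return math.tanh(x)

def relu(x: float) -> float:
    # Rectified linear unit: passes positive values through, zeroes out negatives
    return max(0.0, x)

# Derivatives with respect to the input, as used during backpropagation
def sigmoid_grad(x: float) -> float:
    s = sigmoid(x)
    return s * (1.0 - s)          # peaks at 0.25 when x == 0

def tanh_grad(x: float) -> float:
    return 1.0 - math.tanh(x) ** 2  # peaks at 1.0 when x == 0

def relu_grad(x: float) -> float:
    # ReLU is not differentiable at 0; using 0 there is a common convention
    return 1.0 if x > 0 else 0.0

for x in (-2.0, 0.0, 2.0):
    print(f"x={x:+.1f}  sigmoid={sigmoid(x):.3f}  tanh={tanh(x):+.3f}  relu={relu(x):.1f}")
```

Note that the sigmoid gradient never exceeds 0.25 and the tanh gradient never exceeds 1, whereas the ReLU gradient is exactly 1 for every positive input. This difference is one reason the choice of hidden-layer activation can change how well a network trains.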

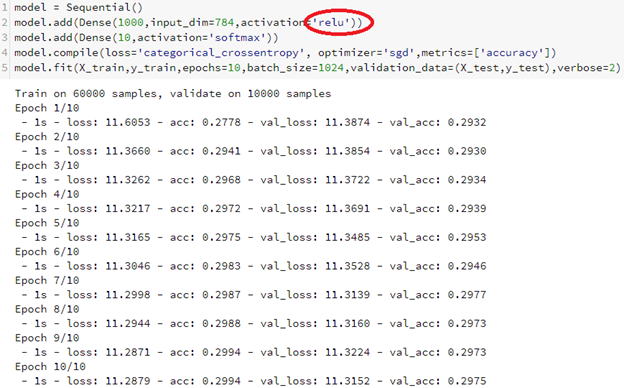

Let's explore the accuracy on the test dataset for various activation functions:
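The code that produced the accuracies reported in the text is not part of this excerpt. As a rough, self-contained stand-in, this pure-Python sketch trains the same one-hidden-layer network three times, changing only the hidden-layer activation, and reports test accuracy for each. The toy dataset (`make_data`), hidden-layer size, learning rate, and epoch count are all hypothetical choices made for illustration, so its numbers will not match the figures quoted in the text.

```python
import math
import random

def sigmoid(z: float) -> float:
    # Logistic function written via tanh so large |z| never overflows
    return 0.5 * (1.0 + math.tanh(0.5 * z))

# Each entry: (activation, derivative with respect to the pre-activation z)
ACTIVATIONS = {
    "sigmoid": (sigmoid, lambda z: sigmoid(z) * (1.0 - sigmoid(z))),
    "tanh": (math.tanh, lambda z: 1.0 - math.tanh(z) ** 2),
    "relu": (lambda z: max(0.0, z), lambda z: 1.0 if z > 0 else 0.0),
}

def make_data(n, rng):
    # Hypothetical toy dataset: 2-D points labelled 1 when both coordinates share a sign
    data = []
    for _ in range(n):
        x1, x2 = rng.uniform(-1, 1), rng.uniform(-1, 1)
        data.append(((x1, x2), 1.0 if x1 * x2 > 0 else 0.0))
    return data

def train_and_score(act_name, train, test, hidden=8, epochs=60, lr=0.1, seed=0):
    act, act_grad = ACTIVATIONS[act_name]
    rng = random.Random(seed)  # same seed -> same initial weights for every activation
    W1 = [[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    W2 = [rng.gauss(0, 1) for _ in range(hidden)]
    b2 = [0.0]  # kept in a list so forward() sees in-place updates

    def forward(x):
        # Hidden layer uses the chosen activation; output is sigmoid for binary labels
        z1 = [W1[j][0] * x[0] + W1[j][1] * x[1] + b1[j] for j in range(hidden)]
        h = [act(z) for z in z1]
        p = sigmoid(sum(W2[j] * h[j] for j in range(hidden)) + b2[0])
        return z1, h, p

    for _ in range(epochs):
        for x, y in train:  # plain per-sample stochastic gradient descent
            z1, h, p = forward(x)
            d_out = p - y  # gradient of binary cross-entropy w.r.t. output pre-activation
            for j in range(hidden):
                d_hid = d_out * W2[j] * act_grad(z1[j])  # uses W2[j] before updating it
                W2[j] -= lr * d_out * h[j]
                W1[j][0] -= lr * d_hid * x[0]
                W1[j][1] -= lr * d_hid * x[1]
                b1[j] -= lr * d_hid
            b2[0] -= lr * d_out

    correct = sum((forward(x)[2] >= 0.5) == (y == 1.0) for x, y in test)
    return correct / len(test)

rng = random.Random(42)
train_set, test_set = make_data(200, rng), make_data(100, rng)
for name in ACTIVATIONS:
    print(f"{name:>7}: test accuracy {train_and_score(name, train_set, test_set):.1%}")
```

Holding everything except the activation fixed (same data, same seed, and therefore the same initial weights) is what makes the comparison meaningful. On a problem this small, the resulting accuracies may still shift noticeably with the learning rate, initialization scale, or number of epochs.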

Note that the accuracy on the test dataset is a mere 29.75% when using ReLU activation.

However, while ...