Another way to reduce overfitting is the dropout technique. During training, each forward pass randomly switches off (sets to zero) a fraction of a layer's activations. The weights connected to the dropped units therefore receive no gradient and are left out of the weight update in that iteration, hence the name dropout.

Dropout reduces overfitting because a different random subset of units is dropped in every iteration. The network cannot let its output depend on only a few input values, since any of them may be missing on a given step, so it has to spread what it learns across many weights.
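To make the mechanism concrete, here is a minimal sketch in plain Python, with no framework assumed. It uses the common "inverted dropout" variant, which scales the surviving activations by 1/(1-p) during training so that nothing needs to change at inference time. The function names `dropout_forward` and `dropout_backward`, the drop probability, and the example activations are hypothetical and chosen only for illustration.

```python
import random

def dropout_forward(activations, p, rng, training=True):
    """Inverted dropout (an assumed variant): zero each activation with
    probability p and scale the survivors by 1/(1-p), so the expected value
    of every unit is unchanged. Returns the outputs and the mask used."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        # At inference (or with p = 0) dropout does nothing.
        return list(activations), [1.0] * len(activations)
    keep = 1.0 - p
    # A fresh random mask is drawn on every call, i.e. every training step.
    mask = [1.0 / keep if rng.random() >= p else 0.0 for _ in activations]
    outputs = [a * m for a, m in zip(activations, mask)]
    return outputs, mask

def dropout_backward(upstream_grad, mask):
    # Gradients flow only through the units that were kept. Dropped units
    # pass back zero, so the weights feeding them get no update this step.
    return [g * m for g, m in zip(upstream_grad, mask)]

rng = random.Random(0)
hidden = [0.5, 1.2, -0.3, 2.0, 0.8, -1.1]  # hypothetical layer activations

for step in range(3):
    out, mask = dropout_forward(hidden, p=0.5, rng=rng)
    grad = dropout_backward([1.0] * len(hidden), mask)
    dropped = [i for i, m in enumerate(mask) if m == 0.0]
    print(f"step {step}: dropped units {dropped}, grads {grad}")

eval_out, _ = dropout_forward(hidden, p=0.5, rng=rng, training=False)
print("eval:", eval_out)
```

Running it shows a different set of dropped units on each step, which is exactly why no single input can dominate the output. Frameworks package the same idea as a layer; in PyTorch, for example, `torch.nn.Dropout` is active in `model.train()` mode and passes inputs through unchanged in `model.eval()` mode.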

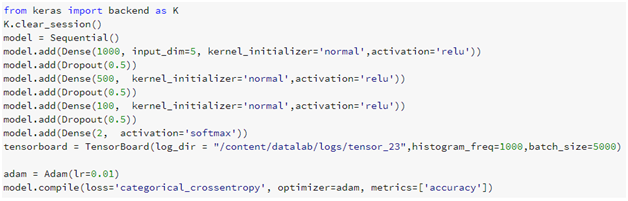

Dropout can be implemented as follows:

The result ...