In a regression technique, we minimize the overall squared error. In a classification technique, we instead minimize the overall cross-entropy error.

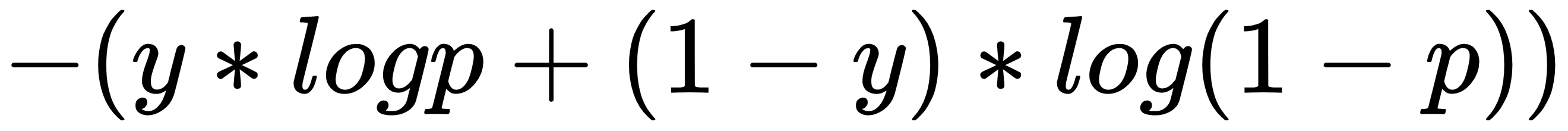

A binary cross-entropy error is as follows:

In the given equation:

- y is the actual value of the dependent variable (0 or 1)

- p is the predicted probability of the event happening (that is, of y being 1)
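
To make the definitions of y and p concrete, here is a minimal sketch in Python. It assumes the standard form of binary cross-entropy, -(y log(p) + (1 - y) log(1 - p)), averaged over all observations. The function name `binary_cross_entropy`, the clipping constant `eps`, and the sample values are illustrative assumptions, not taken from the text.

```python
import math

def binary_cross_entropy(y_true, p_pred, eps=1e-12):
    """Mean binary cross-entropy over paired labels and predicted probabilities.

    Assumes the standard form -(y*log(p) + (1-y)*log(1-p)); `eps` is an
    illustrative guard, not part of the definition.
    """
    total = 0.0
    for y, p in zip(y_true, p_pred):
        # Clip p away from 0 and 1 so log() never receives 0.
        p = min(max(p, eps), 1.0 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)

# Hypothetical labels and two sets of predicted probabilities.
y = [1, 0, 1, 0]
confident = [0.9, 0.1, 0.8, 0.2]  # close to the actual labels
unsure = [0.6, 0.4, 0.5, 0.5]     # close to 0.5 everywhere

print(binary_cross_entropy(y, confident))
print(binary_cross_entropy(y, unsure))
```

Predictions that sit closer to the actual labels give a lower error, which is why minimizing this quantity pushes p toward 1 when y is 1 and toward 0 when y is 0.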

All the preceding algorithms also work for a classification exercise; the difference is that the objective function minimizes cross-entropy error instead of squared error.

In the case of a decision tree, the variable that belongs to the root node is the variable that provides the highest information gain when ...