As discussed earlier, the smaller the batch size, the more often the weights of a neural network are updated within each epoch. As a result, fewer epochs are needed to reach a given accuracy. At the same time, if the batch size is too small, each update is computed from only a handful of samples, so the gradient estimates become noisy and training can become unstable.
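To make the link between batch size and update frequency concrete, here is a minimal sketch in plain Python. It is not the network built earlier: it assumes a toy, noise-free linear regression problem (`y = 3x + 1`), and the helper names `make_data`, `mse`, and `train`, the learning rate, and the epoch count are all hypothetical choices made for illustration only.

```python
import random


def make_data(n=256):
    # Toy assumption: noise-free targets y = 3x + 1 on evenly spaced x in [0, 1).
    xs = [i / n for i in range(n)]
    ys = [3.0 * x + 1.0 for x in xs]
    return xs, ys


def mse(w, b, xs, ys):
    # Mean squared error of the line w * x + b over the full dataset.
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train(xs, ys, batch_size, epochs=5, lr=0.1, seed=0):
    # Mini-batch gradient descent; returns (number of weight updates, final MSE).
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    updates = 0
    idx = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(idx)  # reshuffle the sample order every epoch
        for start in range(0, len(idx), batch_size):
            batch = idx[start:start + batch_size]
            # Gradient of the batch-averaged squared error w.r.t. w and b.
            grad_w = sum(2 * (w * xs[i] + b - ys[i]) * xs[i] for i in batch) / len(batch)
            grad_b = sum(2 * (w * xs[i] + b - ys[i]) for i in batch) / len(batch)
            w -= lr * grad_w
            b -= lr * grad_b
            updates += 1  # one weight update per batch
    return updates, mse(w, b, xs, ys)


xs, ys = make_data()
for batch_size in (8, 256):
    updates, loss = train(xs, ys, batch_size)
    print(f"batch_size={batch_size:>3}  updates={updates:>3}  final MSE={loss:.4f}")
```

With 256 samples and 5 epochs, a batch size of 8 yields 32 updates per epoch (160 in total), whereas a batch size of 256 yields a single update per epoch (5 in total). For the same number of epochs, the small-batch run therefore ends much closer to the true line. This sketch only illustrates the update-frequency effect; it does not reproduce the instability that very small batches can cause on a real network.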

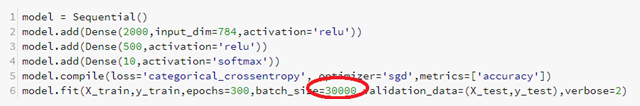

Let's compare the previously built network trained with a smaller batch size in one scenario and a larger batch size in the next.

Note that in the preceding scenario, where the batch size is very large, the accuracy on the test dataset at the end of 300 epochs is only 89.91%.

The reason for this is that ...