Primary Considerations and Risk Management

We can break this use case into a number of primary considerations, which we discuss further in the subsequent sections:

-

Consumption of source data

-

Guarantees around data delivery

-

Data governance

-

Latency and confirmations of delivery

-

Access patterns against the destination data

Let’s look at these considerations and how attributes of each will affect our priorities.

Source data consumption

When we talk about data sources, we’re basically talking about the things that create the data that’s required for building your solutions. Sources could be anything from phones, sensors, applications, machine logs, operational and transactional databases, and so on. The source itself is mostly out of scope of your pipeline and staging use cases. In fact, you can evaluate the success of your scoping by how much of your time you spend working with the source team. The more time your data engineering team spends on source integration can often be inversely correlated to how well designed the source integration is.

There are standard approaches we can use for source data collection:

- Embedded code

-

This is when you provide code embedded within the source system that knows how to send required data into your data pipeline.

- Agents

-

This is an independent system that is close to the source and in many cases is on the same device. This is different from the embedded code example because the agents run as separate processes with no dependency concerns.

- Interfaces

-

This is the lightest of the options. An example would be a Representational State Transfer (REST) or WebSocket endpoint that receives data sent by the sources.

It should be noted that there are other commonly used options to perform data collection; for example:

-

Third-party data integration tools, either open source or commercial

-

Batch data ingest tools such as Apache Sqoop or tools provided with specific projects; for example, the Hadoop Distributed File System (HDFS)

putcommand

Depending on your use case, these options can be useful in building your pipelines and worth considering. Because they’re already covered in other references or vendor and project documentation, we don’t cover these options further in this section.

Which approach is best is often determined by the sources of data, but in some cases multiple approaches might be suitable. The more important part can be ensuring a correct implementation, so let’s discuss some considerations around these different collection types, starting with the embedded code option.

Embedded code

Consider the following guidelines when implementing embedded code as part of source data collection:

- Limit implementation languages

-

Don’t try to support multiple programming languages; instead, implement with a single language and then use bindings for other languages. For example, consider using C, C++, or Java and then create bindings for other languages that you need to support. As an example of this, consider Kafka, which includes a Java producer and consumer as part of the core project, whereas other libraries or clients for other languages require binding to libraries that are included as part of the Kafka distribution.

- Limit dependencies

-

A problem with any embedded piece of code is potential library conflicts. Making efforts to limit dependencies can help mitigate this issue.

- Provide visibility

-

With any embedded code, there can be concerns with what is under the hood. Providing access to code—for example, by open sourcing the code or at least providing the code via a public repository—provides an easy and safe way to relieve these fears. The user can then get full view of the code to alleviate potential concerns involving things like memory usage, network usage, and so on.

- Operationalizing code

-

Another consideration is possible production issues with embedded code. Make sure you’ve taken into account things like memory leaks or performance issues and have defined a support model. Logging and instrumentation of code can help to ensure that you have the ability to debug issues when they arise.

- Version management

-

When code is embedded, you likely won’t be able to control the scheduling of things like updates. Ensuring things like backward compatibility and well-defined versions is key.

Agents

The following are things to keep in mind when using agents in your architecture:

- Deployment

-

As with other components in your architecture, make sure deployment of agents is tested and repeatable. This might mean using some type of automation tool or containers.

- Resource usage

-

Ensure that the source systems have sufficient resources to reliably support the agent processes, including memory, CPU, and so on.

- Isolation

-

Even through agents run externally from the processing applications, you’ll still want to protect against problems with the agent that can negatively affect data collection.

- Debugging

-

Again, here we want to take steps to ensure that we can debug and recover from inevitable production issues. This might mean logging, instrumentation, and so forth.

Interfaces

Following are some guidelines for using interfaces:

- Versioning

-

Versioning again is an issue here, although less painful than the embedded solution. Just make sure your interface has versioning as a core concept from day one.

- Performance

-

With any source collection framework, performance and throughput are critical. Additionally, even if you design and implement code to ensure performance, you might find that source or sink implementations are suboptimal. Because you might not control this code, having a way to detect and notify when performance issues surface will be important.

- Security

-

While in the agent and embedded models you control the code that is talking to you, in the interface model you have only the interface as a barrier to entry. The key is to keep the interface simple while still injecting security. There are a number of models for this such as using security tokens.

Risk management for data consumption

The risks that you need to worry about when building out a data source collection system include everything you would normally worry about for an externally facing API as well as concerns related to scale. Let’s look at some of the major concerns that you need to be looking for.

Version management

Everyone loves a good API that just works. The problem is that we rarely can design interfaces with such foresight that they won’t require incompatible changes at some point. You will want to have a robust versioning strategy and a plan for providing backward compatibility guarantees to protect against this as well as ensuring that this plan is part of your communication strategy.

Impacts from source failures

There are a number of possible failure scenarios at the source layer for which you need to plan. For example, if you have embedded code that’s part of the source execution process, a failure in your code can lead to an overall failure in data collection. Additionally, even if you don’t have embedded code, if there’s a failure in your collection mechanism such as an agent, how is the source affected? Is there data loss? Can this affect expected uptime for the application?

The answer to this is to have options and know your sources and communicate those different options with clear understanding that failure and downtime will happen. This will allow adding any required safeguards to protect against these possible failure scenarios.

Note that failure in the data pipeline should be a rare occurrence for a well-designed and implemented pipeline, but it will inevitably happen. Because failure is inevitable, our pipelines need to have mechanisms in place to alert us when undesired things take place. Examples would be monitoring of things like throughput and alerting when metrics are seen to deviate from specific thresholds. The idea is to build the most resilient pipelines for the use case but have insight into when things go wrong.

Additionally, consider having replicated pipelines. In failure cases, if one pipeline goes down, another can take over. This is more than node failure protection; having a separate pipeline protects you from difficult-to-predict failures like badly configured deployments or a bad build getting pushed. Ideally, we should design our pipelines in a way that we can deploy them as simply as you would deploy a web application.

Protection from sources that behave poorly

When you build a data ingestion system, it’s possible that sources might misuse your APIs, send too much data, and so on. Any of these actions could have negative effects on your system. As you design and implement your system, make sure to put in place mechanisms to protect against these risks. These might include considerations like the following:

- Throttling

-

This will limit the number of records a source can send you. As the source sends you more records, your system can increase the time to accept that data. Additionally, you might even need to send a message indicating that the source is making too many connections.

- Dropping

-

If your system doesn’t provide guarantees, you could simply drop messages if you become overloaded or have trouble processing input data. However, opening the door to this can introduce a belief that your system is lossy and can lower the overall trust in your system. Under most circumstances, though, being lossy might be fine as long as it is communicated to the source that loss is happening in real time so that clients can take appropriate action. In short, whenever pursuing the approach of dropping data, make sure your clients have full knowledge of when and why.

Data delivery guarantees

When planning a data pipeline, there are a number of promises that you will need to give to the owners of the data you’re collecting. With any data collection system, you can offer different levels of guarantees:

- Best effort

-

If a message is sent to you, you try to deliver it, but data loss is possible. This is suitable when you’re not concerned with capturing every event. An example might be if you’re performing processing on incoming data to capture aggregate metrics for which total precision isn’t required.

- At least once

-

If a message is sent to you, you might duplicate it, but you won’t lose it. This will probably be the most common use case. Although it adds some complexity over best effort, in most cases you’ll probably want to ensure that all events are captured in your pipeline. Note that this might also require adding logic in your pipeline to deduplicate data, but in most cases this is probably easier and less expensive to implement than the exactly-once option described next.

- Exactly once

-

If a message is received by the pipeline, you guarantee that it’s processed and will never be duplicated. As we noted, this is the most expensive and technically complex option. Although many systems now promise to provide this, you should carefully consider whether this is necessary or whether you can put other mechanisms in place to account for potential duplicate records.

Again, for most use cases at least once is likely suitable given that it’s often less expensive to perform deduplication after ingesting data. Regardless of the level of guarantee, you should plan for, document, and communicate this to users of the system.

Data management and governance

A robust data collection system today must have two critical features:

- Data model management

- Data regulation

-

This is the ability to know everything that is being collected and the risks that might exist if that data is misused or exposed.

Note

We talk more about this when we cover metadata management in Chapter 6, but for now let’s look at them in terms of scope and goals.

Data model management

You need to have mechanisms in place to capture your system’s data models, and, ideally, this should mean that groups using your data pipeline don’t need to engage your team to make a new data feed or change an existing data feed. An example of a system that provides an approach for this is the Confluent Schema Registry for Kafka, which allows for the storage of schemas, including multiple versions of schemas. This provides support for backward compatibility for different versions of applications that access data in the system.

Declaring a schema is only part of the problem. You might also need the following mechanisms:

- Registration

-

The definition of a new data feed along with its schema.

- Routing

-

Which data feeds should go to which topics, processing systems, and possible storage systems.

- Sampling

-

An extension to routing, with the added feature of removing part of the data. This is ideal for a staging environment and for testing.

- Access controls

-

Who will be able to see the data, both in final persisted state and off the stream.

- Metadata captured

-

The ability to attach metadata to fields.

Additional features that you will find in more advanced systems include the following:

- Transformational logic

-

The ability to transform the data in custom ways before it lands in the staging areas.

- Aggregation and sessionization

-

Transformational logic that understands how to perform operations on data windows.

Regulatory concerns

As more data is collected, stored, and analyzed, concern for things like data protection and privacy have grown. This, of course, means that you need to have plans in place to respond to regulations as well as protecting against external hacks, internal misuse, and so on. Part of this is making sure that you have a clear understanding and catalog of all the data you collect; we return to this topic in Chapter 6.

Latency and delivery confirmation

Unlike the requirements of a real-time system, a data pipeline normally gets a lot of leeway when it comes to latency and confirmations of delivery. However, this is a very important area for you to scope and define expectations. Let’s define these two terms and what you’ll need to establish with respect to each.

Latency

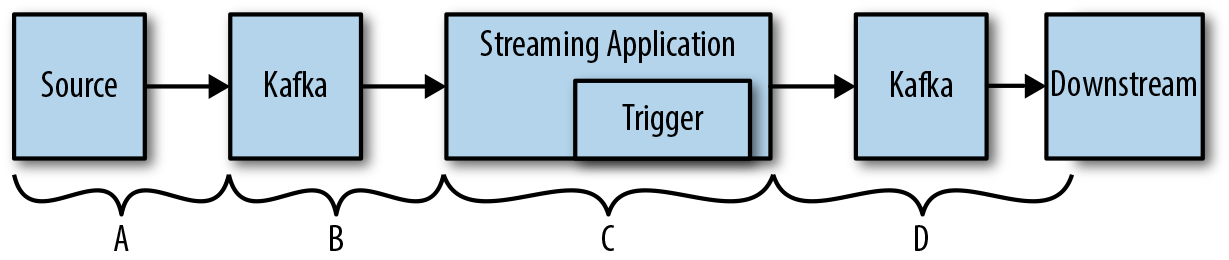

This is the time it takes from when a source publishes information until that information is accessible by a given processing layer or a given staging system. To illustrate this, we use the example of a stream-processing application. For this example, assume that we have data coming in through Kafka that is being consumed by a Flink or Spark Streaming application. That application might then be sending alerts based on the outcome of processing to downstream systems. In this case, we can quantify latency into multiple buckets, as illustrated in Figure 1-1.

Figure 1-1. Quantifying system latency

Let’s take a closer look at each bucket:

-

A: Time to get from the source to Kafka. This can be a direct shot, in which case we are talking about the time to buffer and send over the network. However, there might be a fan-in architecture that includes load balancers, microservices, or other hops that can result in increased latency.

-

B: Time to get through Kafka will depend on a number of things such as the Kafka configuration and consumer configuration.

-

C: Time between when the processing engine receives the event to when it triggers on the event. With some engines like Spark Streaming, triggering happens on a time interval, whereas others like Flink can have lower latency. This will also be affected by configuration as well as use cases.

-

D: Again, we have the time to get into Kafka and be read from Kafka. Latency here will be highly dependent on buffering and polling configurations in the producer and consumer.

Delivery confirmation

With respect to a data pipeline, delivery confirmations let the source know when the data has arrived at different stages in your pipeline and could even let the source know if the data has reached the staging area. Here are some guidelines on designing this into your system:

- Do you really need confirmation?

-

The advantage of confirmation is that it allows the source to resend data in the event of a failure; for example, a network issue or hardware failure. Because failure is inevitable, providing confirmation will likely be suitable for most use cases. However, if this is not a requirement, you can reduce the time and complexity to implement your pipeline, so make sure to confirm whether you really need confirmation.

- How to deliver the confirmation?

-

If you do need to provide confirmations, you need to add this to your design. This will likely include selecting software solutions that provide confirmation functionality and building logic into any custom code you implement as part of your pipeline. Because there’s some complexity involved in providing delivery confirmation, as much as possible try to use existing solutions that can provide this.

Risk management for data delivery

Data delivery promises can be risky. For one, you want to be able to offer a system that gives users everything they want, but in the real world, things can and will go awry, and at some point, your guarantees might fail.

There are two suggested ways to deal with this risk. The first is to have a clean architecture that’s shared with stakeholders in order to solicit input that might help to ensure stability. Additionally, having adequate metrics and logging is important to validate that the implementation has met the requirements.

Additionally, you will want a mechanism that will notify users and the source systems when guarantees are being missed. This will provide time to adjust or switch to a backup system seamlessly.

Access patterns

The last focus of the data pipeline use case is access patterns for the data. What’s important to call out here are the types of access and requirements that you should plan for when defining your data pipeline.

We can break this into two groupings: access to data, and retention of data. Let’s look at data access and the types of jobs that will most likely come up as priorities. This includes the following:

-

Batch jobs that do large scans and aggregations

-

Streaming jobs that do large scans

-

Point data requests; for example, random access

-

Search queries

Batch jobs with large scans

Batch jobs that scan large blocks of data are core workloads of data research and analytics. Let’s run through four typical workload types that fit into this categorization to help with defining this category. To gain a solid grasp on the concept, let’s discuss some real-world use cases with respect to a supermarket chain:

- Analytical SQL

-

This might entail using SQL to do rollups of which items are selling by zip code and date. It would be very common that reports like this would run daily and be visible to leadership within the company.

- Sessionization

-

Using SQL or tools like Apache Spark, we might want to sessionize the buying habits of our customers; for example, to better predict their individual shopping needs and patterns and be alerted to churn risks.

- Model training

-

A possible use case for machine learning in our supermarket example is a recommendation solution that would look at the shopping habits of our customers and provide suggestions for items based on customers’ known preferences.

- Scenario predictions evaluation

-

In the supermarket use case, we need to define a strategy for deciding how to order the items to fill shelves in our stores. We can use historical data to know whether our purchasing model is effective.

The important point here is that we want the data for long periods of time, and we want to be able to bring a large amount of processing power to our analytics in order to produce actionable outcomes.

Streaming jobs with large scans

There are two main differences between batch and streaming workloads: the time between execution of the job, and the idea of progressive workloads. With respect to time, streaming jobs are normally thought to be in the range of millisecond to minutes, whereas batch workloads are generally minutes to hours or even days. The progressive workload is maybe the bigger difference because it is a difference in the output of the job. Let’s look at our four types of jobs and see how progressiveness affects them:

- Analytical SQL

-

With streaming jobs, our rollups and reports will update at smaller intervals like seconds or minutes, allowing for visibility into near-real-time changes for faster reaction time. Additionally, ideally the processing expense will not be that much bigger than batch because we are working on only the newly added data and not reprocessing past data.

- Sessionization

-

As with the rollups, sessionization in streaming jobs also happens in smaller intervals, allowing more real-time actionable results. Consider that in our supermarket example we use sessionization to analyze the items our customers put into their cart and, by doing so, predict what they plan to make for dinner. With that knowledge, we might also be able to suggest items for dessert that are new to the customer.

- Model training

-

Not all models lend themselves to real-time training. However, the trained models might be ideal for real-time execution to make real-time actionable calls in our business.

- Scenario predictions evaluation

-

Additionally, now that we are making real-time calls with technology, we need a real-time way to evaluate those decisions to power humans to know whether corrections need to be made. As we move to more automation in terms of decision making, we need to match that with real-time evaluation.

Where the batch processing needs massive storage and massive compute, the streaming component really only needs enough compute, storage, and memory to hold windows in context.

Note

We talk more about stream processing in Chapter 8.

Point requests

Until now, we have talked about access patterns that are looking at all of the data either in given tables or partitions, or through a stream. The idea with point requests is that we want to fetch a specific record or data point very fast and with high concurrency.

Think about the following use cases for our supermarket example:

-

Look up the current items in stock for a given store

-

Look up the current location of items being shipped to your store

-

Look up the complete supply chain for items in your store

-

Look up events over time and be able to scan forward and backward in time to investigate how those events affect things like sales

When we explore storage in Chapter 5, we’ll go through indexable storage solutions that will be optimal for these use cases. For now, just be aware that this category is about items or events in time related to an entity and being able to fetch that information in real time at scale.

Searchable access

The last access pattern that is very common is searchable access to data. In this use case, access patterns need to be fast while also allowing for flexibility in queries. Thinking about our supermarket use case, you might want to quickly learn what types of vegetables are in stock, or maybe look at all shipments from a specific farm because there was a contamination.

Note

We talk more about solutions to target these use cases when we examine storage in Chapter 5.

Risk management for access patterns

In previous sections, the focus was on data sources and creating data pipelines to make the data available in a system. In this section, we talk about making data available to people who want to use it, which means a completely different group of stakeholders. Another consideration is that access pattern requirements might change more rapidly simply because of the larger user base trying to extract value from the data. This is why we’ve organized our discussion of access patterns into four key offerings. If you can find a small set of core access patterns and make them work at scale, you can extend them for many use cases.

With that being said, doing the following will help ensure that you manage risks associated with access patterns:

-

Help ensure that users are using the right access patterns for their use case.

-

Make sure your storage systems are available and resilient.

-

Verify that your offering is meeting your users’ needs. If they are copying your data into another system to do work, that could be a sign that your offering is not good enough.