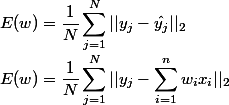

The Stochastic Gradient Descent (SGD) algorithm finds the optimal weights {wi} of the model by minimizing the error between the true and the predicted values over the N training samples:

Here ŷi are the real values to be predicted, against which the model's predicted values are compared; we have N samples, and each sample has n dimensions.
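The minimization can be illustrated with a small SGD loop. This is a minimal sketch, not the article's implementation. It assumes a linear model f(x) = b + Σj wj·xj with an added bias term b, and a mean squared error E = (1/N) Σi (ŷi − f(xi))², where ŷi is the real value as above. The article does not show its exact error function here, so the squared loss, the learning rate `lr`, the number of `epochs`, and the helper names `predict`, `squared_error` and `sgd` are all illustrative assumptions. Each update uses the gradient of the error on a single randomly chosen sample rather than on all N samples, which is what makes the descent "stochastic".

```python
import random

def predict(w, b, x):
    # Assumed linear model: f(x) = b + sum_j w_j * x_j
    return b + sum(wj * xj for wj, xj in zip(w, x))

def squared_error(w, b, X, y_true):
    # Mean of (y_true_i - f(x_i))^2 over the N samples
    return sum((yt - predict(w, b, x)) ** 2 for x, yt in zip(X, y_true)) / len(X)

def sgd(X, y_true, lr=0.01, epochs=200, seed=0):
    # Hypothetical helper: plain SGD on the per-sample squared error
    rng = random.Random(seed)
    n_features = len(X[0])
    w = [0.0] * n_features
    b = 0.0
    indices = list(range(len(X)))
    for _ in range(epochs):
        rng.shuffle(indices)  # visit the samples in a new random order each epoch
        for i in indices:
            x, yt = X[i], y_true[i]
            # d/dw_j of (f(x) - y)^2 is 2 * (f(x) - y) * x_j; d/db is 2 * (f(x) - y)
            residual = predict(w, b, x) - yt
            for j in range(n_features):
                w[j] -= lr * 2 * residual * x[j]
            b -= lr * 2 * residual
    return w, b

# Toy, noise-free data generated from y = 3*x1 - 2*x2 + 1 (n = 2 dimensions, N = 100 samples)
data_rng = random.Random(42)
X = [[data_rng.uniform(-1, 1), data_rng.uniform(-1, 1)] for _ in range(100)]
y = [3 * x1 - 2 * x2 + 1 for x1, x2 in X]

w, b = sgd(X, y)
print([round(v, 3) for v in w], round(b, 3), squared_error(w, b, X, y))
```

Because the toy data has no noise, the learned weights should approach w ≈ [3, −2] and b ≈ 1; with real, noisy data SGD only settles near the minimum, and the learning rate usually has to be tuned or decayed.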

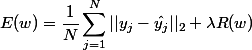

Regularization consists of adding a term to the previous equation and minimizing the resulting regularized error: