Memory is used to hold data and software for the processor. There is a variety of memory types, and often a mix is used within a single system. Some memory will retain its contents while there is no power, yet will be slow to access. Other memory devices will be high capacity, yet will require additional support circuitry and will be slower to access. Still other memory devices will trade capacity for speed, giving relatively small devices, yet are capable of keeping up with the fastest of processors.

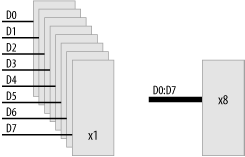

Memory can be organized in two ways, either word-organized or bit-organized. In the word-organized scheme, complete nybbles, bytes, or words are stored within a single component, whereas with bit-organized memory, each bit of a byte or word is allocated to a separate component (Figure 1-10).
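The difference between the two schemes can be sketched with a small software model. This is only an illustration, not a description of any real device: the class names `WordOrganizedMemory` and `BitOrganizedMemory` are made up here, and the model assumes an 8-bit word with bit 0 held by the first component.

```python
class WordOrganizedMemory:
    """Hypothetical model: one component stores a whole byte per address."""

    def __init__(self, depth):
        self.cells = [0] * depth

    def write(self, addr, value):
        self.cells[addr] = value & 0xFF

    def read(self, addr):
        return self.cells[addr]


class BitOrganizedMemory:
    """Hypothetical model: component i stores bit i of every byte."""

    def __init__(self, depth, width=8):
        # One list per x1 component; each holds a single bit per address.
        self.chips = [[0] * depth for _ in range(width)]

    def write(self, addr, value):
        # The same address goes to every component; each keeps one bit.
        for bit, chip in enumerate(self.chips):
            chip[addr] = (value >> bit) & 1

    def read(self, addr):
        # Reassemble the byte from the bit supplied by each component.
        value = 0
        for bit, chip in enumerate(self.chips):
            value |= chip[addr] << bit
        return value
```

Both models return the same byte for the same address; what differs is where the individual bits physically live.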

Memory chips come in different sizes, with the width specified as part of the size description. For instance, a DRAM (dynamic RAM) chip might be described as being 4M x 1 (bit-organized), whereas a SRAM (static RAM) may be 512k x 8 (word-organized). In both cases, each chip has exactly the same storage capacity, but they are organized in different ways. In the DRAM case, it would take eight chips to complete a memory block for an 8-bit data bus, whereas the SRAM requires only one chip.

However, because the DRAMs are organized in parallel, they are accessed simultaneously. The final size of the DRAM block is (4M x 1) x 8 devices, which is 32 Mbits (4 MB). It is common practice for multiple DRAMs to be placed on a memory module, which is how DRAMs are usually installed in standard computers.
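The arithmetic behind these size descriptions can be checked with a few lines of code. The sketch below is illustrative only: the helper names (`parse_size`, `capacity_bits`, `chips_for_bus`) are invented for this example, and it assumes the usual memory-chip convention that k means 1,024 and M means 1,048,576 locations.

```python
def parse_size(text):
    """Parse a description such as '4M x 1' into (locations, width_in_bits)."""
    depth_text, width_text = [part.strip() for part in text.lower().split("x")]
    multipliers = {"k": 1024, "m": 1024 * 1024}  # assumed binary multipliers
    suffix = depth_text[-1]
    if suffix in multipliers:
        depth = int(depth_text[:-1]) * multipliers[suffix]
    else:
        depth = int(depth_text)
    return depth, int(width_text)


def capacity_bits(text):
    """Total storage of one chip, in bits."""
    depth, width = parse_size(text)
    return depth * width


def chips_for_bus(text, bus_width):
    """Number of chips needed side by side to fill a data bus."""
    _, width = parse_size(text)
    return -(-bus_width // width)  # ceiling division


dram, sram = "4M x 1", "512k x 8"
dram_chips = chips_for_bus(dram, 8)              # 8 chips for an 8-bit bus
sram_chips = chips_for_bus(sram, 8)              # 1 chip for an 8-bit bus
block_bits = dram_chips * capacity_bits(dram)    # 32 Mbits in total
block_bytes = block_bits // 8                    # 4 MB in total
```

Under these assumptions, both chips hold 4 Mbits each, and the eight-chip DRAM block comes to 32 Mbits, or 4 MB.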

The common widths for memory chips are x1, x4, and x8, although x16 devices are available.

RAM stands for Random Access Memory. This is a bit of a misnomer, since most (all) computer memory may be considered “random access.” RAM is the “working memory” in the computer system. It is where the processor may easily write data for temporary storage. RAM is generally volatile, losing its contents when the system loses power. Any information stored in RAM that must be retained must be written to some form of permanent storage before the system powers down. There are special nonvolatile RAMs that integrate a battery-backup system, so that the RAM remains powered even when its computer system has shut down.

RAMs generally fall into two categories: static RAM (also known as SRAM) and dynamic RAM (also known as DRAM).

Static RAMs use pairs of logic gates to hold each bit of data. Static RAMs are the fastest form of RAM available, require little external support circuitry, and have relatively low power consumption. Their drawbacks are that their capacity is considerably less than that of dynamic RAM and that they are much more expensive. Their relatively low capacity means more chips are needed to implement the same size memory. A modern PC built using nothing but static RAM would be a considerably bigger machine and would cost a small fortune to produce. (It would be very fast, however.)

Dynamic RAM uses arrays of what are essentially capacitors to hold individual bits of data. The capacitor arrays will hold their charge only for a short period of time before it begins to diminish. Therefore, dynamic RAMs need continuous refreshing, every few milliseconds or so. This perpetual need for refreshing requires additional support and also can delay processor access to the memory. If a processor access conflicts with the need to refresh the array, the refresh cycle must take precedence.

Dynamic RAMs are the highest capacity memory devices available and come in a wide and diverse variety of subspecies. Interfacing DRAMs to small microcontrollers is generally not possible, and certainly not practical. Most processors with large address spaces include support for DRAMs. Connecting DRAMs to such processors is simply a case of connecting the dots (or pins, as the case may be). For processors that do not include DRAM support, special DRAM controller chips are available that make interfacing the DRAMs very simple indeed.

Many processors have instruction and/or data caches, which store recent memory accesses. These caches are often internal to the processors and are implemented with fast memory cells and high-speed data paths. Instruction execution normally runs out of the instruction cache, providing for fast execution. The processor is capable of rapidly reloading the caches from main memory should a cache miss occur. Some processors have logic that is able to anticipate a cache miss and begin the cache reload prior to the cache miss occurring. Caches are implemented using fast SRAM and are most often used to compensate for the slowness of the main DRAM array in large systems.
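The idea of keeping recent accesses close at hand, and reloading from main memory on a miss, can be sketched in software. The model below is a deliberately simplified assumption, not how any particular processor works: it uses a direct-mapped organization with one word per cache line, and the class name `DirectMappedCache` is invented for this example.

```python
class DirectMappedCache:
    """Hypothetical read-only cache model: one word per line, direct-mapped."""

    def __init__(self, main_memory, num_lines=4):
        self.memory = main_memory          # stands in for the slow DRAM array
        self.num_lines = num_lines
        self.tags = [None] * num_lines     # which address each line holds
        self.data = [0] * num_lines        # the cached copy of the word
        self.hits = 0
        self.misses = 0

    def read(self, addr):
        index = addr % self.num_lines      # the line this address maps to
        tag = addr // self.num_lines       # identifies the address in that line
        if self.tags[index] == tag:
            self.hits += 1                 # served from the fast cache
        else:
            self.misses += 1               # reload the line from main memory
            self.tags[index] = tag
            self.data[index] = self.memory[addr]
        return self.data[index]
```

A loop that repeatedly reads the same four addresses misses only on the first pass; every later pass is served from the cache, which is the behavior that lets a processor run tight code at full speed despite slow main memory.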

ROM stands for Read-Only Memory. This is also a bit of a misnomer, since many (modern) ROMs can also be written to. ROMs are nonvolatile memory, requiring no current to retain their contents. They are generally slower than RAM and considerably slower than static RAM.

The primary purpose of ROM within a system is to hold the code (and sometimes data) that needs to be present at power-up. Such software is generally known as firmware. It contains code to initialize the computer by placing I/O devices into a known state. It may also contain a bootloader program to load an operating system off disk or network or, in the case of an embedded system, the application itself.

Many microcontrollers contain on-chip ROM, thereby reducing component count and simplifying system design.

Standard ROM is fabricated (in a simplistic sense) from a large array of diodes. The unwritten state for a ROM is all 1s, each byte location reading as 0xFF. The process of loading software into a ROM is known as burning the ROM. This term comes from the fact that the programming process is performed by passing a sufficiently large current through the appropriate diodes to “blow them” or burn them, thereby creating a zero at that bit location. A device known as a ROM burner can accomplish this, or if the system supports it, the ROM may be programmed in-circuit. This is known as In-System Programming (ISP), or sometimes, In-Circuit Programming (ICP).
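Because burning can only create zeros, programming behaves like a bitwise AND with whatever the location already holds. The following sketch models that behavior; the class name `DiodeRom` and its methods are invented for illustration and do not correspond to any real programmer's interface.

```python
class DiodeRom:
    """Hypothetical model of a ROM whose bits can only be burned from 1 to 0."""

    UNWRITTEN = 0xFF  # every byte reads as 0xFF before programming

    def __init__(self, size):
        self.cells = [self.UNWRITTEN] * size

    def burn(self, addr, value):
        # A blown diode cannot be restored, so a burn can clear bits
        # but never set them: the result is old AND new.
        self.cells[addr] &= value & 0xFF

    def read(self, addr):
        return self.cells[addr]


rom = DiodeRom(4)
rom.burn(0, 0xA5)   # location 0 now reads 0xA5
rom.burn(0, 0x0F)   # a second burn can only clear more bits: 0xA5 & 0x0F
```

After the second burn, location 0 reads 0x05 rather than 0x0F, which is why a device programmed this way cannot simply be overwritten with new software.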

One-Time Programmable (OTP) ROMs, as the name implies, can be burned only once. Computer manufacturers typically use them in systems in which the firmware is stable and the product is shipping in bulk to customers.

Mask-programmable ROMs are also one-time programmable, but unlike OTPs, they are burned by the chip manufacturer prior to shipping. Like OTPs, they are used once the software is known to be stable and have the advantage of lowering production costs for large shipments.

OTP ROMs are great for shipping in final products, but they are wasteful for debugging, since, with each iteration of software, a new chip must be burned and the old one thrown away. As such, OTPs make for a very expensive development option.

A better choice for system development and debugging is the Erasable Programmable Read-Only Memory, or EPROM. Shining ultraviolet light through a small window on the top of the chip erases the EPROM, allowing it to be reprogrammed and reused. EPROMs are pin- and signal-compatible with comparable OTP and mask devices. Thus, an EPROM can be used during development, while OTPs can be used in production, with no change to the rest of the system.

EPROMs and their equivalent OTP cousins range in capacity from a few kilobytes (exceedingly rare these days) to a megabyte or more.

The drawback with EPROM technology is that the chip must be removed from the circuit to be erased, and the erasure can take many minutes to complete. Then the chip is placed in the burner, loaded with software, and placed back in circuit. This can lead to very slow debugging cycles. Further, it makes the device useless for storing changeable system parameters.

EEROM is Electrically Erasable Read-Only Memory and is also known as EEPROM (Electrically Erasable Programmable Read-Only Memory). Very rarely it is also called Electrically Alterable Read-Only Memory (EAROM). EEROM can be pronounced as either “e-e ROM” or “e-squared ROM” or sometimes just “e-squared” for short.

EEROMs can be erased and reprogrammed in-circuit. Their capacity is significantly smaller than standard ROM (typically only a few kilobytes), and so they are not suited to holding firmware. They are typically used instead for holding system parameters and mode information, to be retained during power-off.

It is common for many microcontrollers to incorporate a small EEROM on-chip for holding system parameters. This is especially useful in embedded systems and may be used for storing network addresses, configuration settings, serial numbers, servicing records, and so on.
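As a rough illustration of how little space such parameters need, the sketch below packs a small record into bytes that could be written to an EEROM. Everything about the layout is an assumption made for this example: the field choice (a 6-byte network address, a 32-bit serial number, and a 1-byte mode flag), the little-endian byte order, and the helper names `pack_params` and `unpack_params`.

```python
import struct

# Hypothetical layout: 6-byte network address, 32-bit serial number,
# 1-byte mode flag, little-endian with no padding between fields.
PARAM_FORMAT = "<6sIB"
PARAM_SIZE = struct.calcsize(PARAM_FORMAT)  # 6 + 4 + 1 = 11 bytes


def pack_params(network_address, serial_number, mode):
    """Build the byte image that would be stored in the EEROM."""
    return struct.pack(PARAM_FORMAT, network_address, serial_number, mode)


def unpack_params(blob):
    """Recover (network_address, serial_number, mode) from the byte image."""
    return struct.unpack(PARAM_FORMAT, blob)


image = pack_params(bytes([0x00, 0x01, 0x02, 0x03, 0x04, 0x05]), 12345, 1)
```

The whole record occupies 11 bytes, so even an on-chip EEROM of a few hundred bytes has room for many such settings.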

Flash is the newest ROM technology and is rapidly becoming dominant. Flash memory has the reprogrammability of EEROM and the large capacity of standard ROMs. Flash chips are sometimes referred to as “flash ROMs” or “flash RAMs.” Since they are not like standard ROMs nor standard RAMs, I prefer to just call them “flash” and save on the confusion.

Flash is normally organized as sectors and has the advantage that individual sectors may be erased and rewritten without affecting the contents of the rest of the device. Typically, before a sector can be written, it must be erased. It can’t just be written over as with a RAM.
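The erase-before-write rule can be sketched with a simple model. This is a simplification under stated assumptions rather than the behavior of any specific part: it assumes an erased location reads as 0xFF, it rejects writes to locations that have not been erased, and the class name `Flash` and its methods are invented for this example.

```python
class Flash:
    """Hypothetical sector-organized flash model with erase-before-write."""

    ERASED = 0xFF  # assumed erased state for every byte

    def __init__(self, num_sectors, sector_size):
        self.sector_size = sector_size
        self.cells = [self.ERASED] * (num_sectors * sector_size)

    def erase_sector(self, sector):
        # Erasing touches only the addresses inside the chosen sector.
        start = sector * self.sector_size
        for addr in range(start, start + self.sector_size):
            self.cells[addr] = self.ERASED

    def program(self, addr, value):
        # Unlike RAM, a location cannot simply be written over.
        if self.cells[addr] != self.ERASED:
            raise ValueError("location must be erased before it is written")
        self.cells[addr] = value & 0xFF

    def read(self, addr):
        return self.cells[addr]
```

In this model, updating a single byte means erasing its whole sector and reprogramming it, while data in every other sector is left untouched.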

There are several different flash technologies, and the erasing and programming requirements of flash devices vary from manufacturer to manufacturer.
