We need our classifier to predict the probability of seizure, which is class 1. This means that our output will be constrained to [0,1], as it would be in a traditional logistic regression model. Our cost function, in this case, will be binary cross-entropy, which is also known as log loss. If you've worked with classifiers before, this math is likely familiar to you; however, as a refresher, I'll include it here.

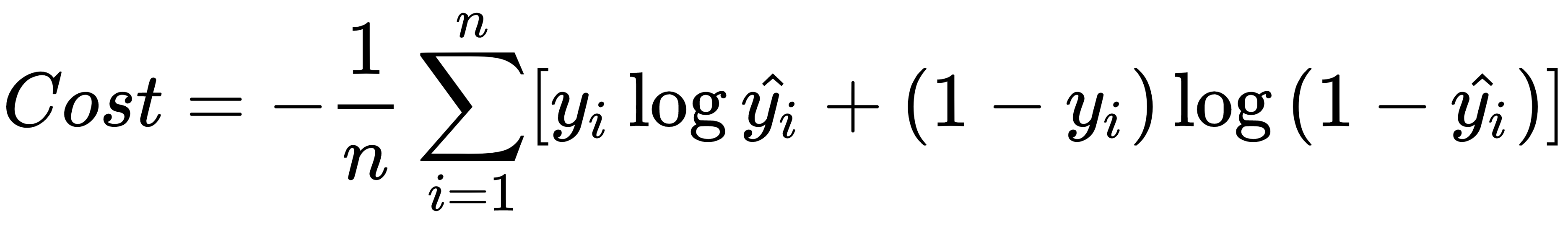

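The complete formula for log loss looks like this:

$$\text{cost} = -\frac{1}{m}\sum_{i=1}^{m}\left[y_i \log(\hat{y}_i) + (1 - y_i)\log(1 - \hat{y}_i)\right]$$

Here $m$ is the number of observations, $y_i$ is the true label (0 or 1), and $\hat{y}_i$ is the predicted probability of class 1.

This can probably be seen more simply as a set of two functions, one for the case $y_i = 1$ and one for $y_i = 0$:

When $y_i = 1$:

$$\text{cost}_i = -\log(\hat{y}_i)$$

When $y_i = 0$:

$$\text{cost}_i = -\log(1 - \hat{y}_i)$$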
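To make the two cases concrete, here is a minimal sketch of log loss in plain Python. The function name `binary_cross_entropy`, the clipping constant `eps`, and the sample labels and predictions are all assumptions made up for illustration; they are not taken from the seizure dataset or from any particular library.

```python
import math

def binary_cross_entropy(y_true, y_pred, eps=1e-12):
    """Mean log loss over paired labels (0 or 1) and predicted probabilities."""
    total = 0.0
    for y, p in zip(y_true, y_pred):
        # Clip the prediction so log(0) is never evaluated
        # (eps is an illustrative choice, not a prescribed value).
        p = min(max(p, eps), 1.0 - eps)
        # Only one of the two terms is nonzero for any given label:
        # y == 1 keeps log(p), y == 0 keeps log(1 - p).
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total / len(y_true)

# Hypothetical labels and predictions, for illustration only.
labels = [1, 0, 1, 0]
mostly_right = [0.9, 0.1, 0.8, 0.2]
mostly_wrong = [0.1, 0.9, 0.2, 0.8]

print(binary_cross_entropy(labels, mostly_right))
print(binary_cross_entropy(labels, mostly_wrong))
```

Running this should show a much smaller loss for the predictions that agree with the labels than for the ones that confidently disagree, which is the behavior we want from a cost function.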

The log function is used here to result in a monotonic function ...