The cost function we will be using is called multinomial cross-entropy, which you will also see called categorical cross-entropy (the name Keras uses, and the one I'll use from here on). It is really just a generalization of the binary cross-entropy function that we saw in Chapter 4, Using Keras for Binary Classification.

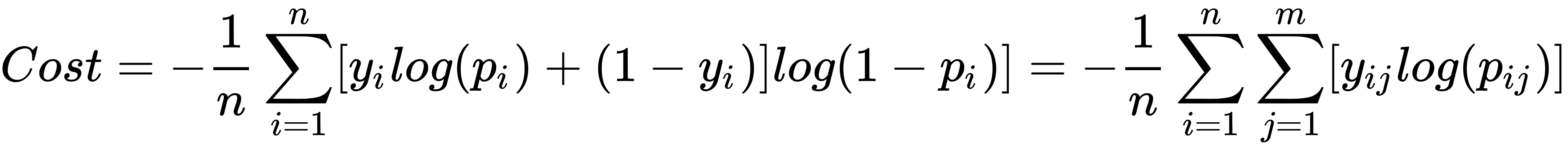

Instead of just showing you categorical cross-entropy, let's look at them both together. I'm going to assert they are equal, and then explain why:

The preceding equation is true when m = 2, where m is the number of classes.
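If you'd rather check that claim numerically than take my word for it, here is a minimal sketch. It assumes the standard textbook definitions of both losses, averaged over n samples, with m standing for the number of classes. The function names and the sample labels and probabilities are made up for illustration and are not taken from Keras.

```python
import math


def binary_cross_entropy(y_true, p_pos):
    """Mean binary cross-entropy; p_pos[i] is the predicted probability of class 1."""
    n = len(y_true)
    total = 0.0
    for y, p in zip(y_true, p_pos):
        total += y * math.log(p) + (1 - y) * math.log(1 - p)
    return -total / n


def categorical_cross_entropy(y_onehot, p):
    """Mean categorical cross-entropy over n samples and m classes."""
    n = len(y_onehot)
    total = 0.0
    for y_row, p_row in zip(y_onehot, p):
        for y_ij, p_ij in zip(y_row, p_row):
            total += y_ij * math.log(p_ij)
    return -total / n


# Hypothetical labels and predicted probabilities for a two-class problem.
labels = [1, 0, 1, 1]
p_class1 = [0.9, 0.2, 0.6, 0.75]

# The same data recast with m = 2: one-hot labels and one probability per class.
onehot = [[1 - y, y] for y in labels]
probs = [[1 - p, p] for p in p_class1]

bce = binary_cross_entropy(labels, p_class1)
cce = categorical_cross_entropy(onehot, probs)
print(bce, cce)  # the two values should agree up to floating-point error
```

With only two classes, each one-hot row keeps exactly one log term, and it is the same term the binary formula keeps, so the two sums match.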

OK, don't freak out. I know, that's a whole bunch of math. The categorical cross-entropy equation is the one on the far right, and binary cross-entropy is right next to it. Now, imagine a situation where ...