This world that we've framed up happens to be a Markov Decision Process (MDP), which has the following properties:

- It has a finite set of states, S

- It has a finite set of actions, A

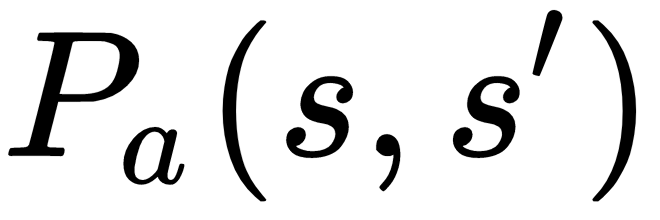

is the probability that taking action A will transition between state s and state

is the probability that taking action A will transition between state s and state

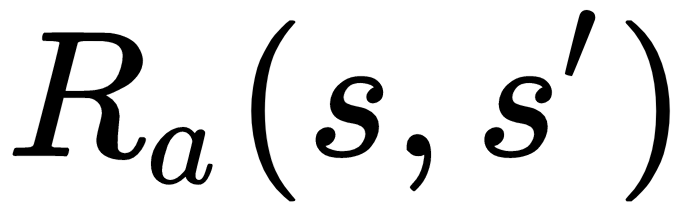

is the immediate reward for transition between s and

is the immediate reward for transition between s and - is the discount factor, which is how much we discount future rewards over present rewards (more on ...
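To make the five ingredients above concrete, here is a minimal Python sketch of an MDP as a plain data structure. Everything in it is an illustrative assumption rather than part of the original setup: the `MDP` class, the `toy` example with its "cool"/"hot" states and "slow"/"fast" actions, and all of the numbers are hypothetical. It also assumes the usual convention that a reward received t steps in the future is weighted by the discount factor raised to the power t.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MDP:
    """Hypothetical container for the five pieces of an MDP."""
    states: frozenset        # finite set of states, S
    actions: frozenset       # finite set of actions, A
    transitions: dict        # (s, a, s_next) -> probability of landing in s_next
    rewards: dict            # (s, a, s_next) -> immediate reward
    gamma: float             # discount factor

    def transition_prob(self, s, a, s_next):
        # Transitions that are not listed are treated as impossible.
        return self.transitions.get((s, a, s_next), 0.0)

    def is_valid(self, tol=1e-9):
        # For every (state, action) pair, the probabilities over
        # next states must sum to 1.
        for s in self.states:
            for a in self.actions:
                total = sum(self.transition_prob(s, a, s2) for s2 in self.states)
                if abs(total - 1.0) > tol:
                    return False
        return True


def discounted_return(rewards, gamma):
    """Sum a reward sequence, weighting the reward at step t by gamma**t."""
    total = 0.0
    for t, r in enumerate(rewards):
        total += (gamma ** t) * r
    return total


# A made-up two-state example, purely for illustration.
toy = MDP(
    states=frozenset({"cool", "hot"}),
    actions=frozenset({"slow", "fast"}),
    transitions={
        ("cool", "slow", "cool"): 1.0,
        ("cool", "fast", "cool"): 0.5,
        ("cool", "fast", "hot"): 0.5,
        ("hot", "slow", "cool"): 0.5,
        ("hot", "slow", "hot"): 0.5,
        ("hot", "fast", "hot"): 1.0,
    },
    rewards={
        ("cool", "slow", "cool"): 1.0,
        ("cool", "fast", "cool"): 2.0,
        ("cool", "fast", "hot"): 2.0,
        ("hot", "slow", "cool"): 1.0,
        ("hot", "slow", "hot"): 1.0,
        ("hot", "fast", "hot"): -10.0,
    },
    gamma=0.9,
)

print(toy.is_valid())
print(discounted_return([1.0, 1.0, 1.0], 0.5))
```

With a discount factor of 0.5, three rewards of 1.0 are worth 1 + 0.5 + 0.25 in total, which shows how later rewards count for less than immediate ones.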