9.3 Mapping Sound Features to Control Parameters

9.3.1 The Mapping Structure

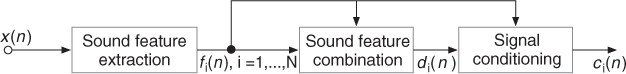

While recent studies define specific mapping strategies for gestural control of sound synthesizers [Wan02] or audio effects [WD00], some mapping strategies for ADAFX were derived specifically from the three-layer mapping that uses a perceptive layer [ACKV02, AV03, VWD06], which is shown in Figure 9.30. To convert sound features $f_i(n)$, $i = 1, \ldots, M$, into effect control parameters $c_j(n)$, $j = 1, \ldots, N$, we use an $M$-to-$N$ explicit mapping scheme³ divided into two stages: sound-feature combination and control-signal conditioning (see Figure 9.30).

Figure 9.30 Mapping structure between sound features and one effect control $c_i(n)$: sound features are first combined, and then conditioned in order to provide a valid control to the effect. Figure reprinted with IEEE permission from [VZA06].
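The two-stage structure described above can be illustrated with a minimal sketch. It rests on several assumptions that are not taken from the text: features are already normalized to [0, 1], the combination stage is a plain weighted sum, and the conditioning stage is a one-pole smoothing filter followed by clipping and scaling to each parameter's range. The names `combine_features`, `map_frame`, `weights`, `ranges`, and `smoothing` are hypothetical.

```python
from typing import List, Sequence, Tuple


def combine_features(features: Sequence[float],
                     weights: Sequence[Sequence[float]]) -> List[float]:
    """Stage 1 (assumed form): combine M features into N raw controls.

    weights[j][i] is the hypothetical contribution of feature i to control j.
    """
    return [sum(w * f for w, f in zip(row, features)) for row in weights]


def map_frame(features: Sequence[float],
              weights: Sequence[Sequence[float]],
              state: Sequence[float],
              ranges: Sequence[Tuple[float, float]],
              smoothing: float = 0.5) -> Tuple[List[float], List[float]]:
    """Map one frame of M features to N effect controls.

    Returns the conditioned controls and the updated smoothing state.
    """
    raw = combine_features(features, weights)
    # Stage 2a (assumed): one-pole low-pass filter to slow rapid variations.
    new_state = [smoothing * s + (1.0 - smoothing) * r
                 for s, r in zip(state, raw)]
    # Stage 2b (assumed): clip to [0, 1], then scale to the valid range
    # of each effect parameter so the control is always usable.
    controls = [lo + min(1.0, max(0.0, s)) * (hi - lo)
                for s, (lo, hi) in zip(new_state, ranges)]
    return controls, new_state


# Example with M = 2 features and N = 3 controls.
features = [0.5, 1.0]
weights = [[1.0, 0.0], [0.5, 0.5], [0.0, 2.0]]
ranges = [(0.0, 1.0), (100.0, 200.0), (0.0, 10.0)]
controls, state = map_frame(features, weights, [0.0, 0.0, 0.0], ranges)
print(controls)
```

Keeping the smoothing state in the normalized domain, separate from the scaled output, lets the same filter be reused regardless of each parameter's units.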

Sound features often vary rapidly and are sampled at a constant rate (synchronous data), whereas the gestural controls used in sound synthesis vary less frequently and are sometimes asynchronous. For that reason, we chose sound features for direct control of the effect, with optional gestural control used to modify the mapping between sound features and effect control parameters [VWD06], thus providing navigation by interpolation between presets.
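One way to picture navigation by interpolation between presets is the sketch below. It assumes, purely for illustration, that a preset is a matrix of combination weights and that a single gesture value `g` in [0, 1] blends two presets linearly; the text does not specify the interpolation method, and `interpolate_presets`, `preset_a`, and `preset_b` are hypothetical names.

```python
from typing import List, Sequence


def interpolate_presets(preset_a: Sequence[Sequence[float]],
                        preset_b: Sequence[Sequence[float]],
                        g: float) -> List[List[float]]:
    """Linearly blend two mapping presets (assumed to be weight matrices).

    g = 0 returns preset_a, g = 1 returns preset_b; values outside
    [0, 1] are clamped so the gesture cannot extrapolate.
    """
    g = min(1.0, max(0.0, g))
    return [[(1.0 - g) * a + g * b for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(preset_a, preset_b)]


# A slowly varying, possibly asynchronous gesture value selects the mapping;
# the sound features then drive the effect through the blended weights.
preset_a = [[1.0, 0.0]]  # control follows feature 1 only
preset_b = [[0.0, 1.0]]  # control follows feature 2 only
print(interpolate_presets(preset_a, preset_b, 0.25))
```

Because the gesture only changes the mapping, it can be updated at a much lower rate than the sound features without interrupting the control signal.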

Defining a clear mapping structure offers a higher level of ...
