Appendix A. On the Unreasonable Effectiveness of Data: Why Is More Data Better?

Note

This appendix is reproduced (with slight modifications and corrections) from a post, of the same name, from the author’s blog.

In the paper “The unreasonable effectiveness of data,”1 Halevy, Norvig, and Pererira, all from Google, argue that interesting things happen when corpora get to web scale:

simple models and a lot of data trump more elaborate models based on less data.

In that paper and the more detailed tech talk given by Norvig, they demonstrate that when corpora get to hundreds of millions or trillions of training sample or words, very simple models with basic independence assumptions can outperform more complex models such as those based on carefully-crafted ontologies with smaller data. However, they provided relatively little explanation as to why more data is better. In this appendix, I want to attempt to think through that.

I propose that there are several classes of problems and reasons for why more data is better.

Nearest Neighbor Type Problems

The first are nearest neighbor type problems. Halevy et al. give an example:

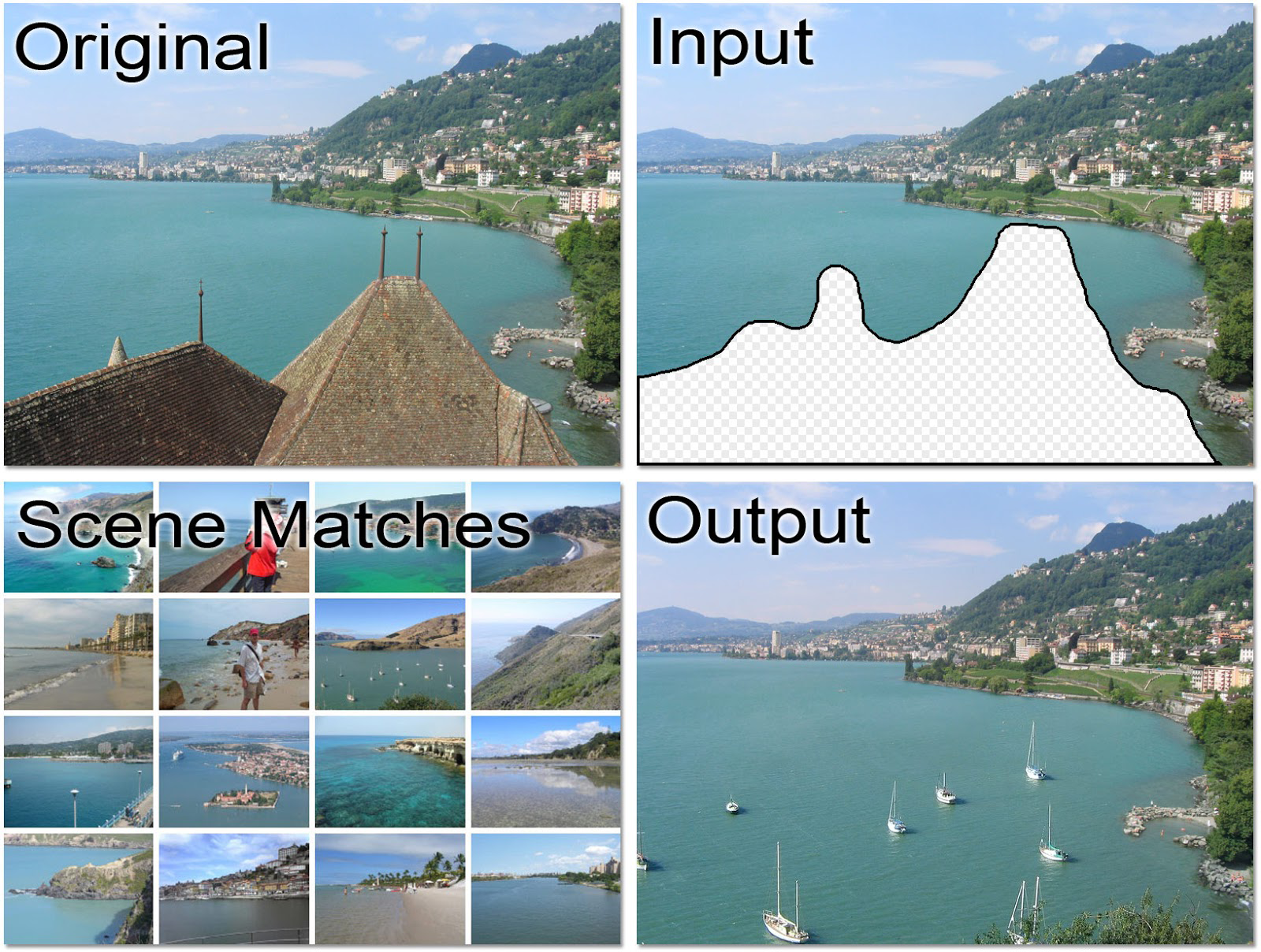

James Hays and Alexei A. Efros addressed the task of scene completion: removing an unwanted, unsightly automobile or ex-spouse from a photograph and filling in the background with pixels taken from a large corpus of other photos.2

Figure A-1. Hayes and Efros’s ...

Get Creating a Data-Driven Organization now with the O’Reilly learning platform.

O’Reilly members experience books, live events, courses curated by job role, and more from O’Reilly and nearly 200 top publishers.