7 CLASSIFIER SELECTION

7.1 PRELIMINARIES

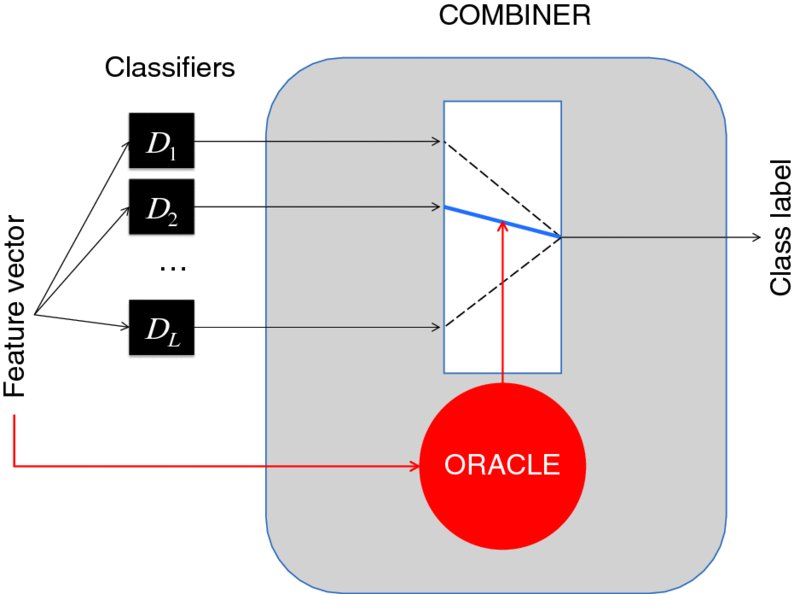

The presumption in classifier selection is that there is an oracle that can identify the best expert for a particular input x. This expert’s decision is accepted as the decision of the ensemble for x. The operation of a classifier selection ensemble is shown in Figure 7.1.

FIGURE 7.1 Operation of the classifier selection ensemble.
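
To make the operation concrete, the following minimal Python sketch shows an oracle nominating one expert for an input x and the ensemble returning that expert's decision. The names `select_and_classify`, `expert_left`, `expert_right`, and `toy_oracle` are hypothetical, and the toy oracle is hand-coded for a one-dimensional input. In practice the oracle is not given and has to be estimated, so this is an illustration under stated assumptions rather than a prescribed implementation.

```python
from typing import Callable, List, Sequence, Tuple

Classifier = Callable[[Sequence[float]], int]
Oracle = Callable[[Sequence[float]], int]


def select_and_classify(
    x: Sequence[float], experts: List[Classifier], oracle: Oracle
) -> Tuple[int, int]:
    """Return (label, expert_index): the decision of the single expert
    that the oracle nominates for x becomes the ensemble decision."""
    i = oracle(x)
    return experts[i](x), i


# Hypothetical experts on a 1-D input (illustration only).
def expert_left(x: Sequence[float]) -> int:
    return 0 if x[0] < -1.0 else 1


def expert_right(x: Sequence[float]) -> int:
    return 1 if x[0] > 1.0 else 0


# Hypothetical oracle: assumes expert 0 is the better one for negative
# inputs and expert 1 for the rest.
def toy_oracle(x: Sequence[float]) -> int:
    return 0 if x[0] < 0.0 else 1


experts = [expert_left, expert_right]
for x in ([-2.0], [0.5], [2.0]):
    label, chosen = select_and_classify(x, experts, toy_oracle)
    print(x, "-> expert", chosen, "label", label)
```

Only one expert is evaluated per input, which is the essential difference from fusion methods that combine the outputs of all ensemble members.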

We note that classifier selection is different from creating a large number of classifiers and then selecting an ensemble among these. We call the latter approach overproduce and select and discuss it in Chapter 8.

The idea of using different classifiers for different input objects was suggested by Dasarathy and Sheela in 1979 [82]. They combined a linear classifier and a k-nearest neighbor (k-nn) classifier. Their composite classifier identifies a conflict domain in the feature space and uses k-nn in that domain, while the linear classifier is used elsewhere. In 1981, Rastrigin and Erenstein [322] gave a methodology for classifier selection almost in the form in which it is used now.
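
A composite of a linear classifier and k-nn can be sketched as below. How the conflict domain is identified is not described here, so the sketch assumes a simple stand-in: the conflict domain is the set of points whose absolute linear discriminant score is below a threshold `margin`. This rule, together with the names `composite_classify`, `linear_score`, and `knn_label`, is an assumption made for illustration and should not be read as the procedure of [82].

```python
import math
from collections import Counter


def linear_score(x, w, b):
    """Linear discriminant score w.x + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def knn_label(x, train_X, train_y, k):
    """Majority label among the k training points nearest to x."""
    order = sorted(range(len(train_X)), key=lambda i: math.dist(x, train_X[i]))
    votes = Counter(train_y[i] for i in order[:k])
    return votes.most_common(1)[0][0]


def composite_classify(x, w, b, train_X, train_y, k=3, margin=1.0):
    """Use k-nn inside the (assumed) conflict domain |w.x + b| < margin,
    and the linear classifier elsewhere. Returns (label, classifier_used)."""
    s = linear_score(x, w, b)
    if abs(s) < margin:
        return knn_label(x, train_X, train_y, k), "knn"
    return (1 if s > 0 else 0), "linear"


# Toy 1-D data: the classes overlap near the linear boundary at 0.
train_X = [[-0.5], [-0.2], [0.1], [0.4], [3.0], [-3.0]]
train_y = [0, 0, 0, 1, 1, 0]
w, b = [1.0], 0.0

for x in ([2.5], [-2.0], [0.2]):
    print(x, composite_classify(x, w, b, train_X, train_y))
```

For the point 0.2, which lies in the assumed conflict domain, the linear rule alone would assign class 1, whereas the three nearest neighbors vote for class 0. Far from the boundary the cheaper linear classifier is used.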

We may assume that the classifier “realizes” its competence for labeling x. For example, if the 10-nearest neighbor classifier is used, and 9 of the 10 neighbors suggest the same class label, then the confidence in the decision is high. If the classifier outputs are reasonably well calibrated estimates of the posterior probabilities, then the ...
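
The k-nn example can be turned into a number by taking the proportion of neighbors that agree with the majority label. The short sketch below assumes this vote proportion as the confidence measure, which is one simple choice among several. The function name `knn_vote_confidence` is hypothetical.

```python
from collections import Counter


def knn_vote_confidence(neighbor_labels):
    """Return (majority_label, proportion of neighbors voting for it)."""
    votes = Counter(neighbor_labels)
    label, count = votes.most_common(1)[0]
    return label, count / len(neighbor_labels)


# 9 of the 10 nearest neighbors carry the same label.
labels = ["a"] * 9 + ["b"]
print(knn_vote_confidence(labels))  # ('a', 0.9)
```

A split closer to even, such as 6 against 4, would give a confidence of 0.6 and would indicate that the classifier is less competent for that particular x.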
