16.1. Least-Squares Method

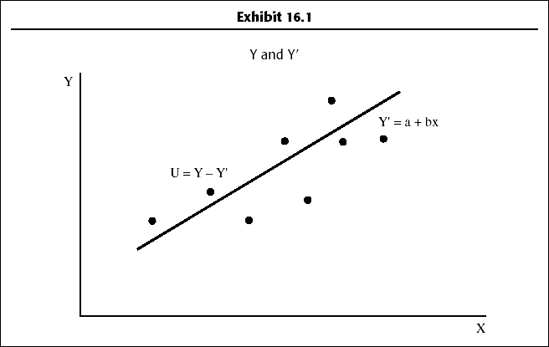

The least-squares method is widely used in regression analysis to estimate the parameter values in a regression equation. Regression uses all of the observed data to find a line of best fit, and the least-squares method is the technique used to find that line. Exhibit 16.1 shows the regression relationship.

To explain the least-squares method, we define the error, denoted by u, as the difference between the observed value and the estimated value.

- Symbolically, $u = Y - Y'$

- where $Y$ = observed value of the dependent variable

- $Y'$ = estimated value based on $Y' = a + bX$

The least-squares criterion requires that the line of best fit be the one for which the sum of the squared errors (each error being the vertical distance in Exhibit 16.1 from an observed data point to the line) is a minimum; that is,

- Minimum: $\sum u^2 = \sum (Y - a - bX)^2$
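As a minimal sketch of the criterion, the Python function below computes Σu² for any candidate intercept a and slope b. The function name `sum_squared_errors` and the four data points are hypothetical, chosen to lie exactly on Y = 1 + 2X so that this line has zero error while any other line scores worse.

```python
def sum_squared_errors(xs, ys, a, b):
    """Return the sum of u**2, where u = Y - Y' and Y' = a + b*X."""
    if len(xs) != len(ys):
        raise ValueError("xs and ys must have the same length")
    total = 0.0
    for x, y in zip(xs, ys):
        u = y - (a + b * x)  # error: observed value minus estimated value
        total += u * u
    return total


# Hypothetical data that lie exactly on the line Y = 1 + 2X.
xs = [1, 2, 3, 4]
ys = [3, 5, 7, 9]

print(sum_squared_errors(xs, ys, a=1, b=2))    # 0.0 (perfect fit)
print(sum_squared_errors(xs, ys, a=1, b=2.5))  # 7.5 (a worse line)
```

The least-squares line is, by definition, the choice of a and b that makes this function as small as possible.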

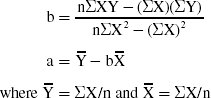

Using differential calculus, we obtain the following equations, called the normal equations:

- $\sum Y = na + b\sum X$

- $\sum XY = a\sum X + b\sum X^2$
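The differential-calculus step can be written out explicitly: set the partial derivative of $\sum u^2 = \sum (Y - a - bX)^2$ with respect to each parameter equal to zero, then divide by $-2$ and rearrange.

```latex
\begin{aligned}
\frac{\partial}{\partial a}\sum u^2 &= -2\sum (Y - a - bX) = 0
  &&\Longrightarrow\; \sum Y = na + b\sum X \\
\frac{\partial}{\partial b}\sum u^2 &= -2\sum X\,(Y - a - bX) = 0
  &&\Longrightarrow\; \sum XY = a\sum X + b\sum X^2
\end{aligned}
```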

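Solving the equations for b and a yields

- $b = \dfrac{n\sum XY - (\sum X)(\sum Y)}{n\sum X^2 - (\sum X)^2}$

- $a = \bar{Y} - b\bar{X}$, where $\bar{Y} = \sum Y / n$ and $\bar{X} = \sum X / n$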
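Solving the two normal equations gives b = (nΣXY − ΣXΣY) / (nΣX² − (ΣX)²) and then a = Ȳ − bX̄. The sketch below turns those two formulas into a Python function that works directly from the column sums; the function name `least_squares_from_sums` and the sums in the usage line are hypothetical, not taken from Exhibit 16.2.

```python
def least_squares_from_sums(n, sum_x, sum_y, sum_xy, sum_x2):
    """Solve the normal equations for the intercept a and slope b.

    b = (n*sum_xy - sum_x*sum_y) / (n*sum_x2 - sum_x**2)
    a = mean(Y) - b * mean(X)
    """
    denominator = n * sum_x2 - sum_x ** 2
    if denominator == 0:
        raise ValueError("all X values are equal, so the slope is undefined")
    b = (n * sum_xy - sum_x * sum_y) / denominator  # compute b first
    a = sum_y / n - b * (sum_x / n)                 # then a = Ybar - b * Xbar
    return a, b


# Hypothetical sums for X = 1..5 and Y = 2, 4, 5, 4, 5.
a, b = least_squares_from_sums(n=5, sum_x=15, sum_y=20, sum_xy=66, sum_x2=55)
print(round(a, 4), round(b, 4))  # 2.2 0.6
```

Computing b before a mirrors the order used in the text, since the formula for a needs b.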

Example 1

To illustrate the computations of b and a, we will refer to the data in Exhibit 16.2. All the sums required are computed and shown in the exhibit.
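The sums in a worksheet like Exhibit 16.2 can be produced mechanically from paired observations. The sketch below assumes the data arrive as two equal-length Python lists; `worksheet_sums`, `print_worksheet`, and the five observations are hypothetical stand-ins, not the exhibit's actual figures.

```python
def worksheet_sums(xs, ys):
    """Return n and the column totals used in the least-squares formulas."""
    if len(xs) != len(ys) or not xs:
        raise ValueError("X and Y must be non-empty and the same length")
    return {
        "n": len(xs),
        "sum_x": sum(xs),
        "sum_y": sum(ys),
        "sum_xy": sum(x * y for x, y in zip(xs, ys)),
        "sum_x2": sum(x * x for x in xs),
    }


def print_worksheet(xs, ys):
    """Print one row per observation (X, Y, XY, X^2) followed by a totals row."""
    print(f"{'X':>6}{'Y':>6}{'XY':>8}{'X^2':>8}")
    for x, y in zip(xs, ys):
        print(f"{x:>6}{y:>6}{x * y:>8}{x * x:>8}")
    t = worksheet_sums(xs, ys)
    print(f"{t['sum_x']:>6}{t['sum_y']:>6}{t['sum_xy']:>8}{t['sum_x2']:>8}")


# Hypothetical observations (not the Exhibit 16.2 data).
xs = [1, 2, 3, 4, 5]
ys = [2, 4, 5, 4, 5]
print_worksheet(xs, ys)
print(worksheet_sums(xs, ys))
```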

From Exhibit 16.2:

Substituting these values into the formula for b first:

Thus, $Y' = 10.5836 + 0.5632X$
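Once a and b are known, the fitted equation gives an estimate Y' for any value of X. The short sketch below simply evaluates Y' = 10.5836 + 0.5632X; the function name `estimate` and the X values in the loop are arbitrary illustrations, and estimates far outside the range of the observed X data should be treated with caution.

```python
A = 10.5836  # intercept a from Example 1
B = 0.5632   # slope b from Example 1


def estimate(x):
    """Return the estimated value Y' = a + bX on the fitted line."""
    return A + B * x


# Illustrative X values only.
for x in (0, 10, 20):
    print(x, round(estimate(x), 4))
```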
