Chapter 4. Storage

Large-scale data processing operations access data in a way that traditional file systems are not designed for. Data tends to be written and read in large batches, multiple megabytes at a time. Efficiency is a higher priority than features like directories that help organize information in a user-friendly way. The massive size of the data also means that it needs to be distributed across multiple machines. As a result, several specialized technologies have appeared that support those needs, trading away some of the features that POSIX standards require of general-purpose file systems.

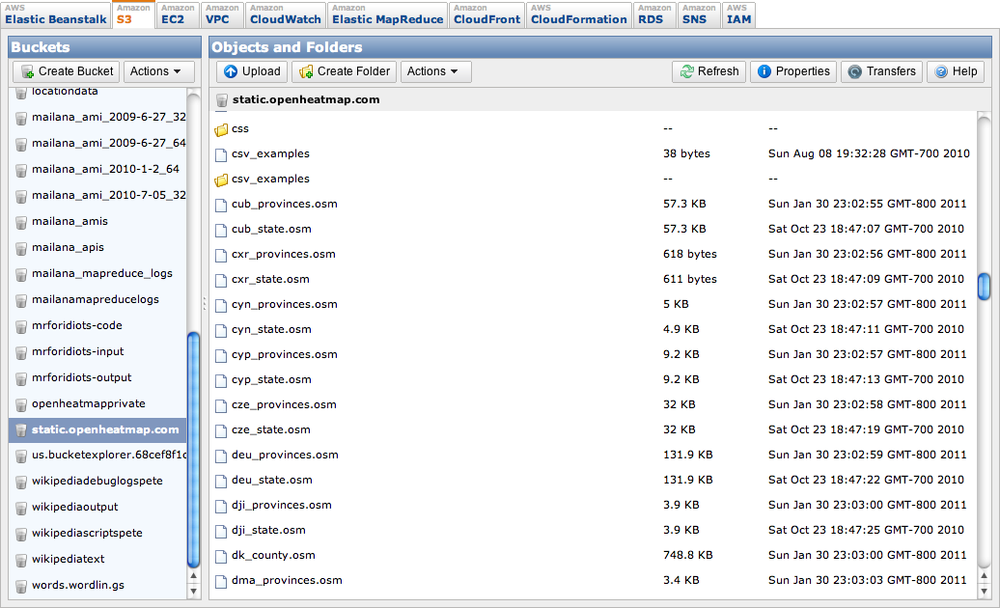

Amazon’s S3 service lets you store large chunks of data online, with an interface that makes it easy to retrieve the data over the standard web protocol, HTTP. One way of looking at it is as a file system that’s missing some features, like appending to, rewriting, or renaming files, and true directory trees. You can also see it as a key/value database that’s available as a web service and optimized for storing large amounts of data in each value.

To give a concrete example, you could store the data for a .png image into the system using the API provided by Amazon. You’d first have to create a bucket, which is a bit like a global top-level directory that’s owned by one user, and which must have a unique name. You’d then supply the bucket name, a file name (which can contain slashes, and so may appear like a file in a subdirectory), the data itself, and any metadata to create the object.
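To make the key/value view concrete, here is a minimal in-memory sketch in Python. It is not Amazon’s API: `ToyBucket`, `ToyObject`, and their methods are hypothetical names invented for illustration. It only shows how a flat map of keys can look like a directory tree when the keys contain slashes.

```python
from dataclasses import dataclass, field


@dataclass
class ToyObject:
    data: bytes
    metadata: dict


@dataclass
class ToyBucket:
    """Hypothetical in-memory stand-in for a bucket; not the Amazon API."""
    name: str
    objects: dict = field(default_factory=dict)  # flat map: key -> ToyObject

    def put(self, key, data, metadata=None):
        # A put always stores the whole value; there is no append or rename.
        self.objects[key] = ToyObject(data, dict(metadata or {}))

    def get(self, key):
        return self.objects[key]

    def list(self, prefix=""):
        # "Directories" are only a naming convention: listing filters by prefix.
        return sorted(k for k in self.objects if k.startswith(prefix))


bucket = ToyBucket("yourbucket")
bucket.put("your/file/name.png", b"\x89PNG...", {"Content-Type": "image/png"})
bucket.put("your/other.txt", b"hello", {"Content-Type": "text/plain"})
```

Calling `bucket.list("your/file/")` returns only the keys that start with that prefix, which is as close to a subdirectory as this model gets.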

If you specified that the object was publicly accessible, you’d then be able to access it through any web browser at an address like http://yourbucket.s3.amazonaws.com/your/file/name.png. If you supplied the right content-type in the metadata, it would be displayed as an image in your browser.
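As a rough sketch of how that address and content-type fit together, the Python below builds the URL from a bucket name and key and guesses a content-type from the file extension. The helper names `public_url` and `guess_content_type` are my own, and the fallback type is an assumption; only the URL pattern comes from the example above.

```python
import mimetypes
from urllib.parse import quote


def public_url(bucket, key):
    # quote() leaves slashes alone by default, so the key still reads as a path.
    return f"http://{bucket}.s3.amazonaws.com/{quote(key)}"


def guess_content_type(key, default="application/octet-stream"):
    # Guess from the file extension; fall back to a generic binary type (assumption).
    content_type, _encoding = mimetypes.guess_type(key)
    return content_type or default


url = public_url("yourbucket", "your/file/name.png")
content_type = guess_content_type("your/file/name.png")
```

Here `url` matches the address shown above and `content_type` comes out as `image/png`, which is the value you’d want to store in the object’s metadata.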

I use S3 a lot because it’s cheap, well-documented, reliable, fast, copes with large amounts of traffic, and is very easy to access from almost any environment, thanks to its support of HTTP for reads. In some applications I’ve even used it as a crude database, when I didn’t need the ability to run queries and was only storing a comparatively small number of large data objects. It also benefits from Amazon’s investment in user interfaces and APIs that have encouraged the growth of an ecosystem of tools.

The Hadoop Distributed File System (HDFS) is designed to support applications like MapReduce jobs that read and write large amounts of data in batches, rather than randomly accessing lots of small files. It abandons some POSIX requirements to achieve this, but unlike S3, it does support renaming and moving files, along with true directories. You can only write to a file once, at creation time. This restriction makes it easier to handle coherency problems when the data’s hosted on a cluster of machines: cached copies of the file can be read from any machine that has one, without having to check whether the contents have changed. The client software stores up written data in a temporary local file until there’s enough to fill a complete HDFS block. All files are stored in these blocks, with a default size of 64 MB. Once enough data has been buffered, or the write operation is closed, the local data is sent across the network and written to multiple servers in the cluster, to ensure it isn’t lost if there’s a hardware failure.
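The buffering behavior can be sketched in a few lines of Python. This is a toy model, not the Hadoop client: `ToyBlockWriter` is a hypothetical name, the buffer lives in memory rather than in a temporary local file, nothing is actually sent over a network, and the replica count of 3 is an assumption standing in for "multiple servers."

```python
BLOCK_SIZE = 64 * 1024 * 1024  # the 64 MB default block size cited in the text


class ToyBlockWriter:
    """Hypothetical model of write-once, block-at-a-time buffering."""

    def __init__(self, block_size=BLOCK_SIZE, replicas=3):
        self.block_size = block_size
        self.replicas = replicas   # assumption: three copies of each block
        self.buffer = bytearray()  # stands in for the temporary local file
        self.shipped = []          # (block_length, replica_count) per block sent
        self.closed = False

    def write(self, data):
        if self.closed:
            raise ValueError("file is closed and cannot be written again")
        self.buffer.extend(data)
        # Ship every complete block as soon as enough data has been buffered.
        while len(self.buffer) >= self.block_size:
            self._ship(self.block_size)

    def close(self):
        # Closing flushes whatever partial block is left.
        if self.buffer:
            self._ship(len(self.buffer))
        self.closed = True

    def _ship(self, length):
        block = bytes(self.buffer[:length])
        del self.buffer[:length]
        self.shipped.append((len(block), self.replicas))


writer = ToyBlockWriter(block_size=4)  # tiny blocks to keep the example readable
writer.write(b"abcdef")
writer.write(b"ghi")
writer.close()
```

With a 4-byte block size, the nine bytes written end up as two full blocks and one 1-byte block, and any further `write` call raises an error because the file is already closed.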

To simplify the architecture, HDFS uses a single name node to keep track of which files are stored where. This does mean there’s a single point of failure and potential performance bottleneck. In typical data processing applications, the metadata it’s responsible for is small and not accessed often, so in practice this doesn’t usually cause performance problems. The manual intervention needed for a name node failure can be a headache for system maintainers, though.
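A toy version of the name node’s bookkeeping shows why that metadata stays small. The Python below is a sketch under my own assumptions: `ToyNameNode`, the block IDs, the node names, and the file paths are all made up, and the real name node tracks far more than this.

```python
class ToyNameNode:
    """Hypothetical model: maps each file path to its blocks and their locations."""

    def __init__(self):
        self.block_map = {}  # path -> list of (block_id, [data node names])

    def add_block(self, path, block_id, nodes):
        self.block_map.setdefault(path, []).append((block_id, list(nodes)))

    def locate(self, path):
        # Clients ask where the blocks live, then read from the data nodes directly.
        return self.block_map[path]

    def rename(self, old_path, new_path):
        # Renaming only touches this map; no block data moves between machines.
        self.block_map[new_path] = self.block_map.pop(old_path)


name_node = ToyNameNode()
name_node.add_block("/logs/day1", "blk_1", ["node-a", "node-b", "node-c"])
name_node.add_block("/logs/day1", "blk_2", ["node-b", "node-c", "node-d"])
name_node.rename("/logs/day1", "/archive/day1")
```

Every lookup goes through this one map, which is why a single name node is both simple to reason about and a single point of failure.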
