Hijacking the JVM

The refrain “Java is slow” haunts Java developers to this day. Most of this criticism derives from the experiences of non-Java developers with early JVMs in the mid-to-late 1990s. Those of us who work with Java know that it has come on in leaps and bounds since then. The driving force behind this improvement is also the key to speeding up JPC: the environment of a conventional Java process can be partitioned very simply into program regions and data regions.

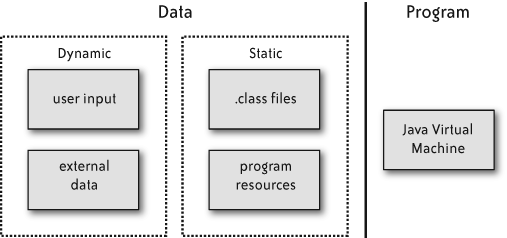

In Figure 9-6 we can see that the data region is further split into static data, which can be known at compile time, and dynamic data, which cannot. The bulk of the static data in a Java environment is the class bytes loaded from the classpath. Although the class bytes are loaded as data, they actually represent code, and the JVM will interpret them at runtime. We would therefore like to maneuver these class bytes onto the other side of the diagram.

Figure 9-6. Program and data regions in a Java process
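To make the "class bytes are data that represent code" point concrete, here is a minimal sketch in which a class file is first read as an ordinary byte array and then handed to a class loader so that it becomes something the JVM can execute. The names `ClassBytesDemo`, `Adder`, and `ByteArrayLoader` are hypothetical and exist only for this illustration; this is not JPC's own loading code. It assumes Java 9 or later (for `InputStream.readAllBytes`) and that the compiled `.class` file is reachable as a classpath resource.

```java
import java.io.IOException;
import java.io.InputStream;
import java.lang.reflect.Method;

class ClassBytesDemo {

    /** A tiny class whose compiled bytes we will treat as plain data. */
    public static class Adder {
        public static int add(int a, int b) {
            return a + b;
        }
    }

    /** Hypothetical loader that turns a byte array into a class. */
    static class ByteArrayLoader extends ClassLoader {
        Class<?> define(String name, byte[] bytes) {
            // defineClass is the point where data crosses over into code.
            return defineClass(name, bytes, 0, bytes.length);
        }
    }

    /** Reads the class file of c from the classpath as raw bytes (static data). */
    static byte[] readClassBytes(Class<?> c) throws IOException {
        String resource = c.getName().replace('.', '/') + ".class";
        try (InputStream in = c.getClassLoader().getResourceAsStream(resource)) {
            if (in == null) {
                throw new IOException("class file not found: " + resource);
            }
            return in.readAllBytes();
        }
    }

    /** Loads Adder's bytes as data, defines a fresh class from them, and calls add. */
    static int addViaLoadedBytes(int a, int b) throws Exception {
        byte[] bytes = readClassBytes(Adder.class);
        Class<?> reloaded = new ByteArrayLoader().define(Adder.class.getName(), bytes);
        Method add = reloaded.getMethod("add", int.class, int.class);
        return (Integer) add.invoke(null, a, b);
    }

    public static void main(String[] args) throws Exception {
        System.out.println("bytes read as data: " + readClassBytes(Adder.class).length);
        System.out.println("result from code defined out of those bytes: "
                + addViaLoadedBytes(2, 3));
    }
}
```

Until `defineClass` is called, the array is indistinguishable from any other data in the heap; afterwards the same bytes back a class that the JVM can interpret or compile.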

In a “Just-In-Time” compiled environment such as Sun HotSpot, the commonly used sections of bytecode are translated, or dynamically compiled, into the native instruction set of the host machine. This moves the class bytes from the data region into the program region. These classes then execute as native code, which accelerates the program to native speed. See ...
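One rough way to watch this happen is to call a small method many times and ask the JVM how much time it has spent compiling. The sketch below uses the standard `java.lang.management.CompilationMXBean`; the class name `JitWarmupDemo`, its methods, and the call counts are assumptions chosen for illustration. Whether and when a particular method gets compiled depends on the JVM and its settings, so the reported figures will vary between runs and may be unavailable on some JVMs.

```java
import java.lang.management.CompilationMXBean;
import java.lang.management.ManagementFactory;

class JitWarmupDemo {

    /** Small method that becomes "hot" when called often enough. */
    static long sumOfSquares(int n) {
        long total = 0;
        for (int i = 1; i <= n; i++) {
            total += (long) i * i;
        }
        return total;
    }

    /** Calls sumOfSquares repeatedly so a JIT has a reason to compile it. */
    static long runHot(int calls, int n) {
        long checksum = 0;
        for (int c = 0; c < calls; c++) {
            checksum += sumOfSquares(n);
        }
        return checksum;
    }

    /** Accumulated JIT compilation time in milliseconds, or -1 if not reported. */
    static long jitMillis() {
        CompilationMXBean jit = ManagementFactory.getCompilationMXBean();
        if (jit == null || !jit.isCompilationTimeMonitoringSupported()) {
            return -1;
        }
        return jit.getTotalCompilationTime();
    }

    public static void main(String[] args) {
        long before = jitMillis();
        long checksum = runHot(20_000, 1_000);
        long after = jitMillis();
        System.out.println("checksum: " + checksum);
        System.out.println("JIT compile time before (ms): " + before);
        System.out.println("JIT compile time after (ms): " + after);
    }
}
```

Running the same program with the standard `-Xint` launcher option forces interpreted-only execution, which gives a feel for the gap between interpreting the class bytes and running them as native code.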
