Within the customer support industry, social media and big data have been among the most important recent trends in taking care of customers effectively. Tools in this area hold the promise of automating routine customer responses, improving visibility of customer opinions, and enabling customers to interact with one another as a community. At a deeper level, they signal a sea change in how much of a voice customers have within the business community.

Like other areas that have “gone social,” customer care is a field where the hype and promise of social media may never be matched by reality. At the same time, social media has become a key part of the reality of serving customers for companies of all sizes. As we discussed in Chapter 2, social media can be used in two ways: as a method of reaching out to customers (a new channel for interaction), or as a source of data that can be leveraged in a completely different context. In this chapter, we look at the more operational questions: if you use social media as a channel, why should you do so, and which metrics should you observe to serve your customers better? This chapter is not meant to be an in-depth guide to setting up a social media customer care center; the challenges of such a task are more operational than technical. Instead, it looks at the operational metrics and data around social-enabled customer care. In Chapter 5, we will look more closely at what else we might do with customer care data beyond purely operational actions.

At the beginning of the millennium, many companies saw customer care as a necessary evil, a margin-diminishing cost center. As long as service was not outrageously bad, you could get by. As a consequence, many companies tried to cut costs by reducing so-called handling times with the customer. Unfortunately, this was the wrong approach. Agents would become curt on the phone in order to keep their handling times short, process more calls, and appear more productive. Escalation to more knowledgeable staff was discouraged, and long hold times (complete with bad music) were common. The business case for customer service revolved largely around cost control rather than customer satisfaction, brand promotion, and customer retention.

It was a system of cost cutting and negativity, and the result is all too well known: many of us dread having to contact a customer service call center. Initial advances in technology made things even worse. Instead of classical music, you were faced with a computer that asked you 1,001 questions and hung up on you if you did not answer correctly. “Sorry, I could not understand your 18-digit-long customer ID, goodbye!” Only customers with repeat or large purchases were treated differently, getting a separate phone number with shorter waiting times or even a dedicated customer care representative.

The onset of social media, however, has shifted power back toward the customer. There is one fundamental reason for this: visibility. When a company delivers poor service to one isolated customer at a time, the damage to its reputation is often gradual, and meeting performance metrics at the expense of the customer becomes all too tempting. In the world of social media, however, unsatisfied customers are immediately on display for all to see. First, service provided directly via social media is often public, so others can see the Facebook posts or tweets exchanged between the company and its customer. Second, customers can now share and distribute their own opinions and reviews.

As we saw in Chapter 2, user reviews have a strong influence on the purchasing decision process. Those reviews can cover any interaction with the product, whether positive or negative, and allowing this “free” discussion can even instill trust in the product or service. However, this holds only as long as there are not too many unhappy customers online complaining about the product.

Suddenly, the hidden discussions between a call center somewhere in the off-shored world and you—the customer—became public and open.

In 2005, influential blogger Jeff Jarvis, the creator of Entertainment Weekly magazine, began a series of posts entitled “Dell Hell,” detailing his unsuccessful attempts to get Dell Computer to fix his laptop.[79] It struck a chord with readers and generated hundreds of comments from others with similarly bad experiences. He was not the first customer to complain about Dell, nor the first to write a column or blog about it; at that time, many clients could be found complaining about the service. However, his reach within the social media world gave him the ability to get Dell’s attention.

The attention in the media was enormous, and so was the damage to Dell’s image. Dell, however, reacted directly and proactively; it changed its PR policies and invited Jeff to visit its headquarters. More important, it made moves to become more responsive to consumers, including reaching out to disgruntled customers on blogs, allowing customers to rate products on its site, and starting a blog. Jarvis was impressed enough that he eventually wrote a column in BusinessWeek magazine praising the company’s efforts.[80] Dell has learned its lesson and become more attentive to the voice of the customer. Still, the damage from this time has stuck with it, and one can still find references to “Dell Hell” or complaint sites such as dell.pissedconsumer.com.

What if the Dell customer care representative had known that the person on the other end of the line was a journalist and influential blogger? What if the phone system could have assessed the likelihood that this person was influential enough to create a PR and marketing disaster? Dell would almost certainly have given him preferential treatment from the beginning and avoided the whole PR issue.

The history of social media is full of customer complaints that blew up into public relations disasters. They all follow a similar storyline: David (the poor, beaten-up customer) versus Goliath (the big, cold, bad corporation). One of the most public PR blowups in this ongoing saga was United Airlines’ response to baggage problems.

In 2009, as his bandmates watched in horror from the passenger window, Canadian musician Dave Carroll’s expensive Taylor guitar was broken by rough handling from baggage handlers on a United flight. He tried to get reimbursed, but after numerous contacts, United declined to pay for the damage. Dave was probably not the first person to encounter this situation. However, his response was to write a song and publish a music video on YouTube titled “United Breaks Guitars.” At the time of this writing, it had been watched more than 13 million times, and it was a public relations nightmare for United Airlines. When top managers realized what was happening, they finally offered to address Dave’s case, but it was too late: the public damage was already done. Though it is hard to put a number on the damage done by this video, some sources claimed it was a factor in a subsequent $180 million drop in United Airlines’ market value, nearly 10% of its market capitalization. While the consensus nowadays is that the viral video alone was unlikely to have had such a large financial effect, it certainly hurt the company’s reputation.[81]

Perhaps a more important lesson is that today, you don’t need to be an influential blogger or a talented video producer to make your voice heard in social media. In a case eerily similar to “United Breaks Guitars,” in late 2012, working musician Dave Schneider had his vintage guitar crushed by baggage handlers after Delta Air Lines refused to allow him to carry it on board. He described Delta’s response as a “runaround” until he took to social media channels, including Facebook and Gawker. It wasn’t until Yahoo! News ran a feature story the following month that Delta finally agreed to pay for repairs to his guitar, as well as give him vouchers for two free future flights.[82]

Examples such as these demonstrate that the open nature of social media makes it much harder to hide bad customer service. Will the consequence be that everyone gets VIP treatment? Although every company says, “Our customers are king,” this is not true in practice. Most companies need to deliver a healthy bottom line for their shareholders, and VIP treatment for everyone would cost far too much.

The solution is to use your own service tools to help customers who are complaining on social media. In the following chapter, we’ll show that this doesn’t need to come with more cost and that it provides you with new ways to measure performance and to gather insights.

Similar to what we discussed in Chapter 1, social media provides a complementary channel to the existing ways of communicating with your customers. Instead of calling a toll-free number, your customer can tweet or write on your Facebook wall. Then your social media team responds to the customer, with no need to wait on hold or stand in line.

This is the fantasy of social customer service. In some cases, it has been the reality as well: for example, cable service provider Comcast helped restore its customer service reputation, in part through the efforts of a talented social media team led by executive Frank Eliason, whom BusinessWeek dubbed “the most famous customer service manager in the US” after the success of his @ComcastCares service channel on Twitter. On a broader level, many customer support automation and CRM vendors now offer capabilities to manage customer issues through Facebook fan pages or Twitter.[83]

On the other hand, despite the hype, people are not all flocking to vent their customer concerns through social media. According to the customer support portal SupportIndustry.com, only 20% of customer support centers currently serve customers via social media, and these in turn handle only 1% to 10% of their transactions through this channel. With a few exceptions, such as Microsoft Xbox’s Elite TweetFleet (@XboxSupport), a dedicated team of representatives that proactively monitors Twitter for comments from gamers, social media has become just one of many channels for customer care, along with telephone, web chat, and others.[84]

So what does customer care 2.0 really look like? It is a world where companies interact with their customers as they always did, but with two key differences. First, some customer contact channels, such as social media support or online user communities, now expose the quality of service to the public. The incentive to squeeze performance metrics or control costs is now tempered by the reality that people will often see how well they or others are served. Second, customers now have a voice they never had before. Many of us rarely make purchases, particularly major ones, without first checking online comments and ratings from other consumers.

This trend has tremendous implications for both the profession of customer care and the underlying data analytics that drive it. Let’s look at some of the ramifications of moving toward customer care 2.0.

Customer care does not just serve customers in the present moment. It also generates knowledge that can often be reused and mined as data. There are now methodologies such as the Knowledge-Centered Support (KCS) approach of the IT Infrastructure Library (ITIL), developed by the nonprofit Consortium for Service Innovation in the 1990s. KCS outlines a process by which agents generate solutions, which are validated through reuse and then published for external use. Major corporations have increasingly adopted such approaches in recent years.

Another increasingly important source of knowledge is the customers themselves. For any product or service, people often use search engines such as Google to see if others have posted solutions to customer issues on their blogs or social media sites. And for larger companies, formal online support communities often serve as a rich source of knowledge. The main challenge for such communities is scale: to have sufficient participation, you need either a large or a very dedicated user base. Hence such communities are mainly found among larger firms like Apple, Dell, and Microsoft, or companies that have created a high degree of attachment to their product, such as Evernote.

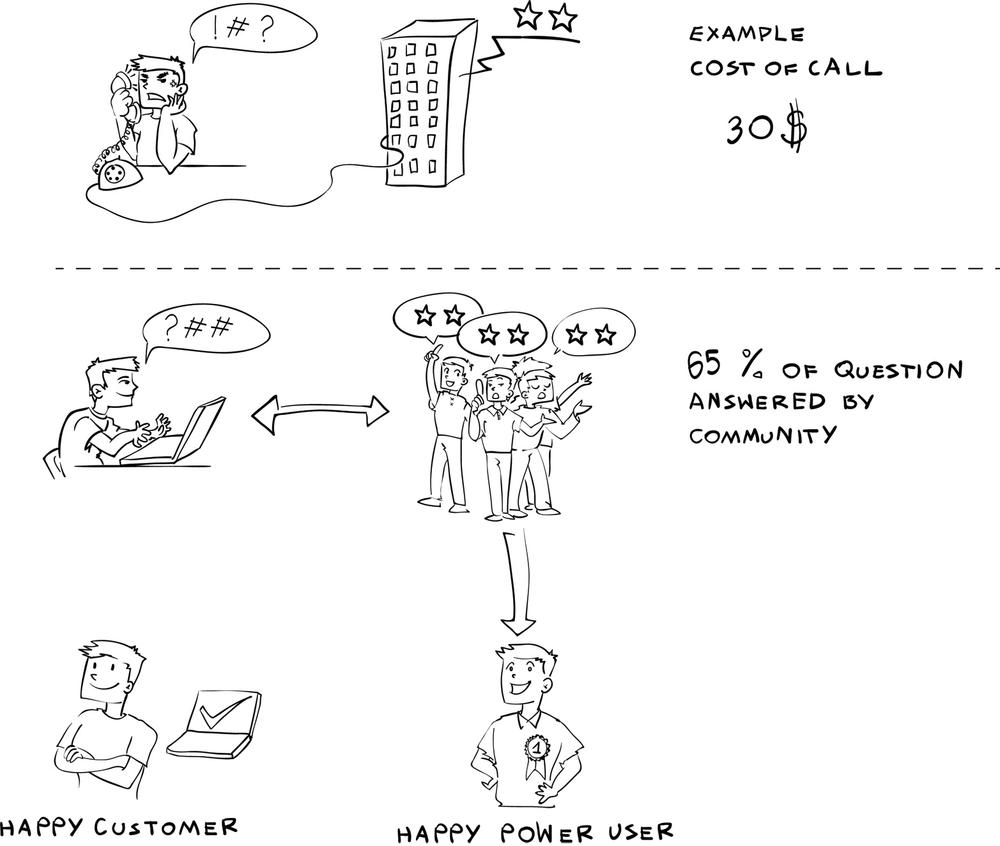

In both cases, one goal of knowledge databases is to have customers serve themselves, an objective that benefits both the customer and the company’s finances. Figure 4-1 compares the two approaches. A customer calling into the call center creates cost, let’s say $5 to $10 per call.[85] Most questions have been asked before, and most are known to the community. In Figure 4-1 we assume that 65% of all questions can be answered by the community. Customers who get their question answered are less likely to call in: only 40% still call to seek a second opinion, so 60% of those calls are “deflected.” Because 60% of 65% is 39%, the call center receives about 39% fewer calls.
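The arithmetic behind Figure 4-1 is simple enough to reproduce. The Python sketch below multiplies the share of questions the community can answer by the share of those customers who then do not call. The 65%, 60%, and $5 to $10 figures come from the text; the monthly call volume is a hypothetical example value.

```python
# Sketch of the call-deflection arithmetic behind Figure 4-1.
# The 65% / 60% shares and the $5-$10 cost range come from the text;
# the monthly call volume is a hypothetical example value.

def deflected_calls(total_calls: float, answerable_share: float,
                    deflection_rate: float) -> float:
    """Calls avoided because customers found an answer in the community."""
    return total_calls * answerable_share * deflection_rate


def monthly_savings(total_calls: float, answerable_share: float,
                    deflection_rate: float, cost_per_call: float) -> float:
    """Money saved on avoided calls at a given cost per call."""
    return deflected_calls(total_calls, answerable_share, deflection_rate) * cost_per_call


calls = 10_000  # hypothetical monthly call volume
avoided = deflected_calls(calls, 0.65, 0.60)
print(f"Calls avoided: {avoided:.0f} ({avoided / calls:.0%} of volume)")
for cost in (5, 10):
    print(f"At ${cost} per call: ${monthly_savings(calls, 0.65, 0.60, cost):,.0f} saved")
```

With 10,000 calls a month, this gives 3,900 avoided calls (the 39% above), worth roughly $19,500 to $39,000 at the assumed cost per call.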

Moreover, each person who expresses appreciation for an answer from the community encourages more people to join, which strengthens the brand awareness of the product.

Social customer service benefits your employees as well as your customers. Few brands enjoy the economies of scale of a Microsoft or Apple, so instead of relying on self-service communities, many firms will build up their own social customer care teams. You will soon find that those teams have the highest morale within the customer service organization.

Service staff usually get criticized for all kinds of product- or marketing-related issues. They often have to take complaints about situations they can neither fix nor avoid, and staff who try hard often get only a quick “thank you” at the end of a call. This is different in customer care conversations over social media. Here, happy clients often post a written, publicly visible statement, and this public praise boosts employee morale. For example, Jens Riewa, a famous German television presenter, had an issue with his telecom provider. The social media team offered fast help without long phone queues. As a personal sign of appreciation, Riewa offered to “wave” his pen shortly before the end of his evening news program. Björn Ognibeni, who advised this social media customer care team, later reported, “This one single moment had created an enthusiasm that carried the team forward for a while.”

Of course, an in-house social media team comes with its own costs. Because these teams are publicly visible, and bad service could damage the brand and image, they need to be carefully trained. To build a social customer care center, you must select staff who can not only solve issues but also communicate effectively. In some cases, this will cost more than using an outsourced call center, but hopefully with an overall benefit to your brand and market share.

We discussed in PR to Warn that social media has the capability to spread information fast and effectively. Unsatisfied customers who go online and tell their woes to a larger audience are often a cause for PR issues such as those we saw in the examples at the beginning of this chapter.

Now there will always be cases where a customer leaves unhappy. Not even VIP service for all would fix this issue. A well-run social customer care system should be able to detect a potential danger of dissatisfaction spreading. There is no bulletproof system for detecting spreading or contagious messages. However, the more confined the system is, the better the chance of such detection. With customer care you actually have some good ways to measure the extent to which a given complaint is important.

Factors from the old, pre-social-media world, such as revenue, are still important for understanding the potential impact of this kind of service call. But social media adds a new component to the mix: the social network.

How well networked is someone? We concluded in Chapter 1 that the effect of word of mouth is often overestimated, because a network can create reach but not necessarily buying intention. However, an unhappy comment can suppress buying intention, and when it comes with reach, that public comment needs greater attention.

Customer care teams should prioritize messages by analyzing how unhappy a comment is and how likely it is to spread to a larger audience. This assessment needs to be automated so that the customer service team replies to the most urgent messages first.
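To make the idea concrete, here is a minimal Python sketch of such automatic triage. It assumes each incoming complaint already carries an unhappiness score between 0 and 1 (from whatever sentiment or rules engine you use) and a reach figure such as follower count. The `Complaint` class, the `priority` formula, and the log scaling of reach are our own hypothetical choices, not an established standard.

```python
import heapq
import math
from dataclasses import dataclass


@dataclass
class Complaint:
    author: str
    text: str
    unhappiness: float  # 0.0 (neutral) to 1.0 (furious), assumed to come from a classifier
    reach: int          # e.g. follower count


def priority(c: Complaint) -> float:
    """Hypothetical urgency score: unhappiness weighted by log-scaled reach."""
    return c.unhappiness * math.log10(1 + c.reach)


def triage(complaints: list[Complaint]):
    """Yield complaints from most to least urgent."""
    # heapq is a min-heap, so store negated priorities; the index breaks ties.
    heap = [(-priority(c), i, c) for i, c in enumerate(complaints)]
    heapq.heapify(heap)
    while heap:
        _, _, complaint = heapq.heappop(heap)
        yield complaint


inbox = [
    Complaint("@angry_traveler", "Third time my bag is lost!", 0.9, 120),
    Complaint("@tech_blogger", "Support still hasn't called back.", 0.6, 35_000),
    Complaint("@casual_user", "Website is a bit slow today", 0.2, 500),
]
for c in triage(inbox):
    print(f"{priority(c):.2f}  {c.author}: {c.text}")
```

With these made-up inputs, the furious customer with 120 followers is outranked by the moderately unhappy one with 35,000 followers, which is exactly the trade-off the scoring rule encodes. Log-scaling reach keeps a single celebrity account from drowning out everyone else; in practice you would tune the formula against your own escalation history.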

The potential reach could be assessed with complex metrics such as centrality or betweenness. As a first proxy, however, it is sufficient to measure network size: the bigger the network, the greater the risk of information spreading. Within Twitter or Facebook, for example, you can derive two variables for each complaining customer:

- What is the size of the social network of the customer?

Network size is the basic variable. The more people a customer can complain to, the more dangerous the complaint might be.

- How big is the network after the second degree of separation?

For customer service complaints, this metric is most likely more powerful than the size of the individual network alone. A message often spreads only once it reaches a certain gatekeeper, as we saw in Chapter 3. Take the Vancouver Olympics in 2010. After the IOC had issued guidelines about how athletes should use social media so as not to alienate their respective sponsors, Lindsey Vonn wrongly claimed that the IOC had forbidden any use of social media. The IOC reacted promptly and tweeted, “Athletes, go ahead and tweet.” This catchy phrase did not catch on among the followers of the Olympic Twitter account. But it did start spreading after Lindsey Vonn published it herself to her 35,000 followers, which provided the needed tipping point.
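Both variables can be computed with a breadth-first search over the follower graph. The sketch below assumes you have already collected, for each account, the set of accounts that follow it (the `followers` dictionary and the account names are hypothetical); it counts the distinct accounts within one or two hops. Real platforms limit how much of this graph you can retrieve, so treat it as an illustration of the metric rather than a data-collection recipe.

```python
from collections import deque


def reach_within(graph: dict[str, set[str]], start: str, max_depth: int) -> int:
    """Count distinct accounts reachable from `start` in at most `max_depth` hops.

    `graph` maps each account to the set of accounts that follow it.
    The starting account itself is not counted.
    """
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        account, depth = queue.popleft()
        if depth == max_depth:
            continue  # do not expand beyond the requested degree of separation
        for follower in graph.get(account, set()):
            if follower not in seen:
                seen.add(follower)
                queue.append((follower, depth + 1))
    return len(seen) - 1


# Hypothetical follower graph: who follows whom.
followers = {
    "customer": {"ann", "bob"},
    "ann": {"carl", "dana"},
    "bob": {"dana", "ed", "customer"},
}
print(reach_within(followers, "customer", 1))  # first-degree network size: 2
print(reach_within(followers, "customer", 2))  # network after the second degree: 5
```

Even in this toy graph, the second-degree count is more than double the first-degree count; in a real network, a single well-connected follower can make that jump far larger.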

The size of your customers’ social networks is not the only metric for analyzing commentary within your customer care discussions. Most other measurements are very similar to those we discussed in Chapter 3. Engagement and content are other critical areas to measure:

- Engagement

Messages that attract a lot of public attention via likes, retweets, or a similar spreading mechanism should be ranked higher within the customer care system to draw a customer care representative’s attention to them.

- Content

Likewise, messages should be flagged if they use certain critical words or if the conversation starts to become long and repetitive.
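Both signals are easy to approximate in code. In the sketch below, the critical-word list, the engagement weights, and the thresholds for “long” and “repetitive” are hypothetical placeholders that you would tune to your own support history. Repetitiveness is approximated crudely as the share of messages in a thread that repeat an earlier one once case and whitespace are normalized.

```python
import re

# Hypothetical list of words that should alert a representative.
CRITICAL_WORDS = {"cancel", "lawyer", "refund", "scam", "never again"}


def engagement_score(likes: int, shares: int, replies: int) -> int:
    """Hypothetical weighting: shares spread a message furthest, so weight them most."""
    return likes + 3 * shares + 2 * replies


def content_flags(thread: list[str], max_messages: int = 6,
                  repeat_threshold: float = 0.5) -> list[str]:
    """Return human-readable flags for one customer's conversation thread."""
    flags = []
    text = " ".join(thread).lower()
    hits = sorted(w for w in CRITICAL_WORDS
                  if re.search(r"\b" + re.escape(w) + r"\b", text))
    if hits:
        flags.append("critical words: " + ", ".join(hits))
    if len(thread) > max_messages:
        flags.append("long conversation")
    # Share of messages that repeat an earlier one (case and spacing ignored).
    normalized = [" ".join(m.lower().split()) for m in thread]
    if normalized:
        repeats = len(normalized) - len(set(normalized))
        if repeats / len(normalized) >= repeat_threshold:
            flags.append("repetitive")
    return flags


thread = ["My line is dead", "my line is dead", "My line   is dead", "I want a refund"]
print(content_flags(thread))
print(engagement_score(likes=40, shares=12, replies=5))
```

Here the thread is flagged both for the word “refund” and for repeating itself; a real system would combine these flags with the engagement score and the reach metrics when ranking the queue.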

A social CRM does not just offer the ability to pick up customers where they are. It also offers a unique ability to amplify successful customer service interactions. Just as publicity amplifies negative messages, it can amplify positive ones. Of course, to become truly contagious, a message needs to be appealing. “I fixed your telephone line” will probably not spread, except in cases like the phone line of TV presenter Jens Riewa.

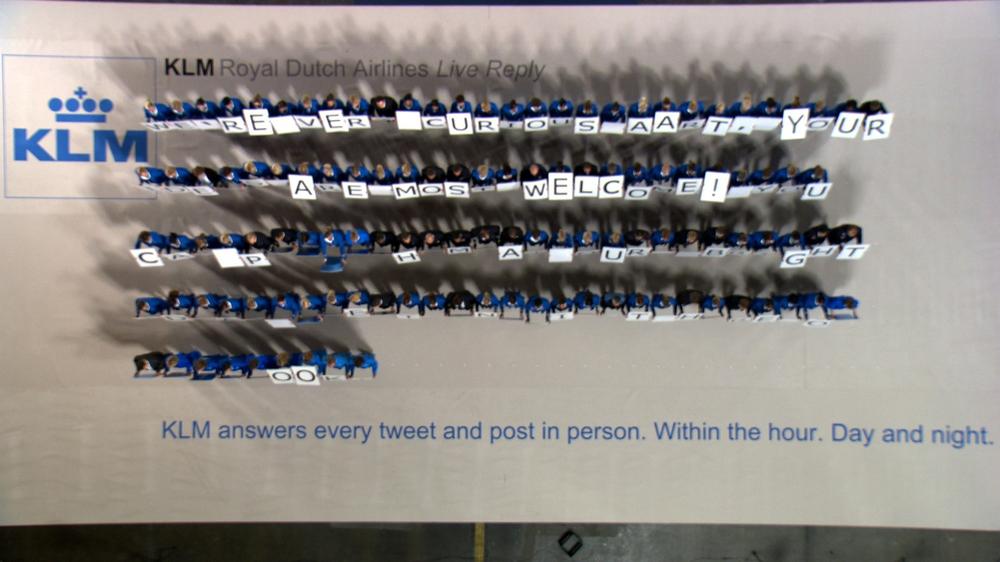

Dutch airline KLM understood this. To introduce its new 24/7 Facebook and Twitter support, it created live reply messages (see Figure 4-2): each social media customer care representative carried a letter, and the team lined up to spell out a response. This process was filmed and published on YouTube. It was an instant hit, the news spread, and KLM became known for its great social customer care.

Even seemingly outrageous complaints can become a positive PR opportunity, as one British feminine hygiene company showed with an excellent video response to a critical Facebook post. A man posted (probably tongue-in-cheek) that Bodyform had “lied” by showing active women enjoying themselves during their periods, when in fact his own girlfriend became like “the little girl from The Exorcist with added venom” during hers.

In response, the company posted a YouTube video response from a fictitious CEO named Carolyn Williams. She apologized for this deception, stating that it was a response to focus groups in the 1980s showing shocked and fearful men crying as they learned about their partner’s periods. She went on to admit that there was no such thing as a happy period, and closed by breaking wind and noting that “we do that, too.” The video was a viral hit that resulted in tremendous publicity for the company on social media.[86]

Are you convinced that you should “go social” in your CRM? Good! Here are a few dos and don’ts that we have seen:

You should help and serve your clients publicly. There is no need to wait until they call in; address them where you find them, in the same channel, and do it publicly to show that you care. But keep in mind that 140 characters are not enough to handle conflict or customer care topics. So once you pick clients up, try to move them into your traditional service tool as soon as possible. This transition should be painless for users, who came to complain, not to navigate several customer service channels.

It is important to get clients into your traditional service tool for several reasons. First, the customer’s issue might involve credit card details or other private information, which should not be handled in the public space. This may sound obvious, but to make sure it never happens, move customers as quickly and painlessly as possible into a nonpublic tool.

A second reason to move customers swiftly to your own channels is control. As many companies moved onto social networking sites like Facebook, they found that their clients were not interested in “liking” the latest marketing promotion but rather in voicing their issues with the product. As a natural consequence, companies opened up and responded on the social networking platform with feedback and advice, using the social network as their own customer care platform. While a good idea in principle, this carries an inherent risk, because companies own neither the data nor the layout of the page. As soon as a platform decides to change its page layout, companies have to follow suit, whether or not the change is useful for customer care. This is another reason to move clients as quickly as possible into online tools where the company owns the content.

The third reason to move from a public discussion in a social networking platform to a more controlled environment is that visible customer care discussions are also visible to your competition. They might use the discussions to analyze your weaknesses (see Automation and Business Intelligence) or even try to engage with your customers by offering a better service or a better solution to the customer’s issues.

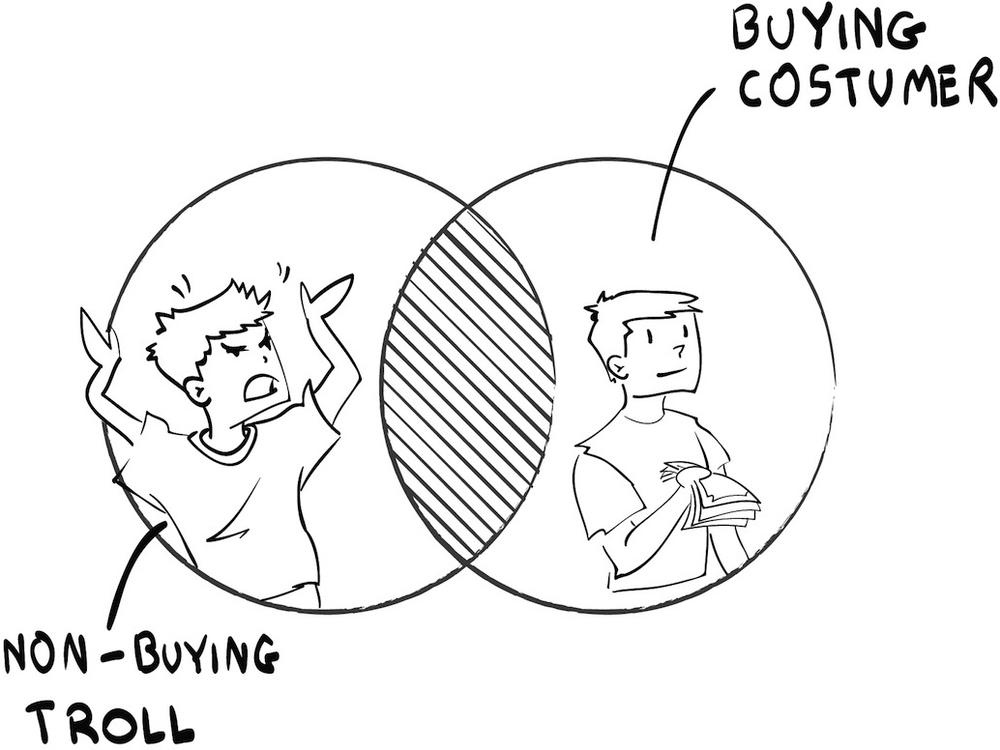

Any customer service representative can tell you what a “troll” is. Trolls existed long before the onset of social media, but social media has made their life even more pleasant. A troll is someone who simply enjoys complaining and bullying, often in a loud and nasty way. Should an organization jump to give these “trolls” a higher service level? Not necessarily. They may not be the customers the organization is trying to reach. It is often helpful to draw a diagram like Figure 4-3: there is not always an overlap between the people who are complaining and the ones who are buying.
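A diagram like Figure 4-3 can also be backed with numbers. Assuming you can match social media handles to customer records (a hypothetical step that raises its own privacy and matching issues), a few lines of set arithmetic show how many complainers are actually customers. The handles below are made up.

```python
def overlap_report(complainers: set[str], customers: set[str]) -> dict[str, float]:
    """Summarize the overlap between two audiences, as in a Venn diagram."""
    both = complainers & customers
    union = complainers | customers
    return {
        # Of everyone complaining, what share actually buys from us?
        "share_of_complainers_who_buy": len(both) / len(complainers) if complainers else 0.0,
        # Jaccard index: size of the overlap relative to both circles combined.
        "jaccard": len(both) / len(union) if union else 0.0,
    }


complainers = {"@net_activist1", "@net_activist2", "@blogger", "@traveler"}
customers = {"@traveler", "@commuter1", "@commuter2", "@student", "@family", "@retiree"}
print(overlap_report(complainers, customers))
```

A low share, like the 25% in this toy example, hints that the loudest voices may not be your buyers. It is not proof, since unmatched handles may still belong to customers.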

At the end of 2010, the German train company Deutsche Bahn decided to start its own Facebook offering. To drive initial uptake of Facebook fans, the Bahn offered a so-called “chef ticket.” Those were only available to Facebook fans of the Deutsche Bahn and were considerably cheaper than they would have been otherwise. Depending on the kind of trip, you could have saved up to almost 90% of a ticket price.

The campaign was supported by a YouTube video and an associated marketing package. The video was leaked to the public a few days before the actual campaign started, and the German social media scene was not impressed. The company was not known for innovative marketing, and this Facebook page raised the ire of many net activists. Complaints about the tone, execution, and content were voiced on Twitter, Facebook, and in news articles.

However, all of these complaints happened before the Facebook page even went live. What should the company have done? Stop the campaign? Apologize publicly? The company decided to do nothing and went live as planned.

In the end, the complaining net activists were not the fans who were interested in buying train tickets; the circles displayed in Figure 4-3 did not overlap much. Meanwhile, customers loved the price reduction, and the Facebook campaign became a success. Within the first 24 hours, more than 5,000 “fans” registered. After six weeks, the number had risen to over 60,000 fans. There are no official numbers on the financial success of the campaign, but insiders say that over 180,000 tickets were sold, accounting for $5.6 million in revenue.

Any customer care setup faces the question of staffing, whether it involves traditional channels such as the telephone or newer social channels. Complex models, such as the Erlang C model, exist for determining how many agents are needed to serve customers. An understaffed call center frustrates customers who are waiting on hold or cannot get through; likewise, a staff shortage in social customer care frustrates people who expect at least a same-day response (or, in some cases such as a flight delay, a same-hour response). Moreover, in social customer care your lack of response is extremely visible to the public.
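For readers who want to see what the Erlang C model actually computes, here is a minimal Python sketch. It uses the standard Erlang C formula for the probability that a contact has to wait, plus the usual service-level approximation built on it. The model assumes random (Poisson) arrivals, exponentially distributed handling times, no abandonment, and agents who handle one contact at a time; social media agents often juggle several conversations, so treat the result as a rough staffing estimate. The arrival rate, handling time, and targets in the example are hypothetical.

```python
import math


def erlang_c(agents: int, traffic: float) -> float:
    """Probability that an arriving contact must wait (Erlang C formula).

    traffic = arrival rate x average handling time, measured in Erlangs.
    """
    if agents <= traffic:
        return 1.0  # the queue grows without bound, so every contact waits
    term = 1.0   # A^k / k!, starting at k = 0
    total = 0.0  # running sum of A^k / k! for k < agents
    for k in range(agents):
        total += term
        term *= traffic / (k + 1)
    # term now equals A^N / N!
    waiting = term * agents / (agents - traffic)
    return waiting / (total + waiting)


def service_level(agents: int, traffic: float, aht: float, target_wait: float) -> float:
    """Share of contacts answered within target_wait (same time unit as aht)."""
    p_wait = erlang_c(agents, traffic)
    return 1.0 - p_wait * math.exp(-(agents - traffic) * target_wait / aht)


def agents_needed(arrivals_per_hour: float, aht_minutes: float,
                  target_wait_minutes: float, target_level: float) -> int:
    """Smallest number of agents that meets the service-level target."""
    traffic = arrivals_per_hour * aht_minutes / 60.0
    agents = max(1, math.ceil(traffic))
    while service_level(agents, traffic, aht_minutes, target_wait_minutes) < target_level:
        agents += 1
    return agents


# Hypothetical peak: 240 messages/hour, 6 minutes each, 80% answered within 5 minutes.
print(agents_needed(240, 6, 5, 0.80))
```

Changing `target_wait_minutes` from 5 to 60 shows how much a same-hour response target relaxes staffing compared with phone-style answer times.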

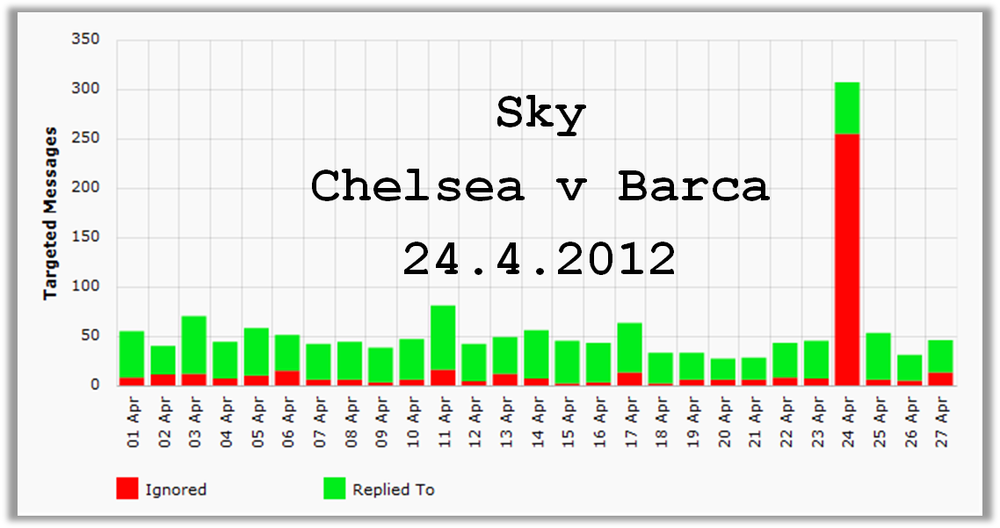

Even well-oiled social customer care systems can look bad during an unexpected peak. In April 2012, during one of the most important football matches, the pay-TV channel Sky had broadcasting difficulties, and the Twitter community reacted with outrage. Luke Brynley-Jones, a blogger from Our Social Times, illustrated this with a response metric over time (see Figure 4-4). More than 60% of all messages simply went unanswered. While Sky normally provides very good service, in this case it could not cope.
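A response metric like the one in Figure 4-4 is straightforward to compute once each incoming message is marked as answered or not. The sketch below is our own illustration, not Brynley-Jones’s method: it groups messages into fixed time buckets and reports the unanswered share per bucket. The timestamps are hypothetical, and `bucket_minutes` is assumed to divide 60 evenly.

```python
from collections import defaultdict
from datetime import datetime


def unanswered_share_by_bucket(messages, bucket_minutes: int = 15) -> dict:
    """messages: iterable of (timestamp, answered) pairs.

    Returns {bucket start time: share of messages left unanswered}.
    bucket_minutes must divide 60 evenly.
    """
    totals = defaultdict(int)
    unanswered = defaultdict(int)
    for ts, answered in messages:
        start_minute = (ts.minute // bucket_minutes) * bucket_minutes
        bucket = ts.replace(minute=start_minute, second=0, microsecond=0)
        totals[bucket] += 1
        if not answered:
            unanswered[bucket] += 1
    return {bucket: unanswered[bucket] / totals[bucket] for bucket in sorted(totals)}


# Hypothetical messages during a broadcast outage.
msgs = [
    (datetime(2012, 4, 1, 20, 3), True),
    (datetime(2012, 4, 1, 20, 10), False),
    (datetime(2012, 4, 1, 20, 20), False),
    (datetime(2012, 4, 1, 20, 25), False),
]
for bucket, share in unanswered_share_by_bucket(msgs).items():
    print(bucket.strftime("%H:%M"), f"{share:.0%} unanswered")
```

Plotted over time, a rising unanswered share is an early signal to call in the extra help discussed next.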

The lesson here is to have additional resources available on demand. These could be employees who normally have a different job but can step in to help during a crisis. For example, electronics retail chain Best Buy encourages employees at all levels of the organization to tweet answers to customers as part of its Twelpforce. Together with its regular social media footprint, including blogs, a Facebook page, and community forums, the company credits this model with over $5 million in call center deflection. Infrastructures such as these help to ingrain a social service culture into the company culture.[87]

Social customer care can certainly add value to your service offerings. However, if the lessons of history are any indication, it would not be a good idea to bet your competitiveness on it. The bad news is that, just like having a web page or an email address, social customer care is quickly becoming a commodity. Any business can set up a Facebook fan page or a Twitter account in a matter of minutes, and these are rapidly becoming routine and expected.

One of the best examples from the past is the case of banks in the United States. Years ago, banks were not often noted for their service quality. Then a few banks started to brand themselves around offering better customer service, and others soon followed suit. Today high customer satisfaction is not a competitive asset for a bank; it is simply a hygiene factor that is expected in the marketplace.

A more subtle case has evolved in the computer industry. Apple combines a very efficient customer support infrastructure, which depends heavily on online resources such as knowledge bases and crowdsourced user community forums, with free premium in-person service at the “Genius Bar” in its stores, along with features such as free computer courses and easy-to-access service staff. The model for the Genius Bar was the hotel lounge, with the idea that customers should be as relaxed as they are in such lounges. Apple set a standard that has led many other hardware suppliers to improve their service support. Today, in many industries, good customer service has become an expectation, and social customer care is about to become a commodity as well.

With all that customer commentary online, a new segment of business intelligence was born to analyze how companies interact with their clients and how happy those clients are. The results promised to be faster and easier to obtain than any customer satisfaction index. Tools to dig through large amounts of unstructured commentary and distill customer opinion data from millions of online social media interactions developed at a fast pace. Their main promise was to reveal what customers were thinking about your products and your competitors’ products. Listening to client conversations seemed to promise a replacement for surveys: we would no longer need to ask our customers; we would just need to listen to their conversations. While this is true in principle, the reality in most cases is very different.

We will see in Chapter 9 how complex it is to set up a keyword search. But the keywords only determine the data pool to be analyzed, that is, which conversations are selected. The real work begins after this selection. Automated business intelligence tries to derive metrics that tell us what customers thought, what they felt, and so on. This is a far more complex task, and unfortunately it often goes wrong because of the complexity of sentiment analytics.

With sentiment analytics, we try to determine the sentiment toward a specific subject. Does this customer like my product or not? We look for clues in the text that indicate a sentiment that might not be clearly formulated. Human language can be very subtle and multifaceted, and therefore too complex to be analyzed easily by an automated machine-learning program. You might have heard this argument before, when the first automated translation systems hit the market. They were terrible, and we claimed that human language was too multifaceted for them. We can assume that sentiment analytics will improve over time, through statistical methods or simply through “brute force” computation, just as translation programs did. They will never be perfect, but they will be much better.

Until we see those improvements, we need to take the same approach we took with automated translation programs: reduce the scope of the task. Instead of looking for all possible sentiments, we analyze only specific cases, for example, “What do you think about this restaurant?” The possible answers here are more limited, so a computer can be trained to be more focused, and the sentiment algorithm can produce reliable and useful results. Whether that sentiment data will yield the fourth “V” of data, however, depends very much on whether the question you trained the machine to answer actually has a tangible, business-centric action attached. For example, the question “What do you think of this restaurant?” easily helps companies like Yelp sort through thousands of user-generated comments and rank good restaurants higher in their search results. In the PR world, however, sentiment is often measured only in hindsight, as a reporting number that does not lead to action.
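Training a narrowly scoped classifier can be sketched in a few lines. The example below uses multinomial Naive Bayes with add-one smoothing, one standard text-classification technique chosen here for brevity; it is not a claim about what any particular vendor uses. The six labeled restaurant comments are made-up toy data; a usable model would need thousands of labeled examples from the domain you care about.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesSentiment:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts: list[str], labels: list[str]) -> "NaiveBayesSentiment":
        self.classes = sorted(set(labels))
        self.doc_counts = Counter(labels)
        self.word_counts = {c: Counter() for c in self.classes}
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = set().union(*self.word_counts.values())
        self.total_words = {c: sum(self.word_counts[c].values()) for c in self.classes}
        return self

    def predict(self, text: str) -> str:
        n_docs = sum(self.doc_counts.values())
        best_label, best_score = None, -math.inf
        for c in self.classes:
            score = math.log(self.doc_counts[c] / n_docs)  # class prior
            denom = self.total_words[c] + len(self.vocab)
            for word in tokenize(text):
                if word in self.vocab:  # words never seen in training are skipped
                    score += math.log((self.word_counts[c][word] + 1) / denom)
            if score > best_score:
                best_label, best_score = c, score
        return best_label


# Made-up toy training data for one narrow question: "What do you think of this restaurant?"
train = [
    ("Delicious food and friendly staff", "positive"),
    ("Great pasta, lovely atmosphere", "positive"),
    ("Best pizza in town, will come back", "positive"),
    ("Cold food and rude waiter", "negative"),
    ("Terrible service, we waited an hour", "negative"),
    ("Overpriced and bland, never again", "negative"),
]
model = NaiveBayesSentiment().fit([t for t, _ in train], [label for _, label in train])
print(model.predict("friendly staff and delicious pasta"))
print(model.predict("rude waiter and cold food"))
```

Because the training data covers only restaurant comments, the model knows nothing about, say, airline complaints; that narrow scope is exactly what makes its answers easier to trust within the domain.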

The next section provides some examples of the issues involved in trying to leverage the stream of social media information into actionable customer intelligence.

In late 2009, an Asian airline asked us to analyze the satisfaction level of its passengers. The best method would be to poll passengers with a questionnaire. This would create structured data, but it would take time and money. Is there an easier way to get this data? Yes: by using social media comments from passengers.

Of course, the biggest challenge here is separating the signal from the noise. As we will discuss in Chapter 9, the first step is a data-reduction process, in which we reduce the media types surveyed (such as focusing on Twitter data), the data content (for example, restricting attention to tweets referencing the company's Twitter handle or hashtag), and the use case (such as common words used by customers, particularly frustrated ones).
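To make the data-reduction step concrete, here is a minimal Python sketch. It assumes the tweets have already been collected from Twitter (the media-type reduction), keeps only those that mention a company handle or hashtag, and optionally narrows them further with a use-case word list. The handle `@ExampleAir`, the hashtag, and the function name `reduce_tweets` are hypothetical placeholders, not part of any real tool.

```python
import re

def reduce_tweets(tweets, handle, hashtag, use_case_words=None):
    """Keep tweets that mention the handle or hashtag; optionally require a use-case word."""
    # \b stops "@ExampleAir" from also matching "@ExampleAirport".
    mention = re.compile(
        r"(?:{}|{})\b".format(re.escape(handle), re.escape(hashtag)),
        re.IGNORECASE,
    )
    kept = [t for t in tweets if mention.search(t)]
    if use_case_words:
        # Use-case reduction: keep only tweets containing at least one listed word.
        wanted = {w.lower() for w in use_case_words}
        kept = [t for t in kept if wanted & set(re.findall(r"[a-z']+", t.lower()))]
    return kept
```

Calling `reduce_tweets(tweets, "@ExampleAir", "#ExampleAir", use_case_words={"lost", "delayed"})` would keep only tweets that both mention the (hypothetical) airline and contain one of the frustration words.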

Jeffrey Breen (@JeffreyBreen) has done a similar exercise for US airlines. He applied the same kinds of reductions and used R, an open source software environment and programming language for statistical computing and graphics, to analyze data from the open Twitter API. He describes his approach step by step in a SlideShare presentation.[88] We encourage anyone with some technical interest to try this at home. It is fun.

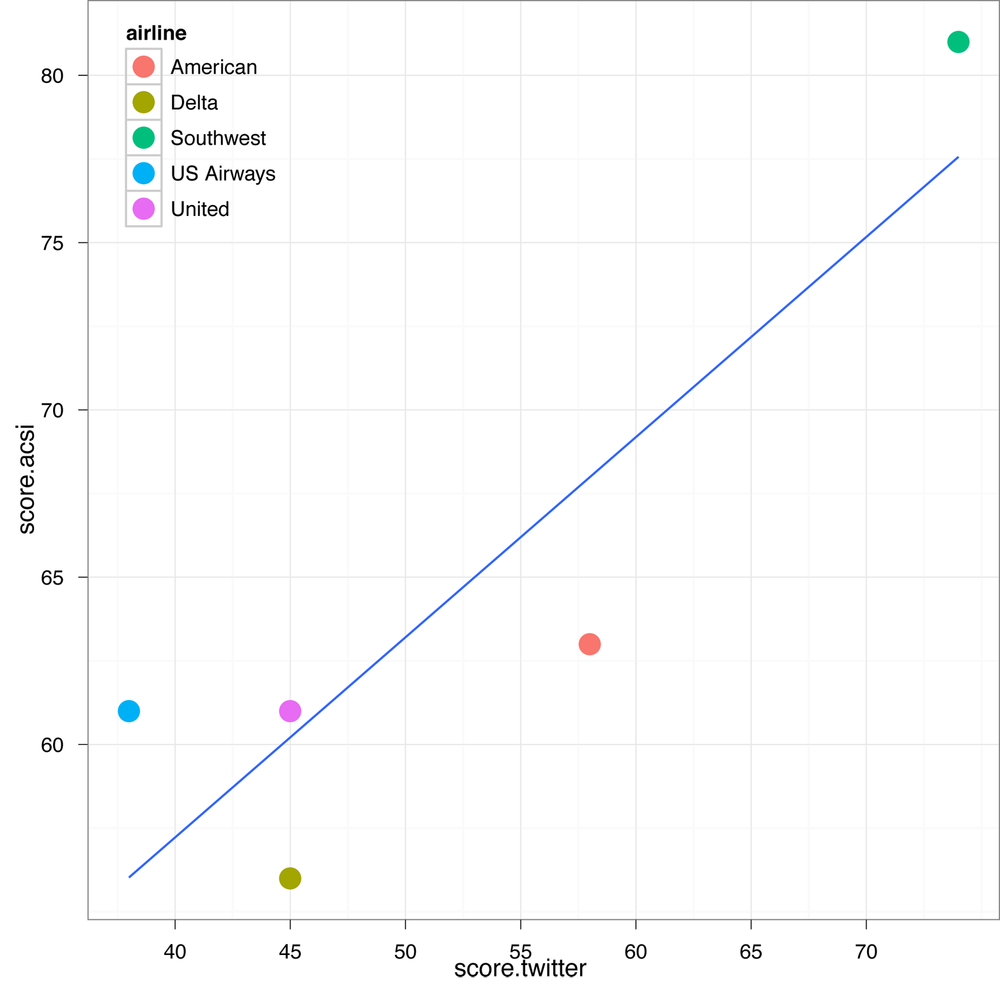

Strikingly, the result is not very different from the results of the American Customer Satisfaction Index (ACSI) survey, as Figure 4-5 shows. The horizontal axis shows the sentiment scores Breen calculated from the Twitter feed; the vertical axis shows the ACSI scores. The correlation is not perfect, but the trend clearly indicates that these focused sentiment scores correlate with the overall survey results.

Figure 4-5. Comparison of Twitter sentiment to the American Customer Satisfaction Index (courtesy of Jeffrey Breen).
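Figure 4-5 shows the relationship visually. One common way to put a single number on how closely two sets of scores track each other is the Pearson correlation coefficient. The sketch below computes it for two equal-length lists (for example, per-airline Twitter scores and the matching ACSI scores). It is an illustration under that assumption, not a reproduction of Breen's analysis, and no data from his study is included. In Python 3.10 and later, `statistics.correlation` computes the same coefficient.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance and variances (the 1/n factors cancel in the ratio).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)
```

A value close to +1 means the Twitter scores rise and fall with the survey scores; "not a perfect correlation" corresponds to a value noticeably below 1.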

There are, of course, several shortcomings to this approach:

- The questionnaire yields far more in-depth insights than Twitter analytics can.

- The ACSI questionnaire is administered to a proper statistical sample, whereas the people complaining to airlines on Twitter are anything but representative.

- Relying on predefined language cues introduces the bias of whoever designs the analytics, and subtle critical undertones will not be easily visible.

Of course, Jeffrey did not attempt to replace the ACSI surveys in one go. But the experiment shows that it is possible to analyze social media data, as long as you are careful about what kind of data you use.

In his example, Jeffrey Breen used a so-called word sentiment list: an opinion lexicon of 6,800 positive and negative words. Positive words include "cool," "best," and "amazing"; negative words include "worst," "awful," and "terrible."

This is the simplest form of sentiment algorithm. It compares each word in the tweet against the word lists and assigns a score of -1 to every negative match and +1 to every positive match. The overall sentiment is simply the sum of these scores. For example, consider the following tweet:

“@Continental this flight was so bad.”

"Bad" is on the negative list, so the algorithm counts it as -1; since no other word matches, the tweet's overall score is -1. For a longer text, the computer likewise adds up all negative and positive words to get an overall score for the article. The programming approach is relatively straightforward.
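The word-list scoring just described fits in a few lines. This Python sketch assumes a tiny illustrative lexicon in place of the 6,800-word list Breen used; the word sets and the function name `lexicon_score` are hypothetical.

```python
import re

# Tiny illustrative lexicon (hypothetical); a real opinion lexicon is far larger.
POSITIVE = {"cool", "best", "amazing", "friendly", "awesome"}
NEGATIVE = {"worst", "awful", "terrible", "bad"}

def lexicon_score(text, positive=POSITIVE, negative=NEGATIVE):
    """Sum +1 for each positive word and -1 for each negative word in the text."""
    words = re.findall(r"[a-z']+", text.lower())
    # True/False subtract as 1/0, so each word contributes +1, -1, or 0.
    return sum((w in positive) - (w in negative) for w in words)
```

Applied to the tweet above, `lexicon_score("@Continental this flight was so bad.")` returns -1. Because the score does not depend on any subject, it can be computed once per message and stored.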

The main advantage of such an algorithm is that scores can be precalculated, because the score does not depend on any given subject. Sysomos, for example, uses such precalculated sentiment scores, which allows the sentiment of thousands of underlying articles to be added up and presented within seconds.

But look at the following fictitious tweet:[89]

“@Continental, you could be more friendly—@southwest I never had such a bad flight.”

The net sentiment would be neutral: 1 × (-1) for "bad" + 1 × (1) for "friendly" = 0. In reality, however, the author of this tweet is most likely not satisfied with Continental. If you are interested in the sentiment toward Continental, the algorithm should count only the sentiment expressed toward Continental and not toward Southwest, yet it is already hard to tell whether "bad" refers to @southwest or @Continental. The context makes this clear to a human reader, but automated algorithms are often unable to take context into account.

Granted, a 140-character tweet often offers little context, and these tweets are made-up examples. However, a good blogger or journalist balances negative and positive words to keep an article interesting, so simply summing word scores will not produce a realistic score.

Katie Delahaye Paine, a highly respected PR expert, warned in 2009 about the inaccuracy of such measurements. Nevertheless, this simple approach serves as an example of how to start thinking about automatically filtering online customer comments into useful data.[90]

A more accurate sentiment algorithm looks for a so-called baseword: the word we focus on, whose sentiment we want to measure. Take this fictitious tweet:

“@Continental, this was a terrible flight—I should take the friendly @southwest next time.”

If the baseword is @Continental, only the adjectives relating to it are considered, in this case only "terrible." The word "friendly" does not appear to be connected to @Continental, because it belongs to the next clause. Most professional social media mining companies use this approach.
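A rough sketch of baseword scoping follows. It assumes a deliberately naive rule: split the text into clauses at sentence punctuation, semicolons, and dashes, and score only the clauses that contain the baseword. Professional tools rely on proper grammatical analysis; the lexicon, the clause splitter, and `baseword_score` are hypothetical.

```python
import re

POSITIVE = {"friendly", "awesome", "great"}   # hypothetical lexicon
NEGATIVE = {"terrible", "bad", "awful"}

# Hypothetical clause splitter: sentence punctuation, semicolons, em dashes.
CLAUSE_BREAK = re.compile(r"[.!?;\u2014]+")

def baseword_score(text, baseword):
    """Score only the clauses that mention the baseword (e.g. a Twitter handle)."""
    total = 0
    for clause in CLAUSE_BREAK.split(text.lower()):
        # Keep a leading @ or # so handles and hashtags stay intact as tokens.
        words = re.findall(r"[@#]?[a-z']+", clause)
        if baseword.lower() in words:
            total += sum((w in POSITIVE) - (w in NEGATIVE) for w in words)
    return total
```

For the tweet above, scoring with the baseword `@Continental` yields -1 ("terrible"), while scoring with `@southwest` yields +1 ("friendly").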

The algorithm can be made more sophisticated by looking not only for the baseword but also for words that link back to it (e.g., "it," "he," and "his"). Such references, typically created by pronouns, are called anaphora. This is needed because many articles do not repeat the baseword over and over again:

“Took a @continental flight today. It was awesome.”

The algorithm would recognize that "it" refers to the baseword @continental, even though it appears in a new sentence.
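The anaphora idea can be approximated, very crudely, by attributing a sentence to the baseword when it contains a pronoun and the previous sentence was about the baseword. This Python sketch is a hypothetical heuristic, not real coreference resolution; the pronoun list, the lexicon, and `score_with_anaphora` are illustrative assumptions.

```python
import re

POSITIVE = {"awesome", "great", "friendly"}   # hypothetical lexicon
NEGATIVE = {"terrible", "bad", "awful"}
# Hypothetical, deliberately naive pronoun list.
PRONOUNS = {"it", "he", "she", "they", "his", "her", "its", "their"}

def score_with_anaphora(text, baseword):
    """Score sentences naming the baseword, plus pronoun sentences that follow one."""
    total = 0
    previous_was_about_baseword = False
    for sentence in re.split(r"[.!?]+", text.lower()):
        words = re.findall(r"[@#]?[a-z']+", sentence)
        if not words:
            continue
        mentions = baseword.lower() in words
        # Naive anaphora: a pronoun points back to the baseword if the
        # previous sentence was about the baseword.
        refers_back = previous_was_about_baseword and any(w in PRONOUNS for w in words)
        if mentions or refers_back:
            total += sum((w in POSITIVE) - (w in NEGATIVE) for w in words)
            previous_was_about_baseword = True
        else:
            previous_was_about_baseword = False
    return total
```

On "Took a @continental flight today. It was awesome." it returns 1, whereas scoring only the sentences that name the baseword would return 0.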

Despite enhancements such as these, the ability of these algorithms to judge sentiment correctly has remained quite low. The biggest issue is that a fixed or hard-coded algorithm lacks context, as we already saw with the phrase "@Continental, you could be more friendly—@southwest I never had such a bad flight."

Context is very important for the overall judgement. Take as an example the phrase, “Go read the book,” which could be a positive or negative sentence, depending on whether it was a comment about a book or a movie.

Many research groups have worked to incorporate context. Their approaches are often very similar: they split the text into grammatical elements such as nouns, objects, adjectives, and verbs. They then classify nouns, verbs, or some combination into themes by matching those words against a dictionary. As we just saw, however, each context demands its own dictionary. Such context-specific dictionaries are called corpora (singular: corpus). Companies like Provalis Research, for example, offer such dictionaries for political and financial news. For general purposes, psychological dictionaries, such as the positive and negative word lists of the Harvard-IV General Inquirer, can be used.
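The point that each context needs its own dictionary can be illustrated with the "Go read the book" example. The sketch below assumes two small hypothetical context-specific lexicons whose entries may be phrases as well as single words. It skips the grammatical-parsing step entirely, and the entries and `contextual_score` are made up for illustration.

```python
import re

# Hypothetical context-specific lexicons: the same phrase carries
# opposite sentiment depending on what is being reviewed.
CORPORA = {
    "book": {"go read the book": 1, "page-turner": 1, "boring": -1},
    "movie": {"go read the book": -1, "must-see": 1, "boring": -1},
}

def contextual_score(text, context):
    """Sum the scores of all lexicon entries (words or phrases) found in the text."""
    lexicon = CORPORA[context]
    lowered = text.lower()
    total = 0
    for entry, score in lexicon.items():
        # Whole-word/phrase match only; every occurrence counts.
        pattern = r"(?<![\w-])" + re.escape(entry) + r"(?![\w-])"
        total += score * len(re.findall(pattern, lowered))
    return total
```

`contextual_score("Go read the book.", "book")` returns 1, while the same text scored against the movie lexicon returns -1.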

Most word lists will not produce sufficient results out of the box; you will need to train them for a specific situation. While doing so, there are three dimensions to watch:

- Train by question

The more specific the question, the better. Try to be more specific than just "movies" or "phones."

- Train by media type

As we saw, news, blogs, and microblogs sometimes use drastically different language and differ in how they mix negative and positive words.

- Train by language

Often, measurement companies take a "shortcut" and translate text into English before starting the analysis. This is highly cost-effective, but it severely reduces quality: an algorithm with a high error rate (the sentiment algorithm) is fed content that may be largely unreadable after automated translation.

Thus a large number of corpora or word lists would need to be developed using a machine-learning approach. So far, this approach is purely theoretical, because neither the data nor the time is available. Yet using a standard word list raises accuracy concerns: Michael Liebmann, a researcher who analyzed company press releases (see Chapter 7), said that predefined word lists might miss between 83% and 94% of positive/negative perception.

In addition to context, algorithms have a hard time correctly measuring ironic or satirical content. So are there any cases in which automatic analysis of customer sentiment makes sense? Yes, as we already saw in Jeffrey Breen's example. However, to analyze customer care comments automatically, the most important step is to narrow the scope so far that the algorithm's shortcomings no longer play a role.

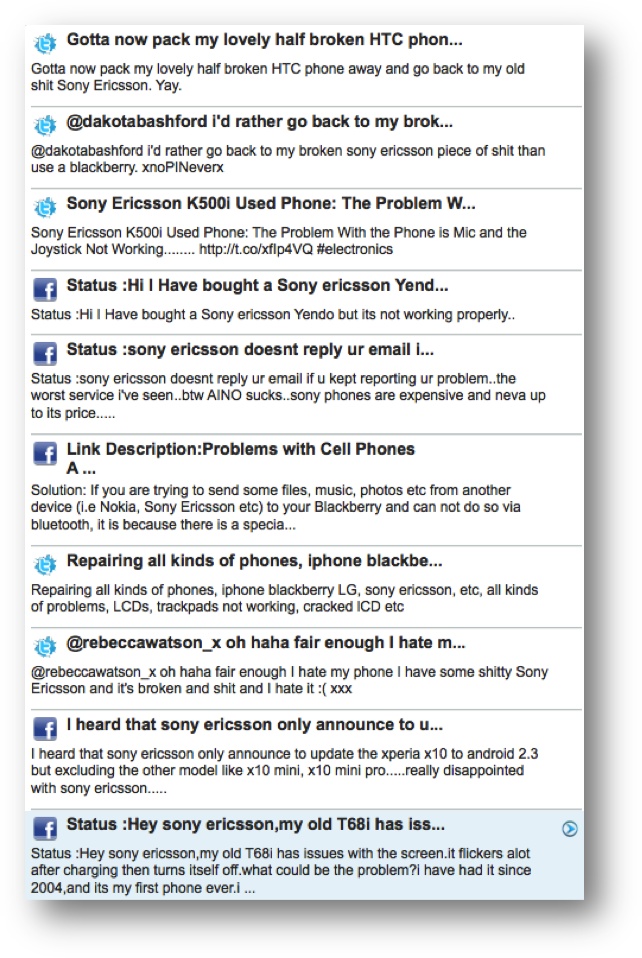

Reducing scope can mean focusing on only a small set of emotions, for example, only swear words and explicit language. This makes it easy to spot the biggest annoyances. Figure 4-6 shows how this is done in the case of Sony Ericsson: all messages that contain swear words are selected. You could then relatively easily sort those comments by topic, or compare the same swear-word analytics with those of competitors.
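A minimal sketch of this kind of scope reduction: keep only messages that contain a word from a swear list, then tally the flagged messages by topic. The word lists and topic keywords are hypothetical stand-ins (a real profanity list would be far longer), and the sketch does not claim to reflect how the Sony Ericsson analysis was built.

```python
import re
from collections import Counter

# Hypothetical, mild stand-ins for a real profanity list.
SWEAR_WORDS = {"damn", "crap"}
# Hypothetical topic keywords used to sort the flagged messages.
TOPICS = {"battery": {"battery", "charge"}, "screen": {"screen", "display"}}

def flag_and_sort(messages):
    """Return the messages containing swear words and a count of them per topic."""
    flagged = []
    by_topic = Counter()
    for msg in messages:
        words = set(re.findall(r"[a-z']+", msg.lower()))
        if words & SWEAR_WORDS:
            flagged.append(msg)
            for topic, keywords in TOPICS.items():
                if words & keywords:
                    by_topic[topic] += 1
    return flagged, by_topic
```

Running the same function over each competitor's messages gives comparable per-topic counts.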

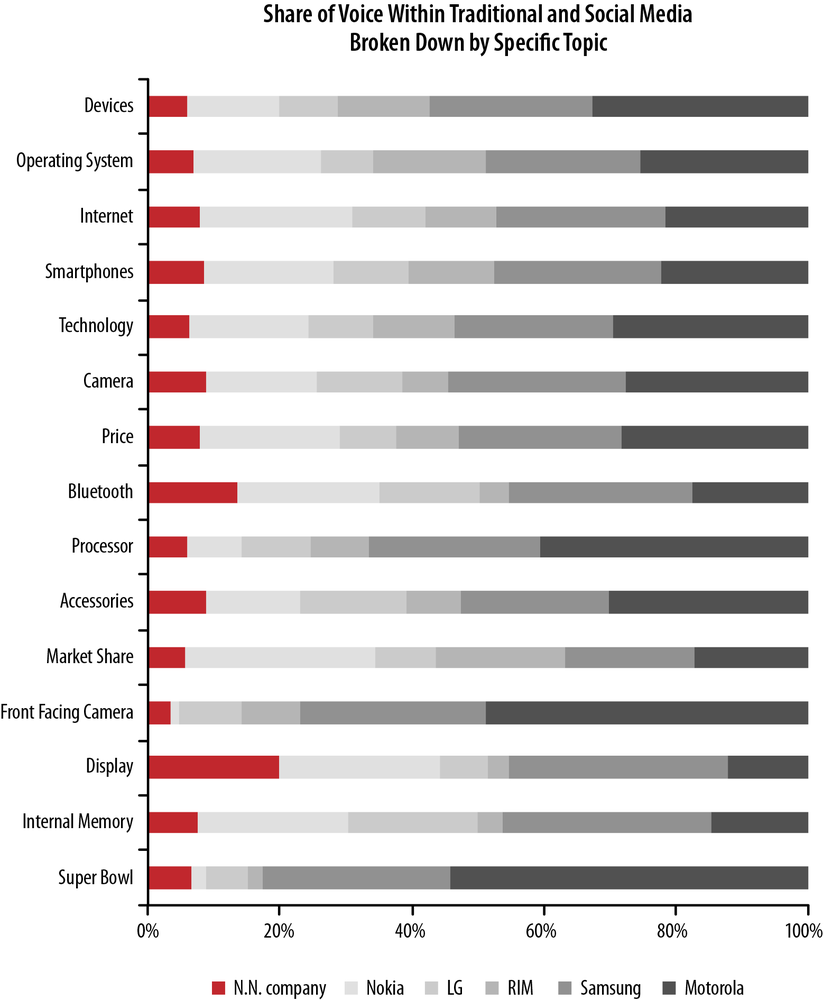

Another possible focus could be to reduce brand discussions only to very specific topics, as shown in Figure 4-7. On the horizontal axis, the share of voice of each brand is displayed, broken down by specific topics. You can see, for example, that Motorola managed better than anyone else to get into the public discussion in relation to its new front-facing camera.
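Share of voice by topic can be sketched as counting brand mentions within topic-specific messages and converting the counts to percentages per topic. The brand names, topic keywords, and `share_of_voice` are hypothetical; the sketch assumes single-word, lowercase brand names and simple keyword matching for topics.

```python
import re
from collections import defaultdict

def share_of_voice(messages, brands, topics):
    """For each topic, the percentage of brand mentions that go to each brand."""
    counts = {topic: defaultdict(int) for topic in topics}
    for msg in messages:
        words = set(re.findall(r"[a-z']+", msg.lower()))
        for topic, keywords in topics.items():
            if words & keywords:  # message is about this topic
                for brand in brands:
                    if brand in words:
                        counts[topic][brand] += 1
    shares = {}
    for topic, brand_counts in counts.items():
        total = sum(brand_counts.values())
        shares[topic] = {
            b: (100.0 * brand_counts[b] / total if total else 0.0) for b in brands
        }
    return shares
```

Each topic's shares add up to 100% whenever at least one brand is mentioned in that topic, which makes brands directly comparable topic by topic.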

All of the techniques discussed so far share the shortcoming that they are hard-coded via word lists. The quality of those lists determines the quality of the algorithm. Moreover, such lists do not automatically evolve with changes in wording, media types, and contexts, and different languages will certainly need different lists.

These shortcomings might best be overcome with supervised machine learning. Here, the algorithm learns from examples what is correct and what is not, without our having to assume that a specific word carries negative sentiment and another word carries positive sentiment.

This might sound like a solution; however, such an algorithm needs to be trained. That is the "supervised" bit. Online customer care sites are a good example of how this training can work. Once you submit a ticket in writing, an automated algorithm searches the knowledge base to try to resolve the issue before the ticket is transferred to the queue of a live customer care agent.

Based on this simple search, the database suggests possible solutions and asks you whether or not any of these answers would resolve your issue. If not, the system may search for a new answer and/or give you the option of submitting the ticket for live customer care.

The question of whether these solutions resolved the issue does double duty. It decides whether to offer you another solution or access to live support, and it is also a quality-control check on whether the algorithm has understood your question correctly. Each time you click yes or no, the algorithm receives feedback that it uses to train and improve itself.
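The feedback loop can be sketched as a suggester that ranks knowledge-base articles by keyword overlap plus learned weights, and nudges those weights up or down on every yes/no click. This is a hypothetical, perceptron-style illustration of learning from user feedback, not the algorithm any particular support site uses; the class, method, and article names are made up.

```python
import re
from collections import defaultdict

def _words(text):
    return set(re.findall(r"[a-z']+", text.lower()))

class FeedbackSuggester:
    """Suggest knowledge-base articles by keyword overlap, adjusted by yes/no feedback."""

    def __init__(self, articles):
        # articles: mapping article_id -> article text (hypothetical knowledge base)
        self.articles = {aid: _words(text) for aid, text in articles.items()}
        # Learned weight per (ticket word, article) pair, updated by customer clicks.
        self.weights = defaultdict(float)

    def _score(self, words, aid):
        overlap = len(words & self.articles[aid])
        learned = sum(self.weights[(w, aid)] for w in words)
        return overlap + learned

    def suggest(self, ticket):
        words = _words(ticket)
        # Ties go to the first article in insertion order.
        return max(self.articles, key=lambda aid: self._score(words, aid))

    def feedback(self, ticket, aid, resolved, step=1.0):
        """Customer clicked yes (resolved=True) or no: nudge the word/article weights."""
        delta = step if resolved else -step
        for w in _words(ticket):
            self.weights[(w, aid)] += delta
```

In a short session, a ticket such as "locked out of my account" that first gets the wrong article learns, after one "no" and one "yes," to be matched with the right one, and similar tickets benefit as well.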

The following are two examples of how to apply machine learning within customer care on unstructured comments:

Ashish Gambhir created a specific "use case." His company, newBrandAnalytics, focuses on only one sector: the hospitality industry. He aggregates customer feedback from social media, as well as from traditional sources like guest surveys, to produce proprietary customer satisfaction metrics for firms in this sector. In the future, the firm plans to expand this approach to other vertical markets.

Out of all possible discussions, he thus restricts his approach to what people say about restaurants. This is a very feasible task to train a machine on, because not much training data is needed. However, Ashish needs to train the machine by hand.

This approach is manual because a human needs to decide what is positive or negative commentary. There is no self-reinforcing algorithm, because he has no clients rating the results for him, unlike users on sites such as Microsoft technical support.
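Hand-labeled training of this kind is often done with a simple probabilistic classifier. The sketch below is a multinomial naive Bayes classifier with add-one smoothing, trained on a few reviews labeled by a person. It is one standard choice of technique, not a description of newBrandAnalytics' proprietary method, and the example reviews are invented for illustration.

```python
import math
import re
from collections import Counter

def tokenize(text):
    return re.findall(r"[a-z']+", text.lower())

class NaiveBayes:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.labels = sorted(set(labels))
        self.doc_counts = Counter(labels)
        self.word_counts = {c: Counter() for c in self.labels}
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = set().union(*self.word_counts.values())
        return self

    def predict(self, text):
        n_docs = sum(self.doc_counts.values())
        best, best_lp = None, -math.inf
        for c in self.labels:
            total = sum(self.word_counts[c].values())
            lp = math.log(self.doc_counts[c] / n_docs)  # class prior
            for w in tokenize(text):
                if w in self.vocab:  # ignore words never seen in training
                    lp += math.log((self.word_counts[c][w] + 1) / (total + len(self.vocab)))
            if lp > best_lp:
                best, best_lp = c, lp
        return best

if __name__ == "__main__":
    # Invented, hand-labeled training reviews.
    reviews = ["the steak was delicious", "friendly staff and great wine",
               "cold soup and rude waiter", "we waited an hour, food was cold"]
    labels = ["pos", "pos", "neg", "neg"]
    model = NaiveBayes().fit(reviews, labels)
    print(model.predict("delicious food and friendly staff"))  # pos
    print(model.predict("cold food, rude waiter"))             # neg
```

The human effort goes entirely into the labels; the model itself is only a few counts, which is why a narrow domain with little training data is a good fit.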

Stuart Shulman of Texifter[91] trains his algorithm using input from customer care teams. A human decides where a given complaint should be sent: if it is a complaint about a printer, it goes to the printing department; if it is an inquiry about a laptop, it goes to the consumer division, and so on. Each time a customer care agent dispatches an email or a tweet to the responsible customer care person, the underlying algorithm learns the logic behind the distribution. Soon the tool can take over and dispatch all tweets and emails automatically. If a complaint is still misclassified, the tool learns from that mistake as well.
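The dispatch-learning loop can be sketched as an online router: every time an agent sends a message to a department, each word in the message casts a vote for that department, and new messages go to the department with the most votes. This is a hypothetical, deliberately simple illustration (no stop-word filtering, no weighting), not the actual algorithm described above; the class and department names are made up.

```python
import re
from collections import Counter, defaultdict

class OnlineRouter:
    """Learns which department a message belongs to from agents' dispatch decisions."""

    def __init__(self):
        # word -> Counter of departments that messages containing the word were sent to
        self.word_dept = defaultdict(Counter)

    @staticmethod
    def _words(text):
        return set(re.findall(r"[a-z']+", text.lower()))

    def predict(self, text):
        """Return the department with the most votes, or None if nothing is known yet."""
        votes = Counter()
        for w in self._words(text):
            votes.update(self.word_dept.get(w, Counter()))
        return votes.most_common(1)[0][0] if votes else None

    def learn(self, text, department):
        """Call each time an agent dispatches (or corrects) a message."""
        for w in self._words(text):
            self.word_dept[w][department] += 1
```

Correcting a misrouted message is just another call to `learn` with the right department, so the router improves from its mistakes in the same way it learns from normal dispatches.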

Note

In any sentiment algorithm, the most important part is that the algorithm can be trained relatively easily.

Social media has changed the balance of power between customers and the companies that serve them. Thanks to social media platforms and online communities, even the most humble customer can now have a voice that may be heard. Measuring this voice is essential. On the one hand, companies need to watch out for trolls, people who simply abuse their new power. On the other hand, distinguishing between important and less important service requests will become a key success factor for a cost-optimized customer care organization, since not every voice in the online world carries equal weight.

As we saw in the examples from KLM, social-enabled customer care offers companies a whole new ability to position themselves. Every service request can be used as an opportunity to position the brand or to demonstrate the value of the service or product.

Social media offers more than operational metrics to guide and measure customer care efforts. The data created by these interactions contains value that we need to unlock. We discussed two main examples of this fourth "V":

Comments from users can be data mined for sentiment. Due to the complex nature of human sentiment, those metrics work best if they are very focused, as we saw in the example from Sony Ericsson or newBrandAnalytics.

Customer care requests can be analyzed to understand how each type of service request should be handled. This approach works only if there are many requests, because the underlying technology uses machine learning.

Social media data has already improved both management visibility and efficiency in the way we serve our customers, and with time and technology, these benefits are likely to keep increasing. Meanwhile, these data have helped usher in an entirely new era of putting the interests of the customer first.

Social-enabled customer care has become reality for many companies. Let’s look at the measurements you are using:

Which social media metrics do you use to empower your employees? Can you see improved morale as their customer care dialogue is taken public?

What are good metrics to differentiate your customers? Is each customer equally important? Is each comment equally visible?

We often recommend to our clients that they should only have the start and end of the customer service discussion public. How is the discussion flow in your company? Would you like to change the flow? Try to determine when interactions should be public.

Have you experienced troll comments? What are their characteristics? Could one automate their detection? If so, how?

The data has value that can help to improve processes. Let's discuss how:

How many user comments or customer care comments do you have? Are they statistically relevant?

Have you sorted those comments manually into topics? What could an automated approach look like?

Assuming you would know what a customer needs from the written customer-care ticket, how could you treat him differently? What are potential engagement opportunities?

The area of social customer care is probably the most mature. Please share your experiences and thoughts via Twitter, @askmeasurelearn, or write on our LinkedIn or Facebook page.

[79] Jeff Jarvis, “Dell lies. Dell sucks.” Buzzmachine.com, June 2005, http://buzzmachine.com/2005/06/21/dell-lies-dell-sucks/.

[80] Jeff Jarvis, "Dell Learns to Listen," BusinessWeek, Oct. 2007, http://buswk.co/II8xDy.

[81] Ravi Sawhney, "Broken Guitar Has United Playing the Blues to the Tune of $180 Million," Fast Company, July 2009, http://bit.ly/1bz1CCM.

[82] Dylan Stableford, "Musician whose vintage guitar was smashed by Delta gets check from airline, new one from Gibson," Jan. 9, 2013, http://yhoo.it/IS1sj6.

[83] Rebecca Reisner, "Comcast's Twitter Man," Bloomberg Businessweek, Jan. 2009, http://buswk.co/1b25HPV.

[84] Casey Hibbard, "How Microsoft Xbox Uses Twitter to Reduce Support Costs," Social Media Examiner, July 2010, http://bit.ly/1crJBHN.

[86] E. Barnett, "Bodyform's response to Facebook rant a viral hit," The Telegraph (UK), Oct. 17, 2012, http://bit.ly/1bCJFqr.

[87] IBM Global Business Services, From social media to Social CRM (report), June 2011, http://ibm.co/1fbKAyS.

[88] Jeffrey Breen, "R by example: mining Twitter for consumer attitudes towards airlines," Boston Predictive Analytics Meetup, July 2011, http://slidesha.re/ITMKYW.

[89] The following tweets are fictitious and were created only to explain the system.

[90] Linda Jacobson, Public Relations Tactics, Vol. 16, Issue 11, Nov. 2009, p. 18.

[91] Acquired by Vision Critical.
