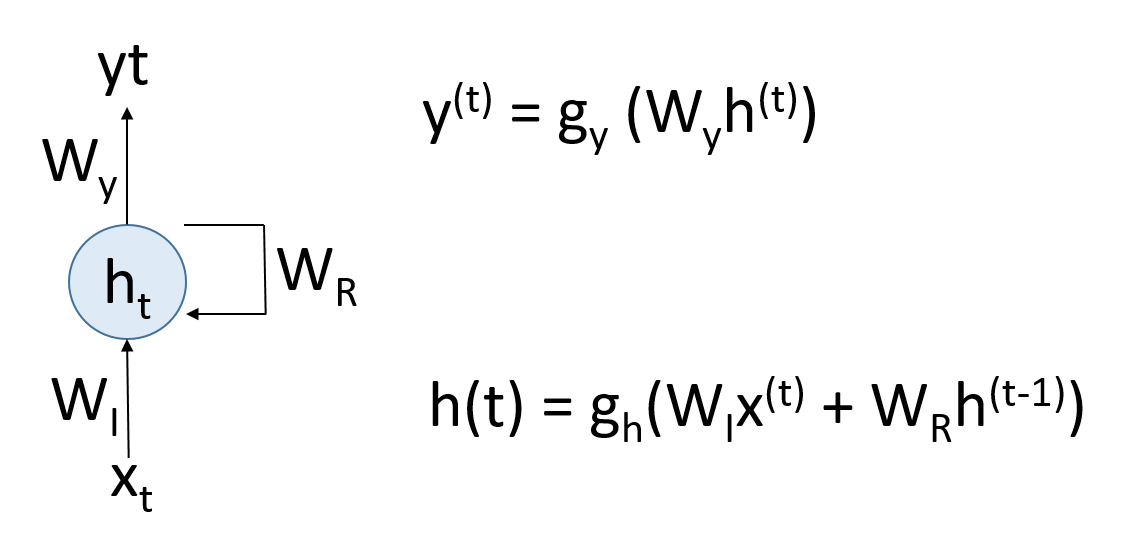

In its simplest form, an RNN treats the output of one iteration as an input to the next forward propagation iteration. This can be illustrated as follows:

A linear unit that receives an input, xt, applies a weight, WI, and generates a hypothesis through an activation function turns into an RNN once a recurrent connection is introduced: the output of the hypothesis function at the previous time step is fed back into the unit through a recurrent weight matrix, WR.
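The recurrent connection described above can be sketched in a few lines of plain Python. This is a minimal illustration for a single scalar unit, not a definitive implementation: the function names `rnn_step` and `run_rnn`, the parameter names `w_i` and `w_r` (standing in for the input and recurrent weights), the `tanh` activation, and the zero initial state are all assumptions made for the example.

```python
import math


def rnn_step(x_t, h_prev, w_i, w_r):
    """One time step of a single recurrent unit.

    Assumed form: h_t = tanh(w_i * x_t + w_r * h_prev), where h_prev is
    the unit's own output from the previous time step.
    """
    return math.tanh(w_i * x_t + w_r * h_prev)


def run_rnn(inputs, w_i, w_r, h0=0.0):
    """Unroll the unit over a sequence, feeding each output back in."""
    h = h0  # assumed initial state before any input is seen
    outputs = []
    for x_t in inputs:
        h = rnn_step(x_t, h, w_i, w_r)
        outputs.append(h)
    return outputs


if __name__ == "__main__":
    sequence = [1.0, 0.0, 0.0]
    # With w_r = 0 the recurrent connection is cut: each output depends
    # only on the current input, as in a plain feed-forward unit.
    print(run_rnn(sequence, w_i=0.5, w_r=0.0))
    # With w_r != 0 the first input keeps influencing later outputs.
    print(run_rnn(sequence, w_i=0.5, w_r=1.0))
```

Setting `w_r` to zero recovers the plain unit without recurrence, which makes the effect of the feedback term easy to see by comparing the two printed sequences.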

In the preceding example, t denotes the time step at which an activation is computed. Now the activity of the network ...